Scaling normalization and offsets

Koen Van den Berge

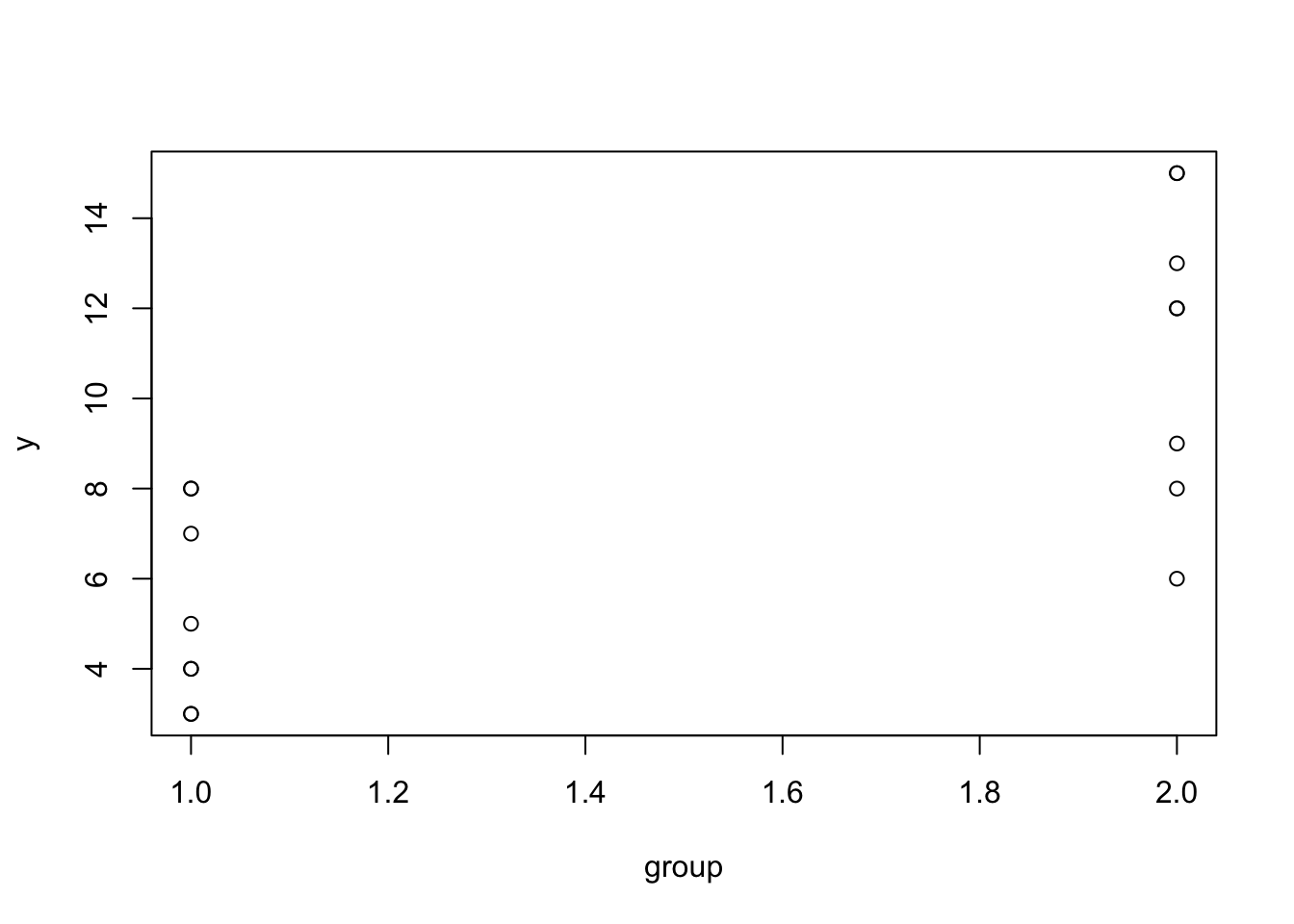

1 No offset

The gene is extremely significantly DE between groups.

set.seed(65)

nPerGroup <- 8

y <- c(rpois(n=nPerGroup, lambda = 7),

rpois(n=nPerGroup, lambda = 11))

group <- rep(1:2, each = nPerGroup)

plot(group, y)

# Poisson GLM, no library size: signifcantly DE

m <- glm(y ~ factor(group),

family = "poisson")

summary(m)##

## Call:

## glm(formula = y ~ factor(group), family = "poisson")

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.7195 -0.7771 0.0556 0.8105 1.1133

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 1.6582 0.1543 10.747 < 2e-16 ***

## factor(group)2 0.7621 0.1869 4.078 4.53e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 30.916 on 15 degrees of freedom

## Residual deviance: 13.055 on 14 degrees of freedom

## AIC: 78.587

##

## Number of Fisher Scoring iterations: 42 Library size offset

Since library sizes are different between groups, accounting for library size results in the gene no longer being DE at the 5% significance level. Not correcting for sequencing depth would thus result in spurious results.

# Suppose library sizes are different between groups

libSize <- c(rpois(n=nPerGroup, lambda = 1e5),

rpois(n=nPerGroup, lambda = 1.5e5))

# Poisson GLM with library size offset: no longer significantly DE on 5% level.

m <- glm(y ~ factor(group) + offset(log(libSize)),

family = "poisson")

summary(m)##

## Call:

## glm(formula = y ~ factor(group) + offset(log(libSize)), family = "poisson")

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -1.73231 -0.78231 0.05288 0.81088 1.11070

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -9.8536 0.1543 -63.859 <2e-16 ***

## factor(group)2 0.3545 0.1869 1.897 0.0578 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 16.851 on 15 degrees of freedom

## Residual deviance: 13.111 on 14 degrees of freedom

## AIC: 78.643

##

## Number of Fisher Scoring iterations: 43 Scaling versus offsets

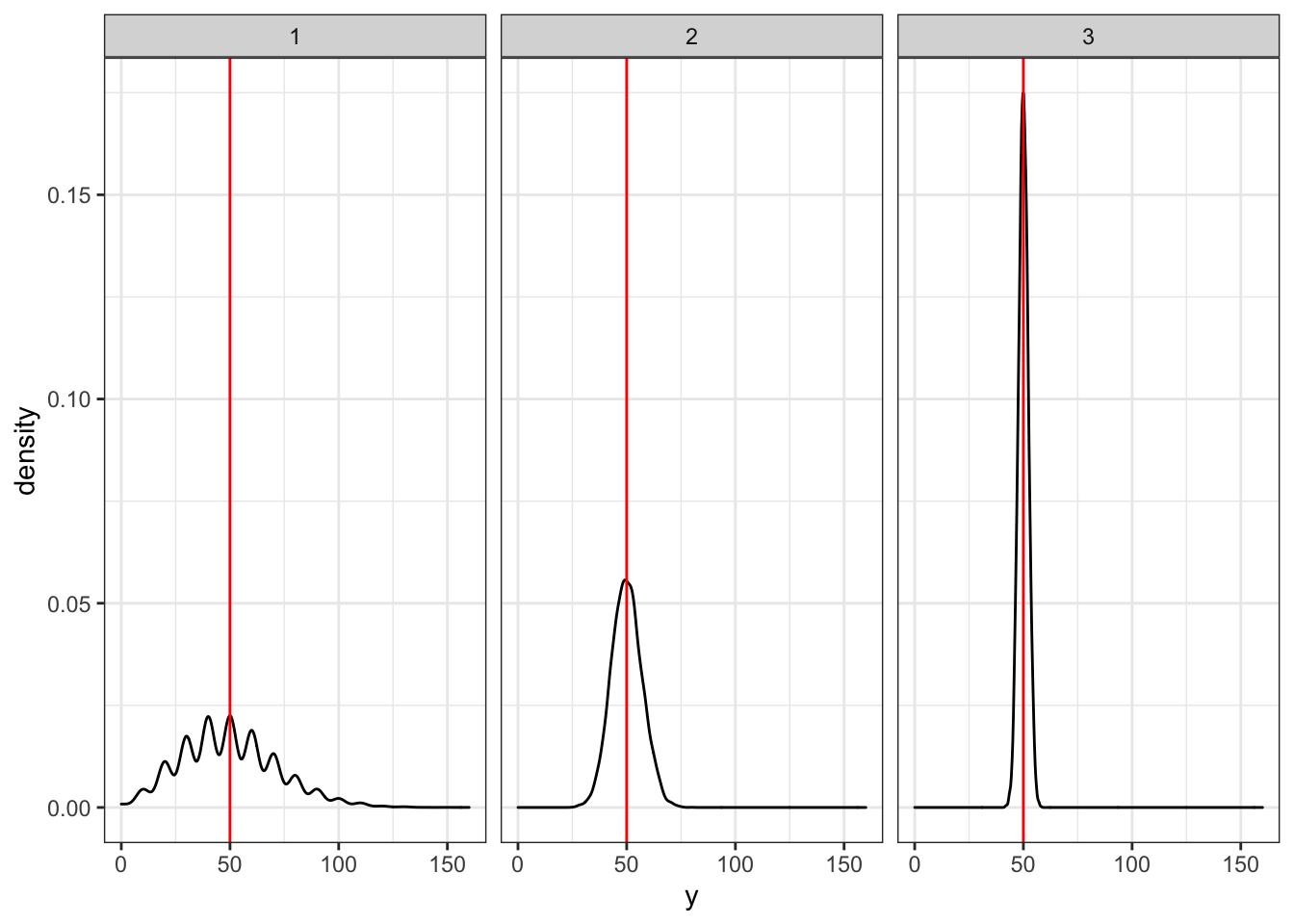

In RNA-seq, we are working with count data. Count data has an inherent mean-variance structure, where the variance is positively associated with the mean. For example, assume a random variable \(Y_{i} \sim Poi(\mu)\), where \(i \in \{1, \ldots, n\}\). We can visualize the mean-variance relationship by simulating different random variables \(\mathbf{Y}_1, \mathbf{Y}_2, \mathbf{Y}_3\). Say,

\[ Y_{1i} \sim Poi(\mu_1 = 5) \] \[ Y_{2i} \sim Poi(\mu_2 = 50) \] \[ Y_{3i} \sim Poi(\mu_3 = 500) \]

Below, we enforce the same mean for all three random variables, i.e. we create the set of random variables \(\{ Y_{1i} \times 10, Y_{2i}, Y_{3i} / 10 \}\), and plot the density of each variable. It is clear that the distributions are drastically different. This is because, although they all have the same mean, their variance is different, and indeed this is because of the mean-variance relationship that was impliclty used when simulating the data.

library(ggplot2)

set.seed(50)

n <- 1e4

y1 <- rpois(n = n, lambda = 5)

y2 <- rpois(n = n, lambda = 50)

y3 <- rpois(n = n, lambda = 500)

df <- data.frame(y = c(y1 * 10, y2, y3 / 10),

gr = factor(rep(1:3, each = n)))

ggplot(df, aes(x=y)) +

geom_density() +

facet_wrap(. ~ gr) +

geom_vline(xintercept = 50, col="red") +

theme_bw()

But didn’t you say a higher mean was associated with a higher variance?

The critical reader would rightly so be somewhat confused at this point. Indeed, above we wrote that “the variance is positively associated with the mean”, but the density plots are showing that \(\mathbf{Y}_3\), which was simulated to have the highest mean, has the smallest variance. Based on the simulation, we have that \(Var(\mathbf{Y}_3) = 500\). However, \(Var(\mathbf{Y}_3 / 10) = \frac{1}{100} Var(\mathbf{Y}_3) = 5\). Thus, \(\mathbf{Y}_3\) indeed will have a small variance upon rescaling.

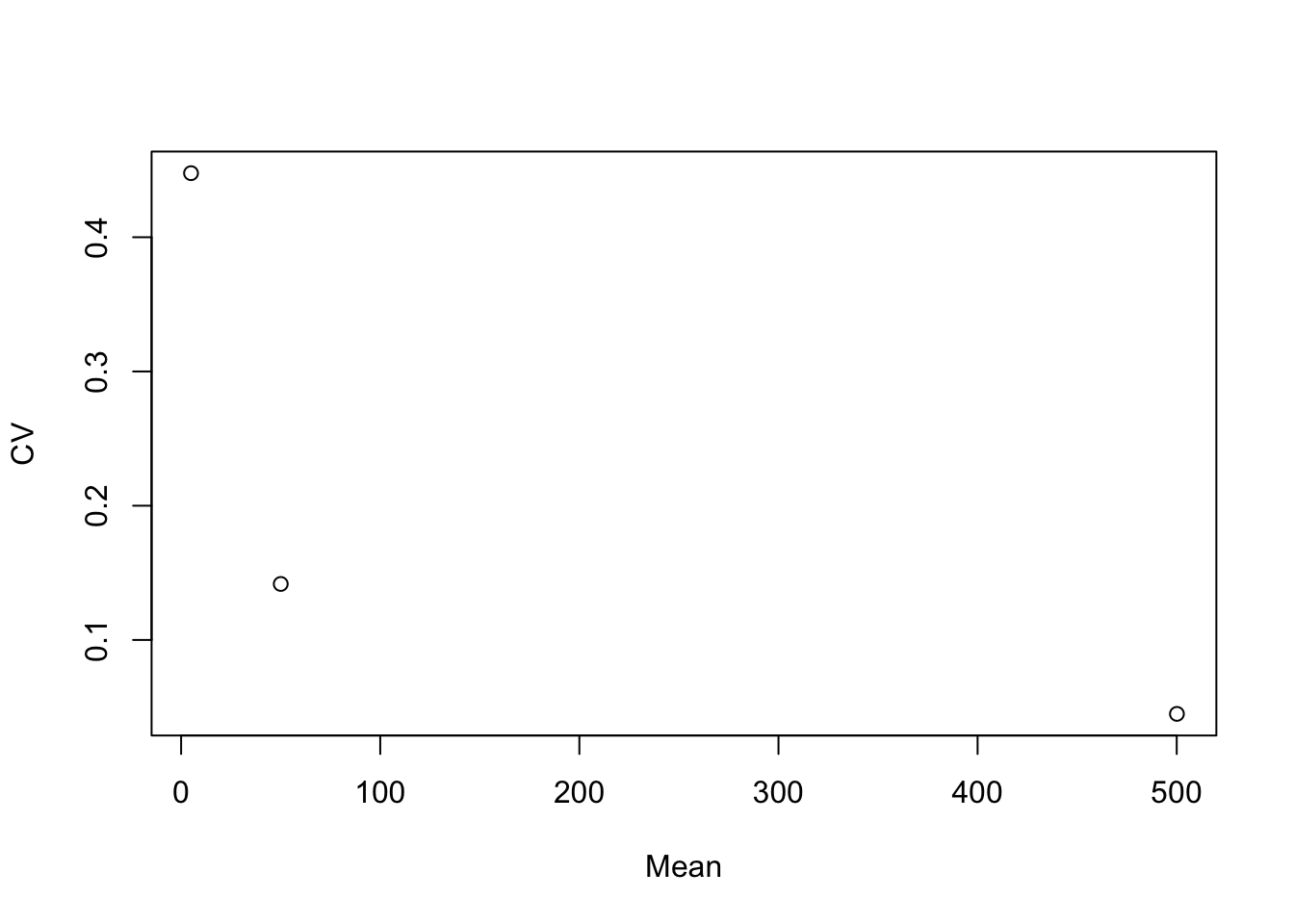

One could also say that the relative certainty of the mean of a Poisson random variable increases with its mean. With relative certainty, we mean the coefficient of variation (CV). Indeed, the CV of a Poisson distribution decreases with the mean

\[ CV(\mathbf{Y}_3) \le CV(\mathbf{Y}_2) \le CV(\mathbf{Y}_1). \]

We show this below using our simulated data.

## [1] 0.4477185## [1] 0.1417173## [1] 0.0449334

See also appendix B1 of Ahlmann-Eltze & Huber (2021) for more information and a more formal justification.