Linear Regression for High Dimensional Data

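Consider the linear regression model (for double centered data)

$$ Y_i = \beta_1 X_{i1} + \beta_2 X_{i2} + \ldots + \beta_p X_{ip} + \epsilon_i, $$

with $\text{E}[\epsilon_i \mid \mathbf{X}] = 0$ and $\text{var}[\epsilon_i \mid \mathbf{X}] = \sigma^2$.

In matrix notation the model becomes

$$ \mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \boldsymbol{\epsilon}. $$

The least squares estimator of $\boldsymbol{\beta}$ is given by

$$ \hat{\boldsymbol{\beta}} = (\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{Y}, $$

and the variance of $\hat{\boldsymbol{\beta}}$ equals

$$ \text{var}[\hat{\boldsymbol{\beta}}] = (\mathbf{X}^T\mathbf{X})^{-1}\sigma^2. $$

Hence, the $p \times p$ matrix $(\mathbf{X}^T\mathbf{X})^{-1}$ is crucial.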

Note that

- with double centered data we mean that both the responses are centered (the mean of $\mathbf{Y}$ is zero) and all predictors are centered (the columns of $\mathbf{X}$ have zero mean). With double centered data the intercept in a linear regression model is always exactly equal to zero, and hence the intercept need not be included in the model.

- we do not assume that the residuals are normally distributed. For prediction purposes this is often not required (normality is particularly important for statistical inference in small samples).
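Below is a minimal sketch on simulated data. All names and sizes are illustrative assumptions and are not part of the toxicogenomics example. It shows that, after double centering, the least squares estimator $(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T\mathbf{Y}$ computed from the normal equations equals the slopes returned by `lm()`. The intercept estimated by `lm()` is numerically zero.

```r
set.seed(1)
n <- 50
p <- 3
X <- matrix(rnorm(n * p), n, p)
betaTrue <- c(1, -2, 0.5)                 # hypothetical true coefficients
Y <- as.vector(2 + X %*% betaTrue + rnorm(n))

# double centering: center the response and every predictor column
X <- sweep(X, 2, colMeans(X))
Y <- Y - mean(Y)

# least squares estimator via the normal equations (X^T X) beta = X^T Y
betaHat <- solve(crossprod(X), crossprod(X, Y))

# lm() with an intercept: same slopes, intercept (numerically) zero
fit <- lm(Y ~ X)
coef(fit)
```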

Linear Regression for multivariate data vs High Dimensional Data

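- $\mathbf{X}^T\mathbf{X}$ and $(\mathbf{X}^T\mathbf{X})^{-1}$ are $p \times p$ matrices

- $\mathbf{X}^T\mathbf{X}$ can only be inverted if it has rank $p$

- The rank of a matrix of the form $\mathbf{X}^T\mathbf{X}$, with $\mathbf{X}$ an $n \times p$ matrix, can never be larger than $\min(n, p)$.

- In most regression problems $n > p$ and the rank of $\mathbf{X}^T\mathbf{X}$ equals $p$.

- In high dimensional regression problems $p \gg n$ and the rank of $\mathbf{X}^T\mathbf{X}$ equals $n < p$.

- In the toxicogenomics example $n = 30$ and $p \gg n$.

- $(\mathbf{X}^T\mathbf{X})^{-1}$ does not exist, and neither does $\hat{\boldsymbol{\beta}}$.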
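The rank problem can be checked numerically. The sketch below uses simulated data with the hypothetical sizes $n = 10$ and $p = 50$. The $p \times p$ matrix $\mathbf{X}^T\mathbf{X}$ then has at most $n$ non-zero singular values, so it cannot be inverted. With centered columns the rank is even $n - 1$, because centering removes one dimension.

```r
set.seed(2)
n <- 10
p <- 50                                   # hypothetical sizes with p > n
X <- matrix(rnorm(n * p), n, p)
X <- sweep(X, 2, colMeans(X))             # center the columns
XtX <- crossprod(X)                       # the p x p matrix X^T X

# numerical rank: number of singular values that are not (numerically) zero
d <- svd(XtX)$d
rankXtX <- sum(d > max(d) * 1e-10)
rankXtX
```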

Can SVD help?

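Since the columns of $\mathbf{X}$ are centered, $\mathbf{X}^T\mathbf{X} \propto \text{var}[\mathbf{X}]$.

Here $n = 30 < p$, so the PCA will give 30 components, each being a linear combination of the $p$ variables. These 30 PCs contain all information present in the original data.

If $p > n$, the SVD of $\mathbf{X}$ is given by

$$ \mathbf{X} = \sum_{i=1}^n \delta_i \mathbf{u}_i \mathbf{v}_i^T = \mathbf{U}\boldsymbol{\Delta}\mathbf{V}^T = \mathbf{Z}\mathbf{V}^T, $$

with $\mathbf{Z} = \mathbf{U}\boldsymbol{\Delta}$ the $n \times n$ matrix with the scores on the $n$ PCs.

This is still problematic: if we use all $n$ PCs as regressors, there are as many regressors as observations.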
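The sketch below checks these SVD identities in R on simulated data with hypothetical sizes. It shows three things:

- `svd()` returns the thin SVD;
- the scores $\mathbf{Z} = \mathbf{U}\boldsymbol{\Delta}$ reproduce $\mathbf{X}$ together with $\mathbf{V}$;
- $\mathbf{Z}^T\mathbf{Z} = \boldsymbol{\Delta}^2$ is diagonal, so the PC scores are mutually uncorrelated.

```r
set.seed(3)
n <- 10
p <- 50
X <- matrix(rnorm(n * p), n, p)
X <- sweep(X, 2, colMeans(X))             # centered columns

svdX <- svd(X)                            # thin SVD: u is n x n, d has length n, v is p x n
Z <- svdX$u %*% diag(svdX$d)              # n x n matrix of PC scores

Xrec <- Z %*% t(svdX$v)                   # X = Z V^T
ZtZ <- crossprod(Z)                       # should equal Delta^2 (diagonal)
offDiag <- max(abs(ZtZ - diag(diag(ZtZ))))
offDiag
```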

Principal Component Regression

A principal component regression (PCR) consists of

- transforming the $p$-dimensional $X$-variable to the $n$-dimensional $Z$-variable (PC scores). The PCs are mutually uncorrelated.

- using the PC-variables as regressors in a linear regression model

- performing feature selection to select the most important regressors (PCs).

Feature selection is key, because we don't want to have as many regressors as there are observations in the data. This would result in zero residual degrees of freedom (see later).

To keep the exposition general, so that we allow for a feature selection to have taken place, I use the notation $\mathbf{U}_S$ to denote a matrix with left-singular column vectors $\mathbf{u}_i$, with $i \in \mathcal{S}$ ($\mathcal{S}$ an index set referring to the PCs to be included in the regression model).

For example, suppose that a feature selection method has resulted in the selection of PCs 1, 3 and 12 for inclusion in the prediction model. Then $\mathcal{S} = \{1, 3, 12\}$ and

$$ \mathbf{U}_S = \begin{pmatrix} \mathbf{u}_1 & \mathbf{u}_3 & \mathbf{u}_{12} \end{pmatrix}. $$
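Below is a sketch of PCR with the selected index set $\mathcal{S} = \{1, 3, 12\}$ on simulated, double centered data. The data and outcome are hypothetical. Because the score columns are orthogonal, each coefficient of the regression on the selected PCs can be computed on its own as $\mathbf{z}_j^T\mathbf{Y}/\delta_j^2$. This is one reason why the PC-predictors are convenient regressors.

```r
set.seed(4)
n <- 30
p <- 100
X <- matrix(rnorm(n * p), n, p)
X <- sweep(X, 2, colMeans(X))
Y <- X[, 1] - X[, 2] + rnorm(n)           # hypothetical outcome
Y <- Y - mean(Y)

svdX <- svd(X)
S <- c(1, 3, 12)                          # index set of the selected PCs
US <- svdX$u[, S]                         # U_S = (u_1, u_3, u_12)
ZS <- US %*% diag(svdX$d[S])              # scores on the selected PCs

fitS <- lm(Y ~ -1 + ZS)                   # no intercept needed for double centered data

# orthogonal regressors: each coefficient equals z_j^T Y / delta_j^2
betaSep <- as.vector(crossprod(ZS, Y)) / svdX$d[S]^2
betaSep
```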

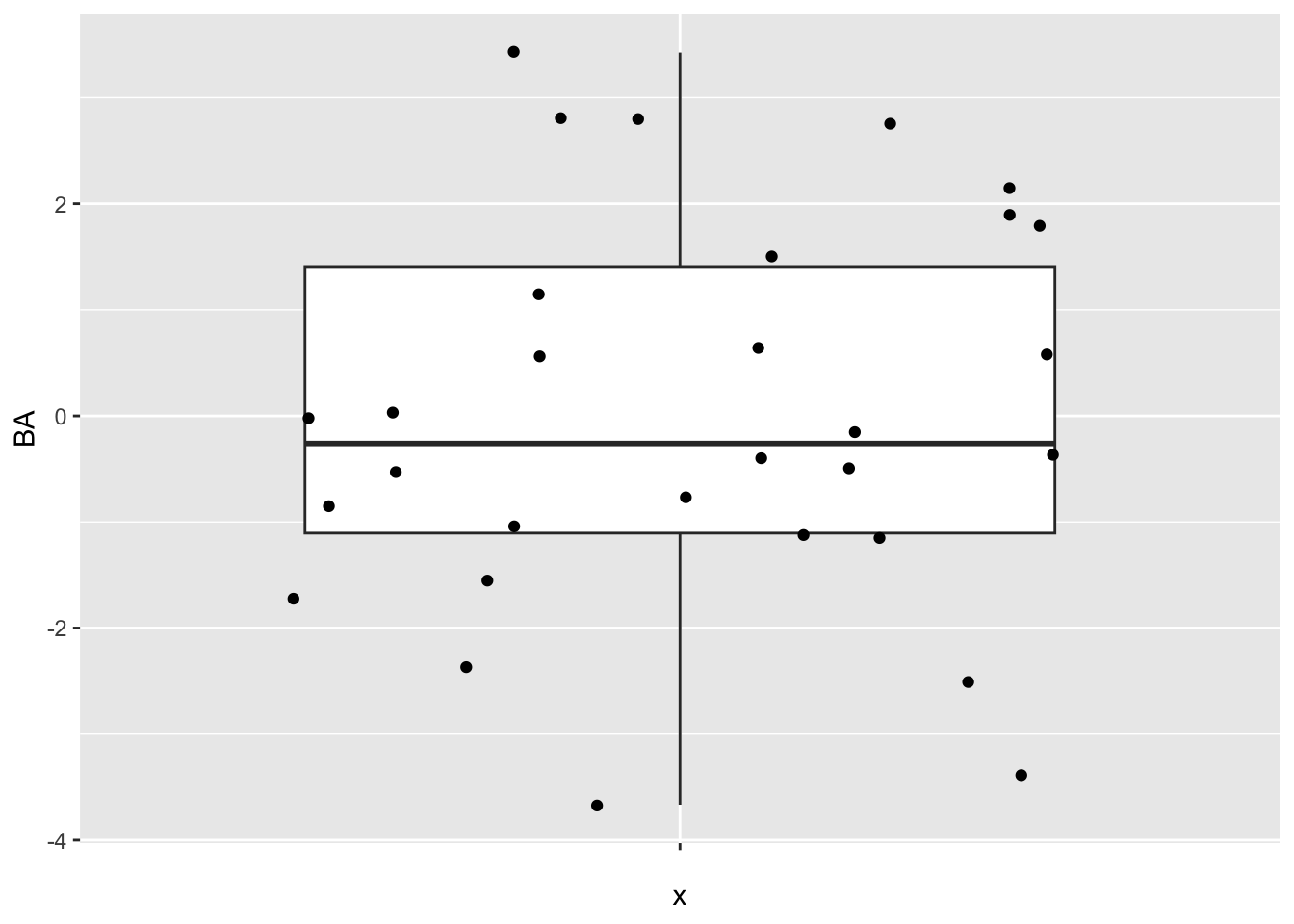

Example model based on first 4 PCs

```r
library(dplyr) # for %>% and pull()

# svdX <- svd(X) is assumed to have been computed earlier for the centered predictors X
k <- 30
Uk <- svdX$u[, 1:k]
Dk <- diag(svdX$d[1:k])
Zk <- Uk %*% Dk      # scores on the first k PCs

Y <- toxData %>%
  pull(BA)

m4 <- lm(Y ~ Zk[, 1:4])
summary(m4)
#>
#> Call:
#> lm(formula = Y ~ Zk[, 1:4])
#>
#> Residuals:
#> Min 1Q Median 3Q Max
#> -2.1438 -0.7033 -0.1222 0.7255 2.2997
#>
#> Coefficients:
#> Estimate Std. Error t value Pr(>|t|)
#> (Intercept) 7.961e-16 2.081e-01 0.000 1.0000
#> Zk[, 1:4]1 -5.275e-01 7.725e-02 -6.828 3.72e-07 ***
#> Zk[, 1:4]2 -1.231e-02 8.262e-02 -0.149 0.8828
#> Zk[, 1:4]3 -1.759e-01 8.384e-02 -2.098 0.0461 *
#> Zk[, 1:4]4 -3.491e-02 8.396e-02 -0.416 0.6811
#> ---
#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
#>
#> Residual standard error: 1.14 on 25 degrees of freedom
#> Multiple R-squared: 0.672, Adjusted R-squared: 0.6195
#> F-statistic: 12.8 on 4 and 25 DF, p-value: 8.352e-06
```

Note:

- the intercept is estimated as zero. (Why?) The model could have been fitted as `m4 <- lm(Y ~ -1 + Zk[, 1:4])`

- the PC-predictors are uncorrelated (by construction)

- the first PC-predictors are not necessarily the most important predictors

- $p$-values are not very meaningful when prediction is the objective

Methods for feature selection will be discussed later.

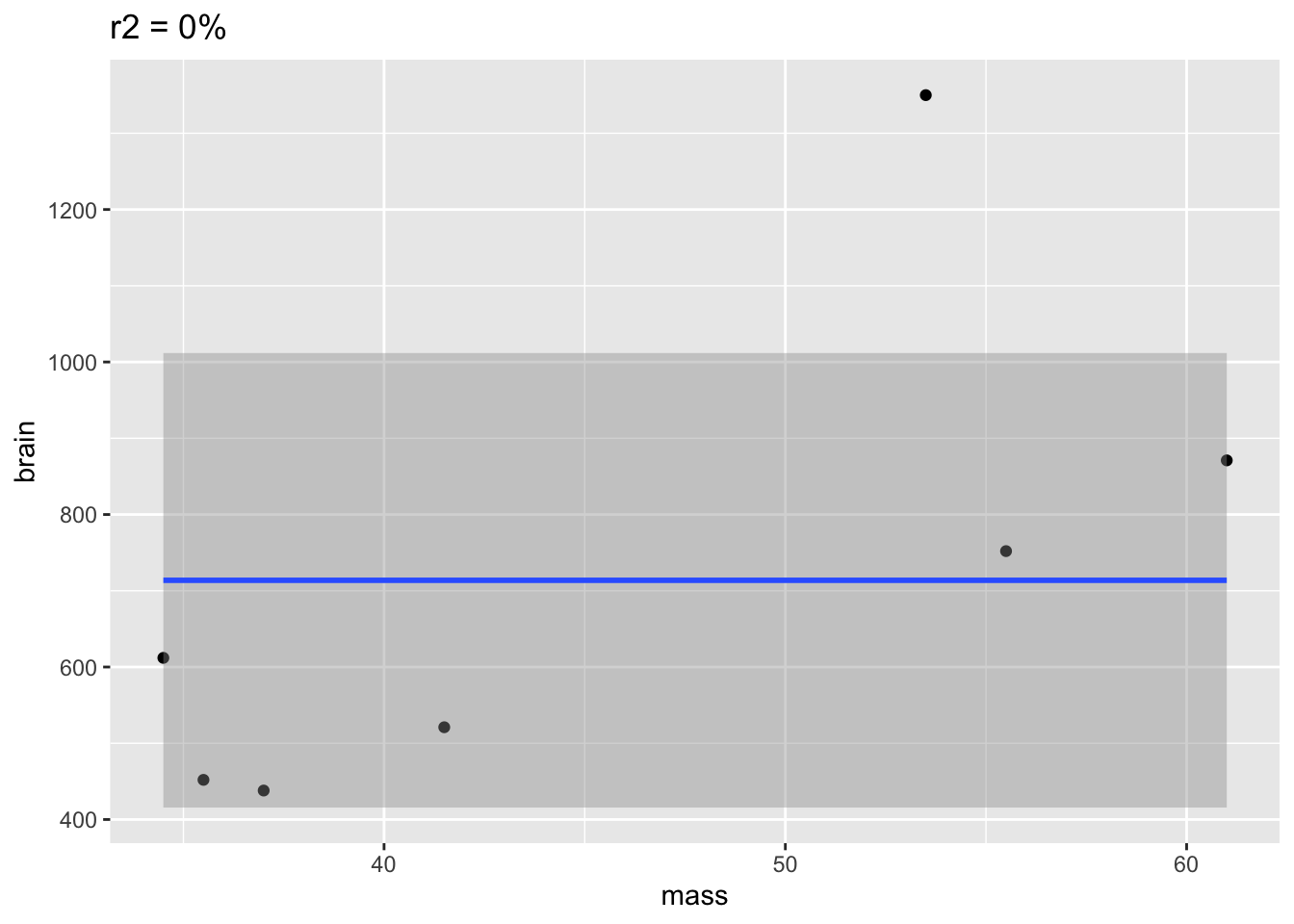

Ridge Regression

Penalty

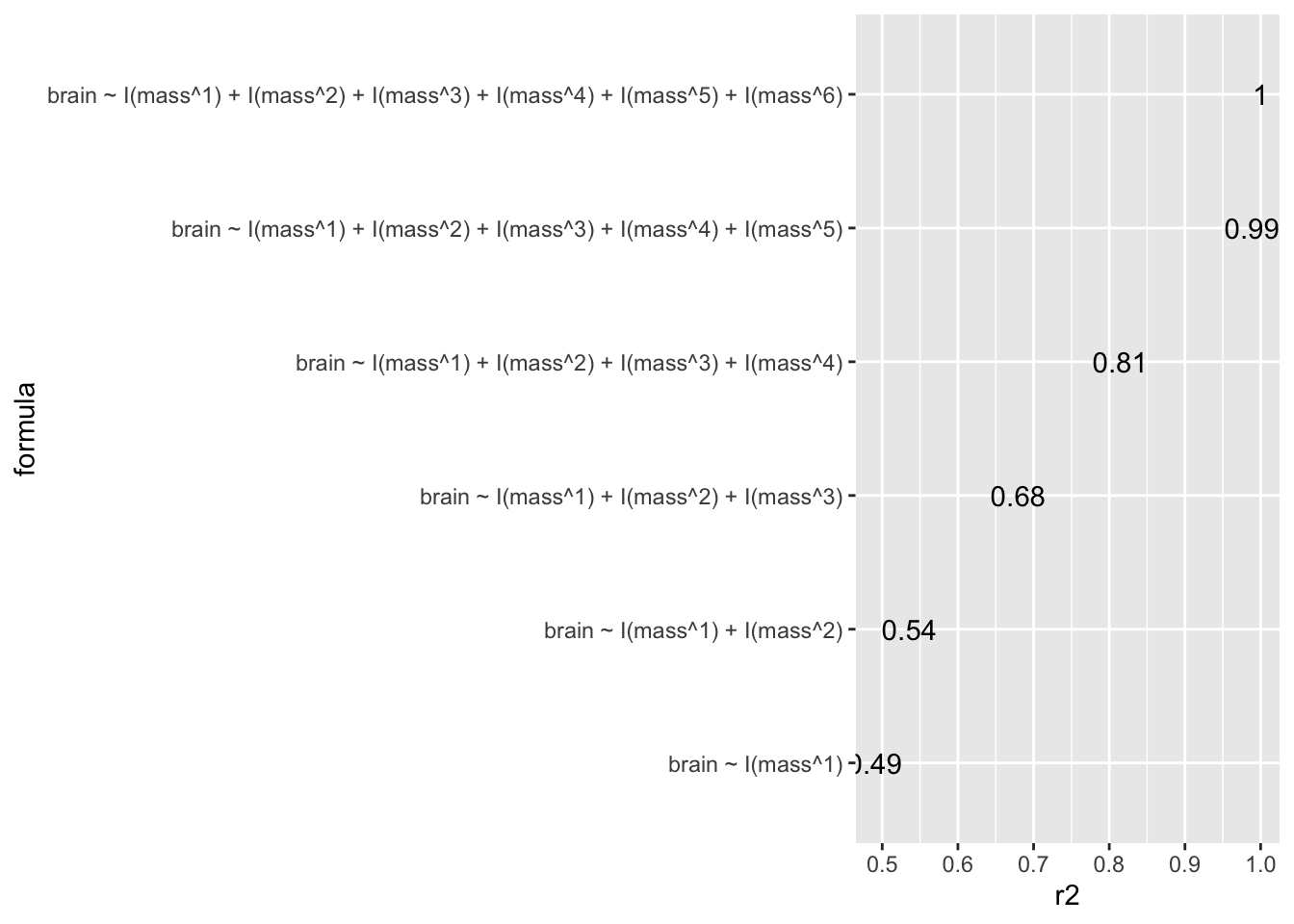

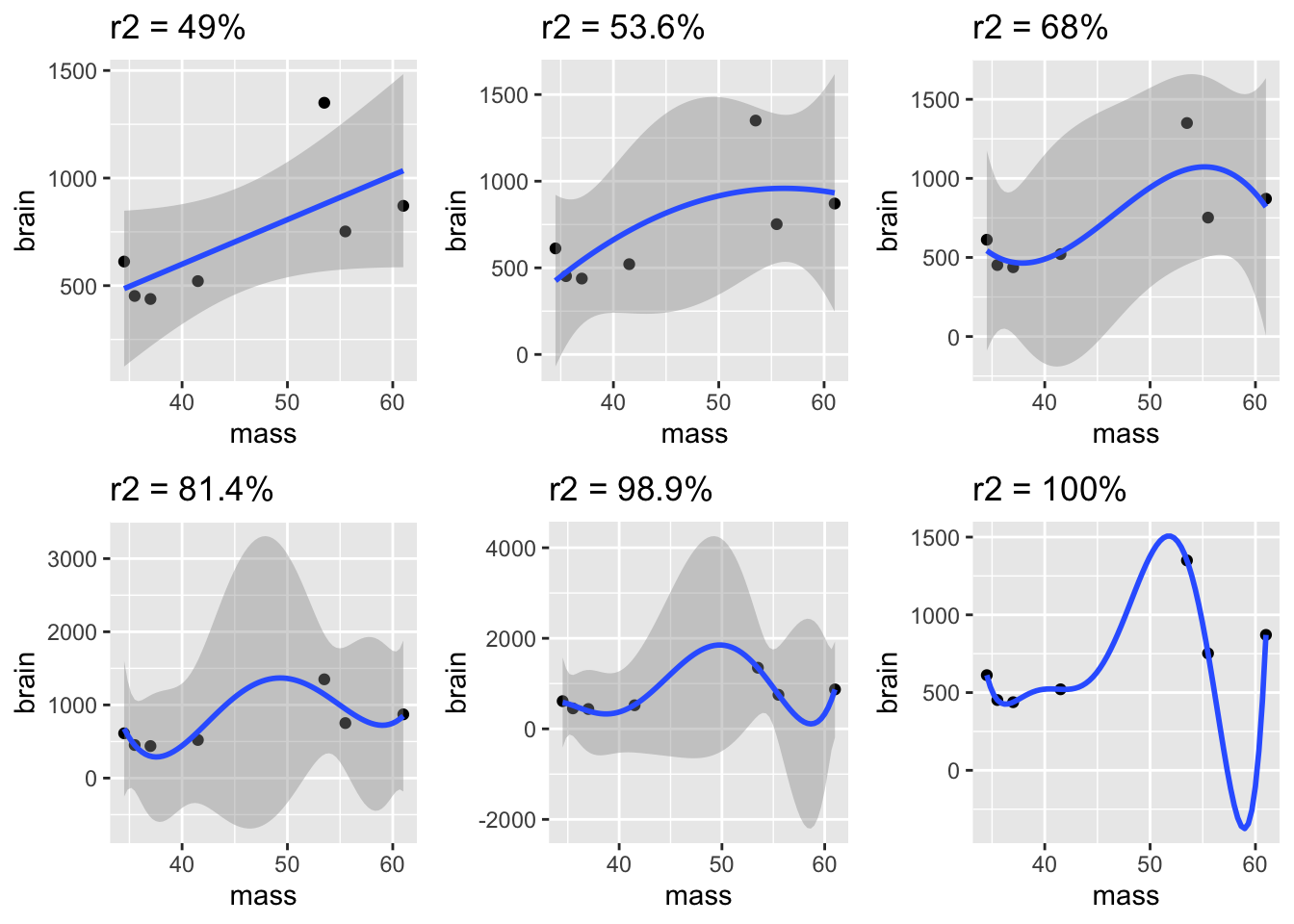

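The ridge parameter estimator is defined as the parameter that minimises the penalised least squares criterion

$$ \text{SSE}_\text{pen} = \Vert \mathbf{Y} - \mathbf{X}\boldsymbol{\beta} \Vert_2^2 + \lambda \Vert \boldsymbol{\beta} \Vert_2^2. $$

Note that this is equivalent to minimizing

$$ \Vert \mathbf{Y} - \mathbf{X}\boldsymbol{\beta} \Vert_2^2 \quad \text{subject to} \quad \Vert \boldsymbol{\beta} \Vert_2^2 \le s. $$

Note that $s$ has a one-to-one correspondence with $\lambda$.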

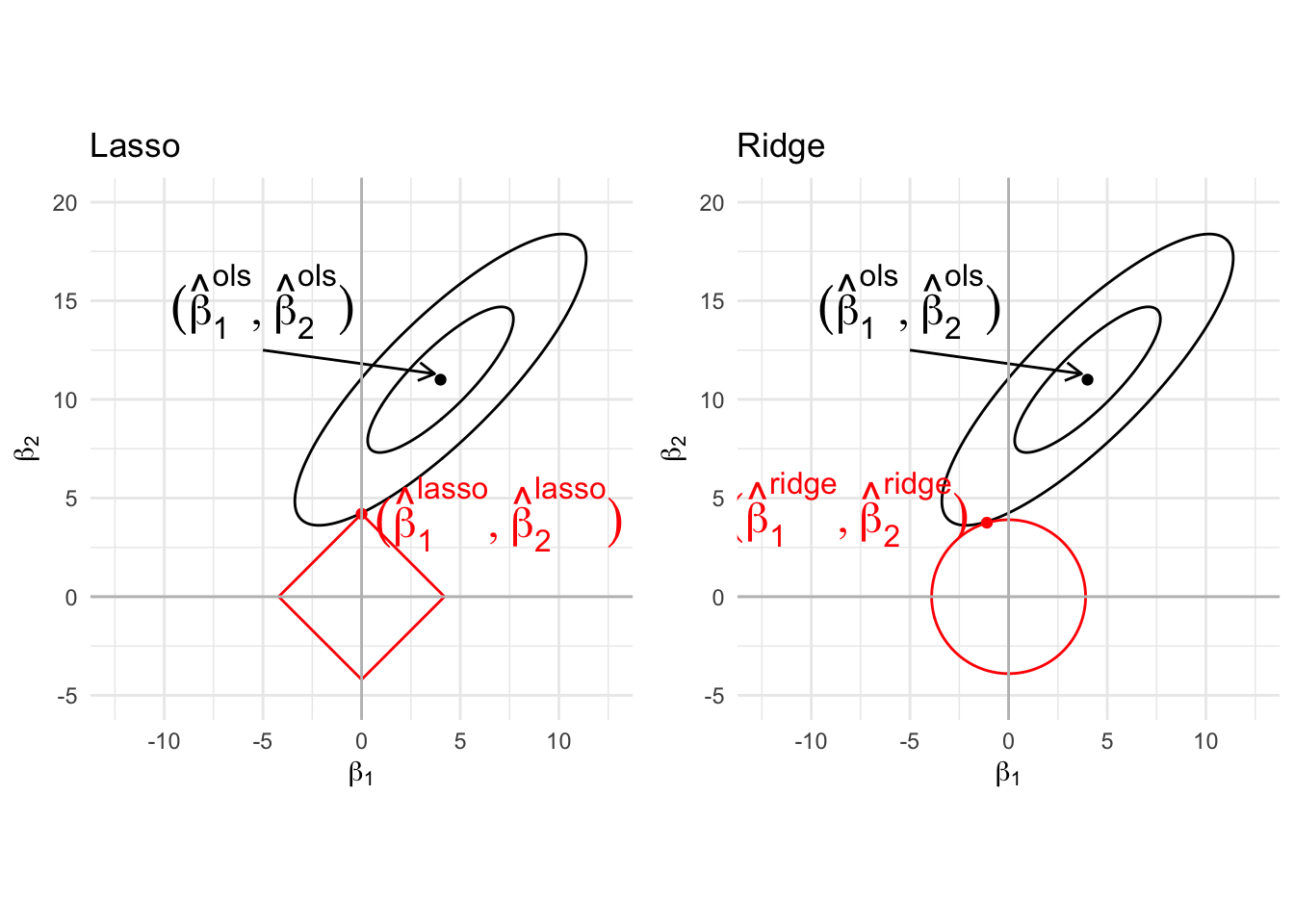

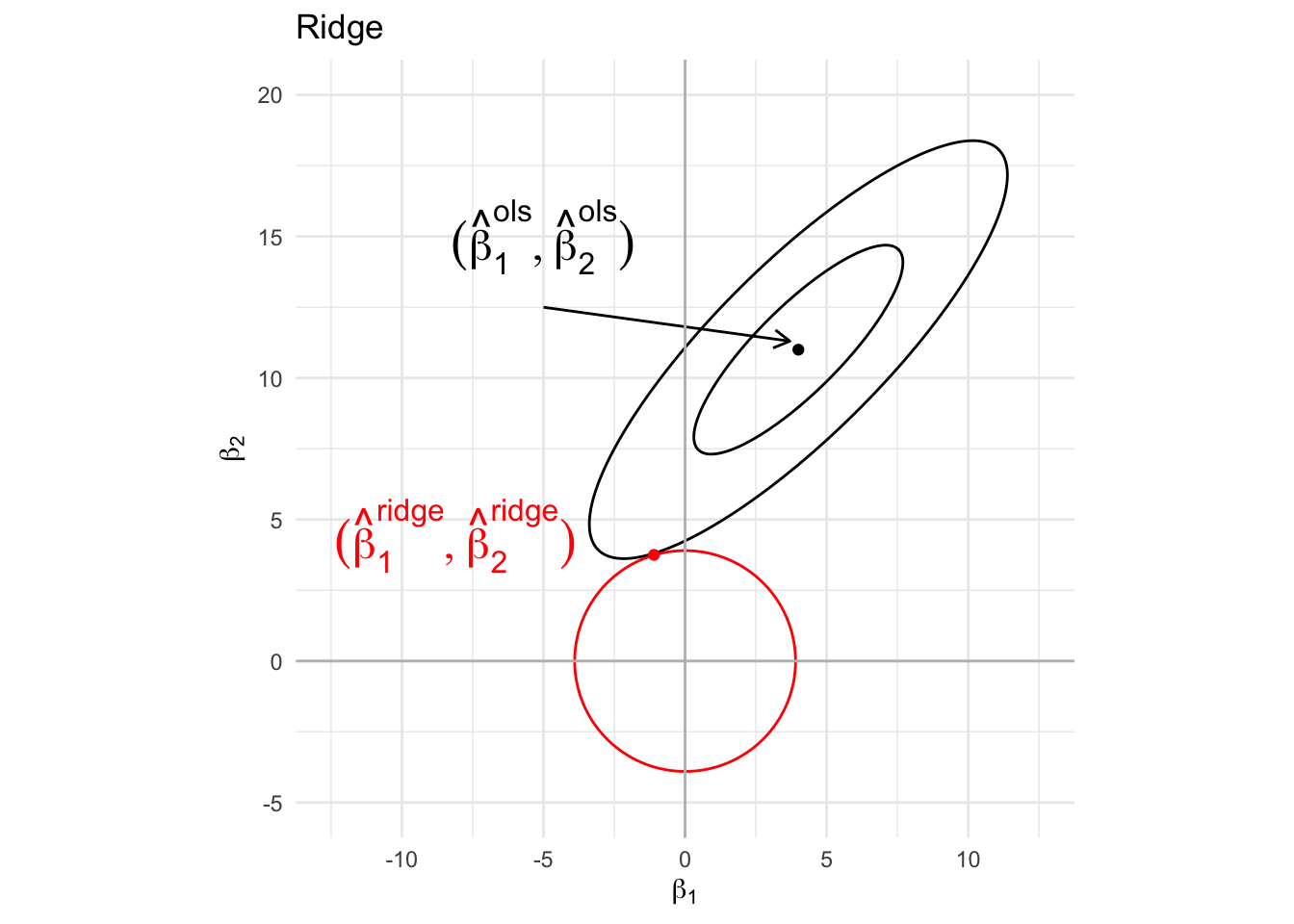

Graphical interpretation

Solution

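The solution is given by

$$ \hat{\boldsymbol{\beta}} = (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\mathbf{X}^T\mathbf{Y}. $$

It can be shown that $(\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})$ is always of rank $p$ if $\lambda > 0$.

Hence, $(\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})$ is invertible and $\hat{\boldsymbol{\beta}}$ exists even if $p \gg n$.

We also find

$$ \text{var}[\hat{\boldsymbol{\beta}}] = (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\mathbf{X}^T\mathbf{X}(\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\sigma^2. $$

However, it can be shown that improved intervals that also account for the bias can be constructed by using

$$ \text{var}[\hat{\boldsymbol{\beta}}] = (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\sigma^2. $$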

Proof

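The criterion to be minimised is

$$ \text{SSE}_\text{pen} = \sum_{i=1}^n \left(Y_i - \mathbf{x}_i^T\boldsymbol{\beta}\right)^2 + \lambda \sum_{j=1}^p \beta_j^2. $$

First we re-express SSE in matrix notation:

$$ \text{SSE}_\text{pen} = (\mathbf{Y} - \mathbf{X}\boldsymbol{\beta})^T(\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}) + \lambda\boldsymbol{\beta}^T\boldsymbol{\beta}. $$

The partial derivative w.r.t. $\boldsymbol{\beta}$ is

$$ \frac{\partial}{\partial\boldsymbol{\beta}}\text{SSE}_\text{pen} = -2\mathbf{X}^T(\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}) + 2\lambda\boldsymbol{\beta}. $$

Solving $\frac{\partial}{\partial\boldsymbol{\beta}}\text{SSE}_\text{pen} = 0$ gives

$$ \hat{\boldsymbol{\beta}} = (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\mathbf{X}^T\mathbf{Y} $$

(assumption: $(\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})$ is of rank $p$. This is always true if $\lambda > 0$.)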
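The closed-form solution can be verified numerically. The sketch below uses simulated data and a hypothetical penalty $\lambda = 2$ in a $p > n$ setting. `solve()` succeeds because $\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I}$ has full rank. The gradient $-2\mathbf{X}^T(\mathbf{Y} - \mathbf{X}\boldsymbol{\beta}) + 2\lambda\boldsymbol{\beta}$ vanishes at the solution.

```r
set.seed(5)
n <- 20
p <- 100                                   # p > n
lambda <- 2                                # hypothetical penalty
X <- matrix(rnorm(n * p), n, p)
X <- sweep(X, 2, colMeans(X))
Y <- rnorm(n)
Y <- Y - mean(Y)

# X^T X alone is singular here, but X^T X + lambda I is invertible for lambda > 0
betaRidge <- solve(crossprod(X) + lambda * diag(p), crossprod(X, Y))

# gradient of SSE_pen, -2 X^T (Y - X beta) + 2 lambda beta, at the solution
grad <- -2 * crossprod(X, Y - X %*% betaRidge) + 2 * lambda * betaRidge
max(abs(grad))                             # numerically zero
```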

Link with SVD

SVD and inverse

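Write the SVD of $\mathbf{X}$ ($p > n$) as

$$ \mathbf{X} = \sum_{i=1}^n \delta_i \mathbf{u}_i \mathbf{v}_i^T = \sum_{i=1}^p \delta_i \mathbf{u}_i \mathbf{v}_i^T = \mathbf{U}\boldsymbol{\Delta}\mathbf{V}^T = \mathbf{Z}\mathbf{V}^T $$

with

- $\delta_{n+1} = \delta_{n+2} = \cdots = \delta_p = 0$

- $\boldsymbol{\Delta}$ a $p \times p$ diagonal matrix of the $\delta_1, \ldots, \delta_p$

- $\mathbf{U}$ an $n \times p$ matrix and $\mathbf{V}$ a $p \times p$ matrix. Note that only the first $n$ columns of $\mathbf{U}$ and $\mathbf{V}$ are informative.

With the SVD of $\mathbf{X}$ we write

$$ \mathbf{X}^T\mathbf{X} = \mathbf{V}\boldsymbol{\Delta}^2\mathbf{V}^T. $$

The inverse of $\mathbf{X}^T\mathbf{X}$ is then given by

$$ (\mathbf{X}^T\mathbf{X})^{-1} = \mathbf{V}\boldsymbol{\Delta}^{-2}\mathbf{V}^T. $$

Since $\boldsymbol{\Delta}$ has $\delta_{n+1} = \delta_{n+2} = \cdots = \delta_p = 0$, it is not invertible.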

SVD of penalised matrix

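It can be shown that

$$ \mathbf{X}^T\mathbf{X} + \lambda\mathbf{I} = \mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})\mathbf{V}^T, $$

i.e. adding a constant to the diagonal elements does not affect the eigenvectors, and all eigenvalues are increased by this constant.

In particular, zero eigenvalues become $\lambda$.

Hence,

$$ (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1} = \mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})^{-1}\mathbf{V}^T, $$

which can be computed even when some eigenvalues in $\boldsymbol{\Delta}^2$ are zero.

Note that for high dimensional data ($p \gg n$) many eigenvalues are zero, because $\mathbf{X}^T\mathbf{X}$ is a $p \times p$ matrix and has rank $n$.

The identity $\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I} = \mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})\mathbf{V}^T$ is easily checked (using $\mathbf{V}\mathbf{V}^T = \mathbf{I}$):

$$ \mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})\mathbf{V}^T = \mathbf{V}\boldsymbol{\Delta}^2\mathbf{V}^T + \lambda\mathbf{V}\mathbf{V}^T = \mathbf{X}^T\mathbf{X} + \lambda\mathbf{I}. $$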
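The sketch below checks these results numerically, using simulated data with a hypothetical size and $\lambda$:

- the eigenvalues of $\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I}$ are $\delta_j^2 + \lambda$, and all remaining ones equal $\lambda$;
- the ridge estimator can be computed from the thin SVD as $\mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})^{-1}\boldsymbol{\Delta}\mathbf{U}^T\mathbf{Y}$, using only the $n$ informative components.

```r
set.seed(6)
n <- 20
p <- 100
lambda <- 2                                # hypothetical penalty
X <- matrix(rnorm(n * p), n, p)
X <- sweep(X, 2, colMeans(X))
Y <- rnorm(n)
Y <- Y - mean(Y)

# direct computation with the p x p matrix X^T X + lambda I
betaDirect <- solve(crossprod(X) + lambda * diag(p), crossprod(X, Y))

# via the thin SVD: X^T Y only has components along the n informative v_j,
# so V (Delta^2 + lambda I)^{-1} Delta U^T Y needs only those n components
s <- svd(X)
betaSvd <- s$v %*% ((s$d / (s$d^2 + lambda)) * crossprod(s$u, Y))

# eigenvalues of X^T X + lambda I: delta_j^2 + lambda, the remaining ones equal lambda
ev <- eigen(crossprod(X) + lambda * diag(p), symmetric = TRUE, only.values = TRUE)$values
```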

Properties

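- The ridge estimator is biased! The $\hat{\boldsymbol{\beta}}$ are shrunken towards zero!

- Note that the shrinkage is larger in the direction of the smaller eigenvalues.

- The variance of the prediction $\hat{f}(\mathbf{x}) = \mathbf{x}^T\hat{\boldsymbol{\beta}}$,

$$ \text{var}[\hat{f}(\mathbf{x}) \mid \mathbf{x}] = \mathbf{x}^T\,\text{var}[\hat{\boldsymbol{\beta}}]\,\mathbf{x}, $$

is smaller than with the least-squares estimator.

- Through the bias-variance trade-off it is hoped that better predictions, in terms of the expected conditional test error, can be obtained for an appropriate choice of $\lambda$.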
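The shrinkage can be made explicit with the SVD identities of the previous section. Here is a short derivation, assuming the model $\text{E}[\mathbf{Y} \mid \mathbf{X}] = \mathbf{X}\boldsymbol{\beta}$:

$$
\begin{aligned}
\text{E}[\hat{\boldsymbol{\beta}}] &= (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\mathbf{X}^T\mathbf{X}\boldsymbol{\beta} \\
&= \mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})^{-1}\mathbf{V}^T\,\mathbf{V}\boldsymbol{\Delta}^2\mathbf{V}^T\boldsymbol{\beta} \\
&= \mathbf{V}\,\text{diag}\left(\frac{\delta_j^2}{\delta_j^2 + \lambda}\right)\mathbf{V}^T\boldsymbol{\beta}.
\end{aligned}
$$

The component of $\boldsymbol{\beta}$ along $\mathbf{v}_j$ is multiplied by $\delta_j^2/(\delta_j^2 + \lambda)$. For $\lambda > 0$ this factor is smaller than one, and it is closer to zero for smaller $\delta_j^2$; it equals zero when $\delta_j = 0$.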

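Recall the expression of the expected conditional test error

$$ \text{Err}(\mathbf{x}) = \text{E}[(\hat{Y} - Y^*)^2 \mid \mathbf{x}] = \text{var}[\hat{Y} \mid \mathbf{x}] + \text{bias}^2(\mathbf{x}) + \text{var}[Y^* \mid \mathbf{x}], $$

with $\text{bias}(\mathbf{x}) = \text{E}[\hat{Y} \mid \mathbf{x}] - \mu(\mathbf{x})$, and where

- $\hat{Y} = \hat{Y}(\mathbf{x}) = \mathbf{x}^T\hat{\boldsymbol{\beta}}$ is the prediction at $\mathbf{x}$

- $Y^*$ is an outcome at predictor $\mathbf{x}$

- $\text{var}[Y^* \mid \mathbf{x}]$ is the irreducible error that does not depend on the model. It simply originates from observations that randomly fluctuate around the true mean $\mu(\mathbf{x}) = \text{E}[Y^* \mid \mathbf{x}]$.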

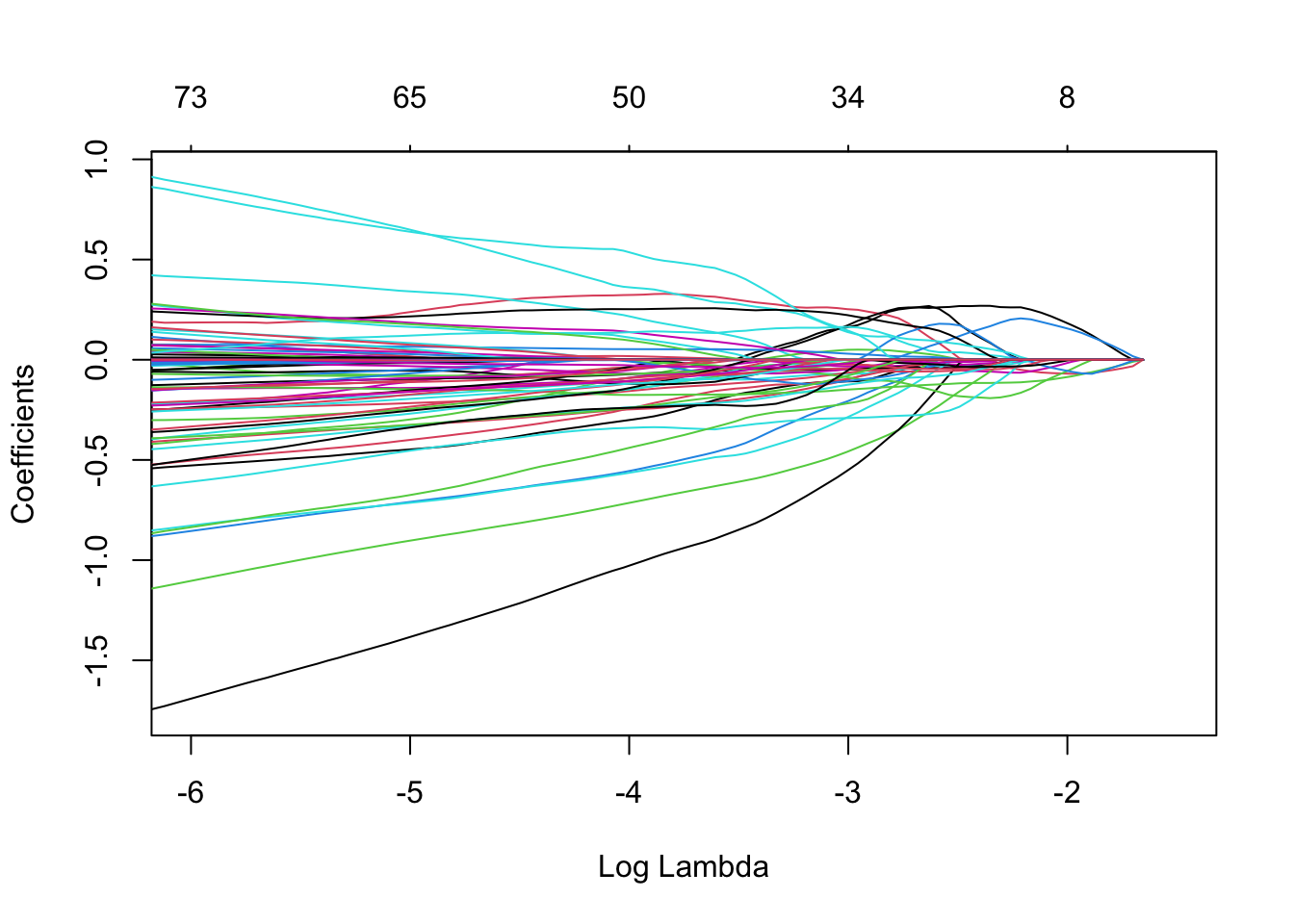

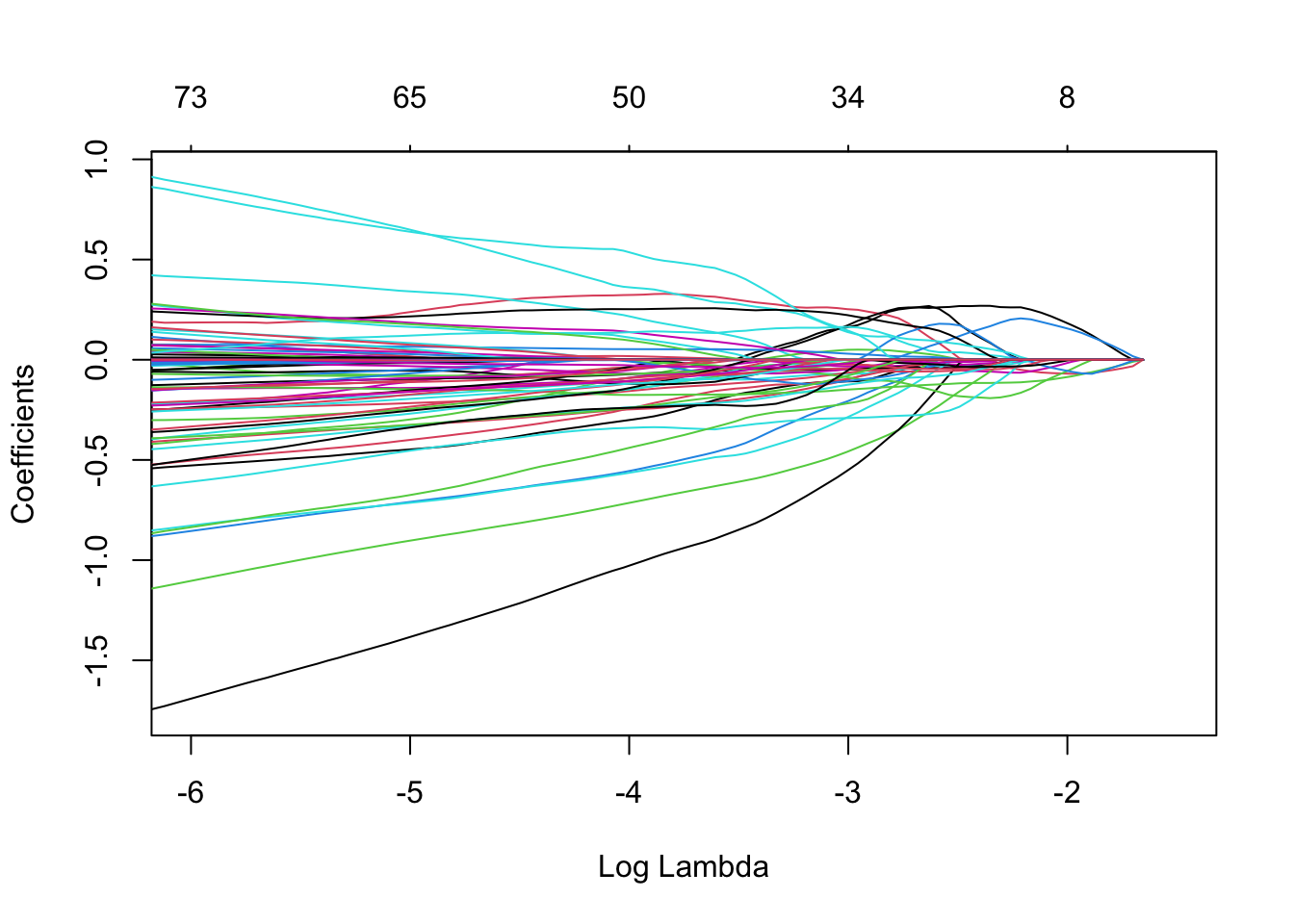

Toxicogenomics example

```r
library(glmnet)
library(dplyr) # for %>% and pull()

mRidge <- glmnet(
  x = toxData[, -1] %>%
    as.matrix,
  y = toxData %>%
    pull(BA),
  alpha = 0) # ridge: alpha = 0
```

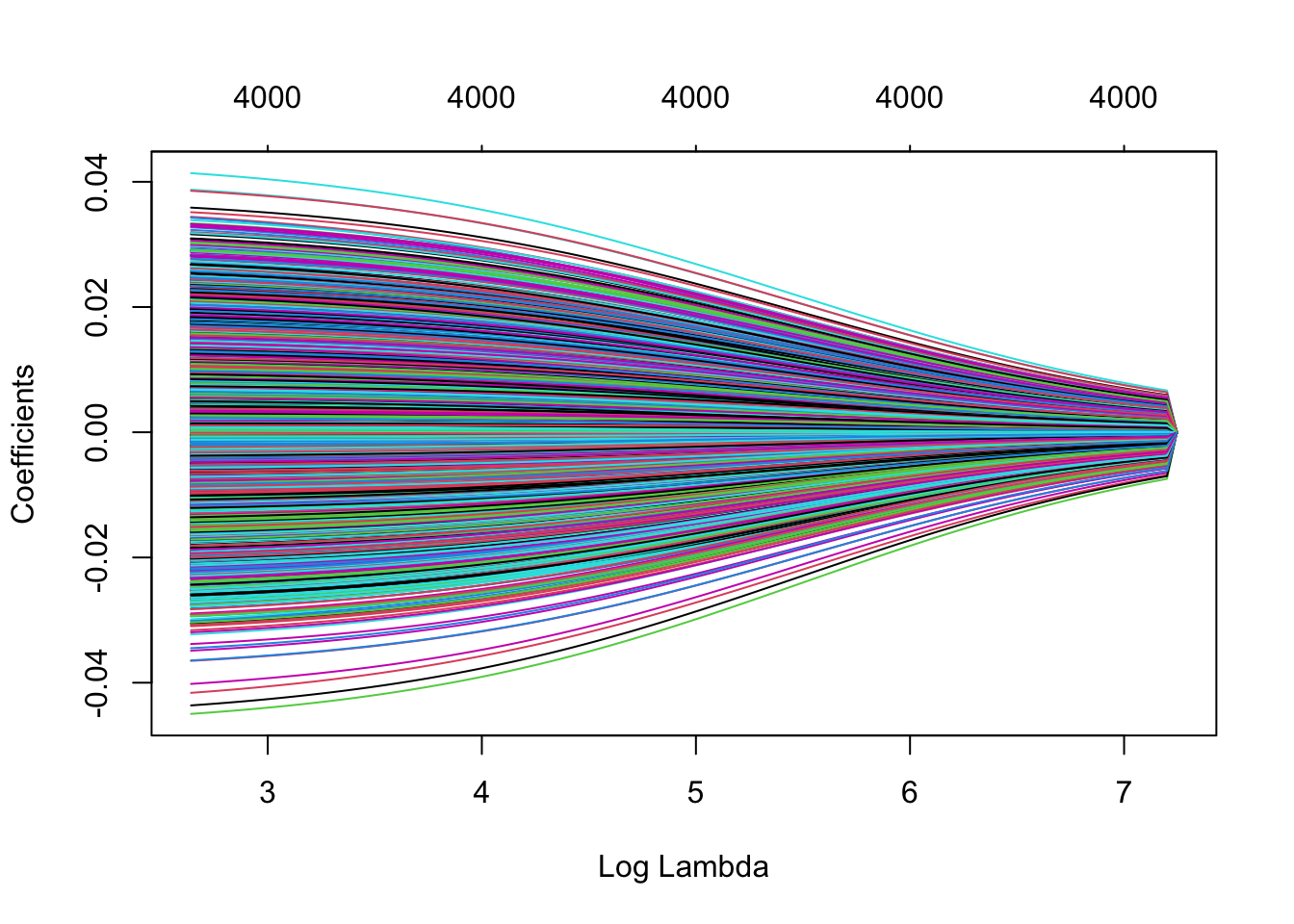

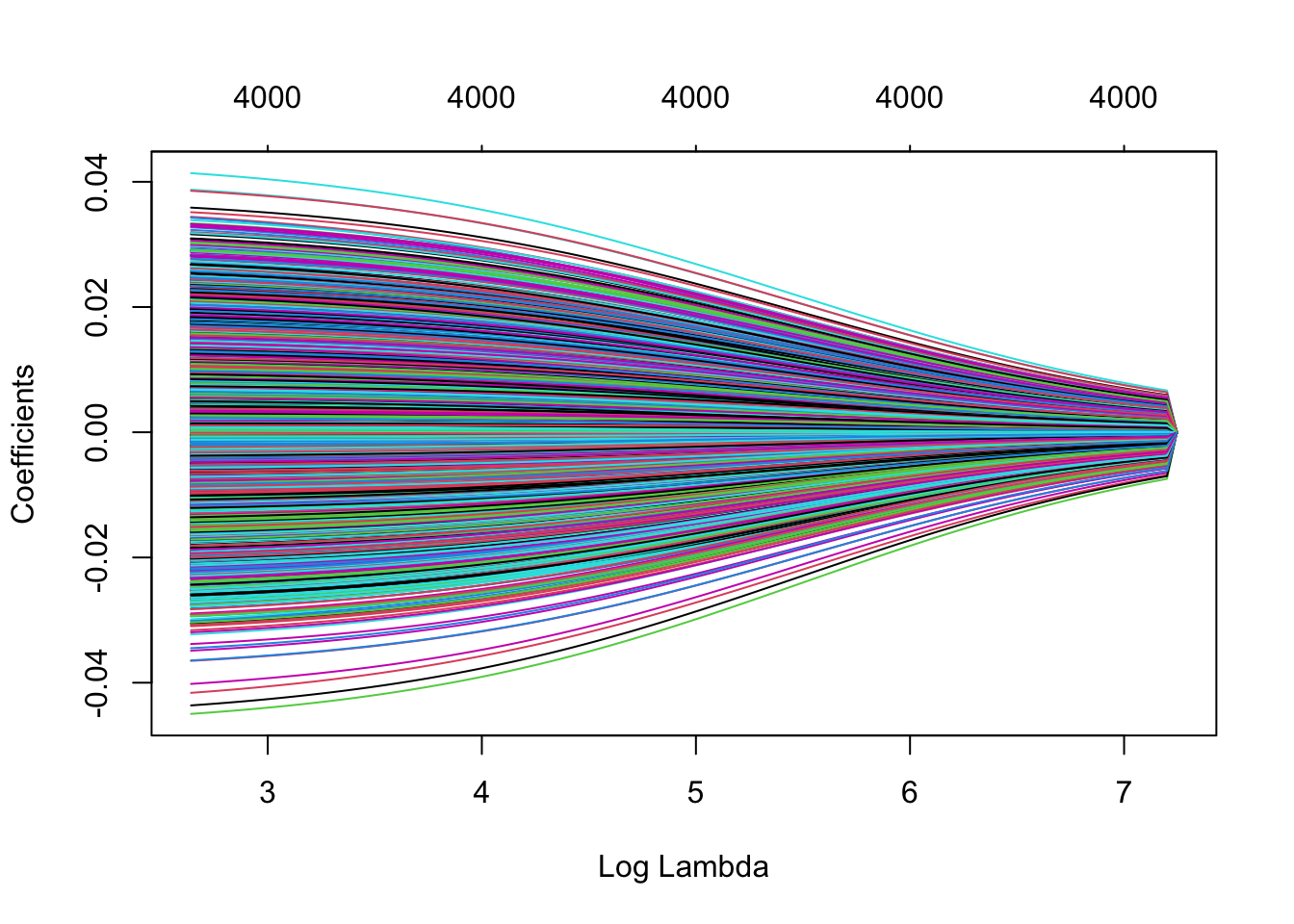

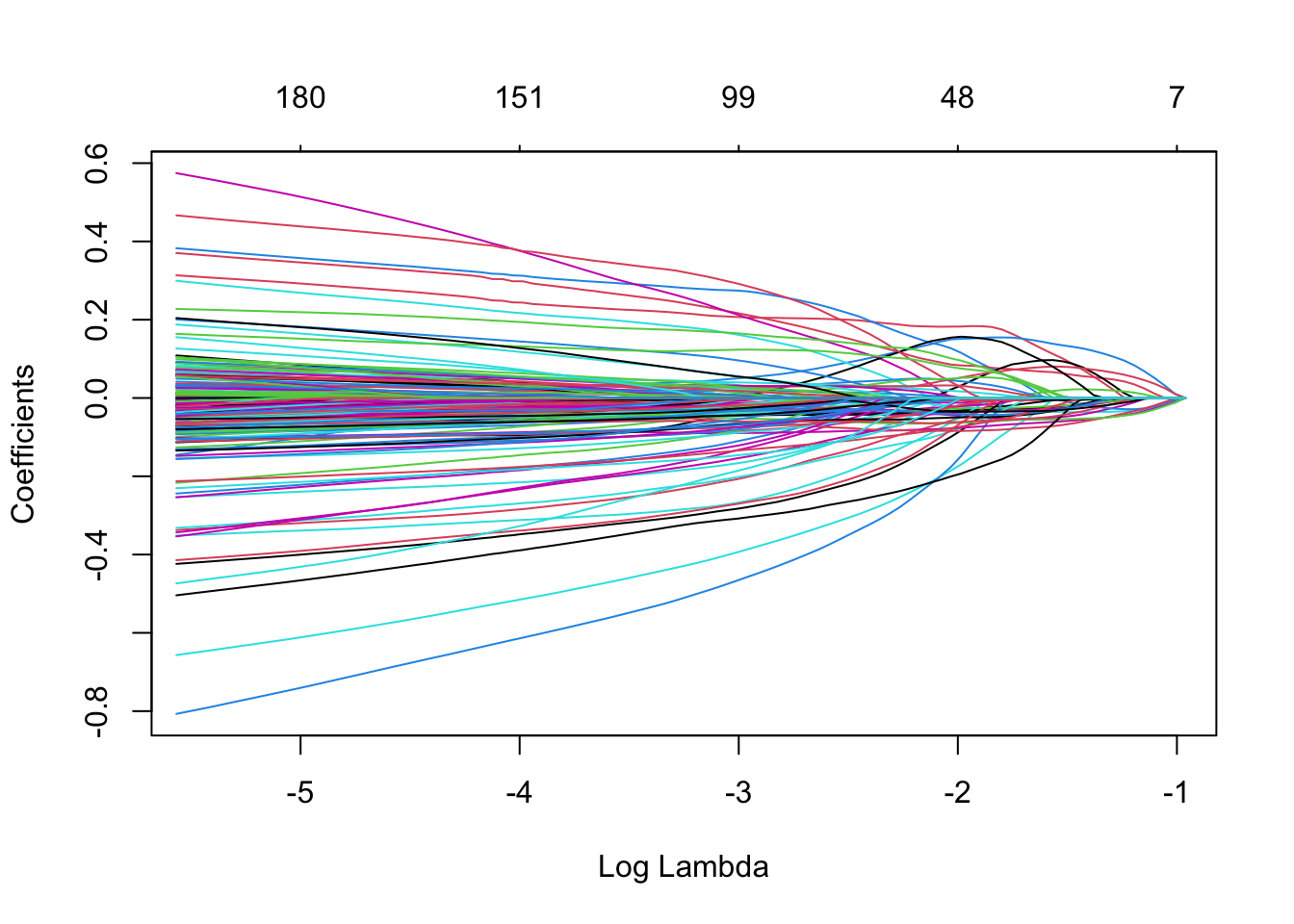

```r
plot(mRidge, xvar = "lambda")
```

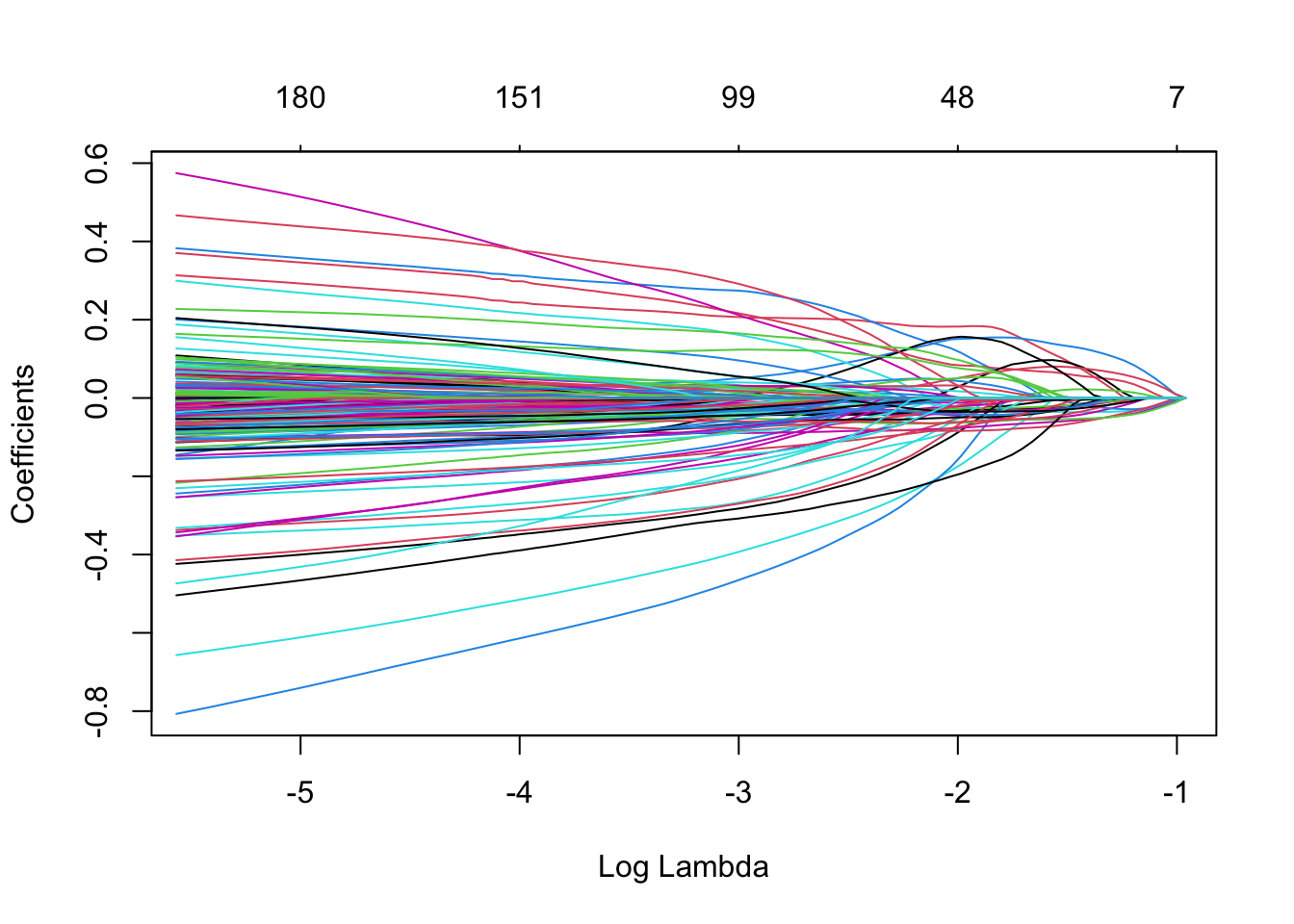

The R function glmnet uses `lambda` to refer to the penalty parameter. In this course we also denote the penalty parameter by $\lambda$, even though $\lambda$ is often used for eigenvalues as well.

The graph shows that with increasing penalty parameter, the parameter estimates are shrunken towards zero. The estimates only reach zero for $\lambda \rightarrow \infty$. The stronger the shrinkage, the larger the bias (towards zero) and the smaller the variance of the parameter estimators (and hence also the smaller the variance of the predictions).

Another (informal) viewpoint is the following. By shrinking the estimates towards zero, the estimates lose some of their "degrees of freedom", so that the parameters become estimable with only $n$ data points. Even with a very small $\lambda > 0$, the parameters regain their estimability. However, note that the variance of the estimator is given by

$$ \text{var}[\hat{\boldsymbol{\beta}}] = (\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}\sigma^2 = \mathbf{V}(\boldsymbol{\Delta}^2 + \lambda\mathbf{I})^{-1}\mathbf{V}^T\sigma^2. $$

Hence, a small $\lambda$ will result in large variances of the parameter estimators. The larger $\lambda$, the smaller the variances become. In the limit, as $\lambda \rightarrow \infty$, the estimates converge to zero and show no variability any longer.
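The sketch below traces both effects with the SVD formulas on simulated data, using a hypothetical $\lambda$ grid and $\sigma^2 = 1$. As $\lambda$ increases, two quantities decrease:

- the $L_2$ norm of $\hat{\boldsymbol{\beta}}$;
- the total variance $\text{trace}[(\mathbf{X}^T\mathbf{X} + \lambda\mathbf{I})^{-1}]\sigma^2 = \sum_{j=1}^p \sigma^2/(\delta_j^2 + \lambda)$.

```r
set.seed(8)
n <- 20
p <- 100
X <- matrix(rnorm(n * p), n, p)
X <- sweep(X, 2, colMeans(X))
Y <- rnorm(n)
Y <- Y - mean(Y)
s <- svd(X)

lambdas <- c(0.01, 0.1, 1, 10, 100, 1000)   # hypothetical grid of penalty values

# ridge estimate from the thin SVD: V (Delta^2 + lambda I)^{-1} Delta U^T Y
ridgeSvd <- function(lambda) s$v %*% ((s$d / (s$d^2 + lambda)) * crossprod(s$u, Y))

# L2 norm of the estimates: shrinks towards zero as lambda grows
normBeta <- sapply(lambdas, function(l) sqrt(sum(ridgeSvd(l)^2)))

# total variance trace[(X^T X + lambda I)^{-1}] (sigma^2 = 1) from the eigenvalues
eigXtX <- c(s$d^2, rep(0, p - n))           # the p eigenvalues of X^T X
totVar <- sapply(lambdas, function(l) sum(1 / (eigXtX + l)))

rbind(lambda = lambdas, normBeta = normBeta, totVar = totVar)
```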

Evaluation of Prediction Models

Predictions are calculated with the fitted model

$$ \hat{Y}(\mathbf{x}) = \hat{m}(\mathbf{x}) = \mathbf{x}^T\hat{\boldsymbol{\beta}}. $$

When focussing on prediction, we want the prediction error to be as small as possible.

The prediction error for a prediction at covariate pattern $\mathbf{x}$ is given by

$$ \hat{Y}(\mathbf{x}) - Y^*, $$

where $Y^*$ is an outcome at covariate pattern $\mathbf{x}$.

Prediction is typically used to predict an outcome before it is observed.

- Hence, the outcome $Y^*$ is not observed yet, and

- the prediction error cannot be computed.

Recall that the prediction model $\hat{Y}(\mathbf{x})$ is estimated by using data in the training data set $(\mathbf{X}, \mathbf{Y})$, and

that the outcome $Y^*$ is an outcome at $\mathbf{x}$ which is assumed to be independent of the training data.

The goal is to use the prediction model for predicting a future observation ($Y^*$), i.e. an observation that still has to be realised/observed (otherwise prediction seems rather useless).

Hence, $Y^*$ can never be part of the training data set.

Here we provide definitions and we show how the prediction performance of a prediction model can be evaluated from data.

Let $\mathcal{T}$ denote the training data, from which the prediction model is built. This building process typically involves feature selection and parameter estimation.

We will use a more general notation for the prediction model: $\hat{m}^{\mathcal{T}}(\mathbf{x}) = \hat{Y}(\mathbf{x})$.

Test or Generalisation Error

The test or generalisation error for prediction model $\hat{m}^{\mathcal{T}}$ is given by

$$ \text{Err}_{\mathcal{T}} = \text{E}_{Y^*, \mathbf{X}^*}\left[\left(\hat{m}^{\mathcal{T}}(\mathbf{X}^*) - Y^*\right)^2 \mid \mathcal{T}\right], $$

where $(Y^*, \mathbf{X}^*)$ is independent of the training data.

- Note that the test error is conditional on the training data $\mathcal{T}$.

- Hence, the test error evaluates the performance of the single model built from the observed training data.

- This is the ultimate target of the model assessment, because it is exactly this prediction model that will be used in practice and applied to future predictors $\mathbf{X}^*$ to predict $Y^*$.

- The test error is defined as an average over all such future observations $(Y^*, \mathbf{X}^*)$.

Conditional test error

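Sometimes the conditional test error is used:

The conditional test error in $\mathbf{x}$ for prediction model $\hat{m}^{\mathcal{T}}$ is given by

$$ \text{Err}_{\mathcal{T}}(\mathbf{x}) = \text{E}_{Y^*}\left[\left(\hat{m}^{\mathcal{T}}(\mathbf{x}) - Y^*\right)^2 \mid \mathcal{T}, \mathbf{x}\right], $$

where $Y^*$ is an outcome at predictor $\mathbf{x}$, independent of the training data.

Hence,

$$ \text{Err}_{\mathcal{T}} = \text{E}_{\mathbf{X}^*}\left[\text{Err}_{\mathcal{T}}(\mathbf{X}^*)\right]. $$

A closely related error is the insample error.

Insample Error

The insample error for prediction model $\hat{m}^{\mathcal{T}}$ is given by

$$ \text{Err}_{\text{in}\mathcal{T}} = \frac{1}{n}\sum_{i=1}^n \text{Err}_{\mathcal{T}}(\mathbf{x}_i), $$

i.e. the insample error is the sample average of the conditional test errors evaluated in the training dataset predictors $\mathbf{x}_i$.

Since $\text{Err}_{\mathcal{T}}$ is an average over all $\mathbf{X}$, even over those predictors not observed in the training dataset, it is sometimes referred to as the outsample error.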

Estimation of the insample error

We start by introducing the training error rate, which is closely related to the MSE in linear models.

Training error

The training error is given by

$$ \overline{\text{err}} = \frac{1}{n}\sum_{i=1}^n \left(Y_i - \hat{m}^{\mathcal{T}}(\mathbf{x}_i)\right)^2, $$

where the $(Y_i, \mathbf{x}_i)$ are from the training dataset, which is also used for the calculation of $\hat{m}^{\mathcal{T}}$.

The training error is an overly optimistic estimate of the test error $\text{Err}_{\mathcal{T}}$.

The training error never increases when the model becomes more complex, so $\overline{\text{err}}$ cannot be used directly as a model selection criterion.

Indeed, model parameters are often estimated by minimising the training error (cf. SSE).

- Hence the fitted model adapts to the training data, and

- the training error will be an overly optimistic estimate of the test error $\text{Err}_{\mathcal{T}}$.
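Below is a small simulation sketch of nested linear models fitted by least squares. The data-generating model is hypothetical: only the first of 20 predictors matters. The training error never increases as predictors are added. The error on a large independent sample, used here as an approximation of $\text{Err}_{\mathcal{T}}$, typically does not keep decreasing, although the exact pattern depends on the simulated sample.

```r
set.seed(9)
simData <- function(n, p = 20) {
  X <- matrix(rnorm(n * p), n, p)
  list(X = X, Y = X[, 1] + rnorm(n))     # hypothetical model: only predictor 1 matters
}
train <- simData(50)
test <- simData(10000)                   # large independent sample approximating Err_T

errs <- t(sapply(1:20, function(k) {
  Xk <- cbind(1, train$X[, 1:k, drop = FALSE])     # intercept + first k predictors
  fit <- lm.fit(Xk, train$Y)
  predTest <- cbind(1, test$X[, 1:k, drop = FALSE]) %*% fit$coefficients
  c(train = mean(fit$residuals^2),
    test = mean((test$Y - predTest)^2))
}))
errs
```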

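It can be shown that the training error is related to the insample test error via

$$ \text{E}_{\mathbf{Y}}\left[\text{Err}_{\text{in}\mathcal{T}}\right] = \text{E}_{\mathbf{Y}}\left[\overline{\text{err}}\right] + \frac{2}{n}\sum_{i=1}^n \text{cov}_{\mathbf{Y}}\left[\hat{m}^{\mathcal{T}}(\mathbf{x}_i), Y_i\right]. $$

Note that for linear models

$$ \hat{\mathbf{Y}} = \mathbf{X}\hat{\boldsymbol{\beta}} = \mathbf{H}\mathbf{Y} $$

with

- $\mathbf{H} = \mathbf{X}(\mathbf{X}^T\mathbf{X})^{-1}\mathbf{X}^T$ the hat matrix and

- all $Y_i$ assumed to be independently distributed with variance $\sigma^2$.

Hence, for linear models with independent observations

$$ \sum_{i=1}^n \text{cov}_{\mathbf{Y}}\left[\hat{m}^{\mathcal{T}}(\mathbf{x}_i), Y_i\right] = \text{trace}(\mathbf{H})\,\sigma^2 = p\,\sigma^2. $$

We can thus estimate the insample error by Mallows' $C_p$

$$ C_p = \overline{\text{err}} + \frac{2p\hat{\sigma}^2}{n}, $$

with $p$ the number of predictors.

- Mallows' $C_p$ is often used for model selection.

- Note that we can also consider it as a kind of penalized least squares:

$$ n \times C_p = \Vert \mathbf{Y} - \mathbf{X}\hat{\boldsymbol{\beta}} \Vert_2^2 + 2\hat{\sigma}^2 \Vert \hat{\boldsymbol{\beta}} \Vert_0 $$

with $L_0$ norm $\Vert \hat{\boldsymbol{\beta}} \Vert_0 = \sum_{j=1}^p I(\hat{\beta}_j \neq 0) = p$.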
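Below is a sketch of Mallows' $C_p$ for a sequence of nested linear models on simulated, double centered data. Estimating $\hat{\sigma}^2$ from the largest model is a common choice, but it is an assumption here. The last lines check that the trace of the hat matrix equals the number of predictors.

```r
set.seed(10)
n <- 50
X <- matrix(rnorm(n * 10), n, 10)
X <- sweep(X, 2, colMeans(X))               # double centered data: no intercept needed
Y <- X[, 1] - X[, 2] + rnorm(n)             # hypothetical outcome
Y <- Y - mean(Y)

# sigma^2 estimated from the largest model (an assumption, a common choice)
fullFit <- lm(Y ~ -1 + X)
sigma2Hat <- sum(residuals(fullFit)^2) / (n - ncol(X))

# Mallows' C_p for the nested models with the first p predictors
cp <- sapply(1:10, function(p) {
  fit <- lm(Y ~ -1 + X[, 1:p, drop = FALSE])
  mean(residuals(fit)^2) + 2 * p * sigma2Hat / n
})
which.min(cp)

# the trace of the hat matrix equals the number of predictors (here 3)
X3 <- X[, 1:3]
H <- X3 %*% solve(crossprod(X3)) %*% t(X3)
sum(diag(H))
```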

Expected test error

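The test or generalisation error was defined conditionally on the training data. Averaging over the distribution of training datasets gives the expected test error:

$$ \text{E}_{\mathcal{T}}\left[\text{Err}_{\mathcal{T}}\right] = \text{E}_{\mathcal{T}}\left[\text{E}_{Y^*, \mathbf{X}^*}\left[\left(\hat{m}^{\mathcal{T}}(\mathbf{X}^*) - Y^*\right)^2 \mid \mathcal{T}\right]\right] = \text{E}_{Y^*, \mathbf{X}^*, \mathcal{T}}\left[\left(\hat{m}^{\mathcal{T}}(\mathbf{X}^*) - Y^*\right)^2\right]. $$

The expected test error may not be of direct interest when the goal is to assess the prediction performance of a single prediction model $\hat{m}^{\mathcal{T}}$.

The expected test error averages the test errors of all models that can be built from all training datasets. Hence it may be less relevant when the interest is in evaluating one particular model that resulted from a single observed training dataset.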

Also note that building a prediction model involves both parameter estimation and feature selection.

Hence the expected test error also evaluates the feature selection procedure (on average).

If the expected test error is small, it is an indication that the model building process gives good predictions for future observations on average.

Estimating the Expected test error

The expected test error may be estimated by cross validation (CV).

Leave-one-out cross validation (LOOCV)

The LOOCV estimator of the expected test error (or expected outsample error) is given by

$$ \text{CV} = \frac{1}{n}\sum_{i=1}^n \left(Y_i - \hat{m}^{-i}(\mathbf{x}_i)\right)^2, $$

where

- the $(Y_i, \mathbf{x}_i)$ form the training dataset

- $\hat{m}^{-i}$ is the fitted model based on all training data, except observation $i$

- $\hat{m}^{-i}(\mathbf{x}_i)$ is the prediction at $\mathbf{x}_i$, which is the observation left out of the training data before building model $\hat{m}^{-i}$.

Some rationale as to why LOOCV offers a good estimator of the outsample error:

- the prediction error $Y^* - \hat{m}(\mathbf{x})$ is mimicked by not using one of the training outcomes $Y_i$ for the estimation of the model. This $Y_i$ then plays the role of $Y^*$ and, consequently, the fitted model $\hat{m}^{-i}$ is independent of $Y_i$

- the sum in CV is over all $\mathbf{x}_i$ in the training dataset, but each term $\mathbf{x}_i$ was left out once for the calculation of $\hat{m}^{-i}$. Hence, $\hat{m}^{-i}(\mathbf{x}_i)$ mimics an outsample prediction.

- the sum in CV is over $n$ different training datasets (each one with a different observation removed), and hence CV is an estimator of the expected test error.

For linear models the LOOCV can be readily obtained from the fitted model, i.e.

$$ \text{CV} = \frac{1}{n}\sum_{i=1}^n \frac{e_i^2}{(1 - h_{ii})^2}, $$

with $e_i$ the residuals from the model that is fitted based on all training data, and $h_{ii}$ the $i$th diagonal element of the hat matrix $\mathbf{H}$.
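The sketch below uses simulated data to compare the LOOCV shortcut $\frac{1}{n}\sum e_i^2/(1 - h_{ii})^2$, with $h_{ii}$ obtained from `hatvalues()`, against the brute-force computation that refits the model $n$ times:

```r
set.seed(11)
n <- 40
x <- rnorm(n)
dat <- data.frame(x = x, Y = 1 + 2 * x + rnorm(n))   # hypothetical data

fit <- lm(Y ~ x, data = dat)

# shortcut from a single fit: mean of (e_i / (1 - h_ii))^2
cvShortcut <- mean((residuals(fit) / (1 - hatvalues(fit)))^2)

# brute force: refit n times, each time leaving out observation i
cvBrute <- mean(sapply(seq_len(n), function(i) {
  fitMinI <- lm(Y ~ x, data = dat[-i, ])
  (dat$Y[i] - predict(fitMinI, newdata = dat[i, ]))^2
}))
c(shortcut = cvShortcut, bruteForce = cvBrute)
```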

An alternative to LOOCV is the $k$-fold cross validation procedure. It also gives an estimate of the expected outsample error.

$k$-fold cross validation

Randomly divide the training dataset into $k$ approximately equal subsets. Let $\mathcal{S}_j$ denote the index set of the $j$th subset (referred to as a fold), $j = 1, \ldots, k$. Let $n_j$ denote the number of observations in fold $j$.

The $k$-fold cross validation estimator of the expected outsample error is given by

$$ \text{CV}_k = \frac{1}{k}\sum_{j=1}^k \frac{1}{n_j}\sum_{i \in \mathcal{S}_j}\left(Y_i - \hat{m}^{-\mathcal{S}_j}(\mathbf{x}_i)\right)^2, $$

where $\hat{m}^{-\mathcal{S}_j}$ is the model fitted using all training data, except observations in fold $j$ (i.e. observations $i \in \mathcal{S}_j$).
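Below is a minimal $k$-fold CV sketch for a simple linear model in base R, using simulated data and $k = 5$. The standard error across folds computed at the end is one simple choice among several.

```r
set.seed(12)
n <- 100
dat <- data.frame(x = rnorm(n))
dat$Y <- 1 + 2 * dat$x + rnorm(n)          # hypothetical data
k <- 5

# random assignment of the observations to k folds of (approximately) equal size
folds <- sample(rep(seq_len(k), length.out = n))

foldErr <- sapply(seq_len(k), function(j) {
  inFold <- folds == j
  fitMinJ <- lm(Y ~ x, data = dat[!inFold, ])             # fit without fold j
  predJ <- predict(fitMinJ, newdata = dat[inFold, ])       # predict the left-out fold
  mean((dat$Y[inFold] - predJ)^2)
})

cvK <- mean(foldErr)                        # CV_k: average of the k fold errors
seK <- sd(foldErr) / sqrt(k)                # simple standard error over the folds
c(cvK = cvK, se = seK)
```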

The cross validation estimators of the expected outsample error are nearly unbiased. One argument helps to understand where the bias comes from. In the LOOCV estimator, for example, the model is fit on only $n - 1$ observations, whereas we are aiming at estimating the outsample error of a model fit on all $n$ training observations. Fortunately, the bias is often small and in practice hardly a concern.

$k$-fold CV requires $k$ model fits. For linear models it is therefore computationally more complex than LOOCV, which can then be obtained from a single fit. For general models, however, LOOCV requires $n$ fits.

Since CV and CV$_k$ are estimators, they also show sampling variability. Standard errors of CV or CV$_k$ can be computed. We don't show the details, but this is illustrated in the example.

Bias Variance trade-off

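For the expected conditional test error in $\mathbf{x}$, it holds that

$$ \text{E}_{\mathcal{T}}\left[\text{Err}_{\mathcal{T}}(\mathbf{x})\right] = \text{E}_{Y^*, \mathcal{T}}\left[\left(\hat{m}^{\mathcal{T}}(\mathbf{x}) - Y^*\right)^2 \mid \mathbf{x}\right] = \text{var}_{\mathcal{T}}\left[\hat{m}^{\mathcal{T}}(\mathbf{x}) \mid \mathbf{x}\right] + \text{bias}^2(\mathbf{x}) + \text{var}_{Y^*}\left[Y^* \mid \mathbf{x}\right], $$

where $\mu(\mathbf{x}) = \text{E}_{Y^*}[Y^* \mid \mathbf{x}]$.

- bias: $\text{bias}(\mathbf{x}) = \text{E}_{\mathcal{T}}\left[\hat{m}^{\mathcal{T}}(\mathbf{x}) \mid \mathbf{x}\right] - \mu(\mathbf{x})$

- $\text{var}_{Y^*}[Y^* \mid \mathbf{x}]$ does not depend on the model, and is referred to as the irreducible variance.

The importance of the bias-variance trade-off can be seen from a model selection perspective. Suppose we agree that a good model has a small expected conditional test error at some point $\mathbf{x}$. The bias-variance trade-off then shows that a model may be biased, as long as it has a small variance to compensate for the bias. It often happens that a biased model has a substantially smaller variance. When these two are combined, a small expected test error may occur.

Also note that the model $m(\mathbf{x})$ which forms the basis of the prediction model does NOT need to satisfy $m(\mathbf{x}) = \mu(\mathbf{x})$, i.e. it need not be the true mean. The model $m$ is known by the data-analyst (it is the basis of the prediction model), whereas $\mu(\mathbf{x})$ is generally unknown to the data-analyst. We only hope that $m$ serves well as a prediction model.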

In practice

We use cross validation, e.g. to

- estimate the penalty parameter $\lambda$ for penalised regression (ridge regression, lasso)

- build models, e.g. select the number of PCs for PCA regression

- choose the smoothness penalty of splines

Toxicogenomics example

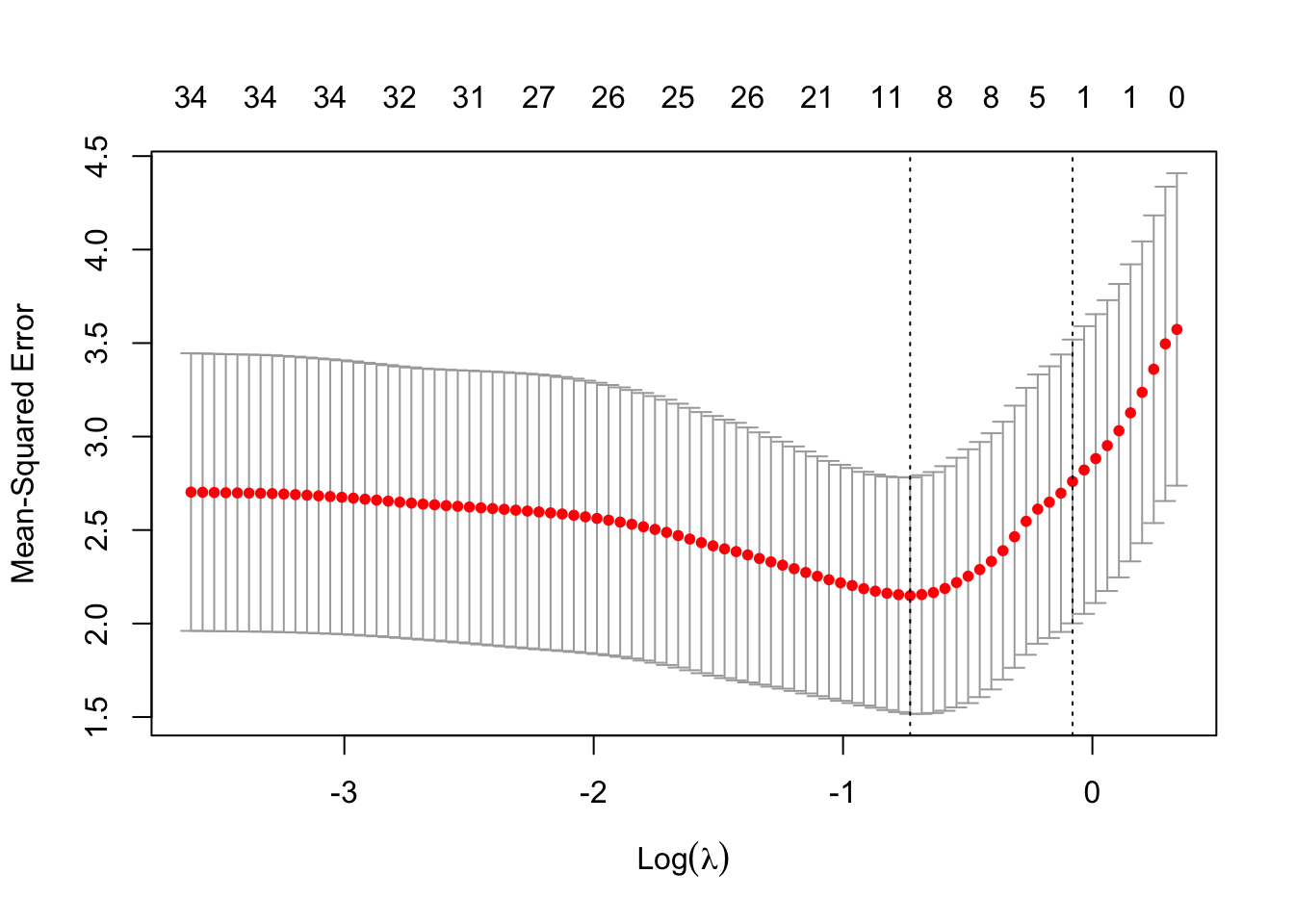

Lasso

```r
set.seed(15)
library(glmnet)
library(dplyr) # for %>% and pull()

mCvLasso <- cv.glmnet(
  x = toxData[, -1] %>%
    as.matrix,
  y = toxData %>%
    pull(BA),
  alpha = 1) # lasso: alpha = 1
```

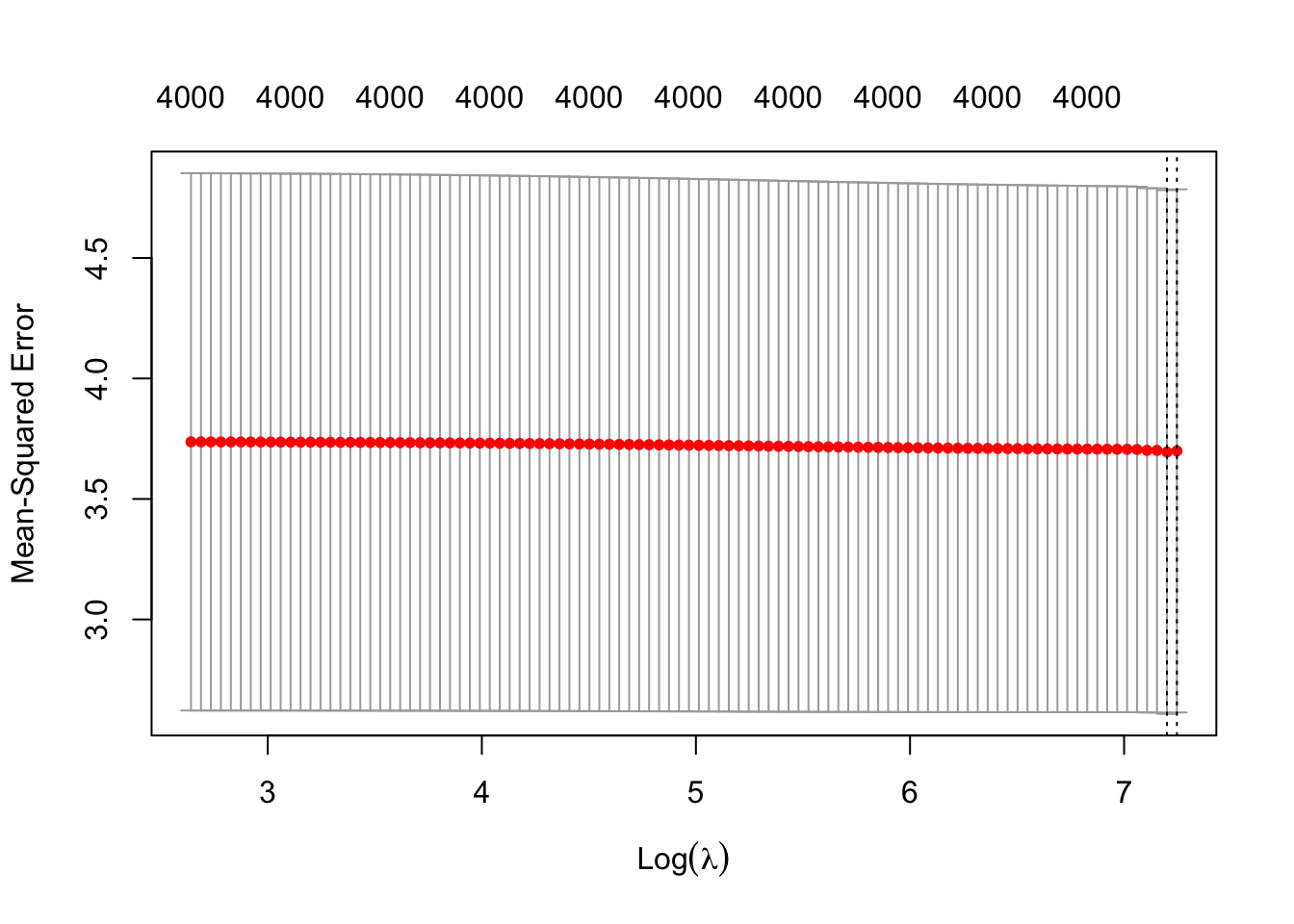

```r
plot(mCvLasso)
```

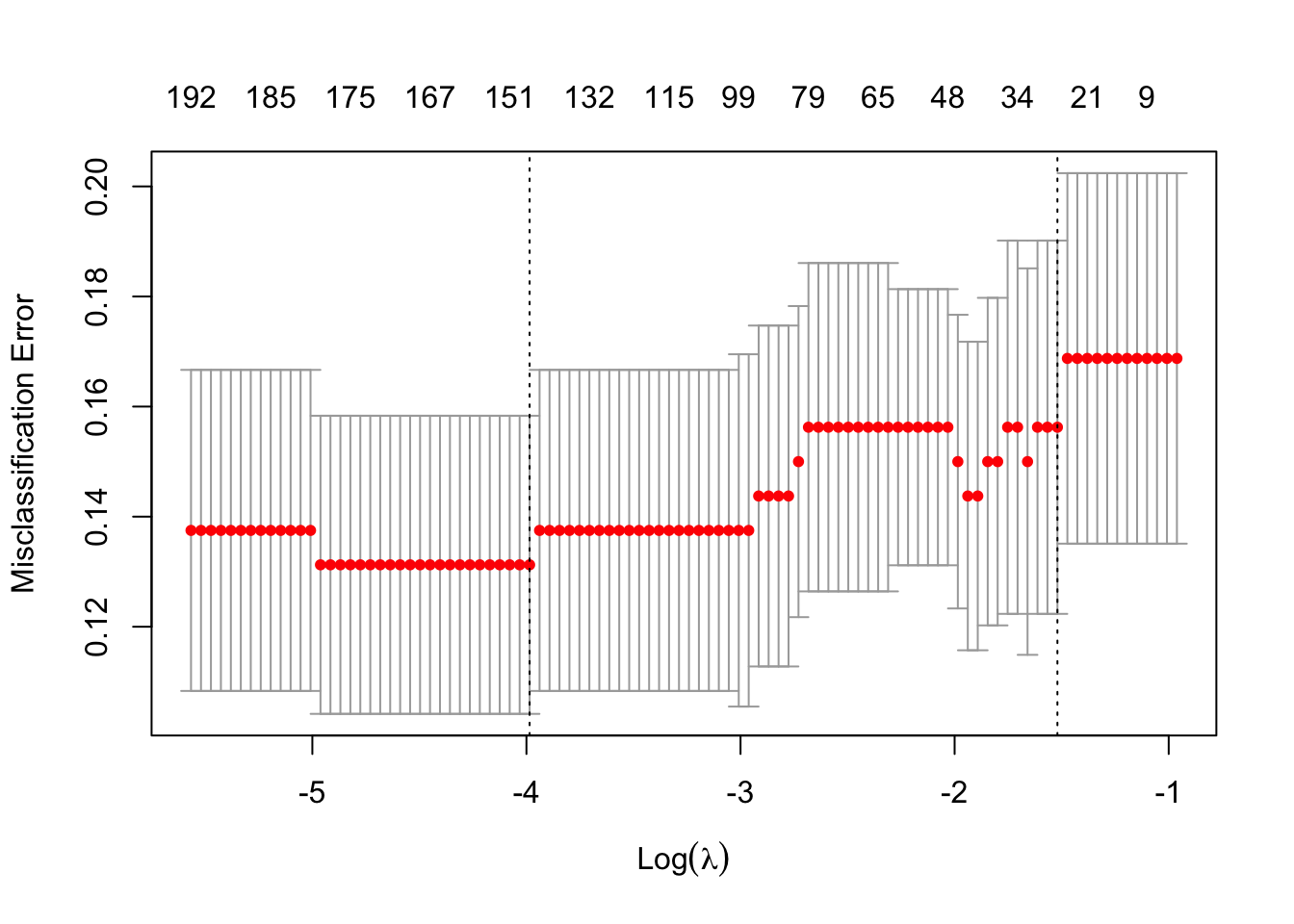

The default CV procedure in cv.glmnet is $k = 10$-fold CV.

The graph shows

- 10-fold CV estimates of the extra-sample error as a function of the lasso penalty parameter $\lambda$.

- the estimate plus and minus one estimated standard error of the CV estimate (grey bars)

- on top, the number of non-zero regression parameter estimates.

Two vertical reference lines are added to the graph. They correspond to

- the $\lambda$ that gives the smallest CV estimate of the extra-sample error, and

- the largest $\lambda$ that gives a CV estimate of the extra-sample error that is within one standard error from the smallest error estimate.

- The latter choice of $\lambda$ has no firm theoretical basis, except that it somehow accounts for the imprecision of the error estimate. Loosely speaking, it corresponds to the smallest model (i.e. fewest predictors) whose error is within the margin of error of the best model's error.
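The two choices of $\lambda$ can be sketched from a table of CV results. The numbers below are hypothetical. To our understanding `cv.glmnet` applies the same rule for `lambda.min` and `lambda.1se`, but the code only illustrates the rule and does not reproduce the package internals.

```r
# hypothetical CV results on a decreasing grid of penalty values
lambda <- c(1.00, 0.50, 0.25, 0.12, 0.06, 0.03)
cvm    <- c(2.10, 1.60, 1.28, 1.20, 1.25, 1.40)   # CV estimates of the error
cvsd   <- c(0.20, 0.15, 0.12, 0.10, 0.11, 0.13)   # their estimated standard errors

iMin <- which.min(cvm)
lambdaMin <- lambda[iMin]                          # smallest CV error

# largest lambda (sparsest model) whose CV error is within one SE of the minimum
lambda1se <- max(lambda[cvm <= cvm[iMin] + cvsd[iMin]])
c(lambdaMin = lambdaMin, lambda1se = lambda1se)
```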

```r
mLassoOpt <- glmnet(
  x = toxData[, -1] %>%
    as.matrix,
  y = toxData %>%
    pull(BA),
  alpha = 1,
  lambda = mCvLasso$lambda.min)

summary(coef(mLassoOpt))
```

With the optimal $\lambda$ (smallest error estimate) the output shows the 9 non-zero estimated regression coefficients (sparse solution).

```r
mLasso1se <- glmnet(
  x = toxData[, -1] %>%
    as.matrix,
  y = toxData %>%
    pull(BA),
  alpha = 1,
  lambda = mCvLasso$lambda.1se)

mLasso1se %>%
  coef %>%
  summary
```

This shows the solution for the largest $\lambda$ within one standard error of the optimal model. Now only 3 non-zero estimates result.

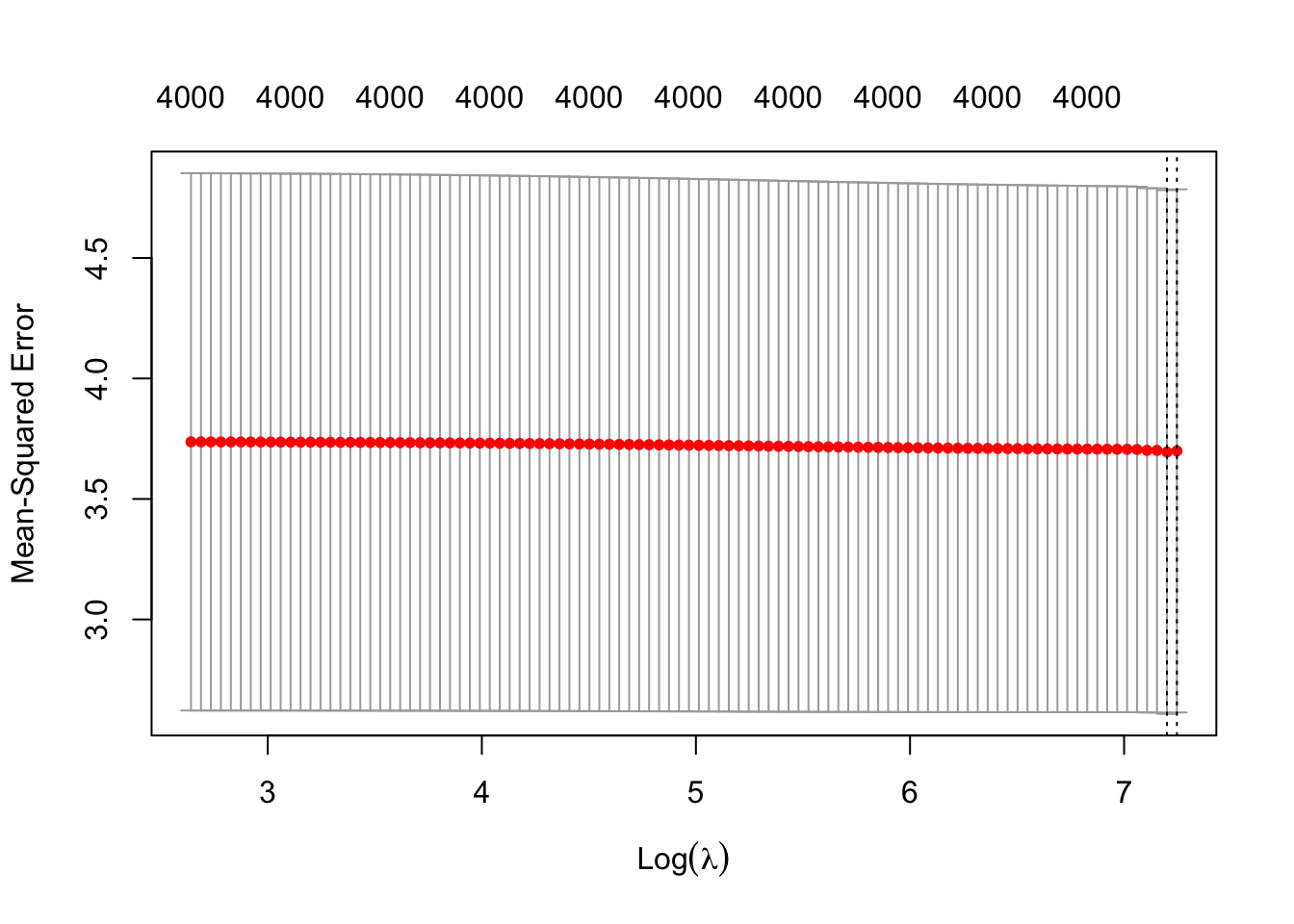

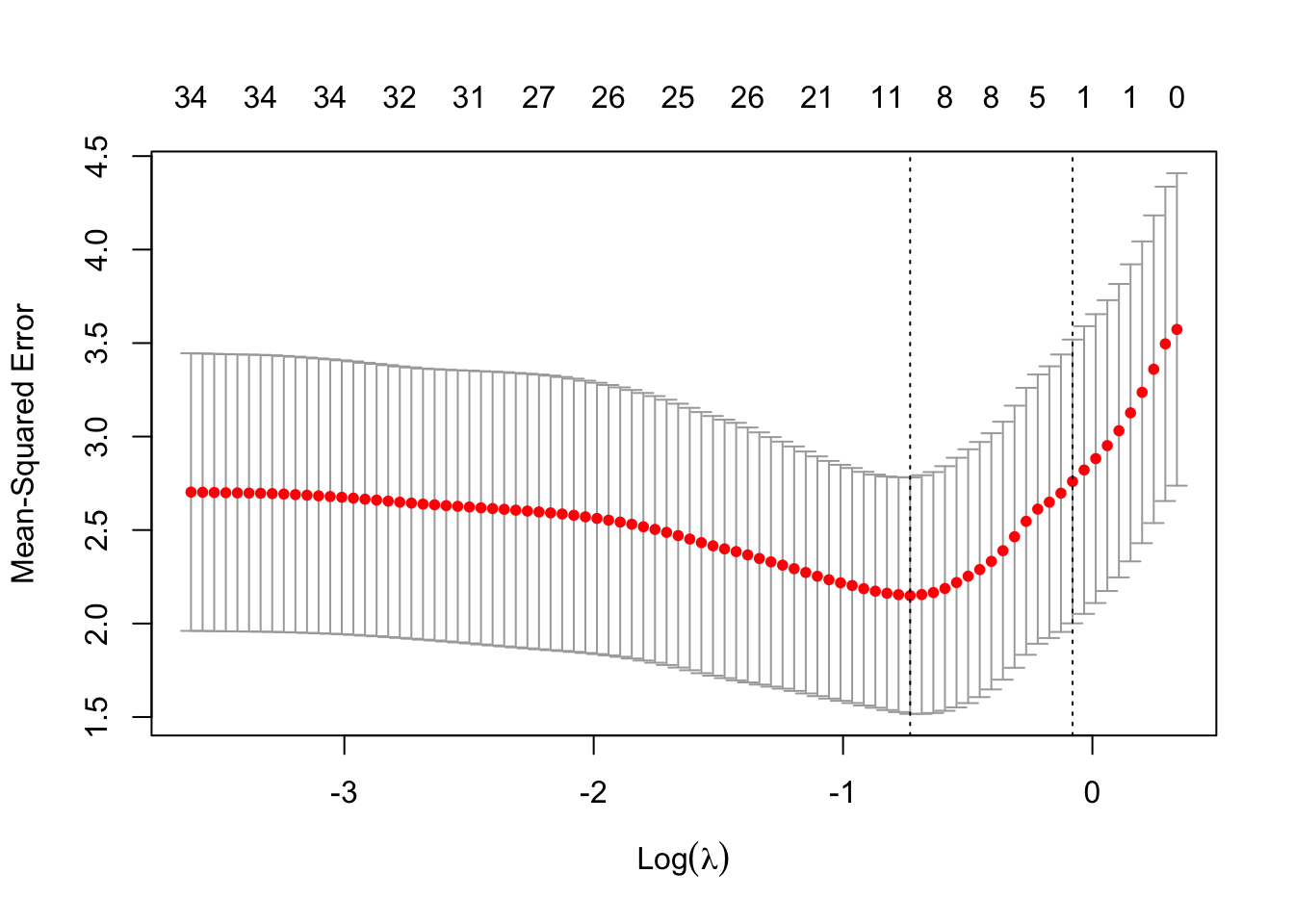

Ridge

```r
mCvRidge <- cv.glmnet(
  x = toxData[, -1] %>%
    as.matrix,
  y = toxData %>%
    pull(BA),
  alpha = 0) # ridge: alpha = 0

plot(mCvRidge)
```

- Ridge does not seem to have an optimal solution (no clear minimum in the CV curve).

- The 10-fold CV error is also larger than for the lasso.

PCA regression

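```r
set.seed(1264)
library(DAAG)  # provides cv.lm()
library(dplyr) # for %>% and pull()

tox <- data.frame(
  Y = toxData %>%
    pull(BA),
  PC = Zk)

PC.seq <- 1:25
Err <- numeric(25)

# 5-fold CV error of the model with only the first PC
mCvPca <- cv.lm(
  Y ~ PC.1,
  data = tox,
  m = 5,
  printit = FALSE)
Err[1] <- attr(mCvPca, "ms")

# add PC.2, ..., PC.i in their natural order and store the 5-fold CV error
for (i in 2:25) {
  mCvPca <- cv.lm(
    as.formula(
      paste("Y ~ PC.1 + ",
        paste("PC.", 2:i, collapse = "+", sep = ""),
        sep = ""
      )
    ),
    data = tox,
    m = 5,
    printit = FALSE)
  Err[i] <- attr(mCvPca, "ms")
}
```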

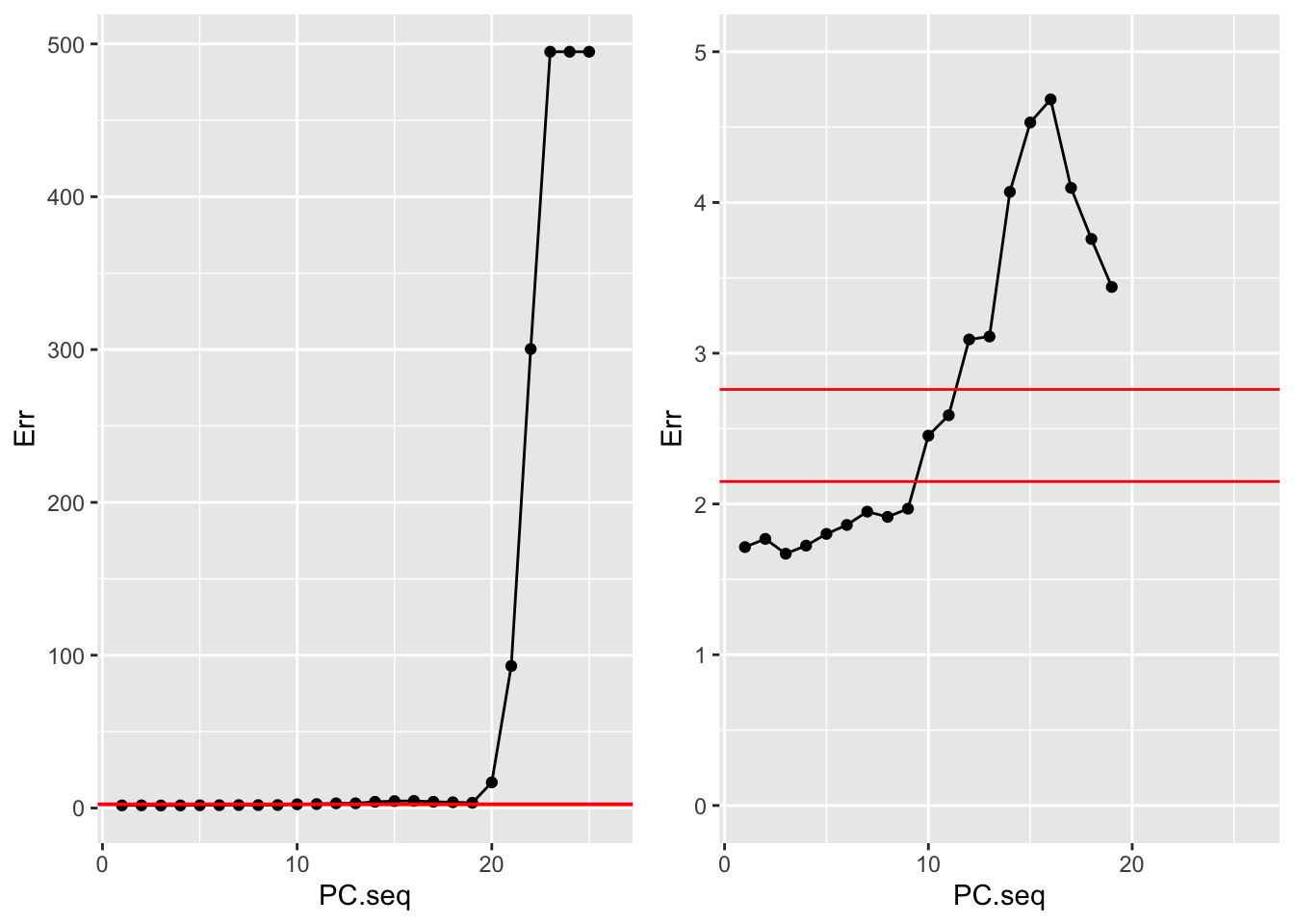

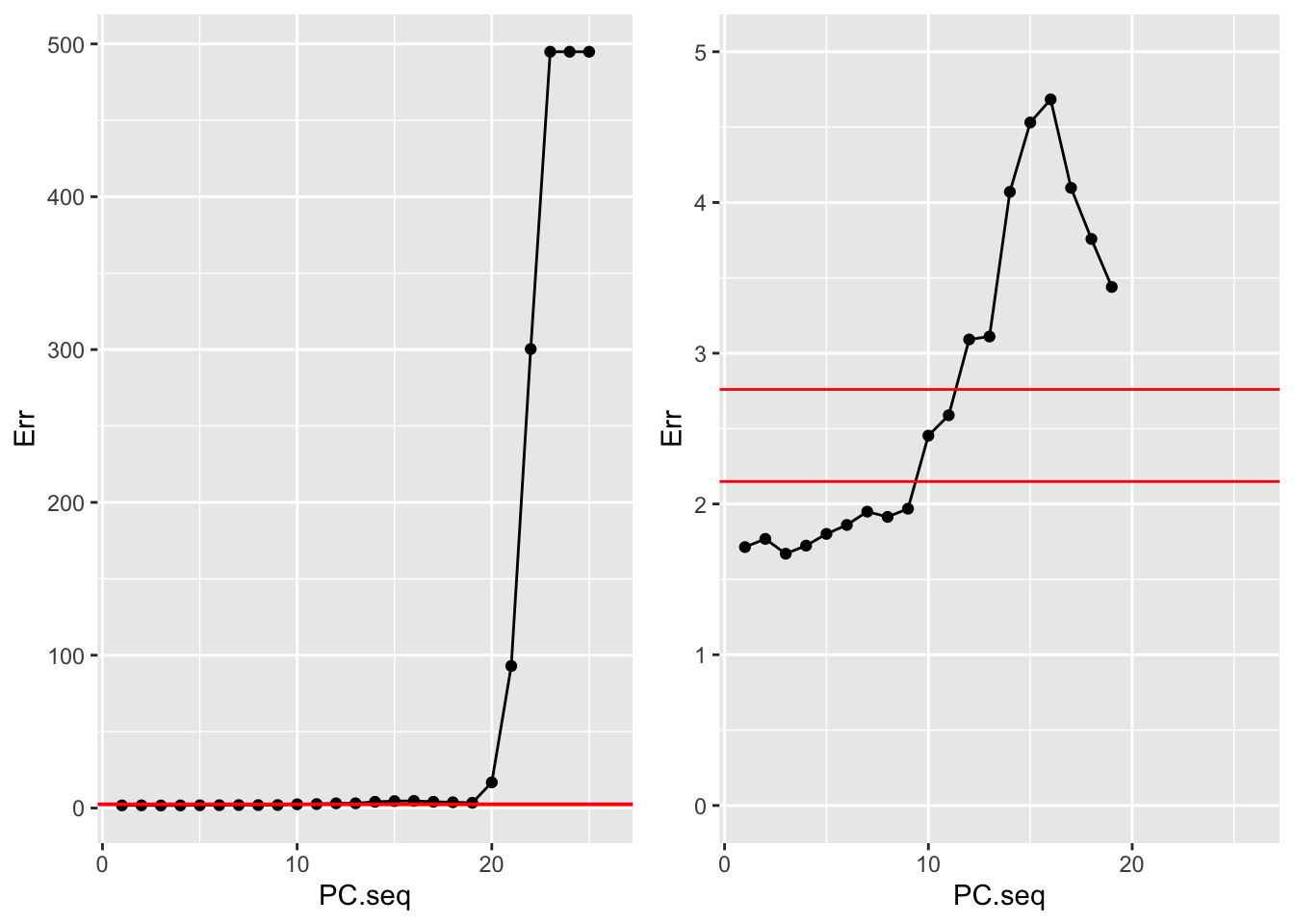

Here we illustrate principal component regression.

The most important PCs are selected in a forward model selection procedure.

Within the model selection procedure the models are evaluated with 5-fold CV estimates of the outsample error.

It is important to realise that a forward model selection procedure will not necessarily result in the best prediction model, particularly because the order of the PCs is generally not related to the importance of the PCs for predicting the outcome.

A supervised PCA would be better.

```r
library(ggplot2)
library(gridExtra) # for grid.arrange()

pPCreg <- data.frame(PC.seq, Err) %>%
  ggplot(aes(x = PC.seq, y = Err)) +
  geom_line() +
  geom_point() +
  # horizontal reference lines: lasso CV errors at lambda.min and lambda.1se
  geom_hline(
    yintercept = c(
      mCvLasso$cvm[mCvLasso$lambda == mCvLasso$lambda.min],
      mCvLasso$cvm[mCvLasso$lambda == mCvLasso$lambda.1se]),
    col = "red") +
  xlim(1, 26)

grid.arrange(
  pPCreg,
  pPCreg + ylim(0, 5),
  ncol = 2)
```

The graph shows the CV estimate of the outsample error as a function of the number of PCs included in the model.

A very small error is obtained with the model with only the first PC. The best model has 3 PCs.

The two horizontal reference lines correspond to the error estimates obtained with the lasso (optimal $\lambda$ and largest $\lambda$ within one standard error).

Thus, although there was a priori no guarantee that the first PCs are the most predictive, it seems to be the case here (we were lucky!).

Moreover, the first PC resulted in a small outsample error.

Note that the graph does not indicate the variability of the error estimates (no error bars).

Also note that the graph clearly illustrates the effect of overfitting: including too many PCs causes a large outsample error.

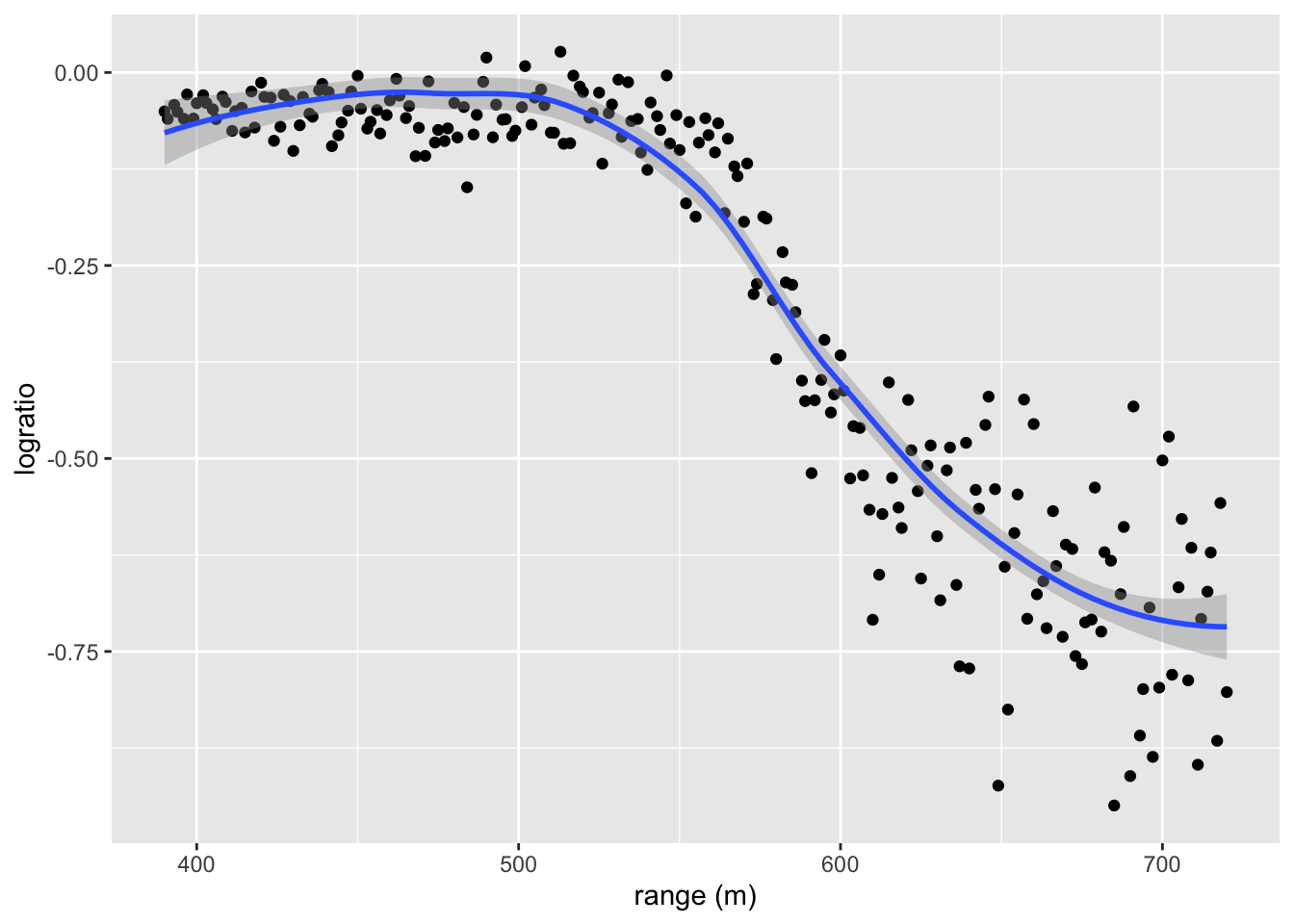

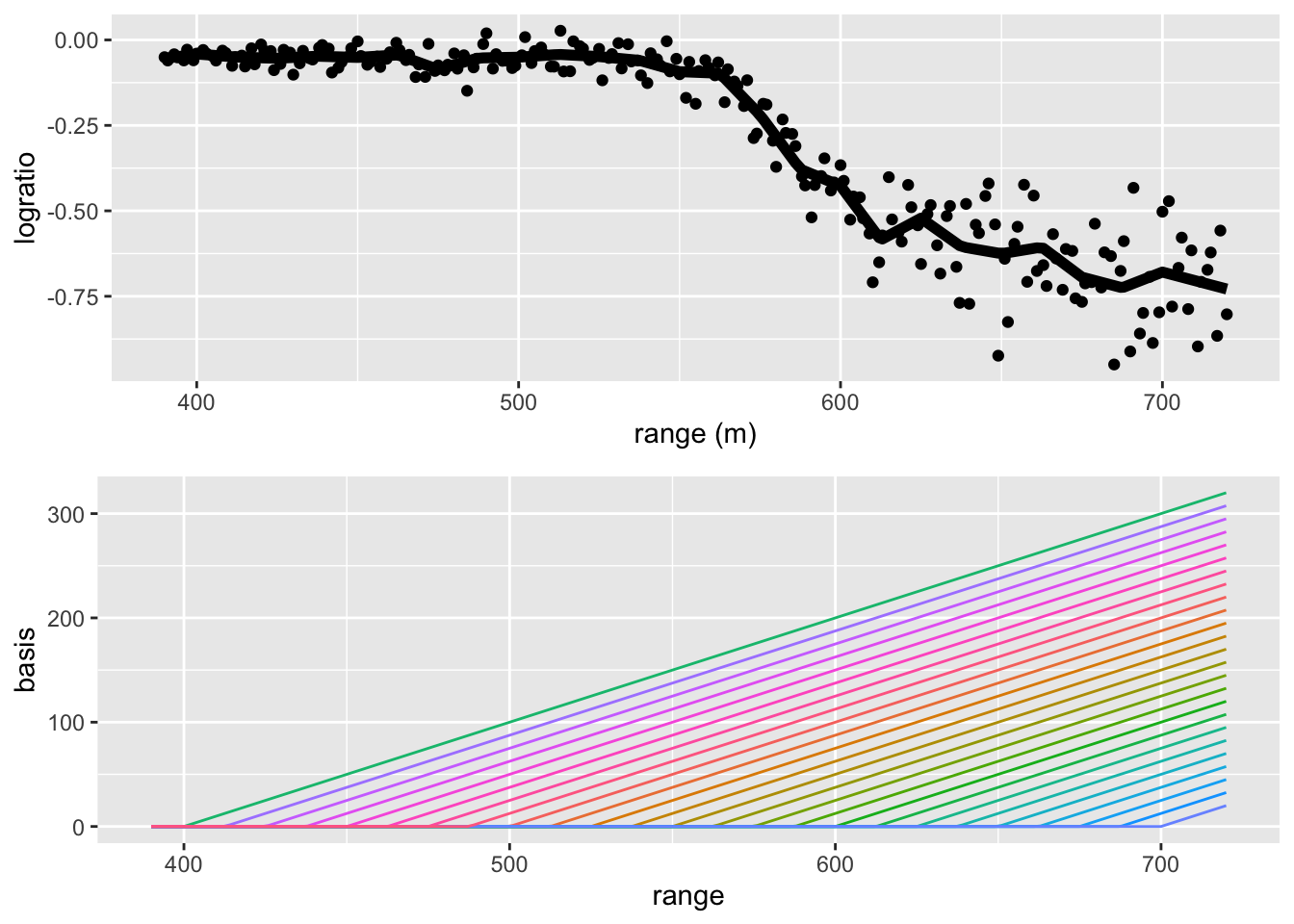

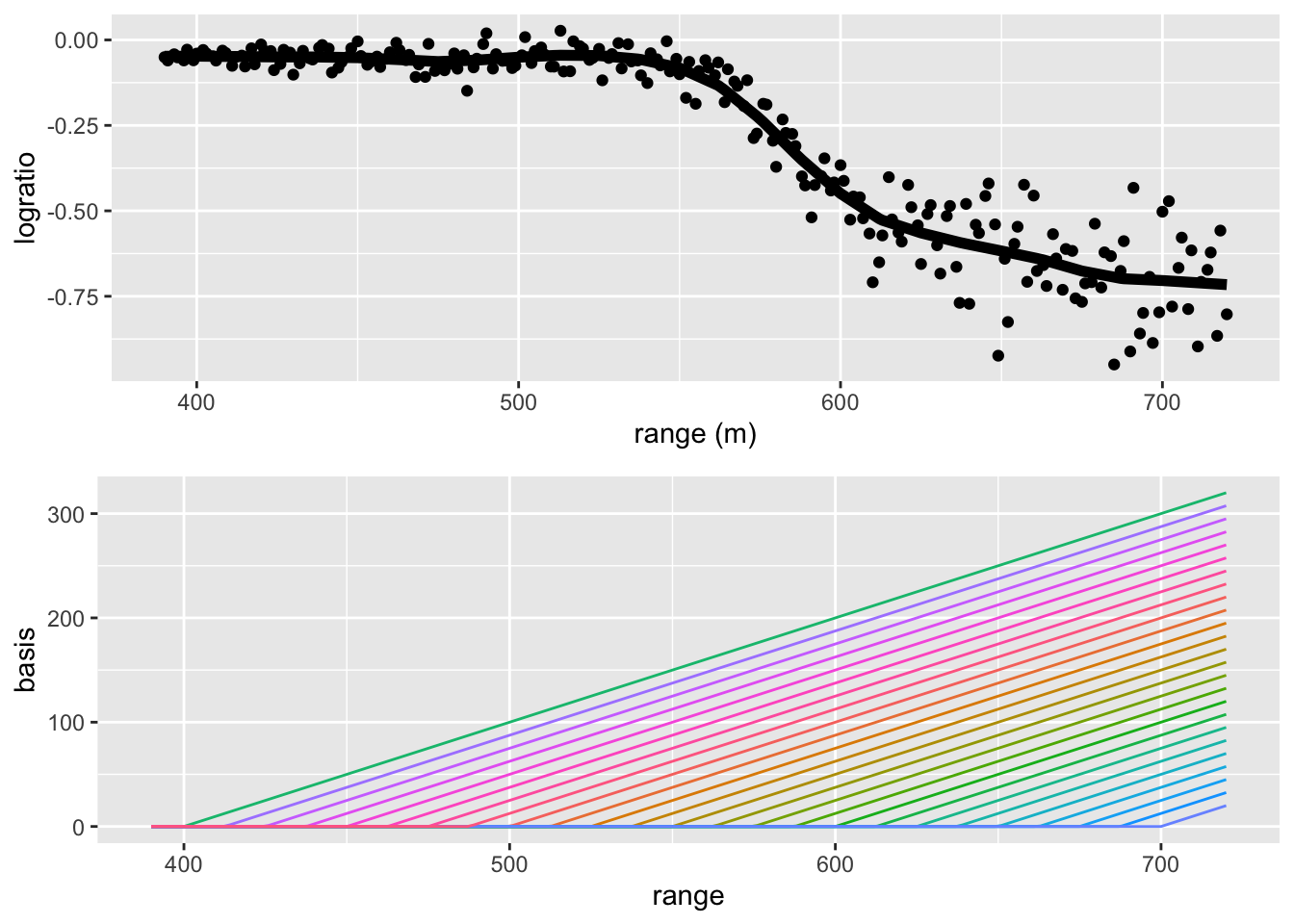

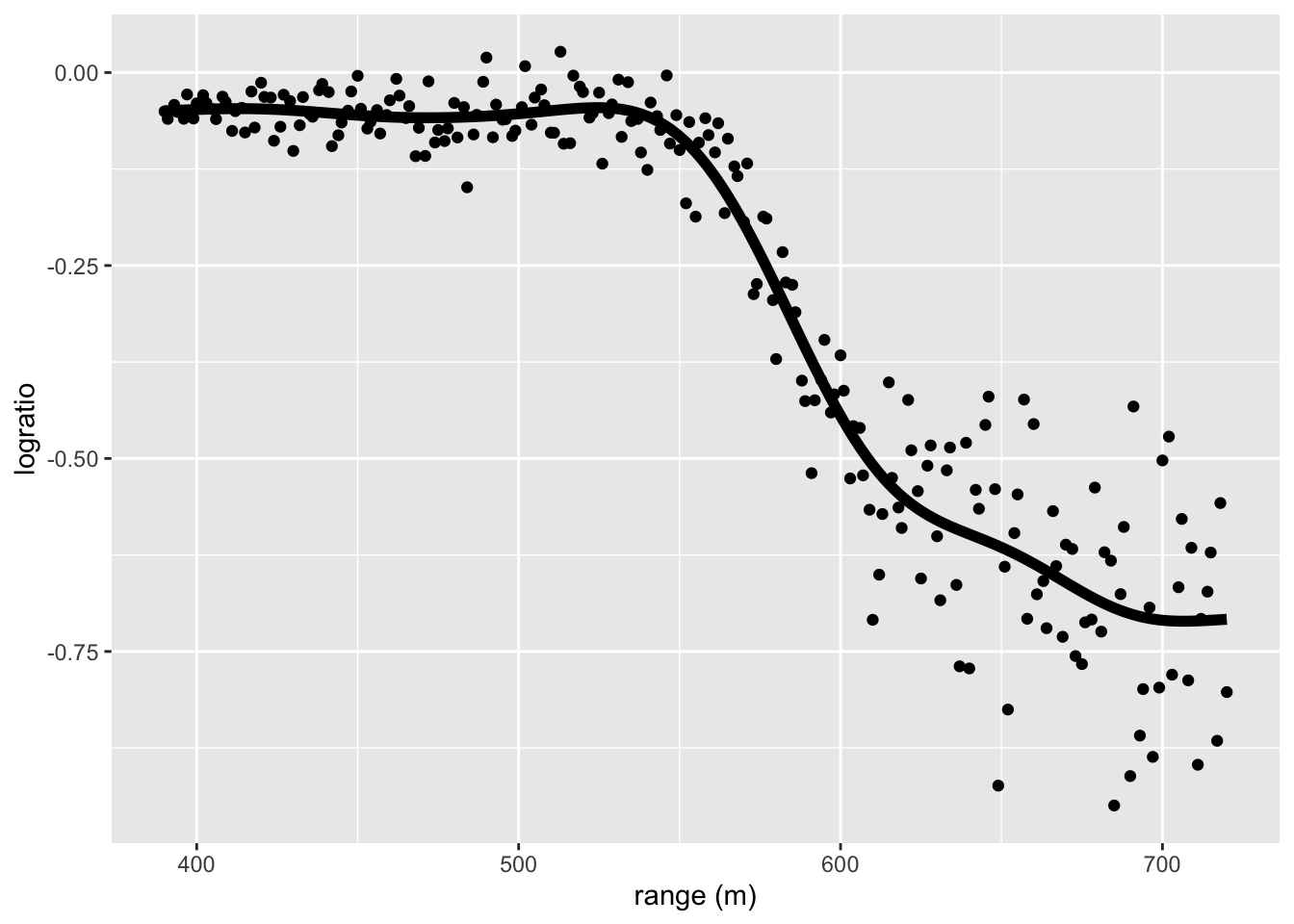

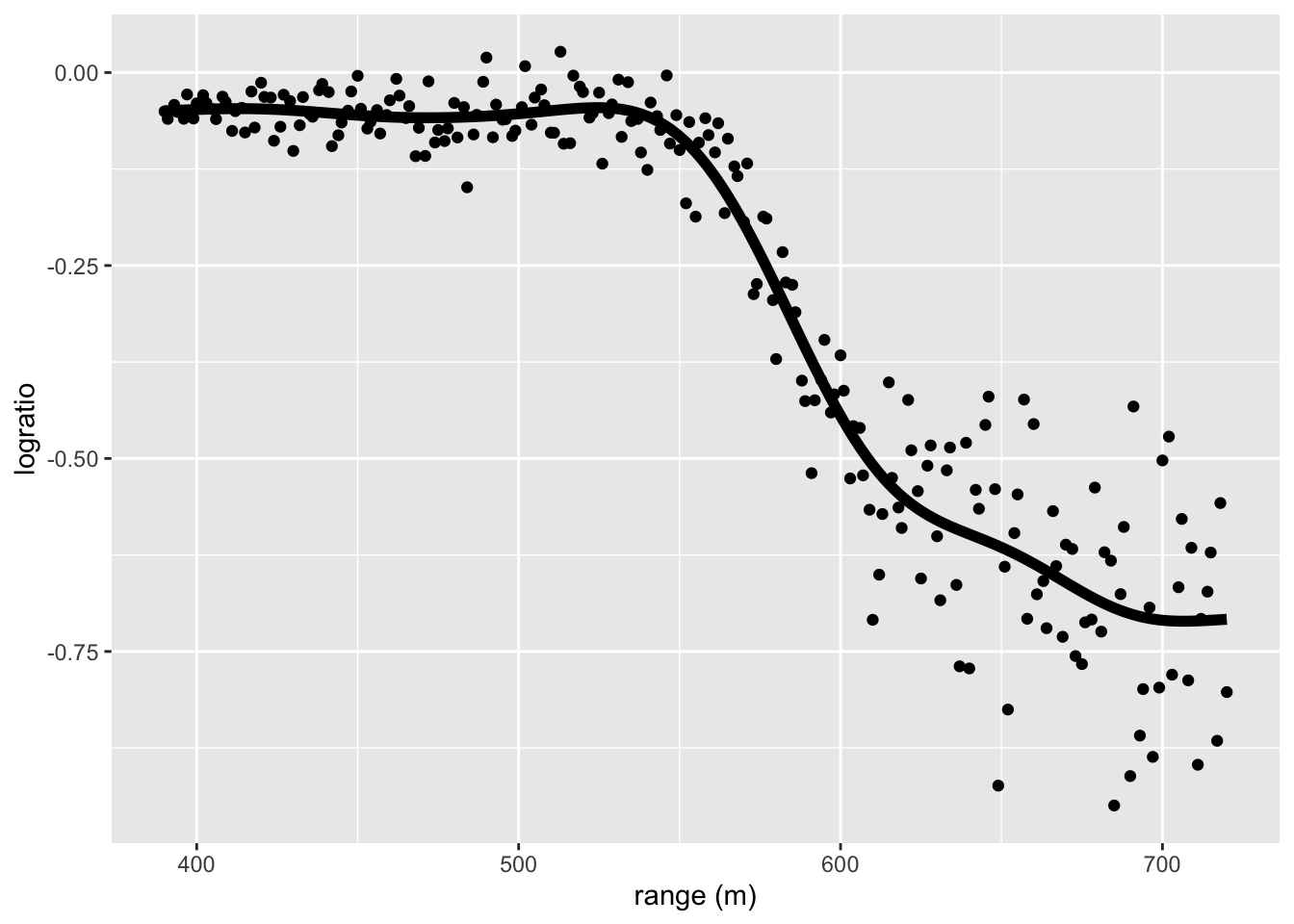

Lidar Example: splines

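- We use the mgcv package to fit the spline model to the lidar data.

- A better basis is used than the truncated spline basis.

- Thin plate splines are also linear smoothers, i.e. $\hat{\mathbf{Y}} = \mathbf{S}\mathbf{Y}$,

- so their variance can be easily calculated: $\text{var}[\hat{\mathbf{Y}}] = \mathbf{S}\mathbf{S}^T\sigma^2$.

- The ridge/smoothness penalty is chosen by generalized cross validation.

```r
library(mgcv)
library(ggplot2)

# the lidar data and the base plot pLidar are assumed to be available from earlier
gamfit <- gam(logratio ~ s(range), data = lidar)  # thin plate spline (default basis)
gamfit$sp  # selected smoothing parameter
#> s(range)
#> 0.006114634

pLidar +
  geom_line(aes(x = lidar$range, y = gamfit$fitted), lwd = 2)
```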

More general error definitions

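So far we only looked at continuous outcomes $Y$ and errors defined in terms of the squared loss $(\hat{m}(\mathbf{x}) - Y^*)^2$.

More generally, a loss function measures a discrepancy between the prediction $\hat{m}(\mathbf{x})$ and an independent outcome $Y^*$ that corresponds to $\mathbf{x}$.

Some examples for continuous $Y$:

- squared loss: $L(Y^*, \hat{m}(\mathbf{x})) = \left(\hat{m}(\mathbf{x}) - Y^*\right)^2$

- absolute loss: $L(Y^*, \hat{m}(\mathbf{x})) = \left|\hat{m}(\mathbf{x}) - Y^*\right|$

- deviance: $L(Y^*, \hat{m}(\mathbf{x})) = 2\log\frac{f_y(Y^*)}{f_{\hat{m}}(Y^*)}$

In the expression of the deviance

- $f_y$ denotes the density function of a distribution with mean set to $y = Y^*$ (cf. perfect fit), and

- $f_{\hat{m}}$ is the density function of the same distribution but with mean set to the predicted outcome $\hat{m}(\mathbf{x})$.

With a given loss function, the errors are defined as follows:

- Test or generalisation or outsample error

$$ \text{Err}_{\mathcal{T}} = \text{E}_{Y^*, \mathbf{X}^*}\left[L\left(Y^*, \hat{m}^{\mathcal{T}}(\mathbf{X}^*)\right) \mid \mathcal{T}\right] $$

When an exponential family distribution is assumed for the outcome distribution, and when the deviance loss is used, the insample error can be estimated by means of the AIC and BIC.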

Training and test sets

Sometimes, when a large (training) dataset is available, one may decide to split the dataset randomly into a

- training dataset: these data are used for model fitting and for model building or feature selection (this may require e.g. cross validation)

- test dataset: these data are used to evaluate the final model (the result of model building). An unbiased estimate of the outsample error (i.e. test or generalisation error) based on these test data is

$$ \frac{1}{m}\sum_{i=1}^m \left(\hat{m}(\tilde{\mathbf{X}}_i) - \tilde{Y}_i\right)^2, $$

where

- $(\tilde{Y}_1, \tilde{\mathbf{X}}_1), \ldots, (\tilde{Y}_m, \tilde{\mathbf{X}}_m)$ denote the $m$ observations in the test dataset

- $\hat{m}$ is estimated using the training data (this may also be the result from model building, using only the training data).

Note that the training dataset is used for model building or feature selection. This also requires the evaluation of models. For these evaluations the methods from the previous slides can be used (e.g. cross validation, $k$-fold CV, Mallows' $C_p$). The test dataset is only used for the evaluation of the final model (estimated and built using only the training data). The estimate of the outsample error based on the test dataset is the best possible estimate in the sense that it is unbiased. The observations used for this estimation are independent of the observations in the training data.

However, if the number of data points in the test dataset ($m$) is small, the estimate of the outsample error may show a large variance and hence is not reliable.

Logistic Regression Analysis for High Dimensional Data

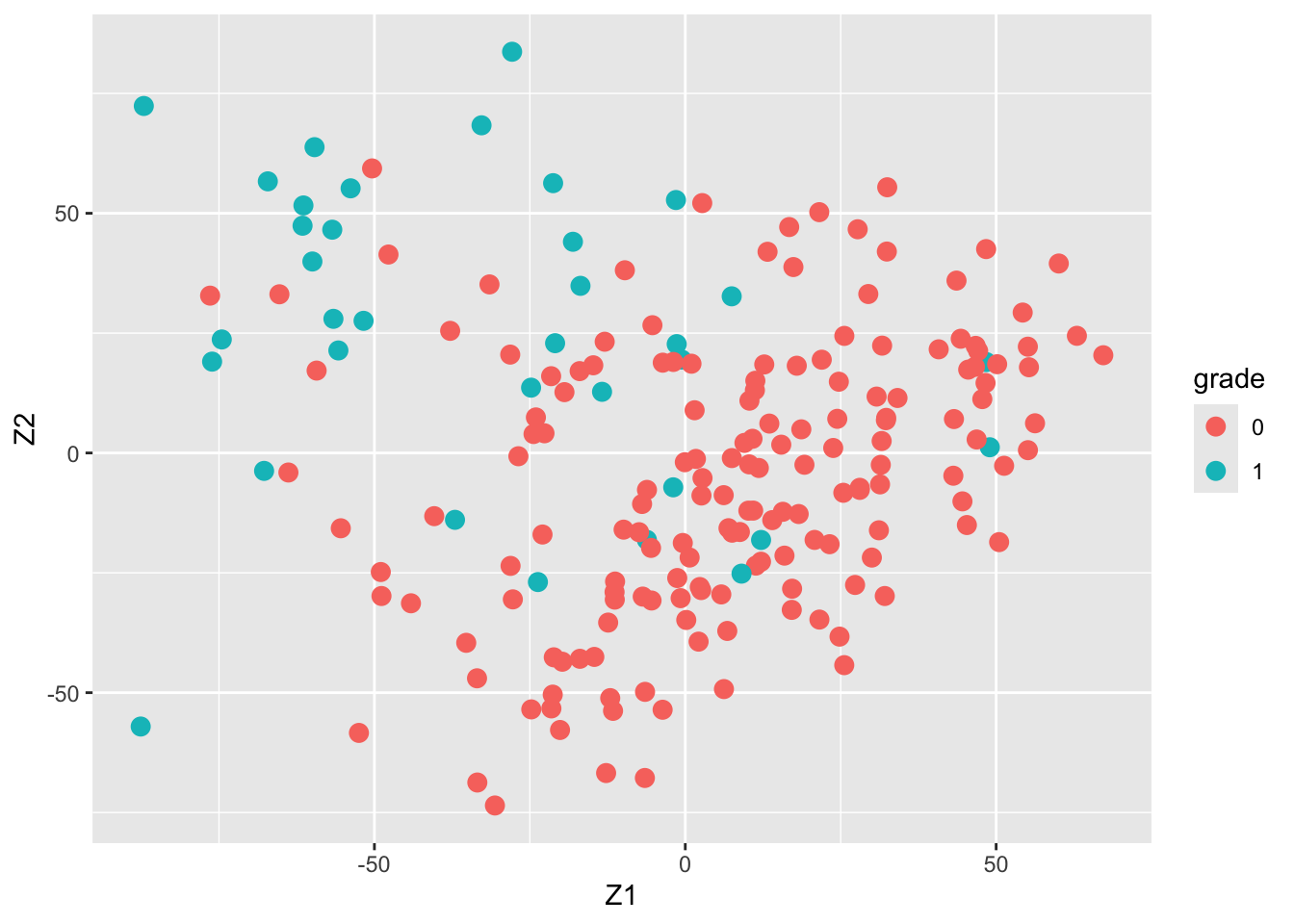

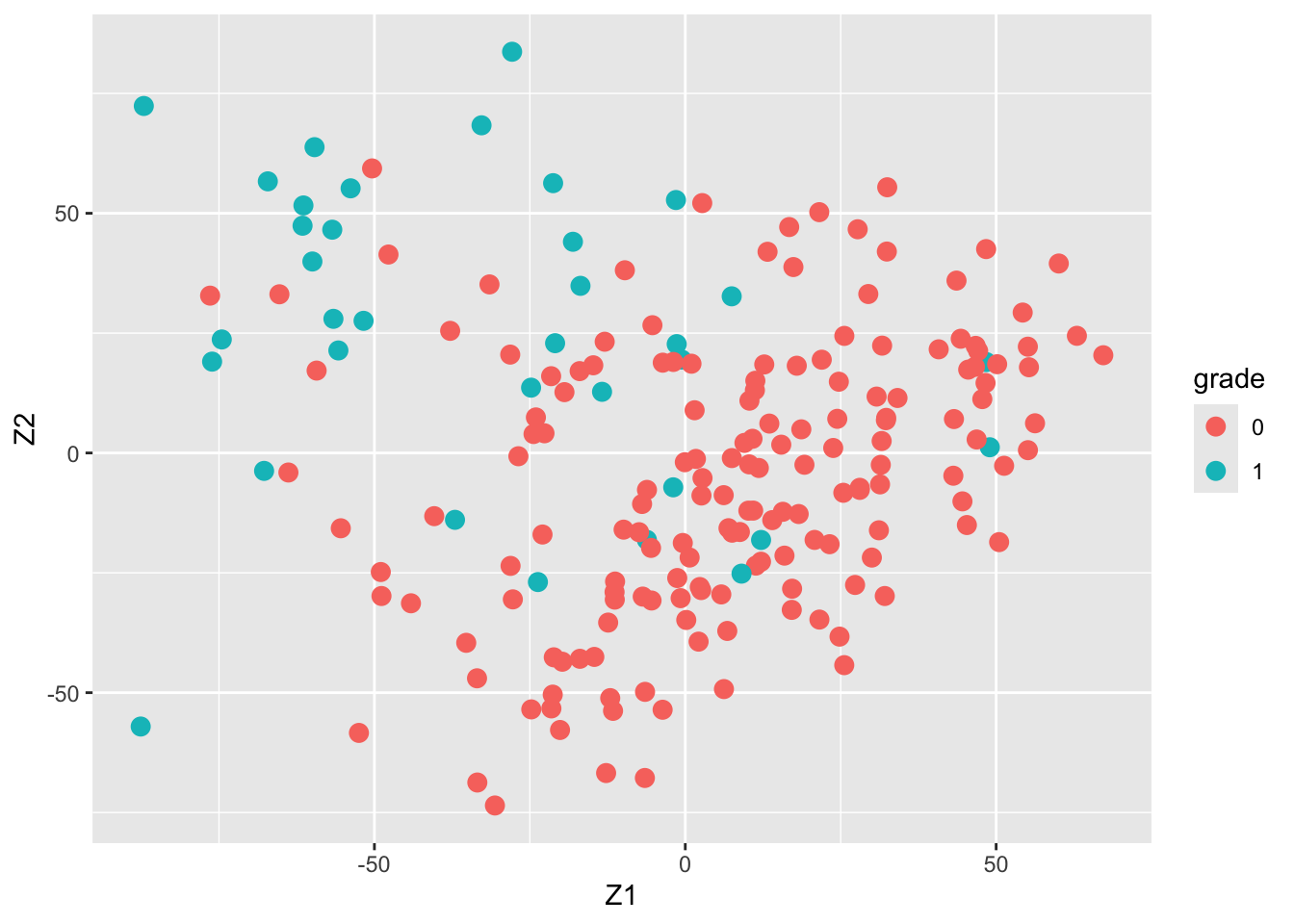

Breast Cancer Example

Schmidt et al., 2008, Cancer Research, 68, 5405-5413

Gene expression patterns in breast tumors were investigated (expression levels of many thousands of genes, so $p \gg n$).

After surgery the tumors were graded by a pathologist (stage 1, 2, 3).

Here the objective is to predict stage 3 from the gene expression data (prediction of a binary outcome).

If the prediction model works well, it can be used to predict the stage from a biopsy sample.

Data

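```r
#BiocManager::install("genefu")
#BiocManager::install("breastCancerMAINZ")
library(genefu)
library(breastCancerMAINZ)
library(Biobase) # exprs() and pData() for ExpressionSet objects
data(mainz)

X <- t(exprs(mainz)) # gene expressions
n <- nrow(X)
H <- diag(n) - 1/n * matrix(1, ncol = n, nrow = n) # centering matrix
X <- H %*% X                                       # center the gene expressions
Y <- ifelse(pData(mainz)$grade == 3, 1, 0)          # 1 = stage 3 tumor
table(Y)
#> Y
#> 0 1
#> 165 35
```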

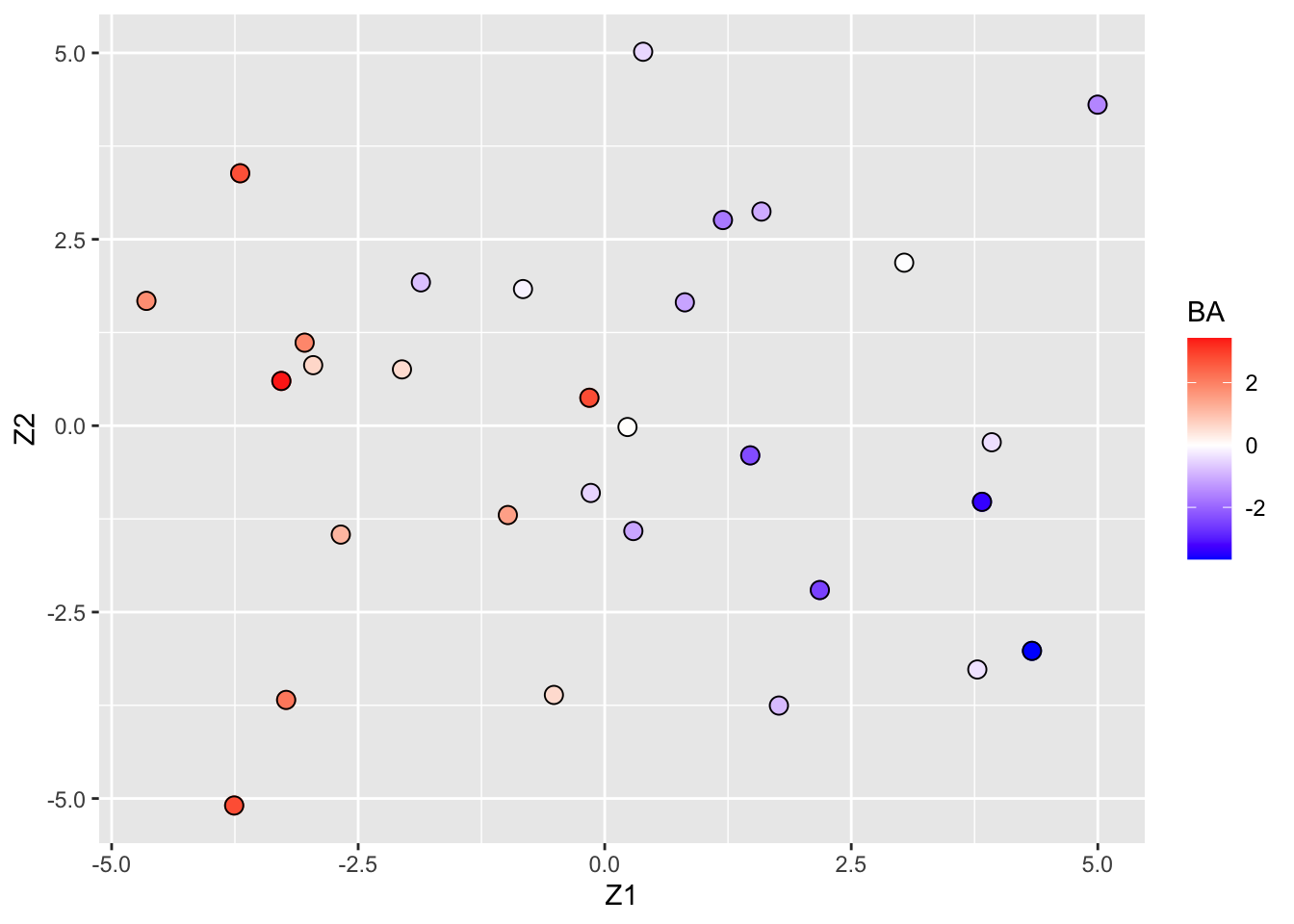

```r
library(dplyr)
library(ggplot2)

svdX <- svd(X)
k <- 2
Zk <- svdX$u[, 1:k] %*% diag(svdX$d[1:k]) # scores on the first two PCs
colnames(Zk) <- paste0("Z", 1:k)

Zk %>%
  as.data.frame %>%
  mutate(grade = Y %>% as.factor) %>%
  ggplot(aes(x = Z1, y = Z2, color = grade)) +
  geom_point(size = 3)
```

Logistic regression models

Binary outcomes are often analysed with logistic regression models.

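Let $Y$ denote the binary (1/0, case/control, positive/negative) outcome, and $\mathbf{x}$ the $p$-dimensional predictor.

Logistic regression assumes

$$ Y \mid \mathbf{x} \sim \text{Bernoulli}\left(\pi(\mathbf{x})\right), \qquad \text{logit}\left(\pi(\mathbf{x})\right) = \beta_0 + \mathbf{x}^T\boldsymbol{\beta}, $$

with $\pi(\mathbf{x}) = \text{P}[Y = 1 \mid \mathbf{x}]$ and $\text{logit}(\pi) = \log\frac{\pi}{1 - \pi}$.

The parameters are typically estimated by maximising the log-likelihood, which is denoted by $l(\boldsymbol{\beta})$, i.e.

$$ \hat{\boldsymbol{\beta}} = \text{ArgMax}_{\boldsymbol{\beta}}\; l(\boldsymbol{\beta}). $$

Penalized maximum likelihood

Penalised estimation methods (e.g. lasso and ridge) can also be applied to maximum likelihood, resulting in the penalised maximum likelihood estimator.

Lasso:

$$ \hat{\boldsymbol{\beta}} = \text{ArgMax}_{\boldsymbol{\beta}}\; l(\boldsymbol{\beta}) - \lambda \Vert \boldsymbol{\beta} \Vert_1 $$

Ridge:

$$ \hat{\boldsymbol{\beta}} = \text{ArgMax}_{\boldsymbol{\beta}}\; l(\boldsymbol{\beta}) - \lambda \Vert \boldsymbol{\beta} \Vert_2^2 $$

Once the parameters are estimated, the model may be used to compute

$$ \hat{\pi}(\mathbf{x}) = \hat{\text{P}}[Y = 1 \mid \mathbf{x}]. $$

With these estimated probabilities the prediction rule becomes

$$ \hat{\pi}(\mathbf{x}) \le c \;\Rightarrow\; \text{predict } Y = 0, \qquad \hat{\pi}(\mathbf{x}) > c \;\Rightarrow\; \text{predict } Y = 1, $$

with $0 < c < 1$ a threshold. The threshold is either fixed (e.g. $c = 1/2$), depends on prior probabilities, or is determined empirically. It can, for instance, be chosen by optimising the Area Under the ROC Curve (AUC) or by finding a good compromise between sensitivity and specificity.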
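The sketch below uses base R `glm()` on simulated data, with a hypothetical model and threshold $c = 0.5$. `predict(..., type = "response")` returns $\hat{\pi}(\mathbf{x})$, which equals the inverse logit of the linear predictor. The prediction rule then thresholds these probabilities.

```r
set.seed(14)
n <- 200
x <- rnorm(n)
y <- rbinom(n, 1, plogis(-1 + 2 * x))      # hypothetical logistic model

fit <- glm(y ~ x, family = binomial)
piHat <- predict(fit, type = "response")   # estimated P[Y = 1 | x]

# the same probabilities via the inverse logit of the linear predictor
piHat2 <- plogis(as.vector(cbind(1, x) %*% coef(fit)))

cThr <- 0.5                                # threshold c
yHat <- as.integer(piHat > cThr)           # predict 1 if pi-hat > c, else 0
table(observed = y, predicted = yHat)
```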

Note that logistic regression directly models the posterior probability that an observation belongs to class $Y = 1$, given the predictor $\mathbf{x}$.

Model evaluation

Common model evaluation criteria for binary prediction models are:

- sensitivity = true positive rate (TPR)

- specificity = true negative rate (TNR)

- misclassification error

- area under the ROC curve (AUC)

These criteria can again be estimated via cross validation or via splitting of the data into training and test/validation data.

Sensitivity of a model $\pi$ with threshold $c$

Sensitivity is the probability to correctly predict a positive outcome:

$$ \text{sens}(\pi, c) = \text{P}_{\mathbf{X}^*}\left[\hat{\pi}(\mathbf{X}^*) > c \mid Y^* = 1, \mathcal{T}\right]. $$

It is also known as the true positive rate (TPR).

Specificity of a model $\pi$ with threshold $c$

Specificity is the probability to correctly predict a negative outcome:

$$ \text{spec}(\pi, c) = \text{P}_{\mathbf{X}^*}\left[\hat{\pi}(\mathbf{X}^*) \le c \mid Y^* = 0, \mathcal{T}\right]. $$

It is also known as the true negative rate (TNR).

Misclassification error of a model $\pi$ with threshold $c$

The misclassification error is the probability to incorrectly predict an outcome:

$$ \text{mce}(\pi, c) = \text{P}_{\mathbf{X}^*, Y^*}\left[\hat{\pi}(\mathbf{X}^*) \le c \text{ and } Y^* = 1 \mid \mathcal{T}\right] + \text{P}_{\mathbf{X}^*, Y^*}\left[\hat{\pi}(\mathbf{X}^*) > c \text{ and } Y^* = 0 \mid \mathcal{T}\right]. $$

Note that in the definitions of sensitivity, specificity and the misclassification error, the probabilities refer to the distribution of $(\mathbf{X}^*, Y^*)$, which is independent of the training data, conditional on the training data. This is in line with the test or generalisation error. The misclassification error is actually the test error when a 0/1 loss function is used. Just as before, the sensitivity, specificity and the misclassification error can also be averaged over the distribution of the training data set, which is in line with the expected test error discussed earlier.
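On a test set these probabilities are estimated by the corresponding proportions. Below is a sketch with hypothetical outcomes and predicted probabilities, using threshold $c = 0.5$:

```r
classMetrics <- function(y, pi, thr) {
  yHat <- as.integer(pi > thr)              # prediction rule with threshold thr
  c(sens = mean(yHat[y == 1] == 1),         # TPR: correctly predicted positives
    spec = mean(yHat[y == 0] == 0),         # TNR: correctly predicted negatives
    mce  = mean(yHat != y))                 # misclassification error (0/1 loss)
}

yTest  <- c(1, 1, 1, 0, 0, 0, 0, 0)        # hypothetical test outcomes
piTest <- c(0.9, 0.6, 0.3, 0.7, 0.2, 0.1, 0.4, 0.05)
classMetrics(yTest, piTest, thr = 0.5)      # sens = 2/3, spec = 0.8, mce = 0.25
```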

ROC curve of a model

The Receiver Operating Characteristic (ROC) curve for model $\pi$ is given by the function

$$ \text{ROC}: [0, 1] \rightarrow [0, 1] \times [0, 1]: c \mapsto \left(1 - \text{spec}(\pi, c), \text{sens}(\pi, c)\right). $$

When $c$ moves from 1 to 0, the ROC function defines a curve in the plane $[0, 1] \times [0, 1]$, moving from $(0, 0)$ for $c = 1$ to $(1, 1)$ for $c = 0$.

The horizontal axis of the ROC curve shows 1-specificity. This is also known as the False Positive Rate (FPR).

Area under the curve (AUC) of a model $\pi$

The area under the curve (AUC) for model $\pi$ is the area under the ROC curve, i.e. the integral of the sensitivity (TPR) as a function of 1-specificity (FPR):

$$ \text{AUC}(\pi) = \int_0^1 \text{TPR} \; d\,\text{FPR}. $$

Some notes about the AUC:

- AUC = 0.5 results when the ROC curve is the diagonal. This corresponds to flipping a coin, i.e. a completely random prediction.

- AUC = 1 results from the perfect ROC curve, which is the ROC curve through the points $(0, 0)$, $(0, 1)$ and $(1, 1)$. This ROC curve includes a threshold for which sensitivity and specificity are both equal to one.
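The AUC also equals the probability that a randomly chosen positive receives a higher predicted probability than a randomly chosen negative, with ties counted as one half. This gives a simple way to compute it from test data. Below is a sketch with hypothetical predictions. A constant prediction, as for a model without predictors, gives AUC = 0.5.

```r
aucPairs <- function(y, pi) {
  pos <- pi[y == 1]                         # predicted probabilities of the positives
  neg <- pi[y == 0]                         # predicted probabilities of the negatives
  # proportion of (positive, negative) pairs ranked correctly, ties count 1/2
  mean(outer(pos, neg, ">") + 0.5 * outer(pos, neg, "=="))
}

yTest  <- c(1, 1, 1, 0, 0, 0, 0, 0)        # hypothetical test outcomes
piTest <- c(0.9, 0.6, 0.3, 0.7, 0.2, 0.1, 0.4, 0.05)
aucPairs(yTest, piTest)                     # 12 of the 15 pairs are ranked correctly
aucPairs(yTest, rep(0.5, 8))                # constant prediction
```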

Breast cancer example

Data

```r
library(glmnet)
#BiocManager::install("genefu")
#BiocManager::install("breastCancerMAINZ")
library(genefu)
library(breastCancerMAINZ)
library(Biobase) # exprs() and pData() for ExpressionSet objects
data(mainz)

X <- t(exprs(mainz)) # gene expressions
n <- nrow(X)
H <- diag(n) - 1/n * matrix(1, ncol = n, nrow = n) # centering matrix
X <- H %*% X                                       # center the gene expressions
Y <- ifelse(pData(mainz)$grade == 3, 1, 0)          # 1 = stage 3 tumor
table(Y)
#> Y
#> 0 1
#> 165 35
```

From the table of the outcomes in Y we read that

- 35 tumors were graded as stage 3 and

- 165 tumors were graded as stage 1 or 2.

In this example the stage 3 tumors are referred to as cases or positives, and the stage 1 and 2 tumors as controls or negatives.

Training and test dataset

The use of the lasso logistic regression for the prediction of stage 3 breast cancer is illustrated here by

- randomly splitting the dataset into a training dataset (80% of the data = 160 tumors) and a test dataset (40 tumors)

- using the training data to select a good $\lambda$ value in the lasso logistic regression model (through 10-fold CV)

- evaluating the final model by means of the test dataset (ROC curve, AUC).

```r
## Used to provide the same results as in a previous R version
RNGkind(sample.kind = "Rounding")
set.seed(6977326)
####

n <- nrow(X)
nTrain <- round(0.8 * n)   # 80% of the tumors for training
nTrain
#> [1] 160

indTrain <- sample(1:n, nTrain)
XTrain <- X[indTrain, ]
YTrain <- Y[indTrain]
XTest <- X[-indTrain, ]
YTest <- Y[-indTrain]
table(YTest)
#> YTest
#> 0 1
#> 32 8
```

Note that the randomly selected test data contain 20% stage 3 tumors (8 out of 40).

This is a bit higher than the 17.5% in the complete data.

One could also perform the random splitting among the positives and the negatives separately (stratified splitting).
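Below is a sketch of such a stratified split. The helper `stratifiedSplit()` is hypothetical and not taken from a package. It samples 80% of the indices within each outcome class separately, so that the test set keeps the 17.5% proportion of stage 3 tumors.

```r
set.seed(17)
yDemo <- c(rep(0, 165), rep(1, 35))        # class sizes as in the breast cancer data

# hypothetical helper: sample the training indices within each outcome class
stratifiedSplit <- function(y, propTrain = 0.8) {
  idx <- lapply(split(seq_along(y), y), function(ind) {
    ind[sample.int(length(ind), round(propTrain * length(ind)))]
  })
  sort(unname(unlist(idx)))
}

indTrain <- stratifiedSplit(yDemo)
length(indTrain)                            # 132 + 28 = 160 training tumors
table(yDemo[-indTrain])                     # test set: 33 negatives and 7 positives
```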

Model fitting based on training data

```r
mLasso <- glmnet(
  x = XTrain,
  y = YTrain,
  alpha = 1,
  family = "binomial") # lasso: alpha = 1
```

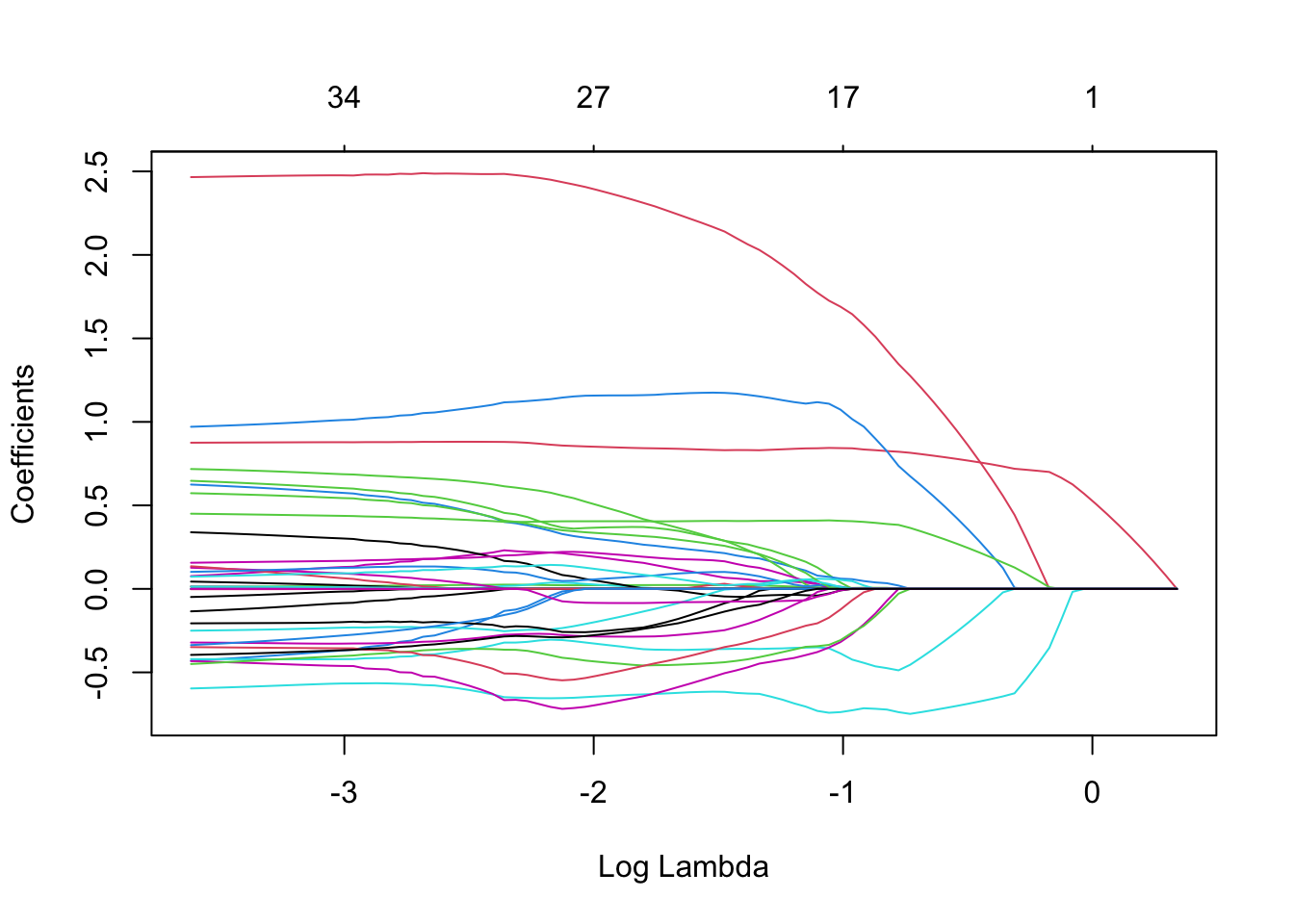

```r
plot(mLasso, xvar = "lambda", xlim = c(-6, -1.5))
```

```r
mCvLasso <- cv.glmnet(
  x = XTrain,
  y = YTrain,
  alpha = 1,
  type.measure = "class",  # misclassification error as CV criterion
  family = "binomial")     # lasso: alpha = 1
```

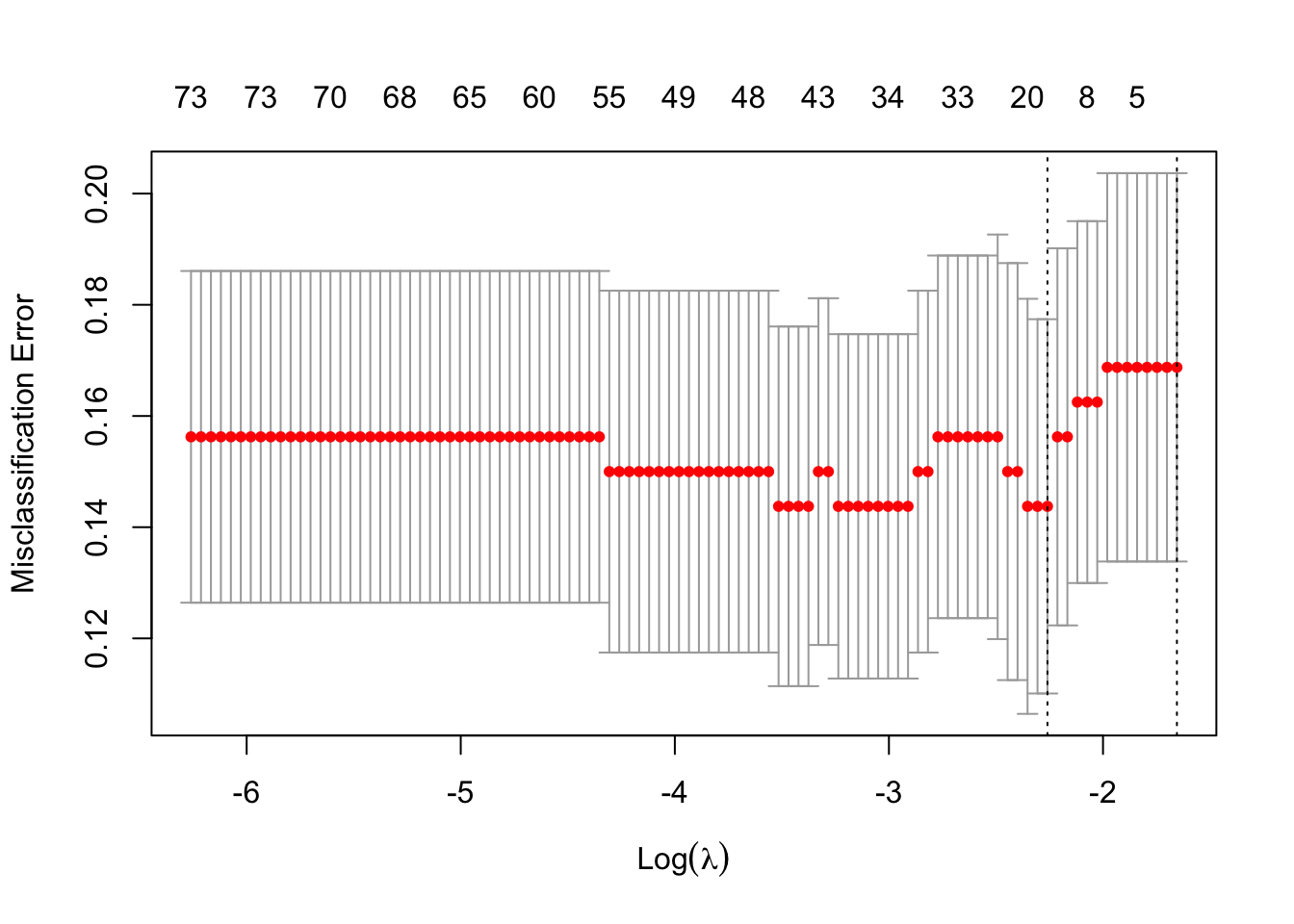

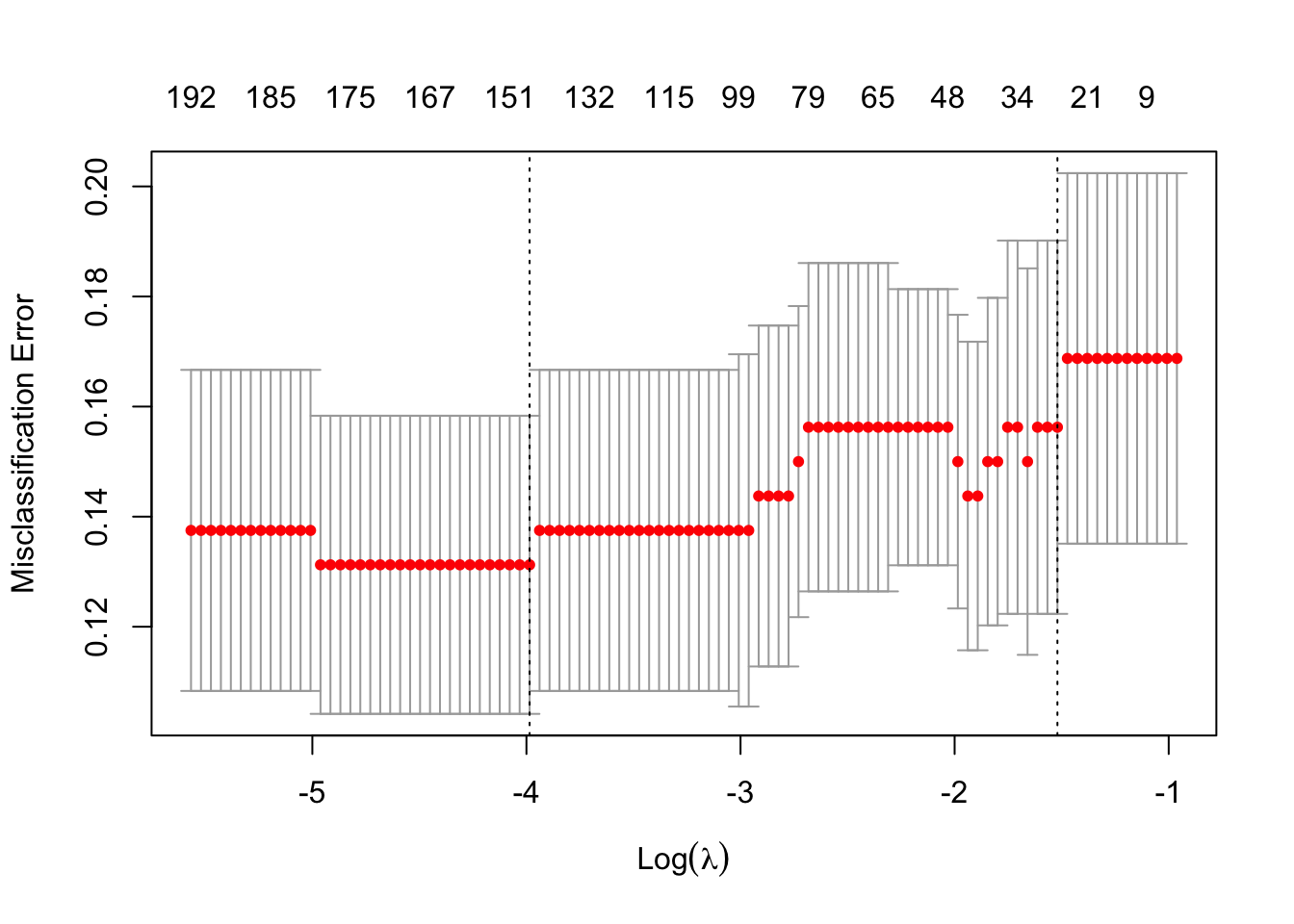

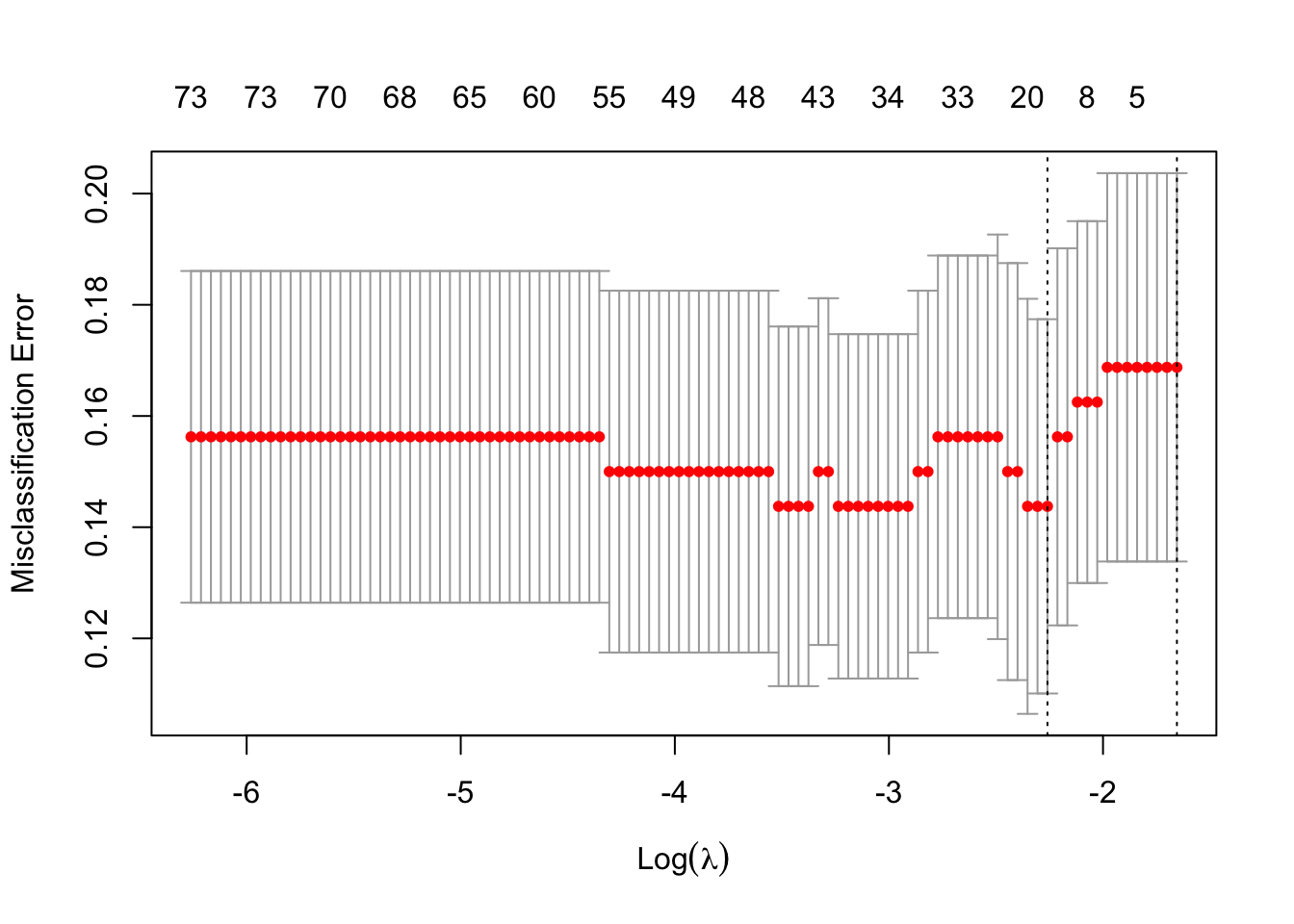

```r
plot(mCvLasso)
mCvLasso # print the lambda values selected by CV
#>
#> Call: cv.glmnet(x = XTrain, y = YTrain, type.measure = "class", alpha = 1, family = "binomial")
#>
#> Measure: Misclassification Error
#>
#> Lambda Index Measure SE Nonzero
#> min 0.1044 14 0.1437 0.03366 18
#> 1se 0.1911 1 0.1688 0.03492 0
```

The total misclassification error is used here to select a good value for $\lambda$.

```r
# BiocManager::install("plotROC")
library(plotROC) # geom_roc()
library(ggplot2)

# predicted probabilities on the test data for lambda.min
dfLassoOpt <- data.frame(
  pi = predict(mCvLasso,
    newx = XTest,
    s = mCvLasso$lambda.min,
    type = "response") %>% c(.),
  known.truth = YTest)

roc <-
  dfLassoOpt %>%
  ggplot(aes(d = known.truth, m = pi)) +
  geom_roc(n.cuts = 0) +
  xlab("1-specificity (FPR)") +
  ylab("sensitivity (TPR)")
```

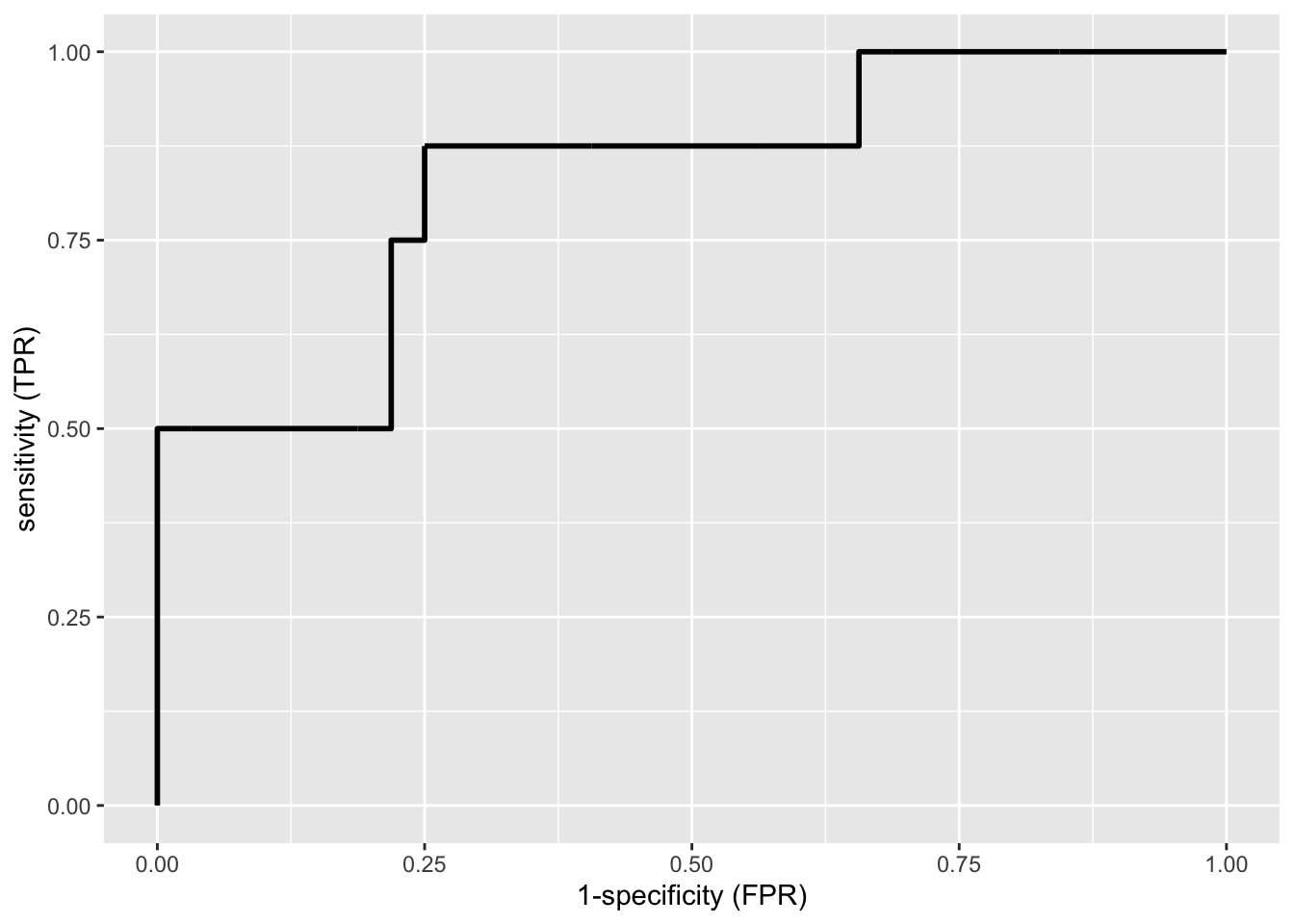

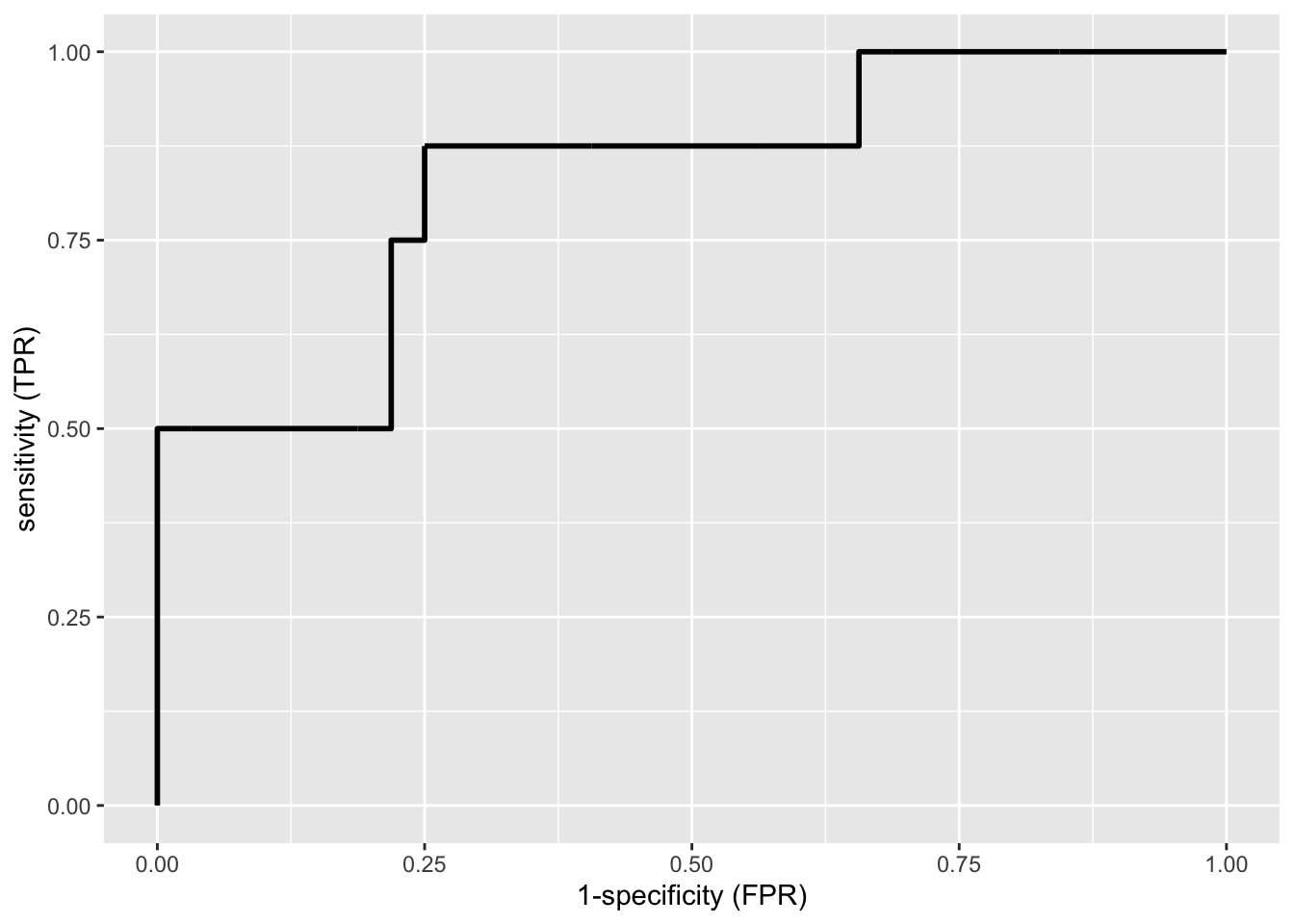

```r
roc
```

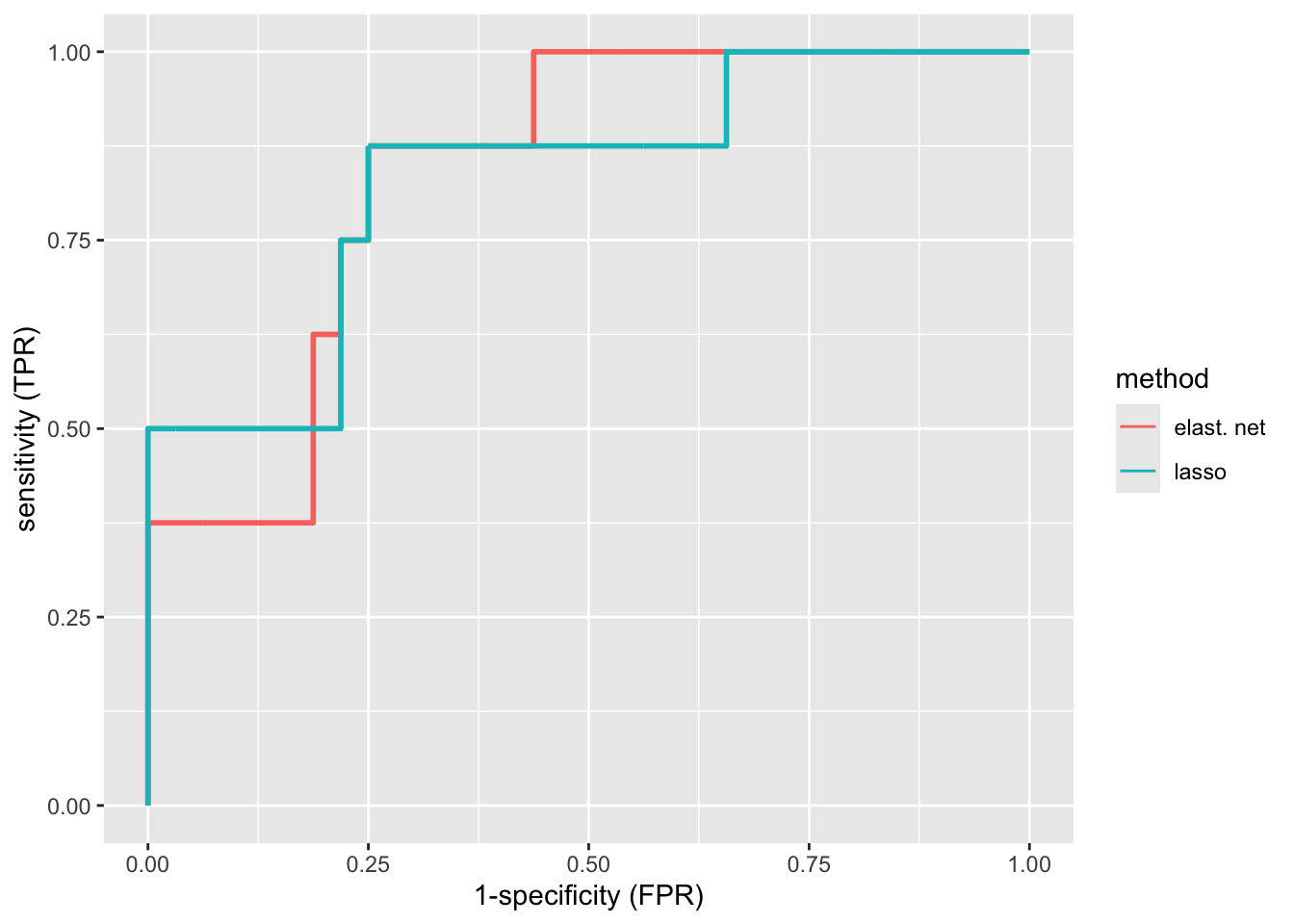

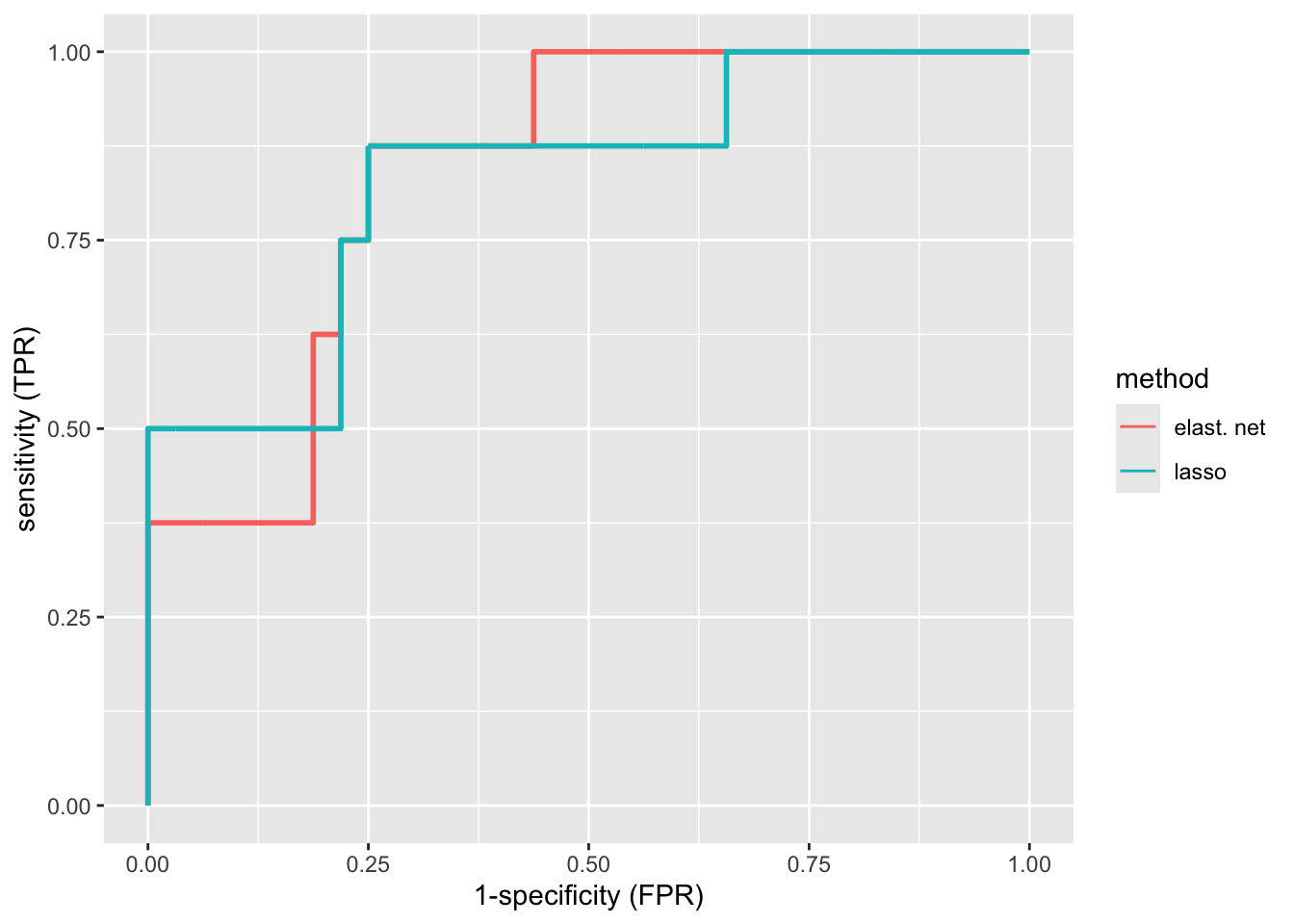

The ROC curve is shown for the model based on the $\lambda$ with the smallest misclassification error. The model has an AUC of 0.83.

Based on this ROC curve an appropriate threshold can be chosen. For example, from the ROC curve we see that it is possible to attain a specificity and a sensitivity of 75%.

The sensitivities and specificities in the ROC curve are unbiased (independent test dataset) for the prediction model built from the training data. The estimates of sensitivity and specificity, however, are based on only 40 observations.

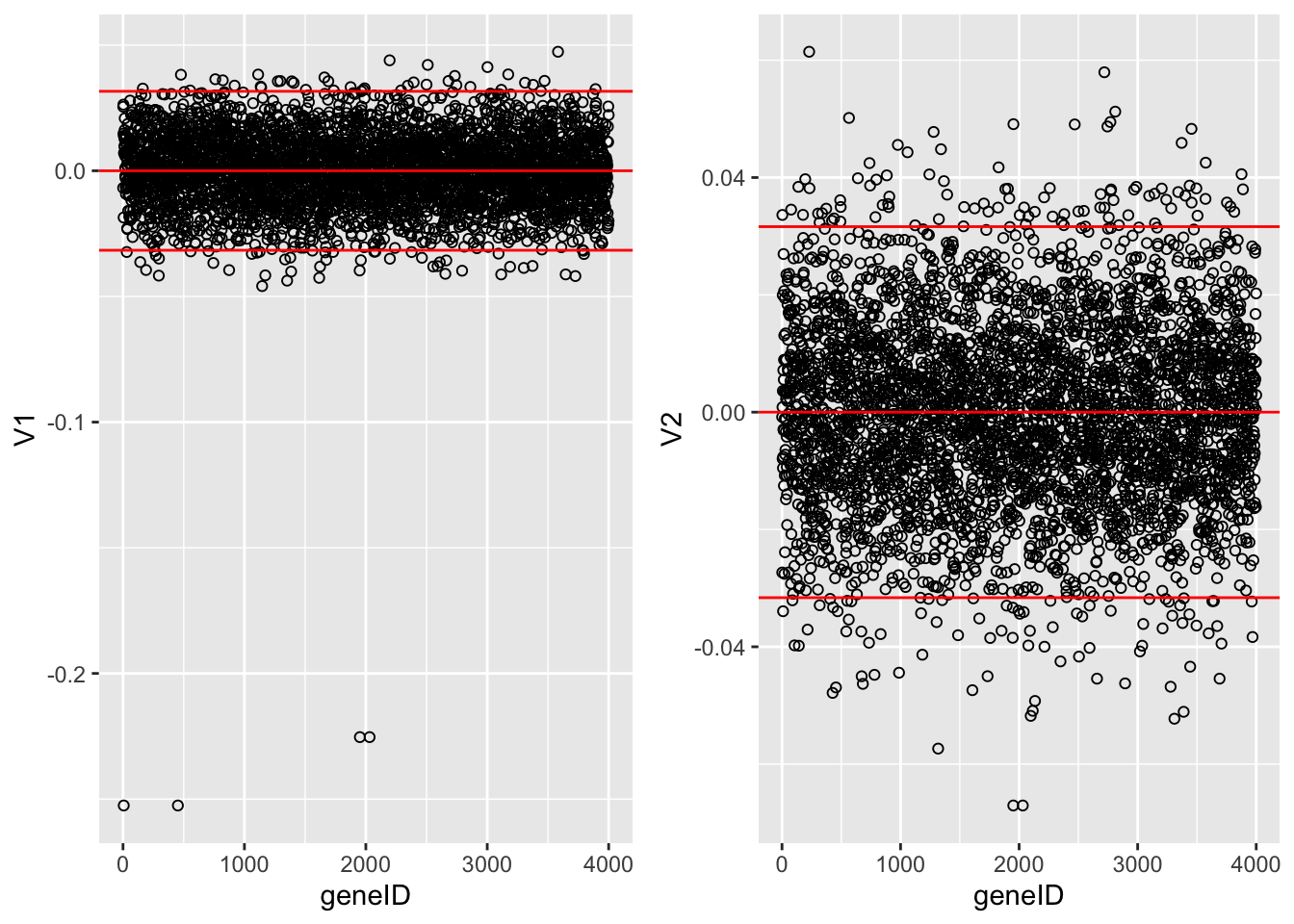

```r
mLambdaOpt <- glmnet(x = XTrain,
  y = YTrain,
  alpha = 1,
  lambda = mCvLasso$lambda.min,
  family = "binomial")
```

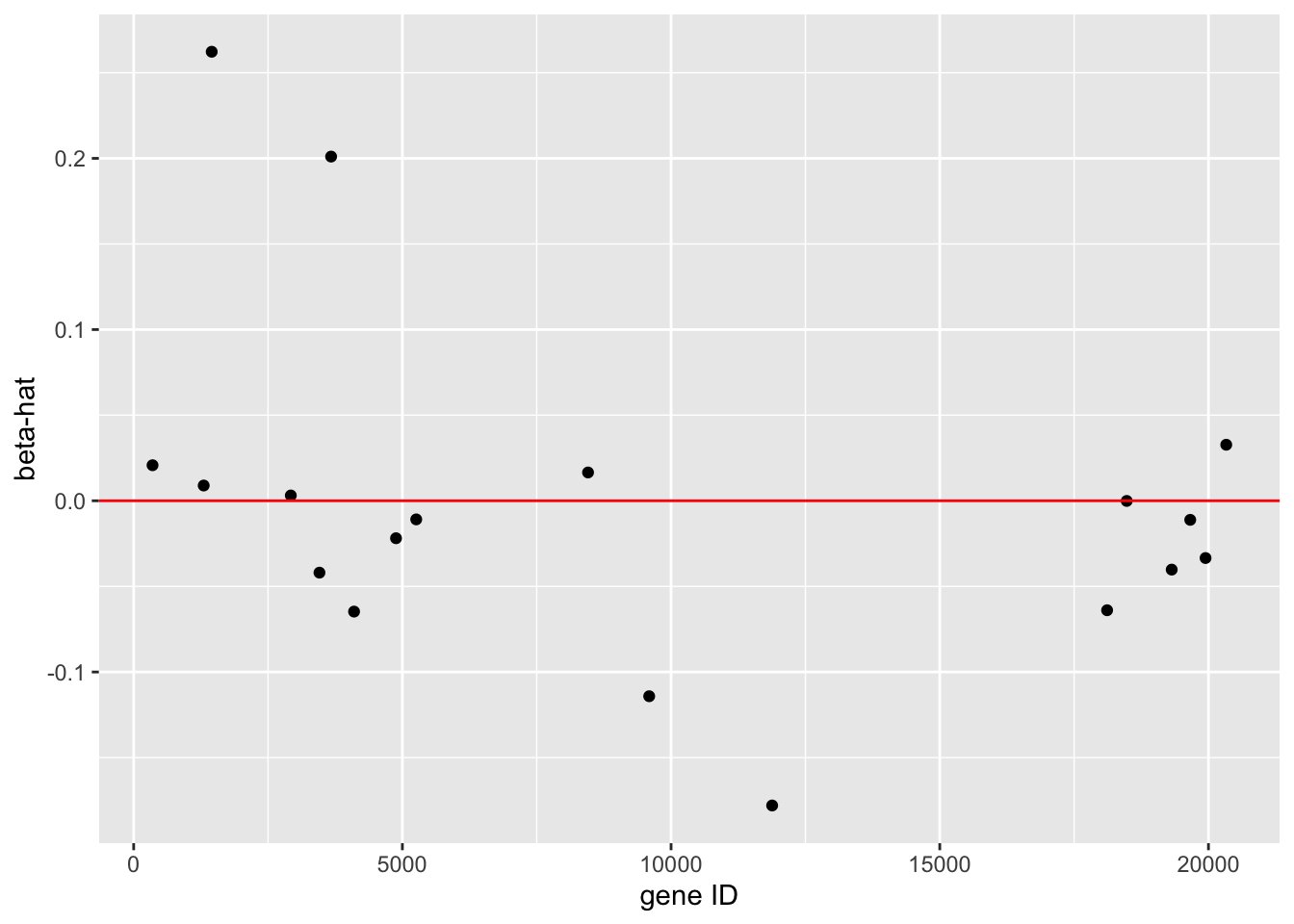

```r
# non-zero coefficients without the intercept: row index vs estimate
qplot(
  summary(coef(mLambdaOpt))[-1, 1],
  summary(coef(mLambdaOpt))[-1, 3]) +
  xlab("gene ID") +
  ylab("beta-hat") +
  geom_hline(yintercept = 0, color = "red")
```

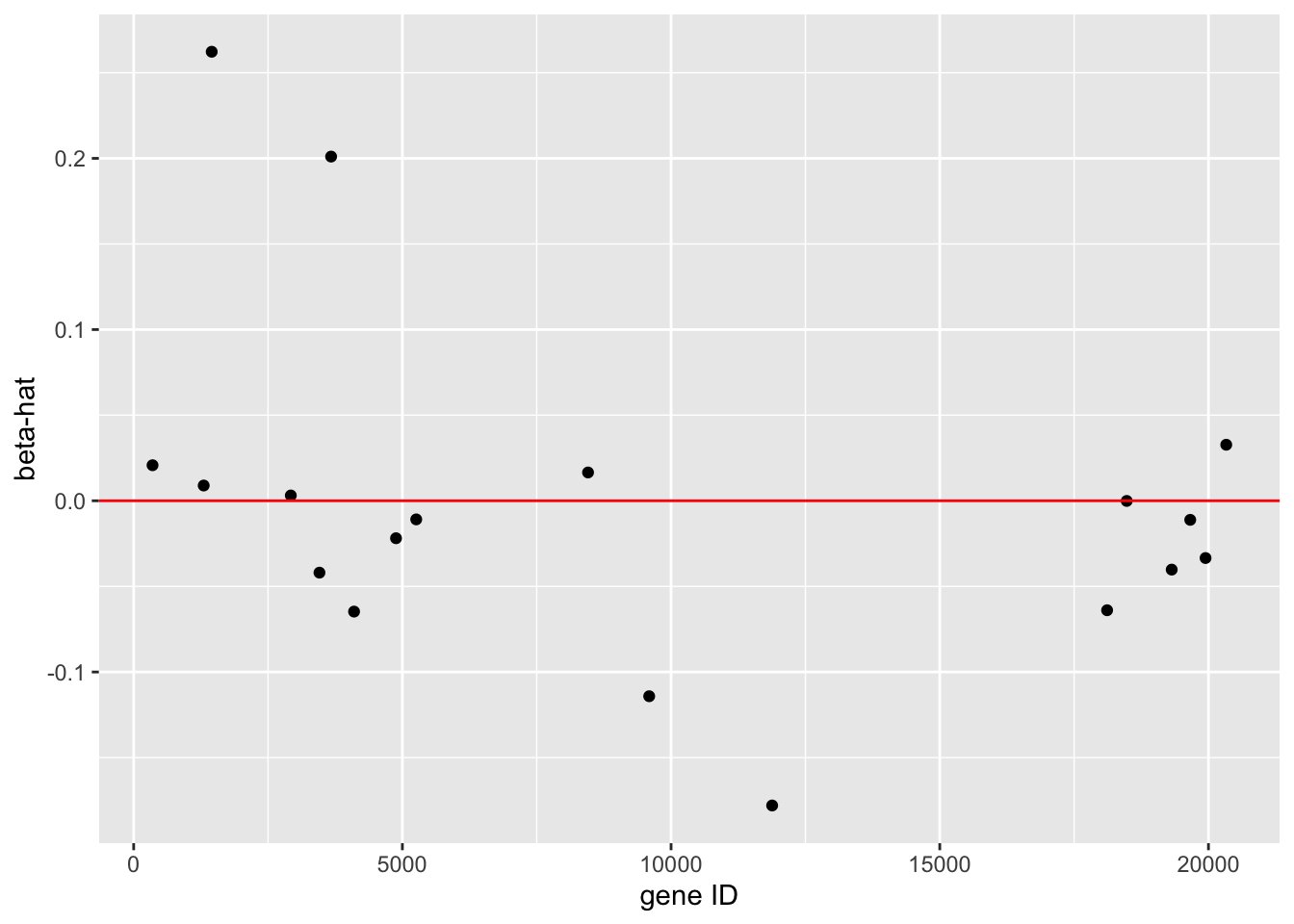

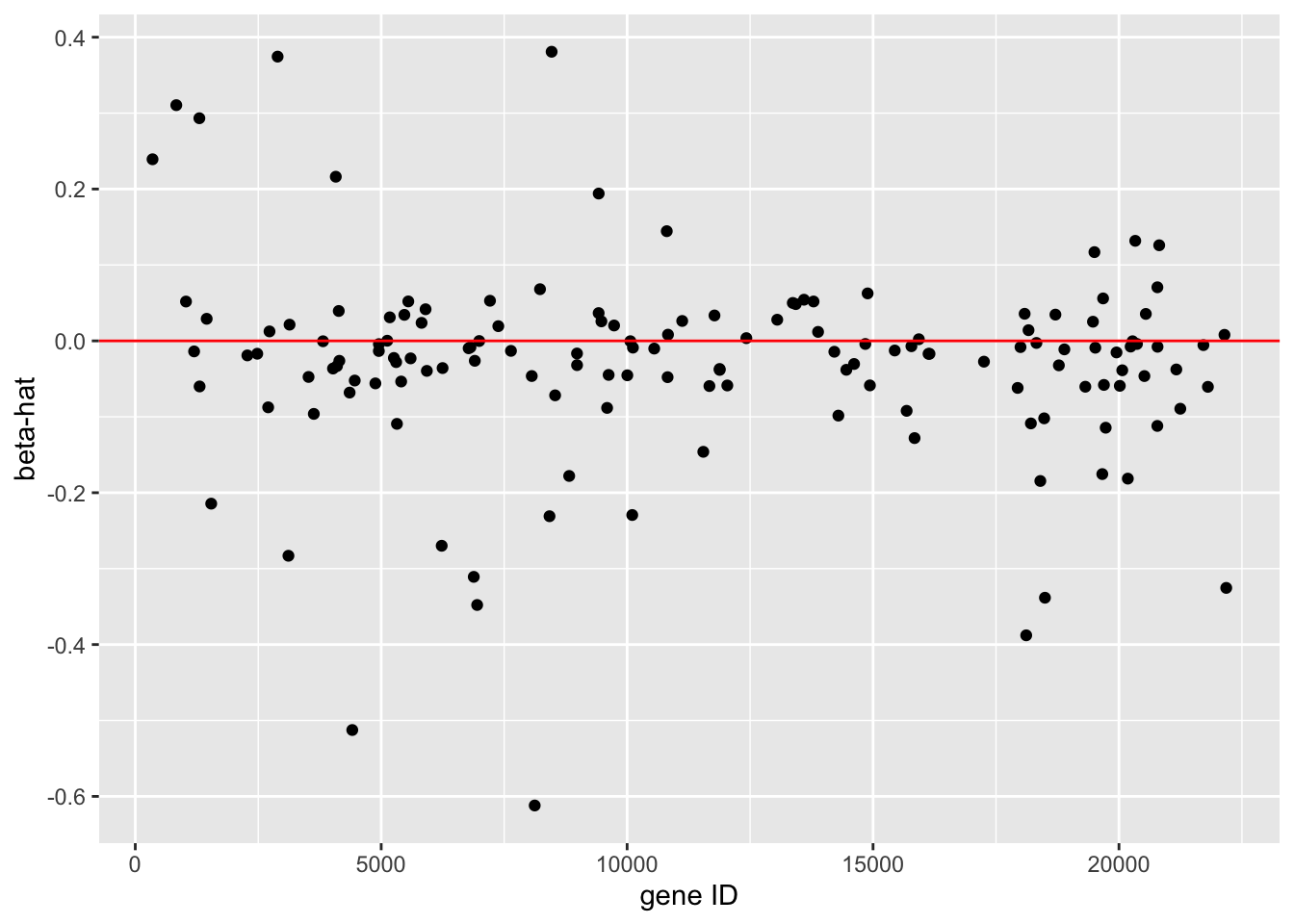

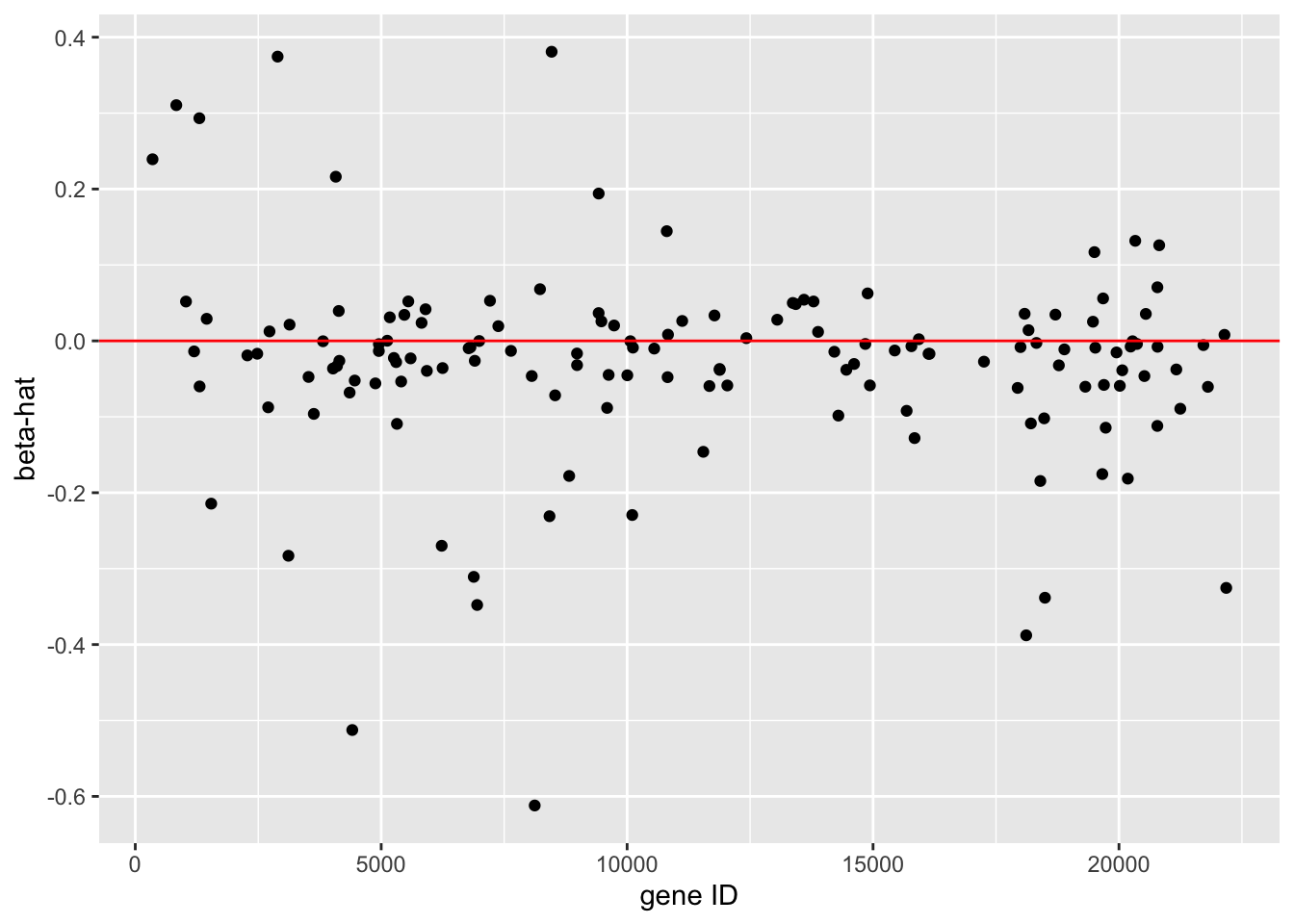

- The model with the optimal $\lambda$ has only 19 non-zero parameter estimates.

- Thus only 19 genes are involved in the prediction model.

- These 19 parameter estimates are plotted in the graph.

A listing of the model output would show the names of the genes.

```r
# predicted probabilities on the test data for lambda.1se
dfLasso1se <- data.frame(
  pi = predict(mCvLasso,
    newx = XTest,
    s = mCvLasso$lambda.1se,
    type = "response") %>% c(.),
  known.truth = YTest)
```

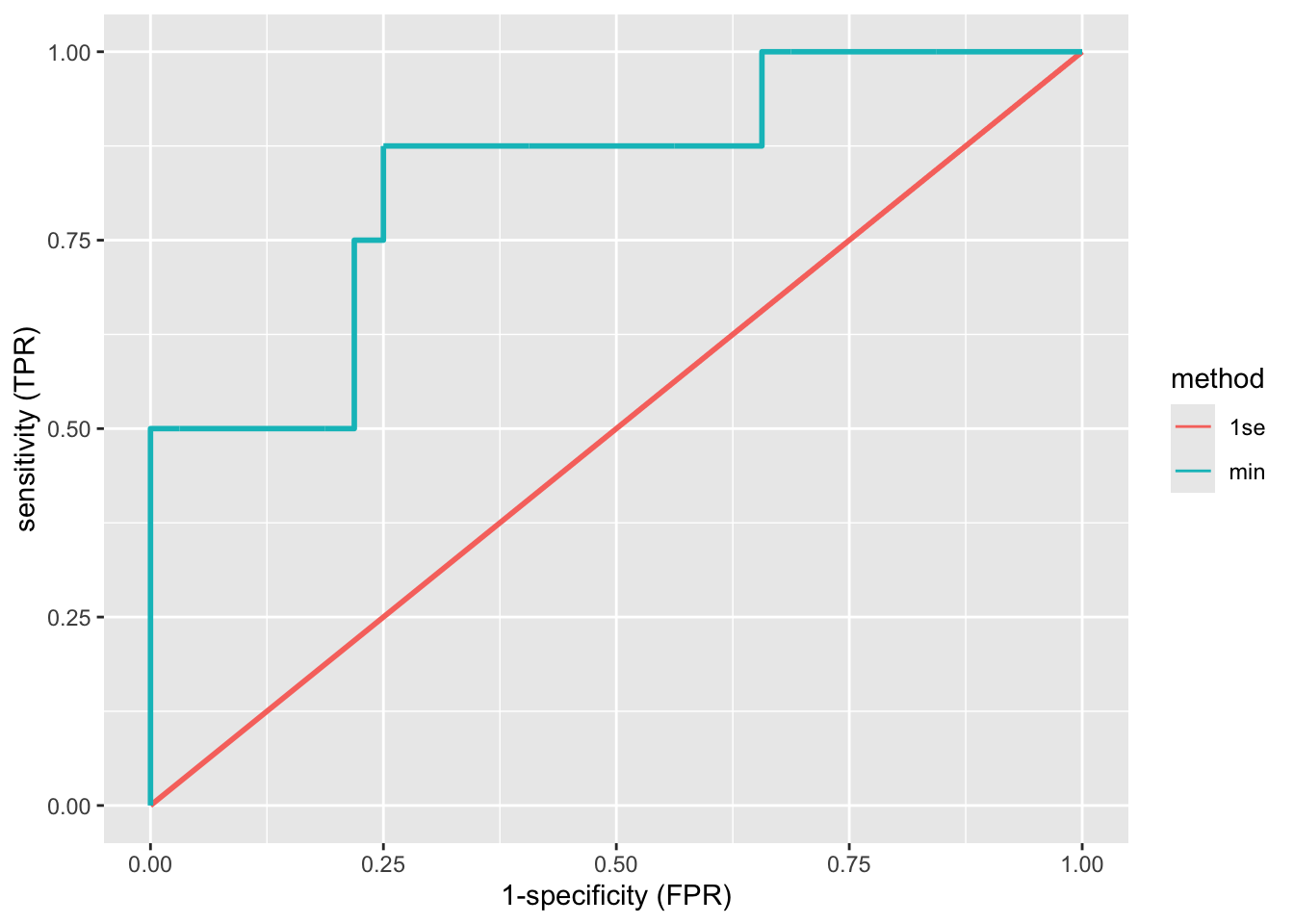

roc <-

rbind(

dfLassoOpt %>%

mutate(method = "min"),

dfLasso1se %>%

mutate(method = "1se")

) %>%

ggplot(aes(d = known.truth, m = pi, color = method)) +

geom_roc(n.cuts = 0) +

xlab("1-specificity (FPR)") +

ylab("sensitivity (TPR)")

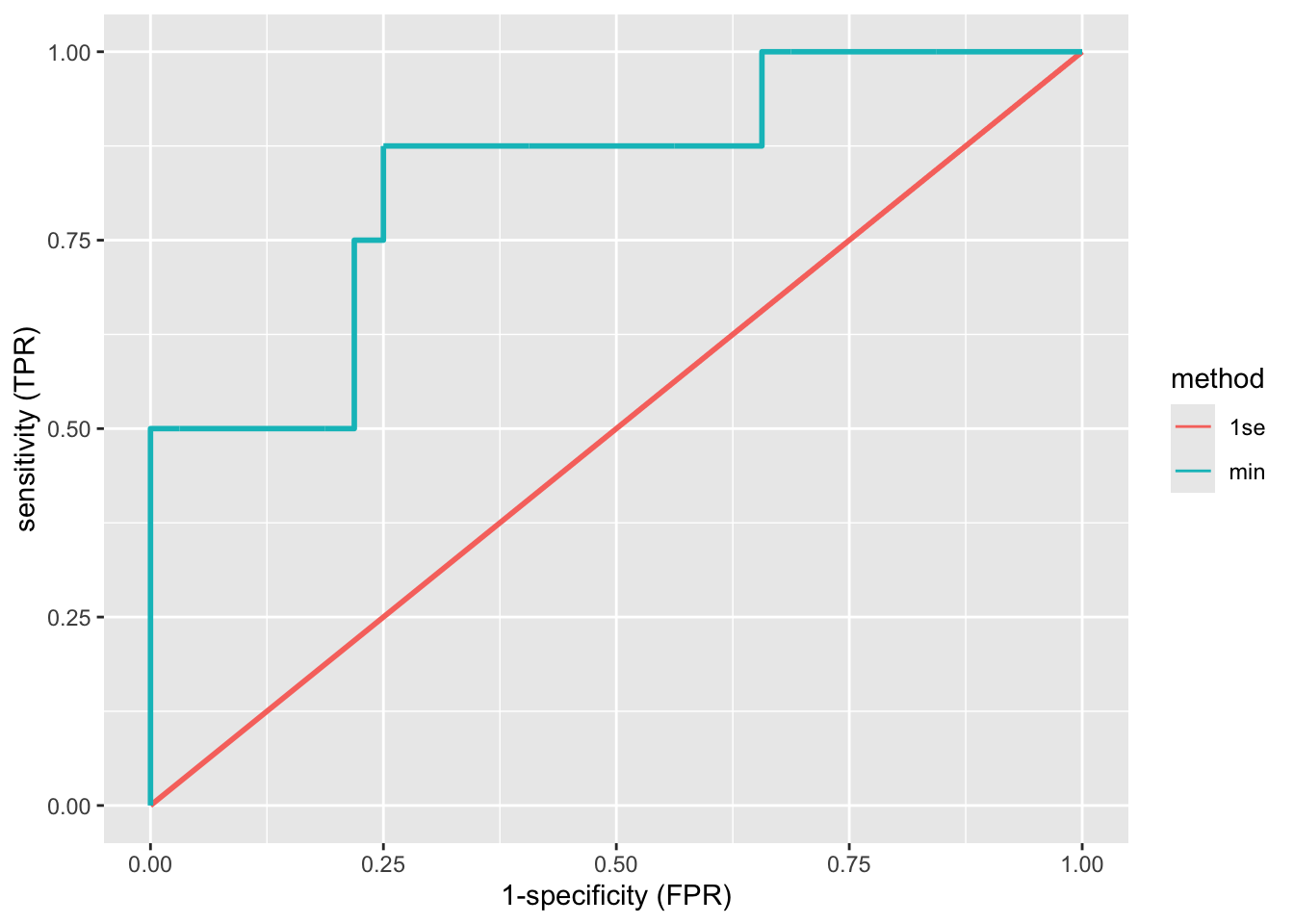

roc

When using the largest $\lambda$ within one standard error of the optimal model (`lambda.1se`), a diagonal ROC curve is obtained and hence the AUC is 0.5.

This prediction model is thus equivalent to flipping a coin for making the prediction.

The reason is that with this choice of $\lambda$ (strong penalisation) all predictors are removed from the model (the CV output reports 0 non-zero coefficients for `lambda.1se`).

Therefore, never blindly choose the "optimal" $\lambda$ as defined here, but assess the performance of the model first.

```r
mLambda1se <- glmnet(x = XTrain,
  y = YTrain,
  alpha = 1,
  lambda = mCvLasso$lambda.1se,
  family = "binomial")

mLambda1se %>%
  coef %>%
  summary
```

The Elastic Net

The lasso and ridge regression have positive and negative properties.

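Lasso

- positive: sparse solution

- negative: at most $n$ predictors can be selected

- negative: tends to select one predictor among a group of highly correlated predictors

Ridge

- negative: no sparse solution

- positive: more than $n$ predictors can be selected

A compromise between lasso and ridge is the elastic net:

$$ \hat{\boldsymbol{\beta}} = \text{ArgMax}_{\boldsymbol{\beta}}\; l(\boldsymbol{\beta}) - \gamma_1 \Vert \boldsymbol{\beta} \Vert_1 - \gamma_2 \Vert \boldsymbol{\beta} \Vert_2^2 $$

The elastic net gives a sparse solution with potentially more than $n$ predictors.

The glmnet R function uses the following parameterisation,

$$ \hat{\boldsymbol{\beta}} = \text{ArgMax}_{\boldsymbol{\beta}}\; \frac{1}{n}l(\boldsymbol{\beta}) - \lambda\alpha \Vert \boldsymbol{\beta} \Vert_1 - \lambda\frac{1 - \alpha}{2} \Vert \boldsymbol{\beta} \Vert_2^2 $$

- parameter $\alpha$ gives weight to the $L_1$ penalty term (hence $\alpha = 1$ gives the lasso, and $\alpha = 0$ gives ridge).

- parameter $\lambda$ gives weight to the penalisation.

Note that the combination of $\lambda$ and $\alpha$ gives the same flexibility as the combination of the parameters $\gamma_1$ and $\gamma_2$.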
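Below is a sketch of the correspondence between the two parameterisations of the penalty. The helper functions are hypothetical. Ignoring the $1/n$ scaling of the log-likelihood, $\gamma_1 = \lambda\alpha$ and $\gamma_2 = \lambda(1-\alpha)/2$. Conversely, $\lambda = \gamma_1 + 2\gamma_2$ and $\alpha = \gamma_1/(\gamma_1 + 2\gamma_2)$. With the $1/n$ scaling, both $\gamma$'s are multiplied by $n$.

```r
# glmnet-style penalty: lambda * ((1 - alpha) / 2 * ||b||_2^2 + alpha * ||b||_1)
penGlmnet <- function(b, lambda, alpha) {
  lambda * ((1 - alpha) / 2 * sum(b^2) + alpha * sum(abs(b)))
}
# penalty with separate weights for the L1 norm and the squared L2 norm
penGamma <- function(b, gamma1, gamma2) {
  gamma1 * sum(abs(b)) + gamma2 * sum(b^2)
}
toGamma <- function(lambda, alpha) {
  c(gamma1 = lambda * alpha, gamma2 = lambda * (1 - alpha) / 2)
}
toLambdaAlpha <- function(gamma1, gamma2) {
  c(lambda = gamma1 + 2 * gamma2, alpha = gamma1 / (gamma1 + 2 * gamma2))
}

b <- c(0.5, -1.2, 0, 2)                    # hypothetical coefficient vector
g <- toGamma(lambda = 0.3, alpha = 0.5)
penGlmnet(b, lambda = 0.3, alpha = 0.5)
penGamma(b, g[["gamma1"]], g[["gamma2"]])   # same value
toLambdaAlpha(g[["gamma1"]], g[["gamma2"]]) # recovers lambda = 0.3 and alpha = 0.5
```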

Breast cancer example

```r
mElastic <- glmnet(
  x = XTrain,
  y = YTrain,
  alpha = 0.5,
  family = "binomial") # elastic net

plot(mElastic, xvar = "lambda", xlim = c(-5.5, -1))

mCvElastic <- cv.glmnet(x = XTrain,
  y = YTrain,
  alpha = 0.5,
  family = "binomial",
  type.measure = "class") # elastic net

plot(mCvElastic)
mCvElastic # print the lambda values selected by CV
#>
#> Call: cv.glmnet(x = XTrain, y = YTrain, type.measure = "class", alpha = 0.5, family = "binomial")
#>
#> Measure: Misclassification Error
#>
#> Lambda Index Measure SE Nonzero
#> min 0.01859 66 0.1313 0.02708 148
#> 1se 0.21876 13 0.1562 0.03391 26
```

```r
dfElast <- data.frame(
  pi = predict(mElastic,
    newx = XTest,
    s = mCvElastic$lambda.min,
    type = "response") %>% c(.),
  known.truth = YTest)

roc <- rbind(
  dfLassoOpt %>% mutate(method = "lasso"),
  dfElast %>% mutate(method = "elast. net")) %>%
  ggplot(aes(d = known.truth, m = pi, color = method)) +
  geom_roc(n.cuts = 0) +
  xlab("1-specificity (FPR)") +
  ylab("sensitivity (TPR)")

roc
```

- More parameters are used than for the lasso, but the performance does not improve.

mElasticOpt <- glmnet(x = XTrain,

y = YTrain,

alpha = 0.5,

lambda = mCvElastic$lambda.min,

family="binomial")

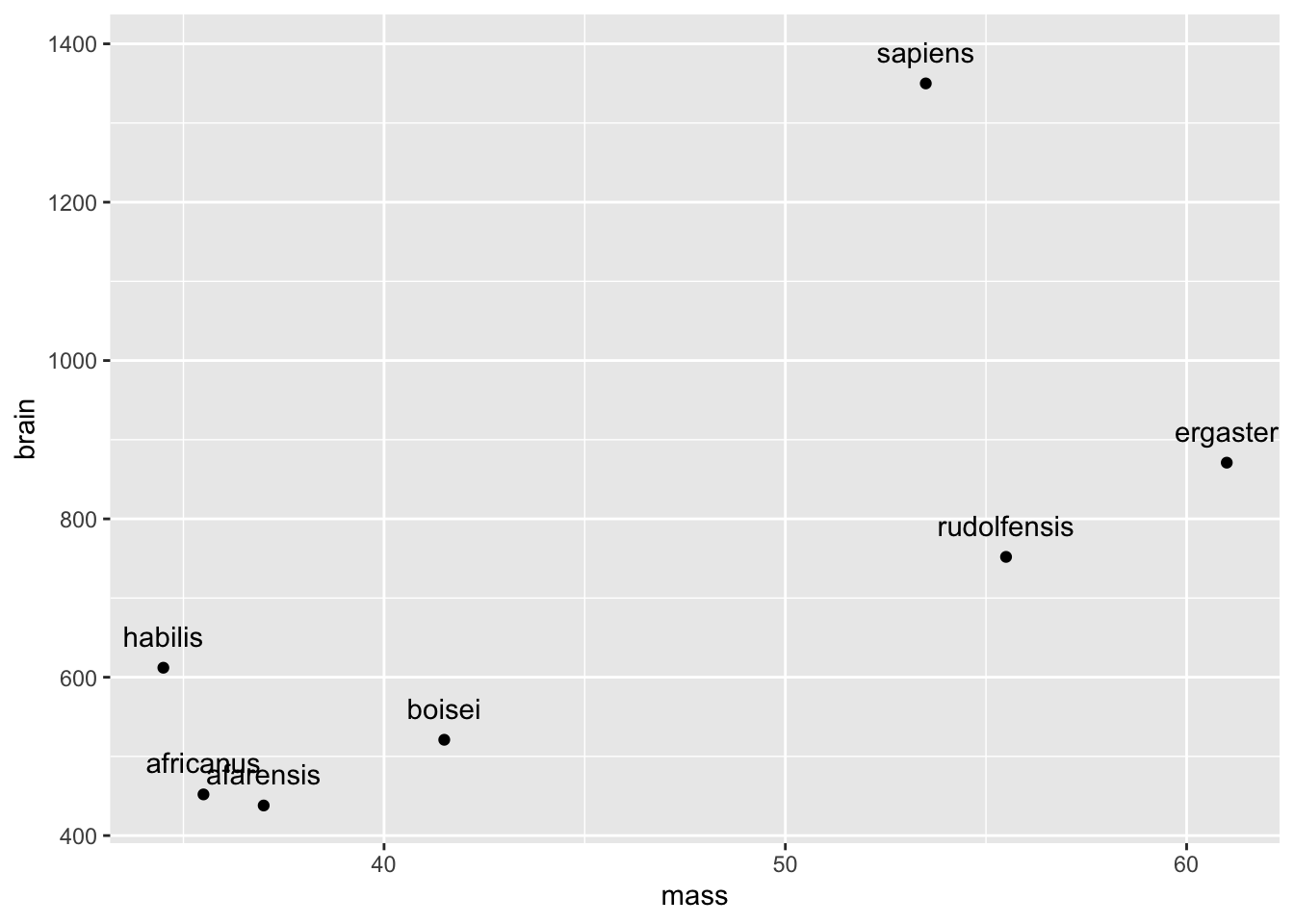

qplot(

summary(coef(mElasticOpt))[-1,1],

summary(coef(mElasticOpt))[-1,3]) +

xlab("gene ID") +

ylab("beta-hat") +

geom_hline(yintercept = 0, color = "red")