Linear Discriminant Analysis (LDA)

Lieven Clement

1 Breast cancer example

Schmidt et al., 2008, Cancer Research, 68, 5405-5413

Gene expression patterns in n=200 breast tumors were investigated (p=22283 genes)

After surgery the tumors were graded by a pathologist (stage 1,2,3)

1.1 Data

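#BiocManager::install("genefu")

#BiocManager::install("breastCancerMAINZ")

library(tidyverse) # %>%, mutate() and ggplot() are used below

library(gridExtra) # grid.arrange() is used below

library(genefu)

library(breastCancerMAINZ)

data(mainz)

X <- t(exprs(mainz)) # gene expressions (samples in rows, genes in columns)

n <- nrow(X)

H <- diag(n)-1/n*matrix(1,ncol=n,nrow=n) # centering matrix

X <- H%*%X # column-centered gene expressions

Y <- pData(mainz)$grade # tumor grade assigned by the pathologist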

table(Y)## Y

## 1 2 3

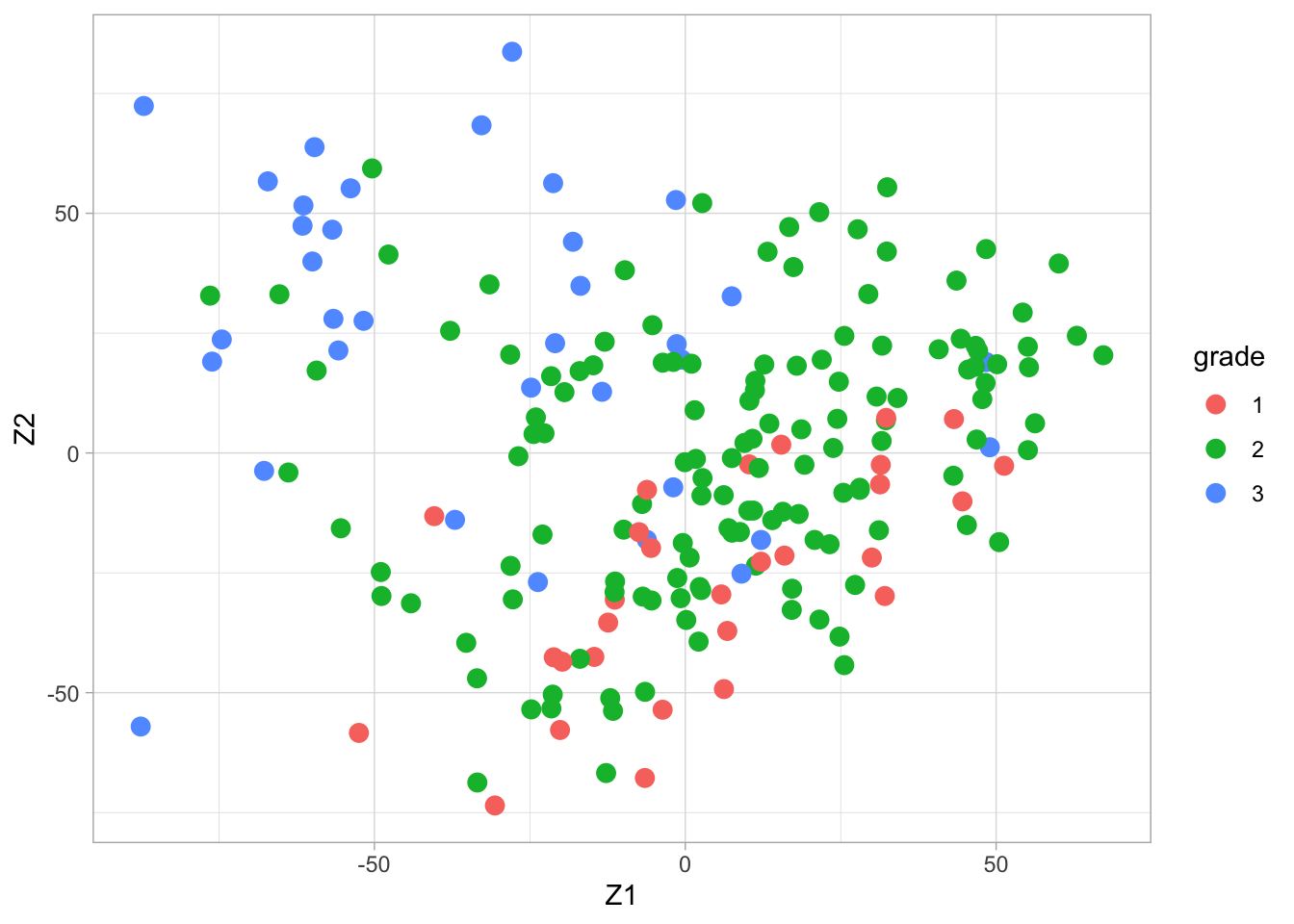

## 29 136 35svdX <- svd(X)

k <- 2

Zk <- svdX$u[,1:k] %*% diag(svdX$d[1:k])

colnames(Zk) <- paste0("Z",1:k)

Zk %>%

as.data.frame %>%

mutate(grade = Y %>% as.factor) %>%

ggplot(aes(x= Z1, y = Z2, color = grade)) +

geom_point(size = 3)
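As a small check of the two preprocessing steps above, the following sketch uses a simulated matrix (not the breast cancer data) to illustrate that multiplying by the centering matrix \(\mathbf{H}\) gives the same result as `scale(..., scale = FALSE)`, and that the scores \(\mathbf{U}\mathbf{D}\) from the SVD coincide with \(\mathbf{X}\mathbf{V}\). The names `X_raw`, `X_c`, `X_c2` and `Zk_alt` are introduced only for this illustration.

```r
set.seed(1)

# Small simulated data matrix: 6 samples, 3 variables (illustration only)
X_raw <- matrix(rnorm(18), nrow = 6, ncol = 3)
n <- nrow(X_raw)

# Centering matrix, constructed as in the code above
H <- diag(n) - 1 / n * matrix(1, ncol = n, nrow = n)
X_c <- H %*% X_raw

# Column centering with scale() should give the same matrix
X_c2 <- scale(X_raw, center = TRUE, scale = FALSE)
max_diff_centering <- max(abs(X_c - X_c2))

# Scores from the SVD: U D should equal X V
sv <- svd(X_c)
k <- 2
Zk <- sv$u[, 1:k] %*% diag(sv$d[1:k])
Zk_alt <- X_c %*% sv$v[, 1:k]
max_diff_scores <- max(abs(Zk - Zk_alt))

print(c(centering = max_diff_centering, scores = max_diff_scores))
```

For large \(n\), `scale()` avoids building the \(n \times n\) matrix \(\mathbf{H}\) explicitly.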

2 Linear discriminant analysis

Fisher’s construction of LDA is simple: it allows for classification in a dimension-reduced subspace of \(\mathbb{R}^p\).

First we assume that \(Y\) can only take two values (0/1).

Fisher aimed for a direction, say \(\mathbf{a}\), in the \(p\)-dimensional predictor space such that the orthogonal projections of the predictors, \(\mathbf{x}^t\mathbf{a}\), show a maximal ratio of the between to the within sum of squares: \[ \mathbf{v} = \text{ArgMax}_{\mathbf{a}} \frac{\mathbf{a}^t\mathbf{B}\mathbf{a}}{\mathbf{a}^t\mathbf{W}\mathbf{a}} \text{ subject to } {\mathbf{a}^t\mathbf{W}\mathbf{a}}=1, \] where \(\mathbf{W}\) and \(\mathbf{B}\) are the within-group and between-group matrices of sums of squares and cross products of \(\mathbf{x}\) (defined below). The restriction is introduced to obtain a (convenient) unique solution.

2.1 Between and within sums of squares

In the training dataset, let \(\mathbf{x}_{ik}\) denote the \(i\)th \(p\)-dimensional observation in the \(k\)th group (\(k=0,1\) referring to \(Y=0\) and \(Y=1\), resp.), \(i=1,\ldots, n_k\).

Let \(z_{ik}=\mathbf{a}^t\mathbf{x}_{ik}\) denote the orthogonal projection of \(\mathbf{x}_{ik}\) onto \(\mathbf{a}\).

For the one-dimensional \(z\)-observations, consider the following sum of squares: \[ \text{SSE}=\text{within sum of squares} = \sum_{k=0,1}\sum_{i=1}^{n_k} (z_{ik}-\bar{z}_k)^2 \] \[ \text{SSB}=\text{between sum of squares} = \sum_{k=0,1}\sum_{i=1}^{n_k} (\bar{z}_{k}-\bar{z})^2 = \sum_{k=0,1} n_k (\bar{z}_{k}-\bar{z})^2 \] with \(\bar{z}_k\) the sample mean of \(z_{ik}\) within group \(k\), and \(\bar{z}\) the sample mean of all \(z_{ik}\).

To reformulate SSE and SSB in terms of the \(p\)-dimensional \(\mathbf{x}_{ik}\), we need the sample means \[ \bar{z}_k = \frac{1}{n_k} \sum_{i=1}^{n_k} z_{ik} = \frac{1}{n_k} \sum_{i=1}^{n_k} \mathbf{a}^t\mathbf{x}_{ik} = \mathbf{a}^t \frac{1}{n_k} \sum_{i=1}^{n_k} \mathbf{x}_{ik} = \mathbf{a}^t \bar{\mathbf{x}}_k \] \[ \bar{z} = \frac{1}{n}\sum_{k=0,1}\sum_{i=1}^{n_k} z_{ik} = \cdots = \mathbf{a}^t\bar{\mathbf{x}}. \]

SSE becomes \[ \text{SSE} = \sum_{k=0,1}\sum_{i=1}^{n_k} (z_{ik}-\bar{z}_k)^2 = \mathbf{a}^t \left(\sum_{k=0,1}\sum_{i=1}^{n_k} (\mathbf{x}_{ik}-\bar{\mathbf{x}}_k)(\mathbf{x}_{ik}-\bar{\mathbf{x}}_k)^t\right)\mathbf{a} \]

SSB becomes \[ \text{SSB} = \sum_{k=0,1} n_k (\bar{z}_{k}-\bar{z})^2 = \mathbf{a}^t \left(\sum_{k=0,1} n_k (\bar{\mathbf{x}}_{k}-\bar{\mathbf{x}})(\bar{\mathbf{x}}_{k}-\bar{\mathbf{x}})^t \right)\mathbf{a} \]

The \(p \times p\) matrix \[ \mathbf{W}=\sum_{k=0,1}\sum_{i=1}^{n_k} (\mathbf{x}_{ik}-\bar{\mathbf{x}}_k)(\mathbf{x}_{ik}-\bar{\mathbf{x}}_k)^t \] is referred to as the matrix of within sum of squares and cross products.

The \(p \times p\) matrix \[ \mathbf{B}=\sum_{k=0,1} n_k (\bar{\mathbf{x}}_{k}-\bar{\mathbf{x}})(\bar{\mathbf{x}}_{k}-\bar{\mathbf{x}})^t \] is referred to as the matrix of between sum of squares and cross products.

Note that on the diagonal of \(\mathbf{W}\) and \(\mathbf{B}\) you find the ordinary univariate within and between sums of squares of the individual components of \(\mathbf{x}\).
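The definitions of \(\mathbf{W}\) and \(\mathbf{B}\) can be checked numerically. The sketch below is an illustration on two species of the built-in `iris` data (an assumption made here for convenience, not the breast cancer data); the direction `a` is arbitrary. It computes both matrices from their definitions and compares \(\mathbf{a}^t\mathbf{W}\mathbf{a}\) and \(\mathbf{a}^t\mathbf{B}\mathbf{a}\) with the univariate SSE and SSB of the projected data.

```r
# Two-group subset of the built-in iris data (illustration only)
dat <- iris[iris$Species != "setosa", ]
X <- as.matrix(dat[, 1:4])
g <- droplevels(dat$Species)

xbar <- colMeans(X)                       # overall mean vector
W <- matrix(0, ncol(X), ncol(X))
B <- matrix(0, ncol(X), ncol(X))
for (k in levels(g)) {
  Xk <- X[g == k, , drop = FALSE]
  xbar_k <- colMeans(Xk)                  # group mean vector
  Xk_c <- sweep(Xk, 2, xbar_k)            # subtract the group mean
  W <- W + crossprod(Xk_c)                # within sums of squares and cross products
  d <- xbar_k - xbar
  B <- B + nrow(Xk) * tcrossprod(d)       # between sums of squares and cross products
}

# Univariate sums of squares of the projection z = X a
a <- c(1, -1, 0.5, 2)                     # arbitrary direction
z <- drop(X %*% a)
SSE <- sum((z - ave(z, g))^2)             # ave() returns the group mean for each observation
SSB <- sum((ave(z, g) - mean(z))^2)

print(c(aWa = drop(t(a) %*% W %*% a), SSE = SSE))
print(c(aBa = drop(t(a) %*% B %*% a), SSB = SSB))
```

As a by-product, \(\mathbf{W}+\mathbf{B}\) equals the total matrix of sums of squares and cross products of the centered data.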

2.2 Obtain projections

An equivalent formulation: \[ \mathbf{v} = \text{ArgMax}_a \mathbf{a}^t\mathbf{B}\mathbf{a} \text{ subject to } \mathbf{a}^t\mathbf{W}\mathbf{a}=1. \]

This can be solved by introducing a Lagrange multiplier: \[ \mathbf{v} = \text{ArgMax}_a \mathbf{a}^t\mathbf{B}\mathbf{a} -\lambda(\mathbf{a}^t\mathbf{W}\mathbf{a}-1). \]

Calculating the partial derivative w.r.t. \(\mathbf{a}\) and setting it to zero gives \[\begin{eqnarray*} 2\mathbf{B}\mathbf{a} -2\lambda \mathbf{W}\mathbf{a} &=& 0\\ \mathbf{B}\mathbf{a} &=& \lambda \mathbf{W}\mathbf{a} \\ \mathbf{W}^{-1}\mathbf{B}\mathbf{a} &=& \lambda\mathbf{a}. \end{eqnarray*}\]

From the final equation we recognise that \(\mathbf{v}=\mathbf{a}\) is an eigenvector of \(\mathbf{W}^{-1}\mathbf{B}\), and \(\lambda\) is the corresponding eigenvalue.

The equation has in general \(\text{rank}(\mathbf{W}^{-1}\mathbf{B})\) solutions. In the case of two classes, the rank equals 1 and thus only one solution exists.
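A minimal sketch of this eigenvalue problem for two classes, again on two species of the built-in `iris` data (an illustrative assumption). It suggests that \(\mathbf{W}^{-1}\mathbf{B}\) has a single non-zero eigenvalue, that the eigenvector is proportional to \(\mathbf{W}^{-1}(\bar{\mathbf{x}}_1-\bar{\mathbf{x}}_0)\), and that it has the same direction as the `scaling` vector returned by `MASS::lda`. The helper `cosine()` is introduced only for this comparison.

```r
dat <- iris[iris$Species != "setosa", ]
X <- as.matrix(dat[, 1:4])
g <- droplevels(dat$Species)

n_k <- as.vector(table(g))
means <- rowsum(X, g) / n_k                       # group means, one row per class
W <- crossprod(X - means[as.integer(g), ])        # within SSCP matrix
B <- crossprod(sqrt(n_k) * sweep(means, 2, colMeans(X)))  # between SSCP matrix

# Eigen decomposition of the (non-symmetric) matrix W^{-1} B
eig <- eigen(solve(W) %*% B)
lambda <- Re(eig$values)
v <- Re(eig$vectors[, 1])
v <- v / sqrt(drop(t(v) %*% W %*% v))             # impose v' W v = 1

# Closed form for two classes, and the direction found by MASS::lda
v_closed <- solve(W, means[2, ] - means[1, ])
fit <- MASS::lda(x = X, grouping = g)

# Absolute cosine of the angle between two vectors (1 means same direction up to sign)
cosine <- function(a, b) abs(sum(a * b)) / sqrt(sum(a^2) * sum(b^2))

print(lambda)
print(c(closed_form = cosine(v, v_closed), lda = cosine(v, fit$scaling[, 1])))
```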

A training data set is used for the calculation of \(\mathbf{W}\) and \(\mathbf{B}\). \(\longrightarrow\) This gives the eigenvector \(\mathbf{v}\)

The training data is also used for the calculation of the centroids of the classes (e.g. the sample means, say \(\bar{\mathbf{x}}_0\) and \(\bar{\mathbf{x}}_1\)). \(\longrightarrow\) The projected centroids are given by \(\bar{\mathbf{x}}_0^t\mathbf{v}\) and \(\bar{\mathbf{x}}_1^t\mathbf{v}\).

A new observation with predictor \(\mathbf{x}\) is classified in the class for which the projected centroid is closest to the projected predictor \(z=\mathbf{x}^t\mathbf{v}\).
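The classification rule described above can be sketched as follows. This is an illustration under stated assumptions: two species of the built-in `iris` data, a random split into 70 training and 30 test observations, and a hypothetical helper `classify()`; it ignores class priors and is not the rule implemented in `MASS::predict.lda`.

```r
set.seed(42)
dat <- iris[iris$Species != "setosa", ]
X <- as.matrix(dat[, 1:4])
g <- droplevels(dat$Species)

# Random split into training and test observations
train <- sample(nrow(X), 70)
Xtr <- X[train, ]
gtr <- g[train]

# Training step: group means, W and the discriminant direction v
means <- rowsum(Xtr, gtr) / as.vector(table(gtr))
W <- crossprod(Xtr - means[as.integer(gtr), ])
v <- solve(W, means[2, ] - means[1, ])

# Projected centroids (one value per class)
z_centroids <- drop(means %*% v)

# Assign each new observation to the class with the closest projected centroid
classify <- function(x_new) {
  z <- drop(x_new %*% v)
  d <- abs(outer(z, z_centroids, "-"))    # distances to both projected centroids
  factor(levels(gtr)[apply(d, 1, which.min)], levels = levels(gtr))
}

pred <- classify(X[-train, ])
test_error <- mean(pred != g[-train])
print(table(predicted = pred, observed = g[-train]))
print(test_error)
```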

An advantage of this approach is that \(\mathbf{v}\) can be interpreted (similarly to the loadings in a PCA) in terms of which predictors \(x_j\) are important to discriminate between classes 0 and 1.

2.3 More than two classes

When the outcome \(Y\) refers to more than two classes, say \(m\) classes, then Fisher’s method is constructed in exactly the same way. Now \[ \mathbf{W}^{-1}\mathbf{B}\mathbf{a} = \lambda\mathbf{a} \] will have \(r=\text{rank}(\mathbf{W}^{-1}\mathbf{B}) = \min(m-1,p,n)\) solutions (eigenvectors and eigenvalues). (\(n\): sample size of training data)

Let \(\mathbf{v}_j\) and \(\lambda_j\) denote the \(r\) solutions, and define

\(\mathbf{V}\): \(p\times r\) matrix with columns \(\mathbf{v}_j\)

\(\mathbf{L}\): \(r \times r\) diagonal matrix with elements \(\lambda_1 > \lambda_2 > \cdots > \lambda_r\)

The \(p\)-dimensional predictor data in \(\mathbf{X}\) may then be transformed to the \(r\)-dimensional scores \[ \mathbf{Z} = \mathbf{X}\mathbf{V}. \]

For eigenvectors \(\mathbf{v}_i\) and \(\mathbf{v}_j\), it holds that \[ \text{cov}\left[Z_i,Z_j\right] = \text{cov}\left[\mathbf{X}\mathbf{v}_i,\mathbf{X}\mathbf{v}_j\right]= \mathbf{v}_i^t \mathbf{W} \mathbf{v}_j = \delta_{ij} , \] in which the covariances are defined within groups. Hence, within the groups (classes) the scores are uncorrelated.
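The multi-class construction can be illustrated on the three species of the built-in `iris` data (an assumption for illustration). Instead of decomposing the non-symmetric matrix \(\mathbf{W}^{-1}\mathbf{B}\) directly, the sketch uses an equivalent symmetric problem based on the Cholesky factor of \(\mathbf{W}\); this is one possible numerical route, not necessarily the one used by any particular package. It then checks that \(\mathbf{V}^t\mathbf{W}\mathbf{V}\) is the identity matrix and that \(\mathbf{V}^t\mathbf{B}\mathbf{V}\) is diagonal with the eigenvalues on the diagonal.

```r
X <- as.matrix(iris[, 1:4])
g <- iris$Species

n_k <- as.vector(table(g))
means <- rowsum(X, g) / n_k
W <- crossprod(X - means[as.integer(g), ])
B <- crossprod(sqrt(n_k) * sweep(means, 2, colMeans(X)))

# With W = R'R (Cholesky), the eigenvectors u of R^{-T} B R^{-1} are orthonormal,
# and v = R^{-1} u solves W^{-1} B v = lambda v with v' W v = 1.
R <- chol(W)
Rinv <- solve(R)
eig <- eigen(t(Rinv) %*% B %*% Rinv, symmetric = TRUE)

r <- min(nlevels(g) - 1, ncol(X), nrow(X))   # number of discriminants
V <- Rinv %*% eig$vectors[, 1:r]
lambda <- eig$values[1:r]

# Scores on the r Fisher discriminants
Z <- X %*% V

print(round(t(V) %*% W %*% V, 8))            # approximately the identity matrix
print(lambda)
```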

3 High dimensional predictors

With high-dimensional predictors (\(p > n\)) the matrix \(\mathbf{W}\) is singular, so that \(\mathbf{W}^{-1}\) does not exist. Two remedies:

- Replace the \(p\times p\) matrices \(\mathbf{W}\) and \(\mathbf{B}\) by their diagonal matrices (i.e. put zeroes on the off-diagonal positions).

- Sparse LDA by imposing an \(L_1\)-penalty on \(\mathbf{v}\). Two approaches: Zou et al. (2006), Journal of Computational and Graphical Statistics, 15, 265-286, and Clemmensen et al. (2011), Technometrics, 53.
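The first remedy can be sketched on simulated data with more variables than observations. Everything in this block is an assumption made for illustration: the data are simulated (the first 10 of 500 variables carry a mean shift), and for two classes the direction is taken as \(\text{diag}(\mathbf{W})^{-1}(\bar{\mathbf{x}}_1-\bar{\mathbf{x}}_0)\), by analogy with the two-class solution of the full problem.

```r
set.seed(123)
n <- 40
p <- 500
g <- factor(rep(c(0, 1), each = n / 2))

# Simulated predictors; only the first 10 variables differ between the classes
X <- matrix(rnorm(n * p), n, p)
X[g == 1, 1:10] <- X[g == 1, 1:10] + 2

n_k <- as.vector(table(g))
means <- rowsum(X, g) / n_k
Xc <- X - means[as.integer(g), ]
W <- crossprod(Xc)

# W is p x p, but its rank is at most n - 2, so it cannot be inverted
rank_W <- qr(W)$rank

# Diagonal LDA: keep only the diagonal of W, which is trivially invertible
w_diag <- diag(W)
v_diag <- (means[2, ] - means[1, ]) / w_diag

# Variables with the largest absolute loadings
top10 <- order(abs(v_diag), decreasing = TRUE)[1:10]

print(c(rank_W = rank_W, p = p))
print(sort(top10))
```

Only the \(p\) diagonal elements are needed, so in practice `colSums(Xc^2)` can replace the full \(p \times p\) matrix.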

4 Breast cancer example

4.1 All genes

4.1.1 LDA

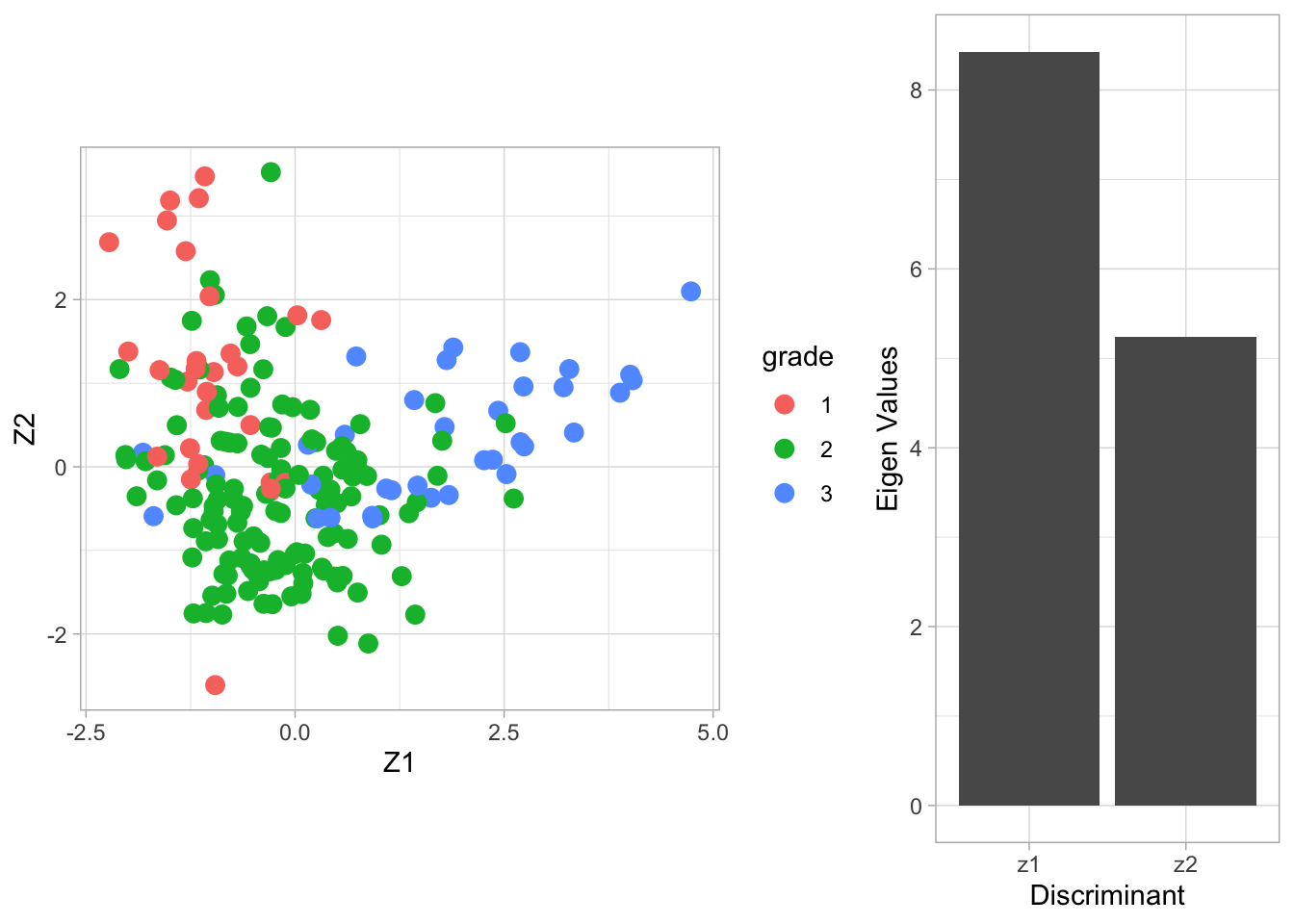

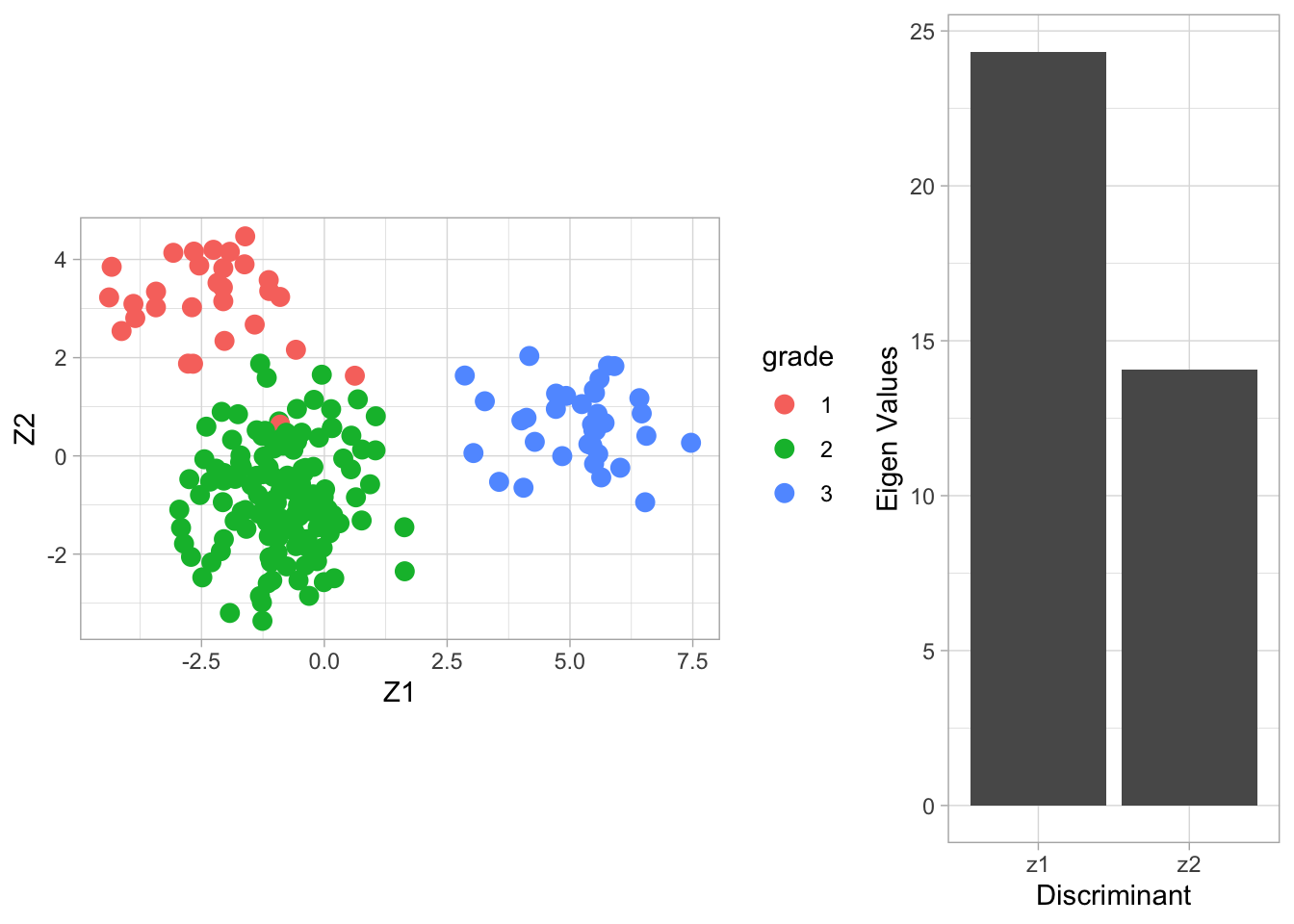

Fisher’s LDA is illustrated on the breast cancer data with all three tumor stages as outcome.

We try to discriminate between the different stages according to the gene expression data of all genes.

We cache the result because the calculation takes 10 minutes.
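The call that produces `breast.lda` is not shown in these notes. By analogy with the call `MASS::lda(x = X2, grouping = Y)` in section 4.3, it presumably has the form `MASS::lda(x = X, grouping = Y)`; this is an assumption. The sketch below shows one possible way to cache a slow fit on disk with `saveRDS()` and `readRDS()`. The helper `cached()` and the file name are hypothetical, and the built-in `iris` data stand in for the breast cancer data so that the example runs quickly.

```r
# Hypothetical helper: evaluate fit_fun() once, store the result, reload it afterwards
cached <- function(file, fit_fun) {
  if (file.exists(file)) {
    return(readRDS(file))
  }
  out <- fit_fun()
  saveRDS(out, file)
  out
}

# Hypothetical cache file in the session's temporary directory
cache_file <- file.path(tempdir(), "lda_fit_example.rds")
if (file.exists(cache_file)) file.remove(cache_file)

# First call: the model is fitted and written to disk
fit1 <- cached(cache_file, function() {
  MASS::lda(x = as.matrix(iris[, 1:4]), grouping = iris$Species)
})

# Second call: the function is not evaluated, the stored fit is read instead
fit2 <- cached(cache_file, function() stop("not evaluated: result comes from the cache"))

print(all.equal(fit1$scaling, fit2$scaling))
```

In an R Markdown document the chunk option `cache = TRUE` of knitr is an alternative.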

## Warning in lda.default(x, grouping, ...): variables are collinear

Vlda <- breast.lda$scaling

colnames(Vlda) <- paste0("V",1:ncol(Vlda))

Zlda <- X%*%Vlda

colnames(Zlda) <- paste0("Z",1:ncol(Zlda))

grid.arrange(

Zlda %>%

as.data.frame %>%

mutate(grade = Y %>% as.factor) %>%

ggplot(aes(x= Z1, y = Z2, color = grade)) +

geom_point(size = 3) +

coord_fixed(),

ggplot() +

geom_bar(aes(x = c("z1","z2"), y = breast.lda$svd), stat = "identity") +

xlab("Discriminant") +

ylab("Eigen Values"),

layout_matrix = matrix(

c(1,1,2),

nrow=1)

)

The columns of the matrix \(\mathbf{V}\) contain the eigenvectors. There are \(\min(3-1,22283,200)=2\) eigenvectors. The \(200\times 2\) matrix \(\mathbf{Z}\) contains the scores on the two Fisher discriminants.

The eigenvalue \(\lambda_j\) can be interpreted as the ratio \[ \frac{\mathbf{v}_j^t\mathbf{B}\mathbf{v}_j}{\mathbf{v}_j^t\mathbf{W}\mathbf{v}_j} , \] or (upon using \(\mathbf{v}_j^t\mathbf{W}\mathbf{v}_j=1\)) the between-centroid sum of squares (in the reduced dimension space of the Fisher discriminants) \[ \mathbf{v}_j^t\mathbf{B}\mathbf{v}_j. \]
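The ratio interpretation can be verified numerically. The sketch below uses the built-in `iris` data (an illustrative assumption) and compares the ratio computed from the `scaling` matrix of `MASS::lda` with the eigenvalues of \(\mathbf{W}^{-1}\mathbf{B}\). It also looks at the `svd` component of the fit, which the MASS documentation describes as the ratio of the between-group and within-group standard deviations on the discriminants. In this example the squared values should equal \(\lambda_j (n-m)/(m-1)\), so they are proportional to the eigenvalues rather than equal to them.

```r
X <- as.matrix(iris[, 1:4])
g <- iris$Species
n <- nrow(X)
m <- nlevels(g)

n_k <- as.vector(table(g))
means <- rowsum(X, g) / n_k
W <- crossprod(X - means[as.integer(g), ])
B <- crossprod(sqrt(n_k) * sweep(means, 2, colMeans(X)))

fit <- MASS::lda(x = X, grouping = g)
V <- fit$scaling

# Ratio of between to within sum of squares for each discriminant
ratio <- diag(t(V) %*% B %*% V) / diag(t(V) %*% W %*% V)

# Non-zero eigenvalues of W^{-1} B
lambda <- Re(eigen(solve(W) %*% B)$values)[1:(m - 1)]

# Squared singular values reported by MASS::lda
F_stat <- fit$svd^2

print(rbind(ratio = unname(ratio), lambda = lambda))
print(rbind(F_stat = F_stat, rescaled_lambda = lambda * (n - m) / (m - 1)))
```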

From the screeplot of the eigenvalues we see that the first dimension is more important than the second (not hugely) in terms of discriminating between the groups.

From the scatterplot we can see that there is no perfect separation (discrimination) between the three tumor stages (quite some overlap).

To some extent the first Fisher discriminant dimension discriminates stage 3 (green dots) from the other two stages, and the second dimension separates stage 1 (black dots) from the two others.

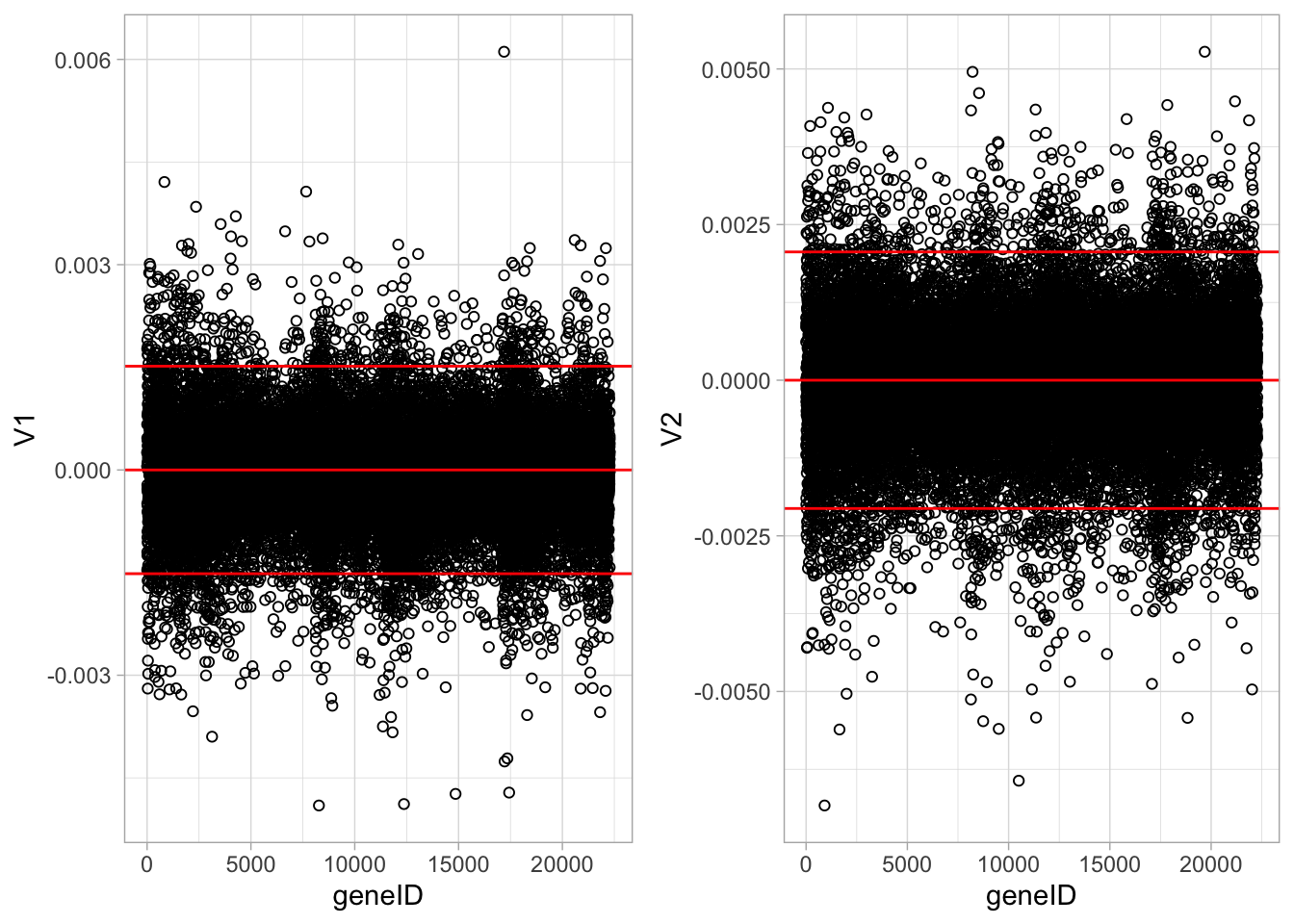

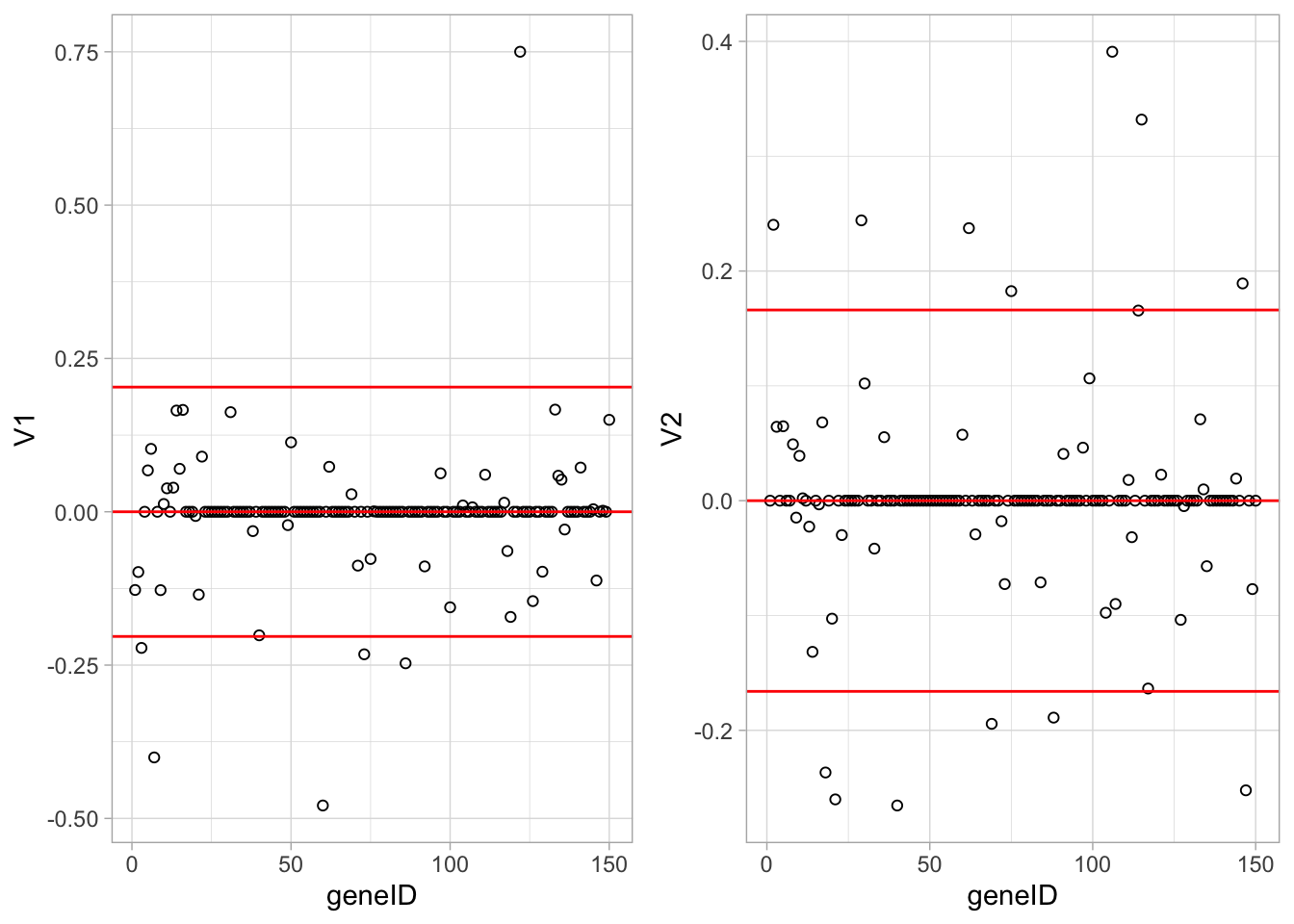

4.1.2 Interpretation of loadings

grid.arrange(

Vlda %>%

as.data.frame %>%

mutate(geneID = 1:nrow(Vlda)) %>%

ggplot(aes(x = geneID, y = V1)) +

geom_point(pch=21) +

geom_hline(yintercept = c(-2,0,2)*sd(Vlda[,1]), col = "red"),

Vlda %>%

as.data.frame %>%

mutate(geneID = 1:nrow(Vlda)) %>%

ggplot(aes(x = geneID, y = V2)) +

geom_point(pch=21) +

geom_hline(yintercept = c(-2,0,2)*sd(Vlda[,2]), col = "red"),

ncol = 2)

The loadings of the Fisher discriminants are within the columns of the \(\mathbf{V}\) matrix.

Since we have 22283 genes, each discriminant is a linear combination of 22283 gene expressions. Instead of looking at the listing of 22283 loadings, we made an index plot (no particular ordering of genes on horizontal axis).

The red horizontal reference lines correspond to the average of the loading (close to zero) and the average plus and minus twice the standard deviation of the loadings.

If no genes had any “significant” discriminating power, then we would expect approximately \(95\%\) of all loadings within the band. Thus loadings outside of the band are of potential interest and may perhaps be discriminating between the three tumor stages.
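The band rule can also be applied programmatically. The sketch below uses simulated loadings (an assumption; they stand in for one column of \(\mathbf{V}\)) in which 20 variables were given clearly larger loadings. As in the plotting code, the band is taken as zero plus and minus twice the standard deviation of the loadings.

```r
set.seed(2021)

# Simulated loadings standing in for one column of V (illustration only)
loadings <- rnorm(10000, mean = 0, sd = 0.01)
loadings[1:20] <- loadings[1:20] + 0.08     # a few variables with larger loadings

# Band of plus and minus twice the standard deviation around zero
band <- c(-2, 2) * sd(loadings)

# Indices of the loadings outside the band
outside <- which(loadings < band[1] | loadings > band[2])
prop_outside <- length(outside) / length(loadings)

print(band)
print(prop_outside)
print(head(outside[order(abs(loadings[outside]), decreasing = TRUE)], 10))
```

Roughly 5% of the loadings fall outside the band even without any signal, so the rule is a screening device and not a formal test.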

In the graphs presented here we see many loadings within the bands, but also many outside of the band.

We repeat the analysis, but now with the sparse LDA method of Clemmensen et al. (2011).

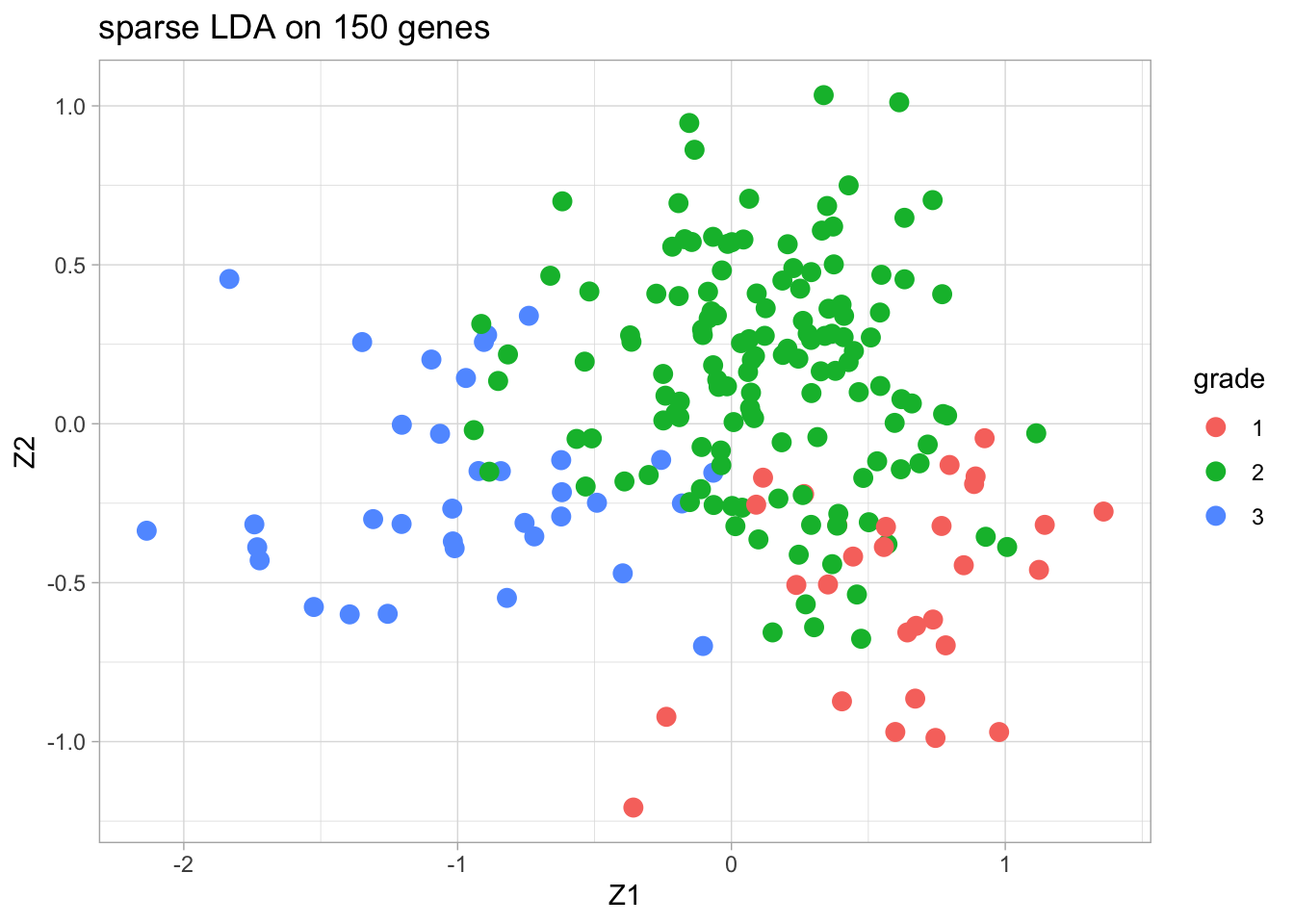

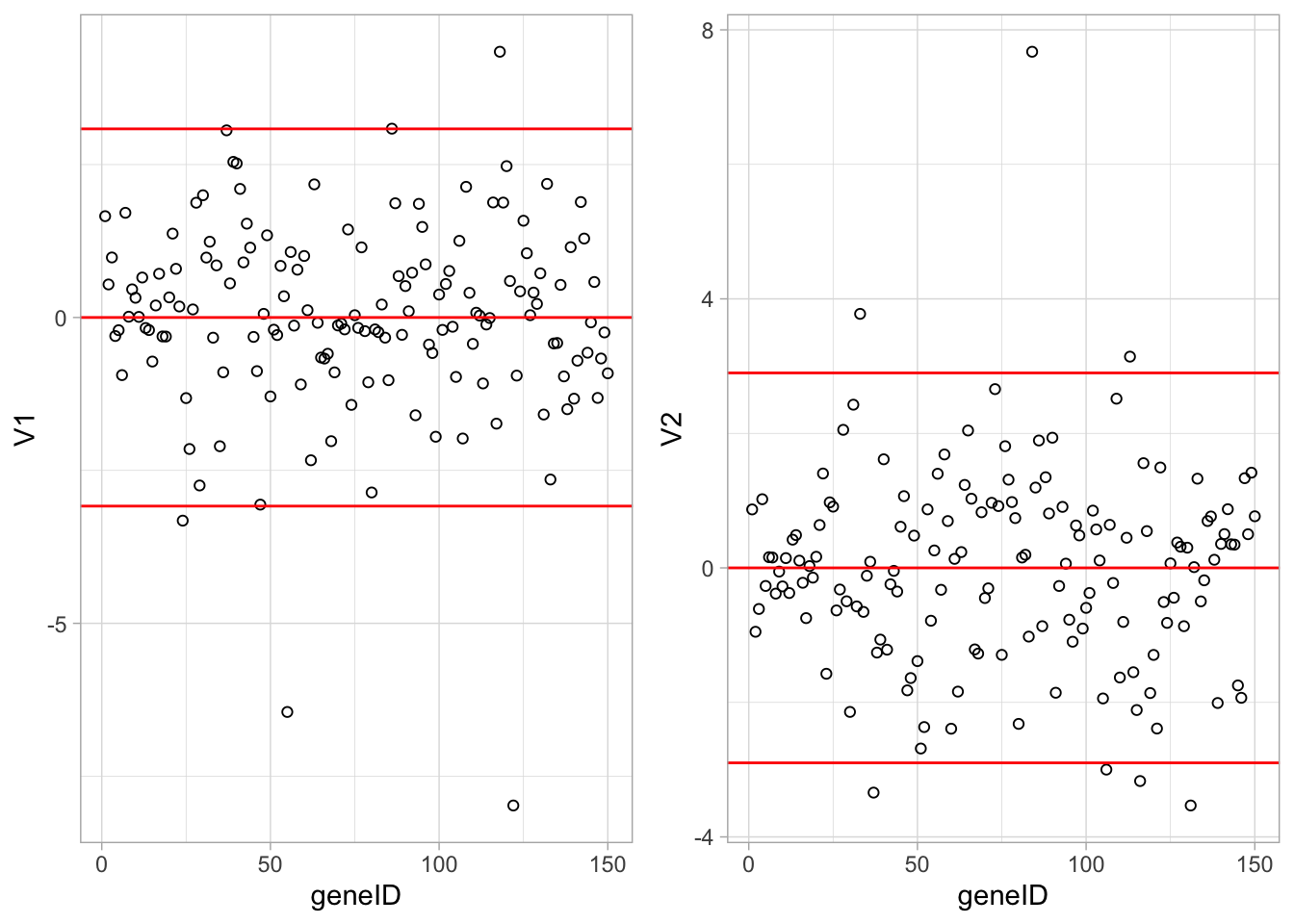

4.2 Sparse LDA based on 150 random genes

We only present the results of the sparse LDA based on a random subset of 150 genes (ordering of genes in datamatrix is random).

The discrimination seems better than with classical LDA based on all genes. This is very likely caused by too much noise in the full data matrix with over 20000 predictors.

library(sparseLDA)

YDummy <- data.frame(

Y1 = ifelse(Y == 1, 1, 0),

Y2 = ifelse(Y == 2, 1, 0),

Y3 = ifelse(Y == 3, 1, 0)

)

X2 <- X[,1:150]

breast.slda <- sda(x = X2,

y = as.matrix(YDummy),

lambda = 1e-6,

stop = -50,

maxIte = 25,

trace = TRUE)

## ite: 1 ridge cost: 95.10454 |b|_1: 5.317484

## ite: 2 ridge cost: 89.93901 |b|_1: 5.733867

## ite: 3 ridge cost: 89.0722 |b|_1: 5.730551

## ite: 4 ridge cost: 87.91059 |b|_1: 5.897303

## ite: 5 ridge cost: 87.87613 |b|_1: 5.888929

## ite: 6 ridge cost: 88.25646 |b|_1: 5.813408

## ite: 7 ridge cost: 87.70664 |b|_1: 5.915829

## ite: 8 ridge cost: 87.89204 |b|_1: 5.880471

## ite: 9 ridge cost: 87.81662 |b|_1: 5.894634

## ite: 10 ridge cost: 87.77749 |b|_1: 5.902013

## ite: 11 ridge cost: 87.7695 |b|_1: 5.903517

## ite: 12 ridge cost: 87.77567 |b|_1: 5.90235

## ite: 13 ridge cost: 87.77871 |b|_1: 5.901775

## ite: 14 ridge cost: 87.78021 |b|_1: 5.901491

## ite: 15 ridge cost: 87.78094 |b|_1: 5.901351

## ite: 16 ridge cost: 87.78131 |b|_1: 5.901282

## ite: 17 ridge cost: 87.78149 |b|_1: 5.901248

## ite: 18 ridge cost: 87.78158 |b|_1: 5.901232

## ite: 19 ridge cost: 87.78162 |b|_1: 5.901223

## ite: 1 ridge cost: 134.7777 |b|_1: 5.379261

## ite: 2 ridge cost: 134.7777 |b|_1: 5.379261

## final update, total ridge cost: 222.5594 |b|_1: 11.28048

Vsda <- matrix(0, nrow=ncol(X2), ncol=2)

Vsda[breast.slda$varIndex,] <- breast.slda$beta

colnames(Vsda) <- paste0("V",1:ncol(Vsda))

Zsda <- X2%*%Vsda

colnames(Zsda) <- paste0("Z",1:ncol(Zsda))

Zsda %>%

as.data.frame %>%

mutate(grade = Y %>% as.factor) %>%

ggplot(aes(x= Z1, y = Z2, color = grade)) +

geom_point(size = 3) +

ggtitle("sparse LDA on 150 genes")

grid.arrange(

Vsda %>%

as.data.frame %>%

mutate(geneID = 1:nrow(Vsda)) %>%

ggplot(aes(x = geneID, y = V1)) +

geom_point(pch=21) +

geom_hline(yintercept = c(-2,0,2)*sd(Vsda[,1]), col = "red") ,

Vsda %>%

as.data.frame %>%

mutate(geneID = 1:nrow(Vsda)) %>%

ggplot(aes(x = geneID, y = V2)) +

geom_point(pch=21) +

geom_hline(yintercept = c(-2,0,2)*sd(Vsda[,2]), col = "red"),

ncol = 2)
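In the code above, the coefficients in `breast.slda$beta` are placed in the rows `breast.slda$varIndex` of a zero matrix `Vsda`, so that unselected genes get a loading of exactly zero. The toy sketch below illustrates this zero-filling step; the indices, coefficients and data are all made up for the illustration and do not come from the sparse LDA fit.

```r
p <- 8                                  # number of predictors (toy value)
var_index <- c(2, 5, 7)                 # hypothetical indices of the selected predictors

# Hypothetical coefficients: 3 selected predictors (rows) x 2 discriminants (columns)
beta <- matrix(c(0.4, -1.2, 0.3,
                 0.0,  0.7, -0.5), ncol = 2)

# Full loading matrix: zero everywhere except in the selected rows
V_full <- matrix(0, nrow = p, ncol = ncol(beta))
V_full[var_index, ] <- beta

# The scores are identical whether all predictors or only the selected ones are used
set.seed(3)
X_toy <- matrix(rnorm(5 * p), nrow = 5)
Z_full <- X_toy %*% V_full
Z_sel <- X_toy[, var_index] %*% beta

print(V_full)
print(max(abs(Z_full - Z_sel)))
```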

4.3 LDA based on 150 random genes

breast.lda150 <- MASS::lda(x = X2, grouping = Y)

Vlda <- breast.lda150$scaling

colnames(Vlda) <- paste0("V",1:ncol(Vlda))

Zlda <- X2%*%Vlda

colnames(Zlda) <- paste0("Z",1:ncol(Zlda))

grid.arrange(

Zlda %>%

as.data.frame %>%

mutate(grade = Y %>% as.factor) %>%

ggplot(aes(x= Z1, y = Z2, color = grade)) +

geom_point(size = 3) +

coord_fixed(),

ggplot() +

geom_bar(aes(x = c("z1","z2"), y = breast.lda150$svd), stat = "identity") +

xlab("Discriminant") +

ylab("Eigen Values"),

layout_matrix = matrix(

c(1,1,2),

nrow=1)

)

grid.arrange(

Vlda %>%

as.data.frame %>%

mutate(geneID = 1:nrow(Vlda)) %>%

ggplot(aes(x = geneID, y = V1)) +

geom_point(pch=21) +

geom_hline(yintercept = c(-2,0,2)*sd(Vlda[,1]), col = "red"),

Vlda %>%

as.data.frame %>%

mutate(geneID = 1:nrow(Vlda)) %>%

ggplot(aes(x = geneID, y = V2)) +

geom_point(pch=21) +

geom_hline(yintercept = c(-2,0,2)*sd(Vlda[,2]), col = "red"),

ncol = 2)

4.4 Wrapup

LDA on all 22283 genes gave a poorer result than LDA on 150 genes. This is probably caused by numerical instability when working with large \(\mathbf{W}\) and \(\mathbf{B}\) matrices.

Sparse LDA gave a slightly poorer result than LDA on the subset of 150 genes. This may be caused by overfitting of the LDA.

When (sparse) LDA is used to build a prediction model/classifier, then CV methods, or splitting of dataset into training and test datasets should be used to allow for an honest evaluation of the final prediction model.
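A minimal sketch of such an evaluation with \(K\)-fold cross-validation, using `MASS::lda` on the built-in `iris` data (an illustrative assumption; the number of folds and the seed are arbitrary choices). Each observation is predicted by a model that was fitted without it, and the resulting error rate is compared with the optimistic error rate obtained on the training data itself.

```r
set.seed(2021)
X <- as.matrix(iris[, 1:4])
y <- iris$Species

# Random assignment of the observations to K folds
K <- 5
fold <- sample(rep(1:K, length.out = nrow(X)))

# Out-of-fold predictions
pred <- factor(rep(NA, nrow(X)), levels = levels(y))
for (k in 1:K) {
  test <- fold == k
  fit <- MASS::lda(x = X[!test, ], grouping = y[!test])
  pred[test] <- predict(fit, newdata = X[test, ])$class
}
cv_error <- mean(pred != y)

# Error on the training data itself (optimistic)
fit_all <- MASS::lda(x = X, grouping = y)
resub_error <- mean(predict(fit_all, newdata = X)$class != y)

print(c(cv_error = cv_error, resub_error = resub_error))
```

When tuning parameters are involved, as in sparse LDA, their selection would have to be repeated inside each training fold.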

The graphs in the first two Fisher discriminant dimensions shown on the previous slides should only be used for data exploration.

When the objective is to try to understand differences between groups in a high dimensional space, Fisher LDA is preferred over PCA.

Acknowledgement

- Olivier Thas for sharing his materials of Analysis of High Dimensional Data 2019-2020, which I used as the starting point for this chapter.

Session info


## [1] "2021-05-11 14:37:15 UTC"

## ─ Session info ───────────────────────────────────────────────────────────────

## setting value

## version R version 4.0.5 (2021-03-31)

## os macOS Catalina 10.15.7

## system x86_64, darwin17.0

## ui X11

## language (EN)

## collate en_US.UTF-8

## ctype en_US.UTF-8

## tz UTC

## date 2021-05-11

##

## ─ Packages ───────────────────────────────────────────────────────────────────

## package * version date lib

## AIMS * 1.22.0 2020-10-27 [1]

## amap 0.8-18 2019-12-12 [1]

## AnnotationDbi 1.52.0 2020-10-27 [1]

## AnnotationHub * 2.22.1 2021-04-16 [1]

## askpass 1.1 2019-01-13 [1]

## assertthat 0.2.1 2019-03-21 [1]

## backports 1.2.1 2020-12-09 [1]

## beachmat 2.6.4 2020-12-20 [1]

## beeswarm 0.3.1 2021-03-07 [1]

## Biobase * 2.50.0 2020-10-27 [1]

## BiocFileCache * 1.14.0 2020-10-27 [1]

## BiocGenerics * 0.36.1 2021-04-16 [1]

## BiocManager 1.30.15 2021-05-11 [1]

## BiocNeighbors 1.8.2 2020-12-07 [1]

## BiocParallel 1.24.1 2020-11-06 [1]

## BiocSingular 1.6.0 2020-10-27 [1]

## BiocVersion 3.12.0 2020-05-14 [1]

## biomaRt * 2.46.3 2021-02-11 [1]

## bit 4.0.4 2020-08-04 [1]

## bit64 4.0.5 2020-08-30 [1]

## bitops 1.0-7 2021-04-24 [1]

## blob 1.2.1 2020-01-20 [1]

## boot * 1.3-28 2021-05-03 [1]

## bootstrap 2019.6 2019-06-17 [1]

## breastCancerMAINZ * 1.28.0 2020-10-29 [1]

## broom 0.7.6 2021-04-05 [1]

## bslib 0.2.4 2021-01-25 [1]

## cachem 1.0.4 2021-02-13 [1]

## callr 3.7.0 2021-04-20 [1]

## CCA * 1.2.1 2021-03-01 [1]

## cellranger 1.1.0 2016-07-27 [1]

## class 7.3-18 2021-01-24 [2]

## cli 2.5.0 2021-04-26 [1]

## cluster * 2.1.2 2021-04-17 [1]

## codetools 0.2-18 2020-11-04 [2]

## colorspace 2.0-1 2021-05-04 [1]

## crayon 1.4.1 2021-02-08 [1]

## curl 4.3.1 2021-04-30 [1]

## DBI 1.1.1 2021-01-15 [1]

## dbplyr * 2.1.1 2021-04-06 [1]

## DelayedArray 0.16.3 2021-03-24 [1]

## DelayedMatrixStats 1.12.3 2021-02-03 [1]

## desc 1.3.0 2021-03-05 [1]

## devtools 2.4.1 2021-05-05 [1]

## digest 0.6.27 2020-10-24 [1]

## dotCall64 * 1.0-1 2021-02-11 [1]

## dplyr * 1.0.6 2021-05-05 [1]

## e1071 * 1.7-6 2021-03-18 [1]

## elasticnet 1.3 2020-05-15 [1]

## ellipsis 0.3.2 2021-04-29 [1]

## evaluate 0.14 2019-05-28 [1]

## ExperimentHub * 1.16.1 2021-04-16 [1]

## fansi 0.4.2 2021-01-15 [1]

## farver 2.1.0 2021-02-28 [1]

## fastmap 1.1.0 2021-01-25 [1]

## fda * 5.1.9 2020-12-16 [1]

## fds * 1.8 2018-10-31 [1]

## fields * 11.6 2020-10-09 [1]

## forcats * 0.5.1 2021-01-27 [1]

## foreach 1.5.1 2020-10-15 [1]

## fs 1.5.0 2020-07-31 [1]

## genefu * 2.22.1 2021-01-26 [1]

## generics 0.1.0 2020-10-31 [1]

## GenomeInfoDb * 1.26.7 2021-04-08 [1]

## GenomeInfoDbData 1.2.4 2021-05-11 [1]

## GenomicRanges * 1.42.0 2020-10-27 [1]

## ggbeeswarm 0.6.0 2017-08-07 [1]

## ggbiplot * 0.55 2021-05-11 [1]

## ggplot2 * 3.3.3 2020-12-30 [1]

## GIGrvg 0.5 2017-06-10 [1]

## git2r 0.28.0 2021-01-10 [1]

## glmnet * 4.1-1 2021-02-21 [1]

## glue 1.4.2 2020-08-27 [1]

## gridExtra * 2.3 2017-09-09 [1]

## gtable 0.3.0 2019-03-25 [1]

## haven 2.4.1 2021-04-23 [1]

## hdrcde 3.4 2021-01-18 [1]

## highr 0.9 2021-04-16 [1]

## hms 1.0.0 2021-01-13 [1]

## htmltools 0.5.1.1 2021-01-22 [1]

## httpuv 1.6.1 2021-05-07 [1]

## httr 1.4.2 2020-07-20 [1]

## HyperbolicDist 0.6-2 2009-09-23 [1]

## iC10 * 1.5 2019-02-08 [1]

## iC10TrainingData * 1.3.1 2018-08-24 [1]

## impute * 1.64.0 2020-10-27 [1]

## interactiveDisplayBase 1.28.0 2020-10-27 [1]

## IRanges * 2.24.1 2020-12-12 [1]

## irlba 2.3.3 2019-02-05 [1]

## iterators 1.0.13 2020-10-15 [1]

## jpeg 0.1-8.1 2019-10-24 [1]

## jquerylib 0.1.4 2021-04-26 [1]

## jsonlite 1.7.2 2020-12-09 [1]

## KernSmooth 2.23-18 2020-10-29 [2]

## knitr 1.33 2021-04-24 [1]

## ks 1.12.0 2021-02-07 [1]

## labeling 0.4.2 2020-10-20 [1]

## lars 1.2 2013-04-24 [1]

## later 1.2.0 2021-04-23 [1]

## lattice 0.20-41 2020-04-02 [2]

## lava 1.6.9 2021-03-11 [1]

## lifecycle 1.0.0 2021-02-15 [1]

## limma * 3.46.0 2020-10-27 [1]

## lubridate 1.7.10 2021-02-26 [1]

## magrittr 2.0.1 2020-11-17 [1]

## maps 3.3.0 2018-04-03 [1]

## MASS * 7.3-53.1 2021-02-12 [2]

## Matrix * 1.3-2 2021-01-06 [2]

## MatrixGenerics * 1.2.1 2021-01-30 [1]

## matrixStats * 0.58.0 2021-01-29 [1]

## mclust * 5.4.7 2020-11-20 [1]

## mda 0.5-2 2020-06-29 [1]

## memoise 2.0.0 2021-01-26 [1]

## mime 0.10 2021-02-13 [1]

## misc3d 0.9-0 2020-09-06 [1]

## modelr 0.1.8 2020-05-19 [1]

## munsell 0.5.0 2018-06-12 [1]

## muscData * 1.4.0 2020-10-29 [1]

## mvtnorm 1.1-1 2020-06-09 [1]

## NormalBetaPrime * 2.2 2019-01-19 [1]

## openssl 1.4.4 2021-04-30 [1]

## pamr * 1.56.1 2019-04-22 [1]

## pcaPP * 1.9-74 2021-04-23 [1]

## pillar 1.6.0 2021-04-13 [1]

## pkgbuild 1.2.0 2020-12-15 [1]

## pkgconfig 2.0.3 2019-09-22 [1]

## pkgload 1.2.1 2021-04-06 [1]

## plot3D * 1.3 2019-12-18 [1]

## pls * 2.7-3 2020-08-07 [1]

## plyr * 1.8.6 2020-03-03 [1]

## pracma 2.3.3 2021-01-23 [1]

## prettyunits 1.1.1 2020-01-24 [1]

## processx 3.5.2 2021-04-30 [1]

## prodlim * 2019.11.13 2019-11-17 [1]

## progress 1.2.2 2019-05-16 [1]

## promises 1.2.0.1 2021-02-11 [1]

## proxy 0.4-25 2021-03-05 [1]

## ps 1.6.0 2021-02-28 [1]

## pscl 1.5.5 2020-03-07 [1]

## purrr * 0.3.4 2020-04-17 [1]

## R6 2.5.0 2020-10-28 [1]

## rainbow * 3.6 2019-01-29 [1]

## rappdirs 0.3.3 2021-01-31 [1]

## Rcpp 1.0.6 2021-01-15 [1]

## RCurl * 1.98-1.3 2021-03-16 [1]

## readr * 1.4.0 2020-10-05 [1]

## readxl 1.3.1 2019-03-13 [1]

## remotes 2.3.0 2021-04-01 [1]

## reprex 2.0.0 2021-04-02 [1]

## rlang 0.4.11 2021-04-30 [1]

## rmarkdown 2.8 2021-05-07 [1]

## rmeta 3.0 2018-03-20 [1]

## rprojroot 2.0.2 2020-11-15 [1]

## RSQLite 2.2.7 2021-04-22 [1]

## rstudioapi 0.13 2020-11-12 [1]

## rsvd 1.0.5 2021-04-16 [1]

## rvest 1.0.0 2021-03-09 [1]

## S4Vectors * 0.28.1 2020-12-09 [1]

## sass 0.3.1 2021-01-24 [1]

## scales * 1.1.1 2020-05-11 [1]

## scater * 1.18.6 2021-02-26 [1]

## scuttle 1.0.4 2020-12-17 [1]

## sessioninfo 1.1.1 2018-11-05 [1]

## shape 1.4.5 2020-09-13 [1]

## shiny 1.6.0 2021-01-25 [1]

## SingleCellExperiment * 1.12.0 2020-10-27 [1]

## spam * 2.6-0 2020-12-14 [1]

## sparseLDA * 0.1-9 2016-09-22 [1]

## sparseMatrixStats 1.2.1 2021-02-02 [1]

## stringi 1.6.1 2021-05-10 [1]

## stringr * 1.4.0 2019-02-10 [1]

## SummarizedExperiment * 1.20.0 2020-10-27 [1]

## SuppDists 1.1-9.5 2020-01-18 [1]

## survcomp * 1.40.0 2020-10-27 [1]

## survival * 3.2-10 2021-03-16 [2]

## survivalROC 1.0.3 2013-01-13 [1]

## testthat 3.0.2 2021-02-14 [1]

## tibble * 3.1.1 2021-04-18 [1]

## tidyr * 1.1.3 2021-03-03 [1]

## tidyselect 1.1.1 2021-04-30 [1]

## tidyverse * 1.3.1 2021-04-15 [1]

## tinytex 0.31 2021-03-30 [1]

## truncnorm 1.0-8 2018-02-27 [1]

## usethis 2.0.1 2021-02-10 [1]

## utf8 1.2.1 2021-03-12 [1]

## vctrs 0.3.8 2021-04-29 [1]

## vipor 0.4.5 2017-03-22 [1]

## viridis 0.6.1 2021-05-11 [1]

## viridisLite 0.4.0 2021-04-13 [1]

## withr 2.4.2 2021-04-18 [1]

## xfun 0.22 2021-03-11 [1]

## XML 3.99-0.6 2021-03-16 [1]

## xml2 1.3.2 2020-04-23 [1]

## xtable 1.8-4 2019-04-21 [1]

## XVector 0.30.0 2020-10-28 [1]

## yaml 2.2.1 2020-02-01 [1]

## zlibbioc 1.36.0 2020-10-28 [1]

##

## [1] /Users/runner/work/_temp/Library

## [2] /Library/Frameworks/R.framework/Versions/4.0/Resources/library