The CPTAC A vs B dataset

Our first case-study is a subset of the data of the 6th study of the

Clinical Proteomic Technology Assessment for Cancer (CPTAC). In this

experiment, the authors spiked the Sigma Universal Protein Standard

mixture 1 (UPS1) containing 48 different human proteins in a protein

background of 60 ng/μL Saccharomyces cerevisiae strain BY4741 (MATa,

leu2Δ0, met15Δ0, ura3Δ0, his3Δ1). Two different spike-in

concentrations were used: 6A (0.25 fmol UPS1 proteins/μL) and 6B (0.74

fmol UPS1 proteins/μL) [5]. We limited ourselves to the data of

LTQ-Orbitrap W at site 56. The data were searched with MaxQuant

version 1.5.2.8, and detailed search settings were described in

(Goeminne, Gevaert, and Clement 2016). Three replicates are available for each

concentration.

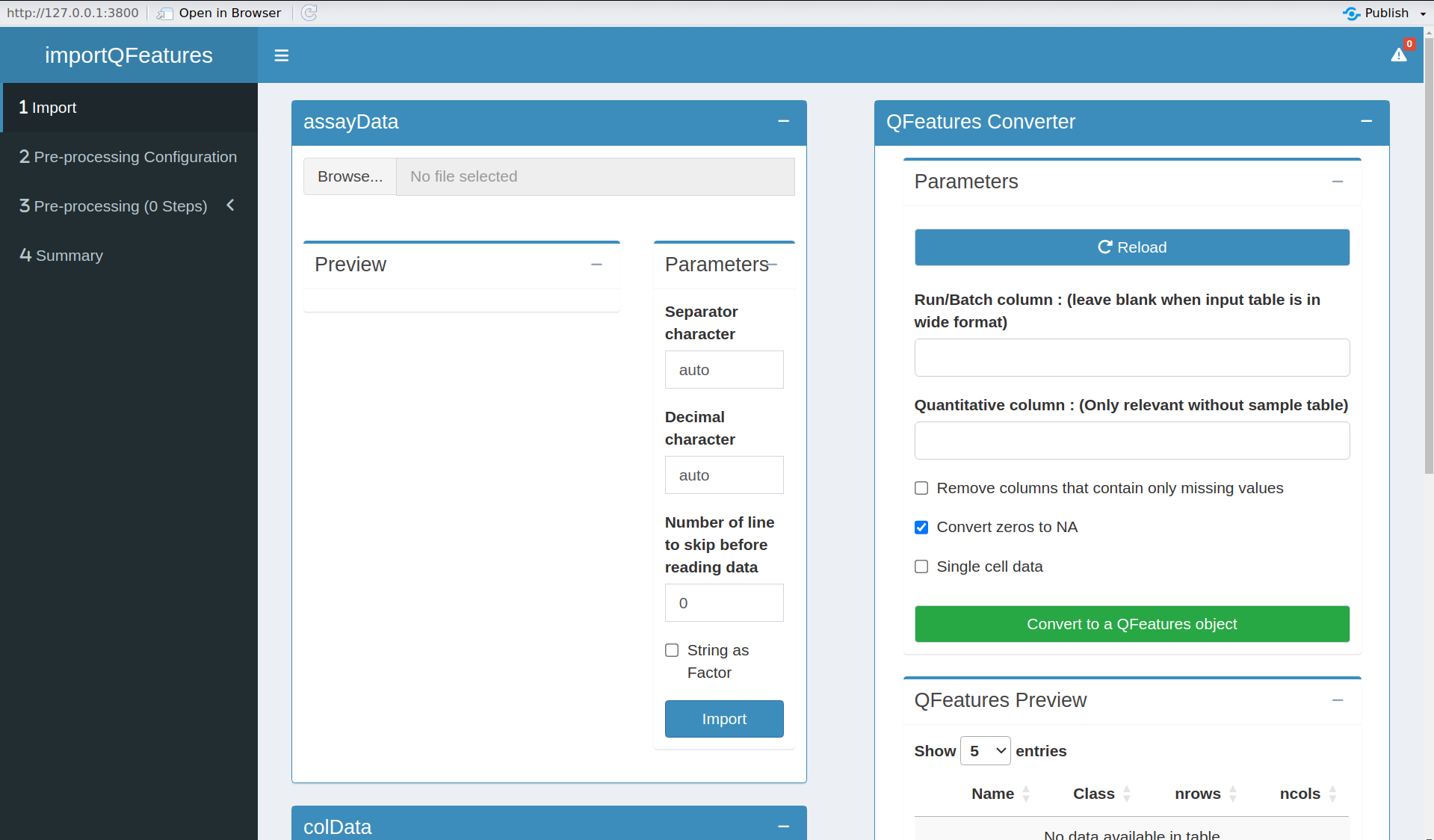

Data preprocessing

Before analysing the data, we must perform data preprocessing. The

will ensure the data meet our model assumptions. Data import and

preprocessing is performed by the QFeaturesGUI application.

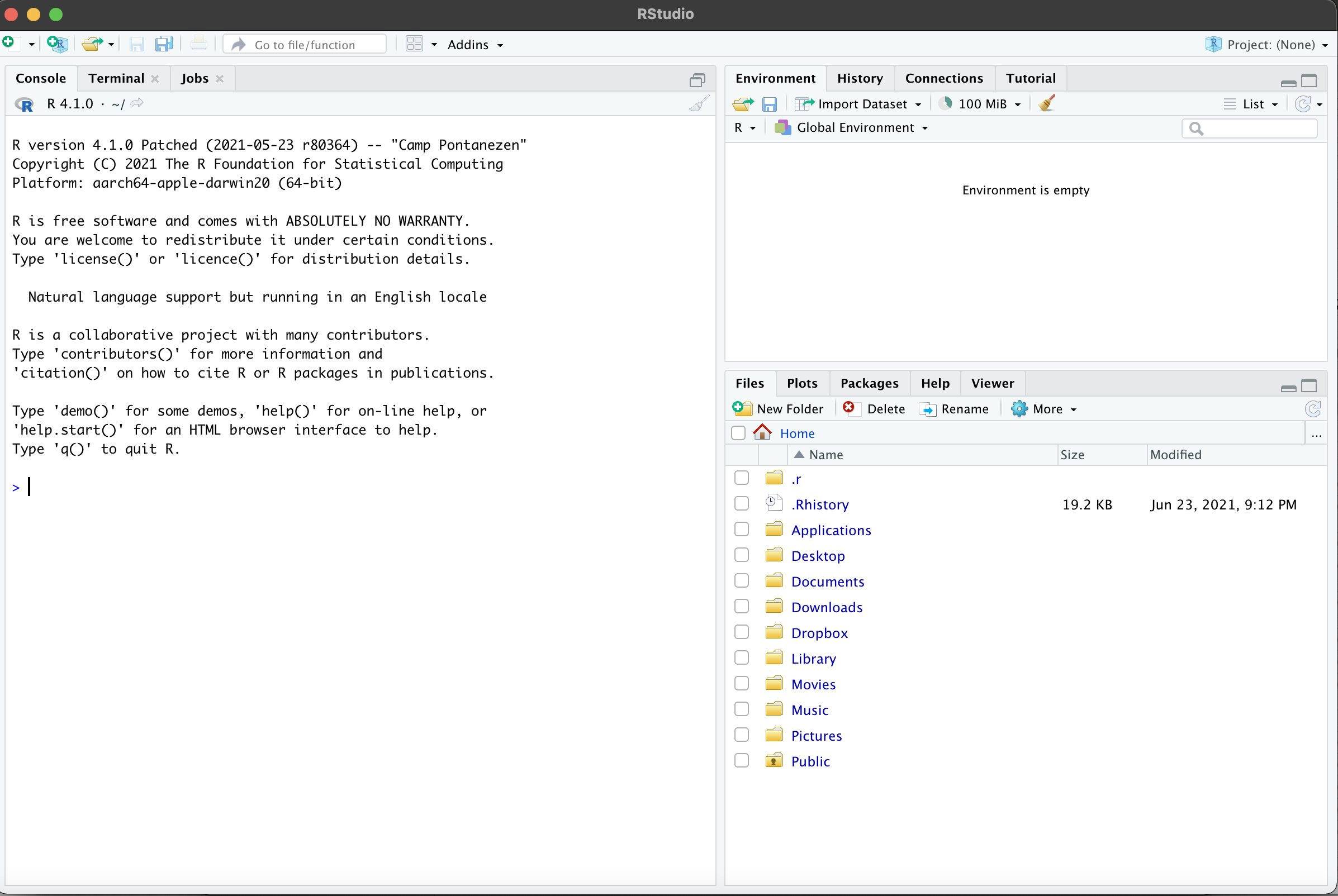

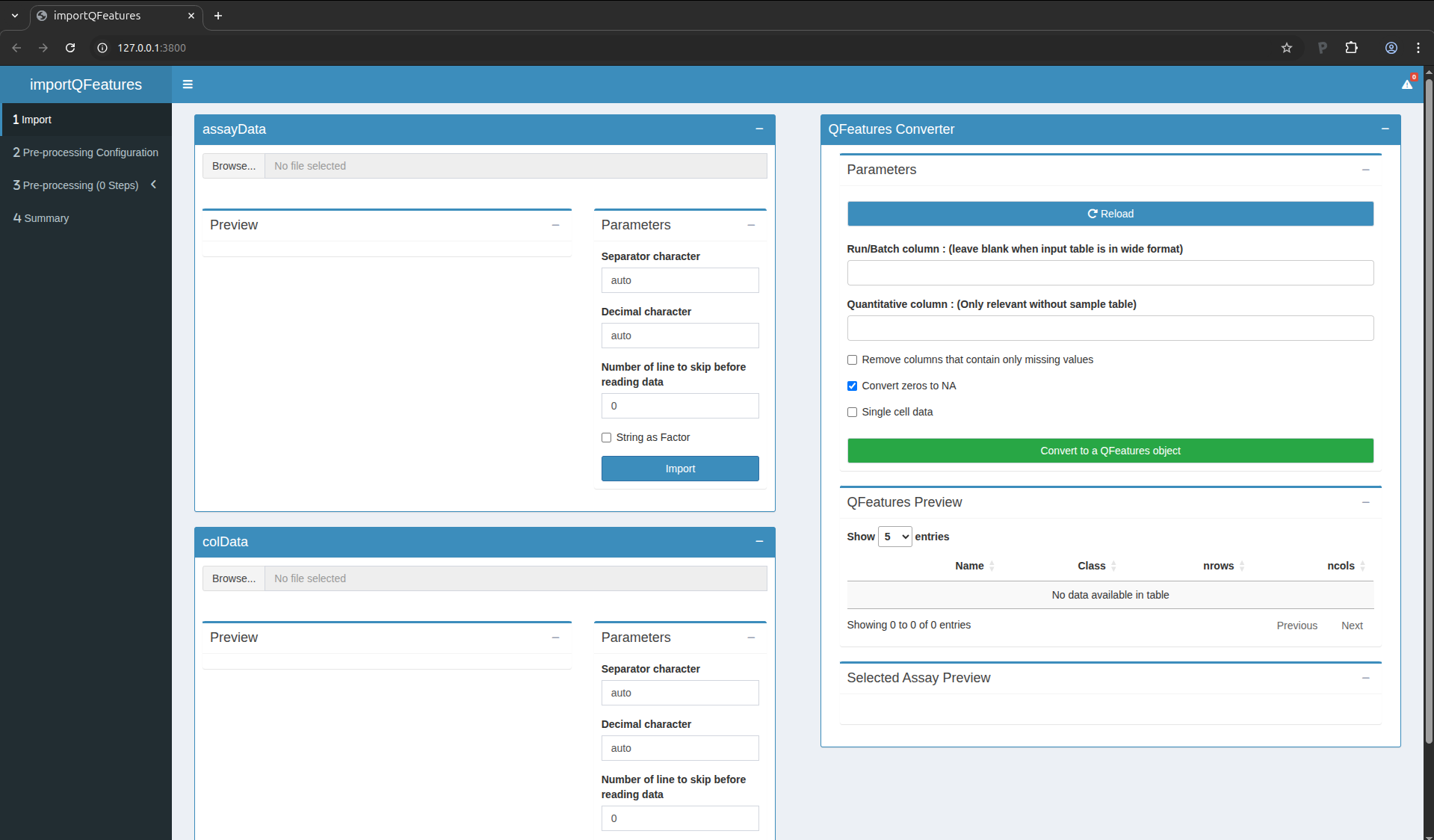

Open QFeaturesGUI

Run the following command (like above):

QFeaturesGUI::importQFeatures()

A new window will open with the shinyApp

Figure 3. QFeaturesGUI ShinyApp

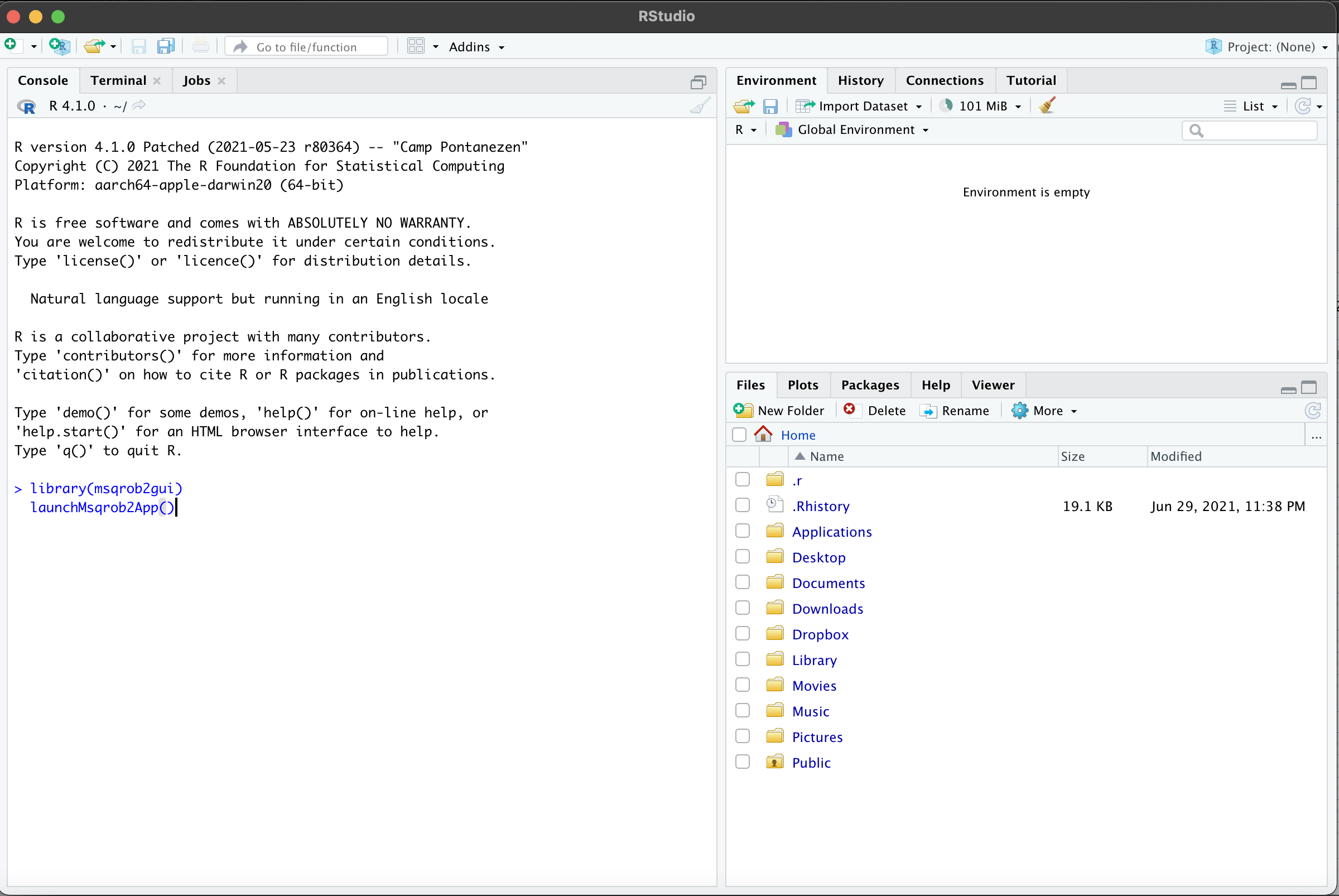

It is advicable to open the shiny app in a webbrowser. You can do

that by clicking on the button Open in Browser, which you can find

above the blue navigation bar and the shinyApp will pop up in a new

browser tab/window.

Figure 4. QFeaturesGUI in browser tab

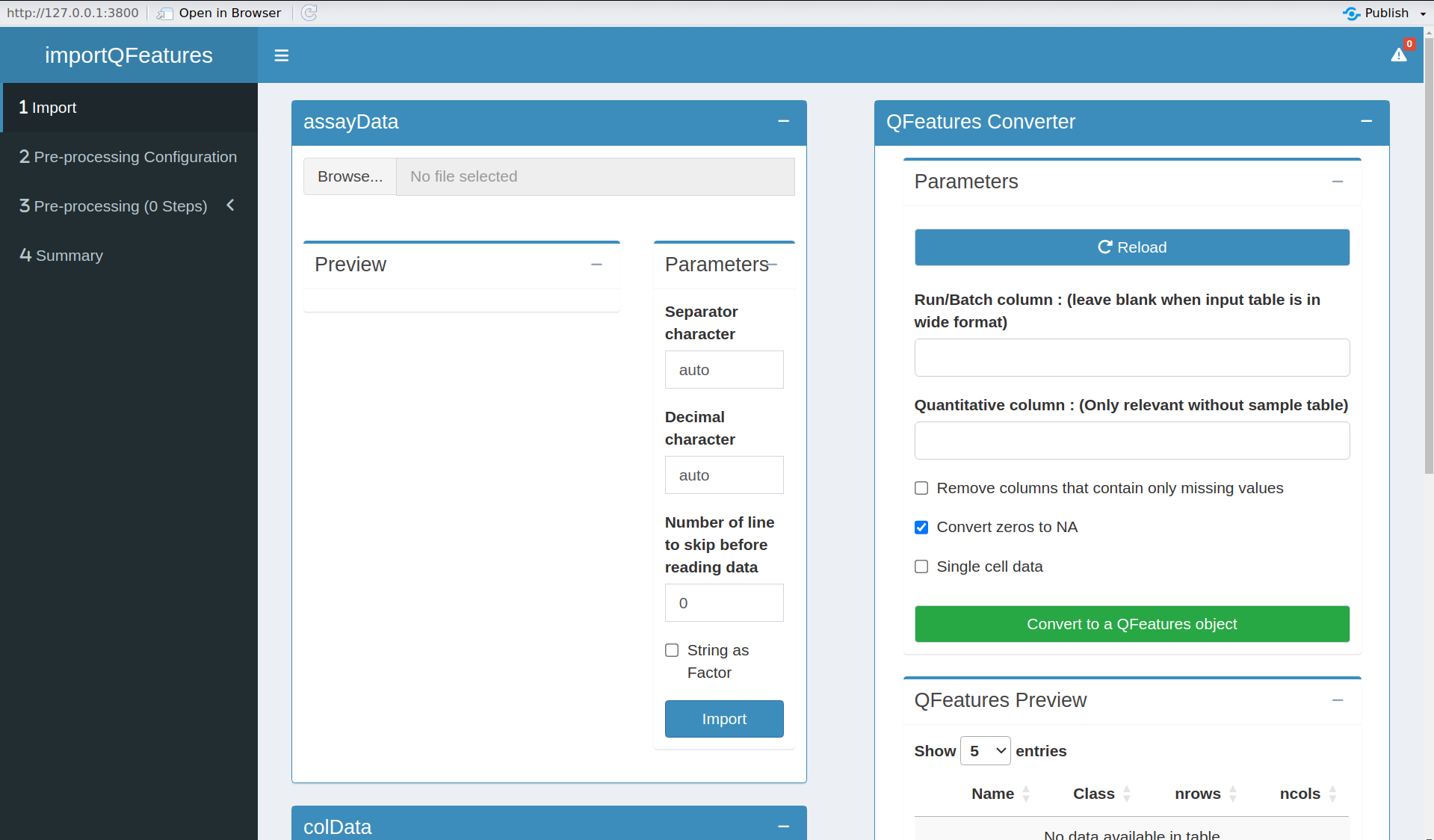

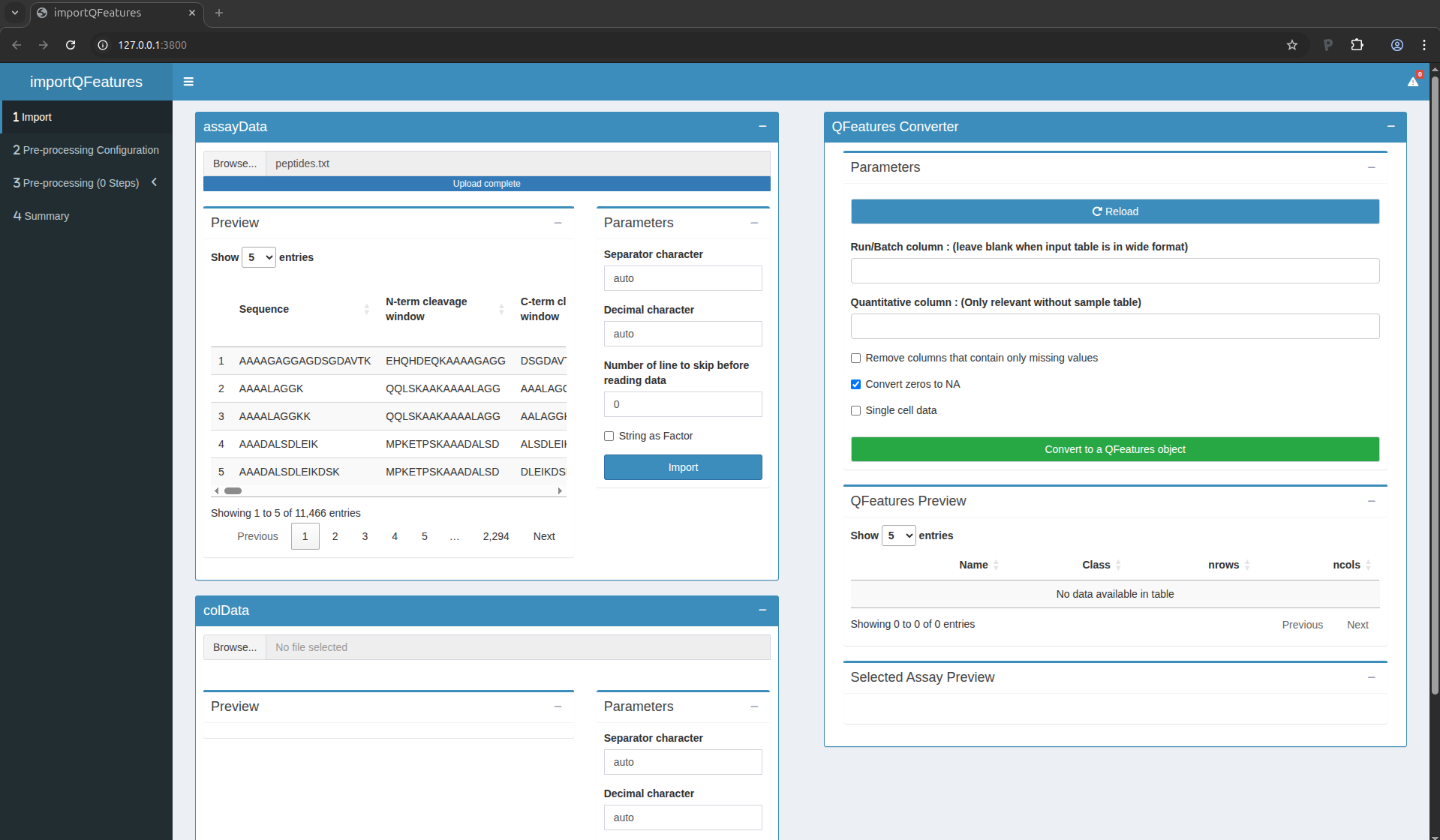

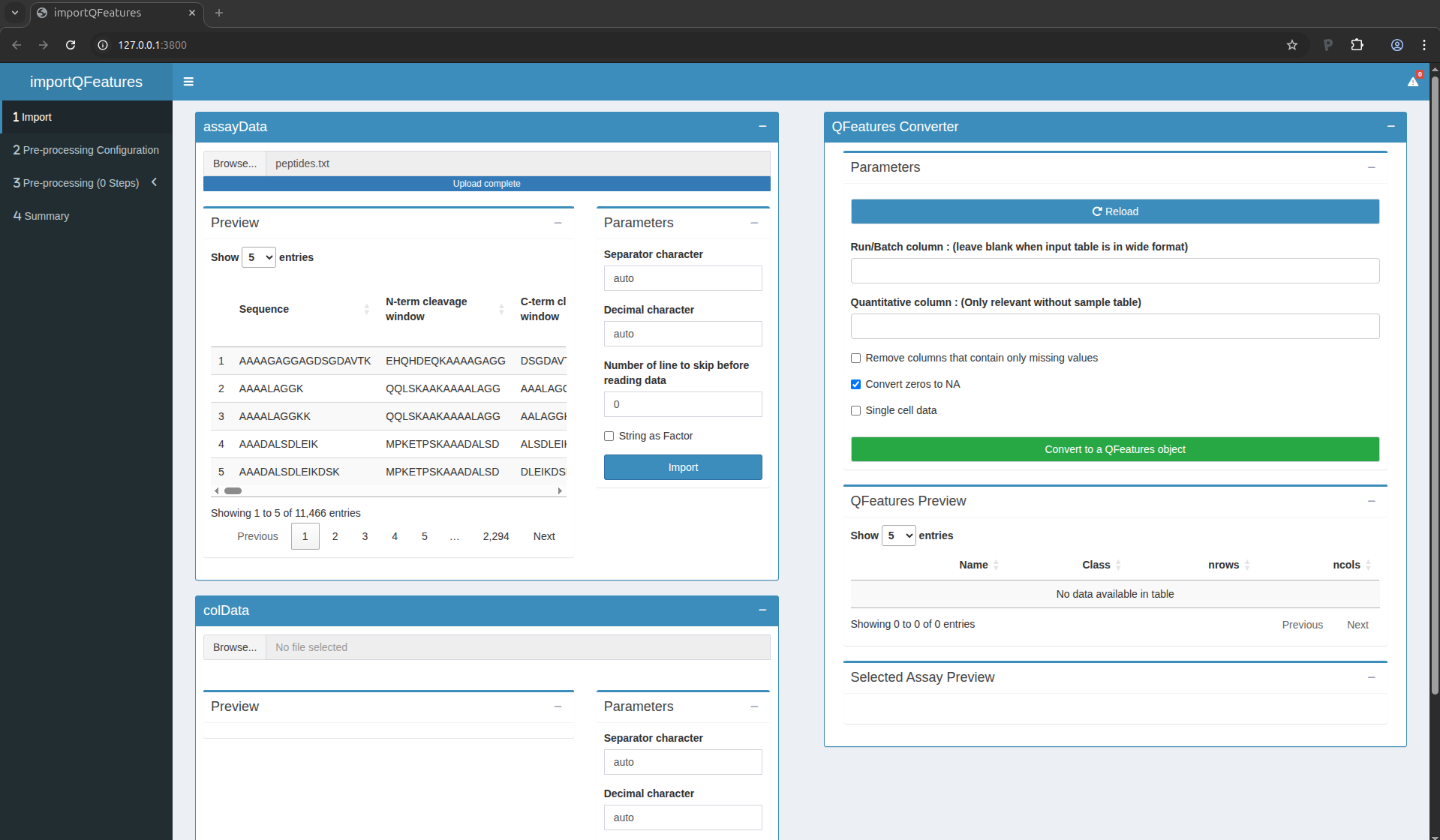

Import the data

Our MS-based proteomics data analysis workflows always start with 2

pieces of data:

- The search engine output. In this tutorial, we will use the

identified and quantified peptide data by MaxQuant, called

peptides.txt.

- An annotation file describing the experimental design. Each line

corresponds to a sample and each column provides a sample

annotation.

The first step is to upload the peptides.txt file. This is the file

that contains your peptide-level intensities. Note that the

intensities measured in each sample are stored in a separate column

and each row represents a peptide. This means that MaxQuant already

performed aggregation of PSM-level data into peptide-level data. For

more control on PSM-level quality control and data aggregation, our

data analysis workflows can also start from the evidence.txt files

which contain PSM-level information, but will not be covered in this

tutorial. For a MaxQuant search, the peptides.txt file can be found

by default in the path_to_raw_files/combined/txt/ folder from the

MaxQuant output, with path_to_raw_files the folder where raw files

were saved. To upload the peptide.txt file, click on the “Browse…”

button under the “assayData” tile title. You don’t need to adapt the

default parameters, you can click the “Import” button right away. A

preview of the table will appear and will let you quickly check the

data.

Figure 5. The peptide data have been succesfully uploaded

You can see that MaxQuant generated a table with 11,466 lines,

corresponding to 11,466 peptides. For each peptide, MaxQuant generated

71 columns containing various pieces of information, like the peptide

sequence, the protein groups the peptide matches to, the charge states

found,… Among these columns, there is also a set of columns

containing the quantified MS intensities for each sample (i.e. columns

named Intensity XXX). These will later be flagged as quantitative

information.

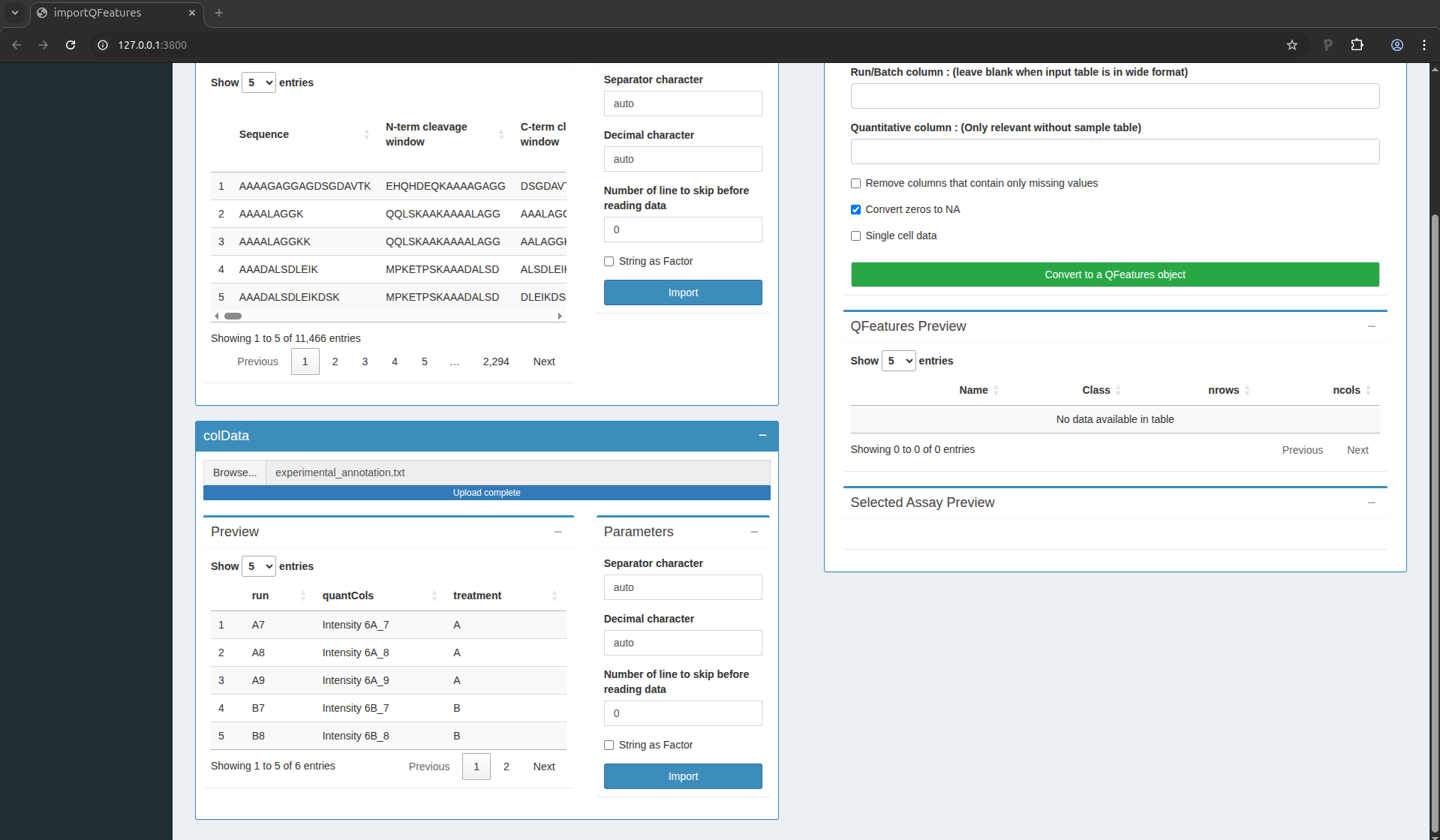

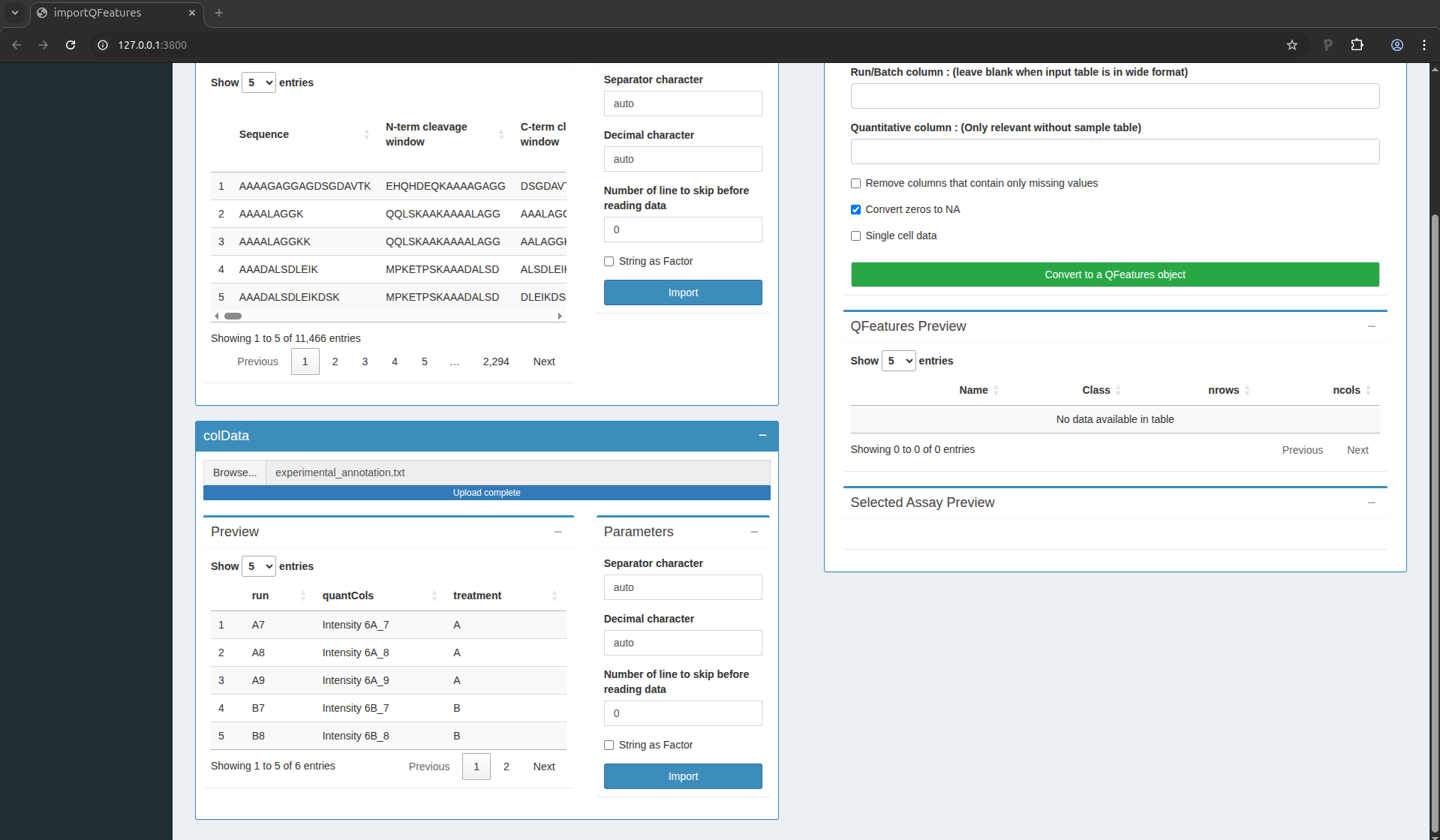

Similarly, we need to upload the experimental annotation file which is

called experimental_annotation.txt. We here provide the table as a

.txt, but you can store your table in many common formats, such as

.csv or .tsv. Note Excel allows you to store spreadsheets in such

formats. To upload the experimental_annotation.txt file, click on

the “Browse…” button under the “colData” tile title. You don’t need

to adapt the default parameters, you can click the “Import” button

right away. A preview of the table will appear and will let you

quickly check the data. Note that uploading the annotation file is

optional for data preprocessing, but you won’t be able to perform data

modelling in the next section.

Figure 6. The sample annotations have been succesfully uploaded

For the CPTAC dataset analysed with MaxQuant, the first column equals

the names given in MaxQuant’s “Experiment” column. The second column

contains the names of the quantitative columns in the table above; it

should always be named quantCols as it will allow the software to

correctly link the two tables. The last column is related to the study

design; here the treatment provides the spike-in condition.

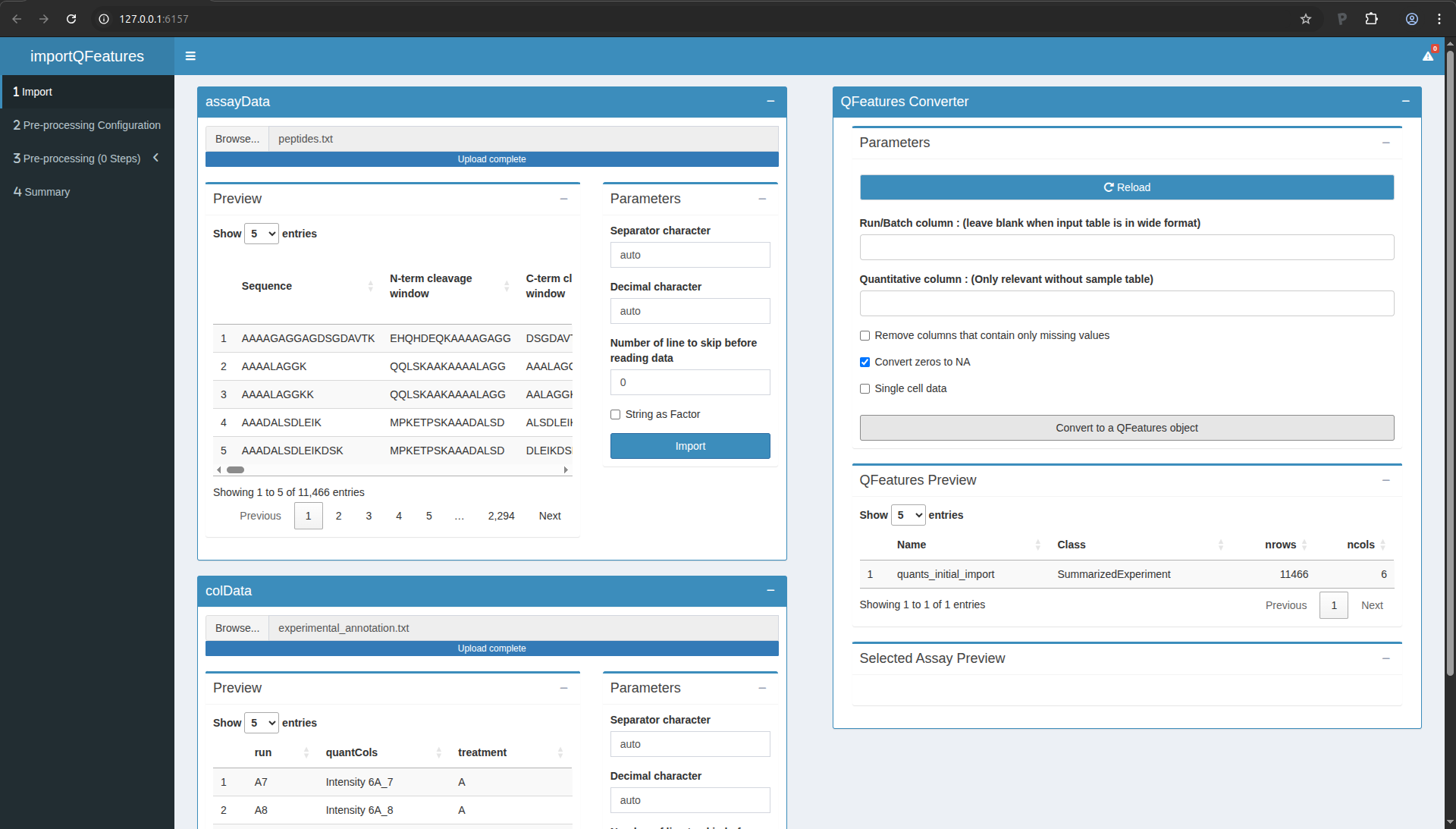

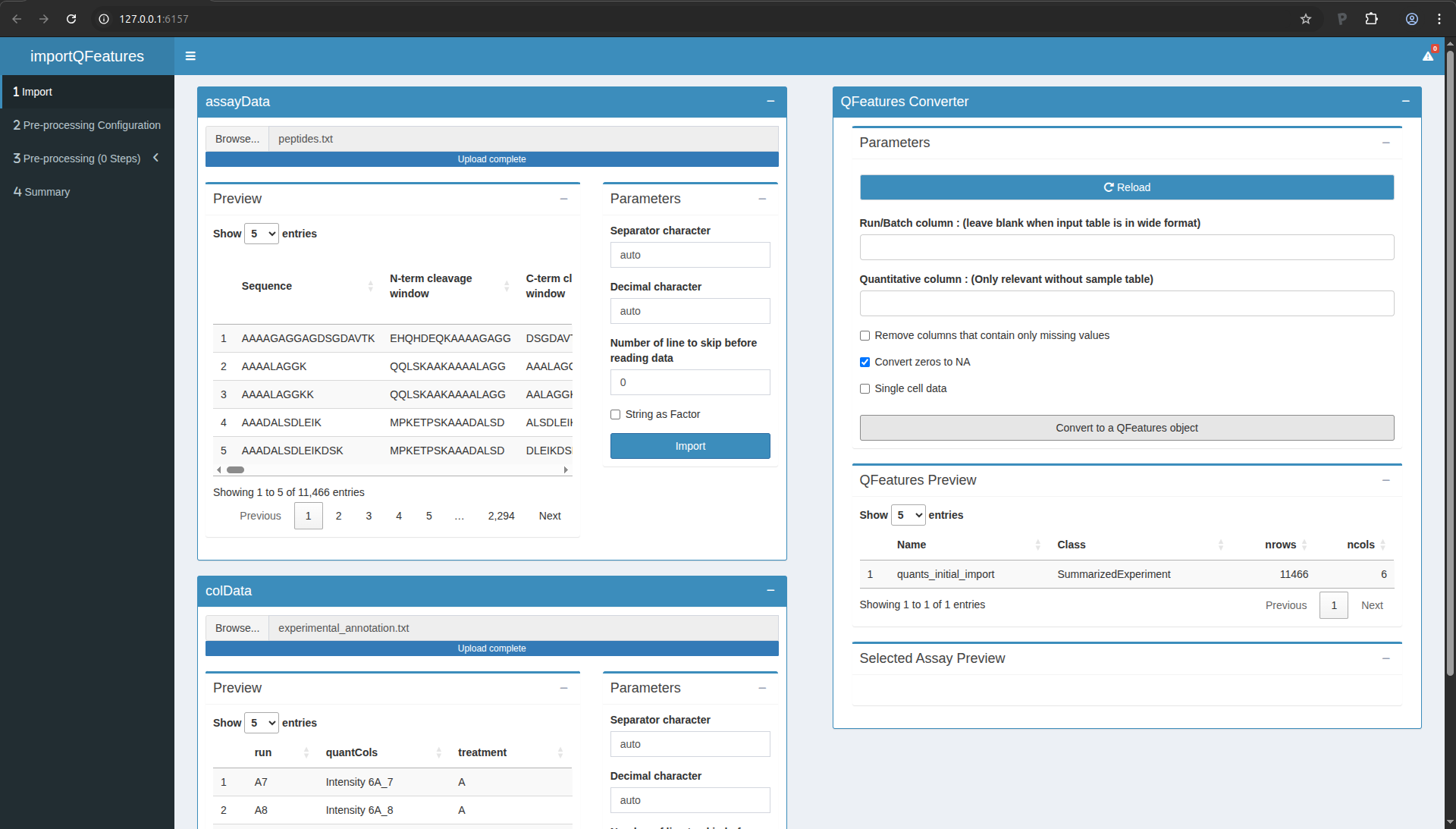

You are now ready to link the two tables into a QFeatures object,

which is the internal representation of the data we will use for the

rest of the workflow. Scroll up to the “QFeatures Converter” tile. You

can leave all the default setting and click on the green button

“Convert to a QFeatures object”.

After uploading your files, a preview of the data appears (feel free

to click on the table row). The window should look as follows:

Figure 7. The QFeatures object is successfully converted

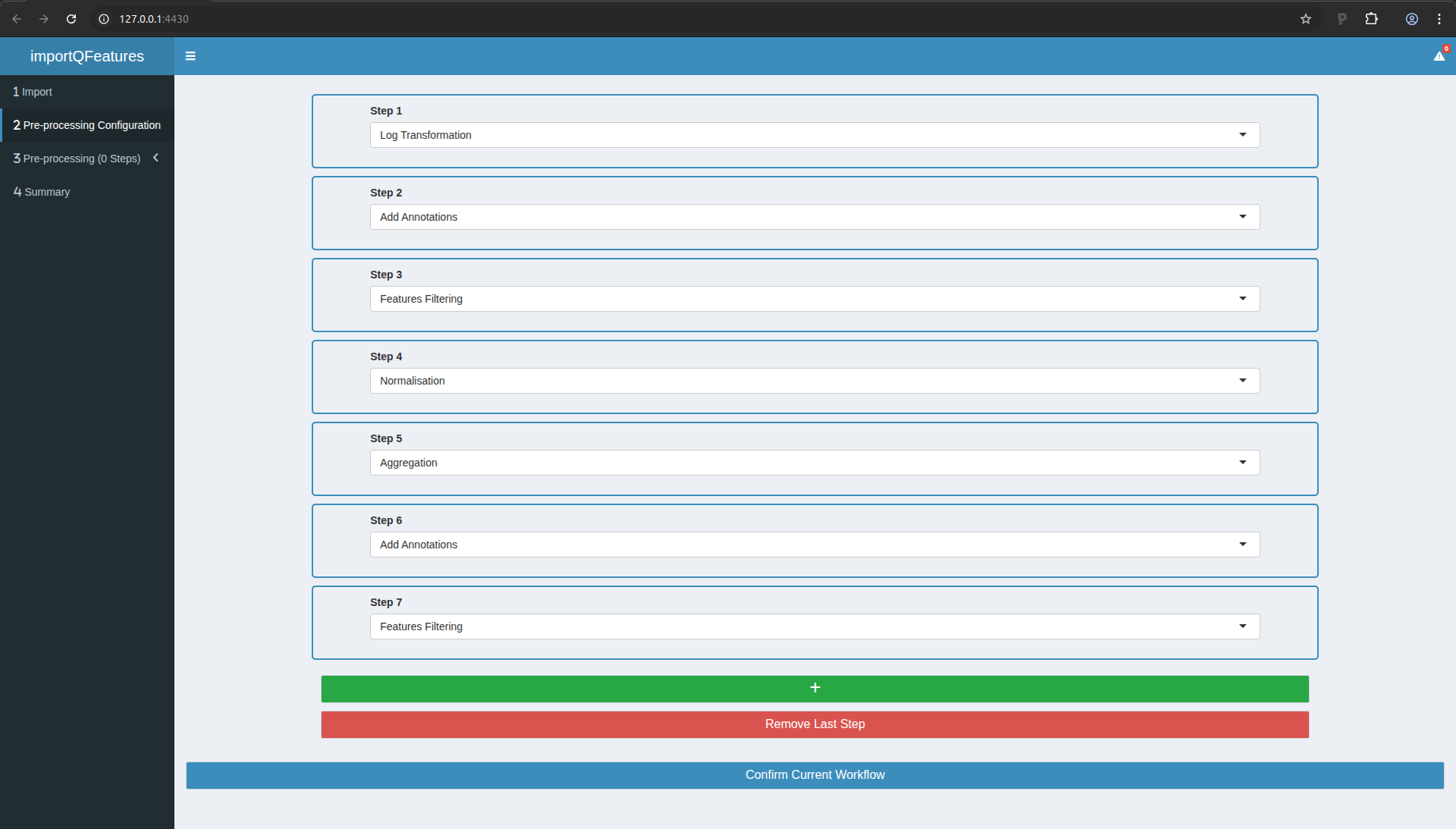

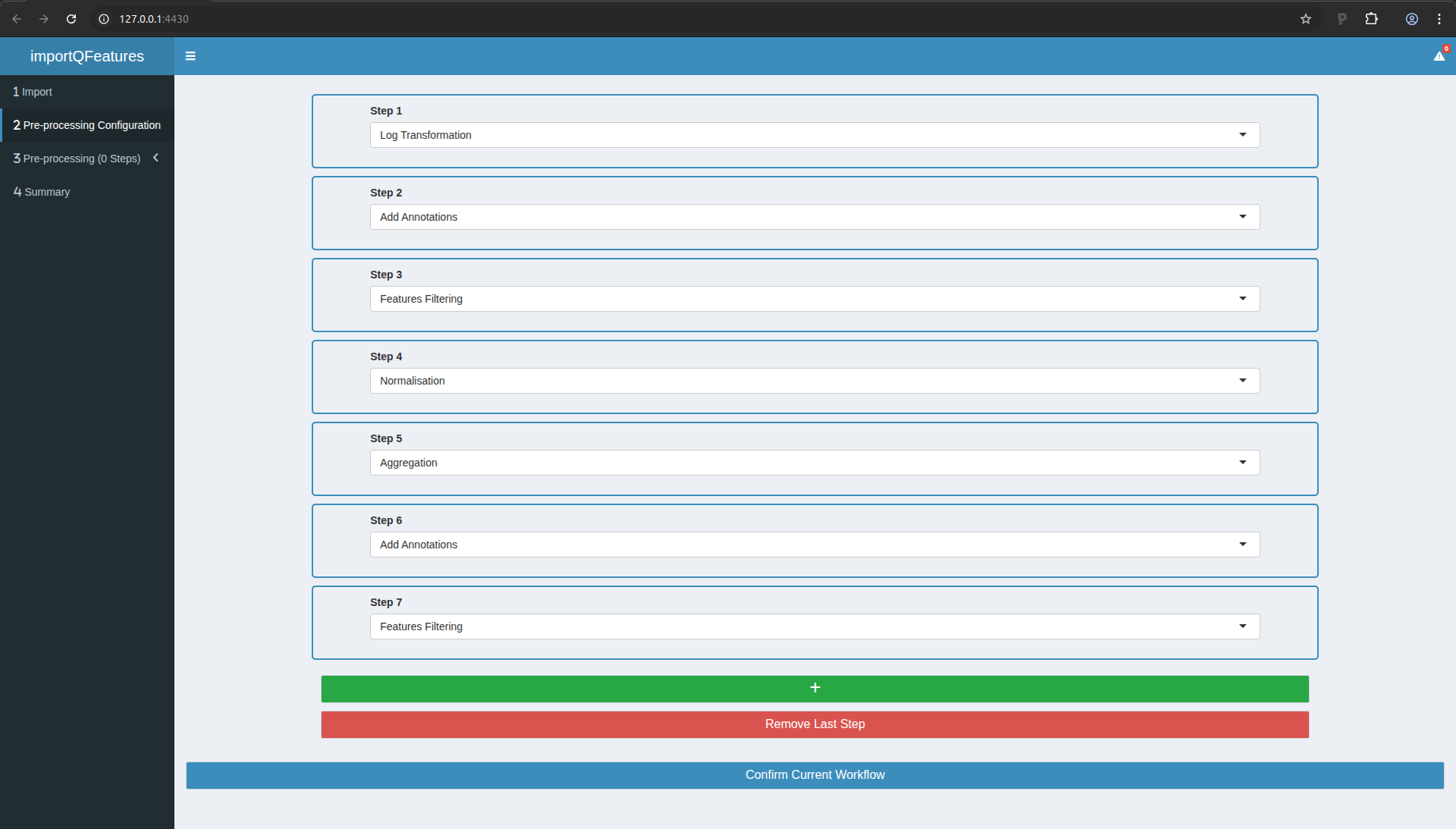

Configuring the preprocessing worflow

Move to the next table by clicking on “Pre-Processing Configuration”

in the menu to the left. Click on the green button “+” to add a step.

In this tutorial, you will need to add 7 steps in total. You should

parameterise them as follows:

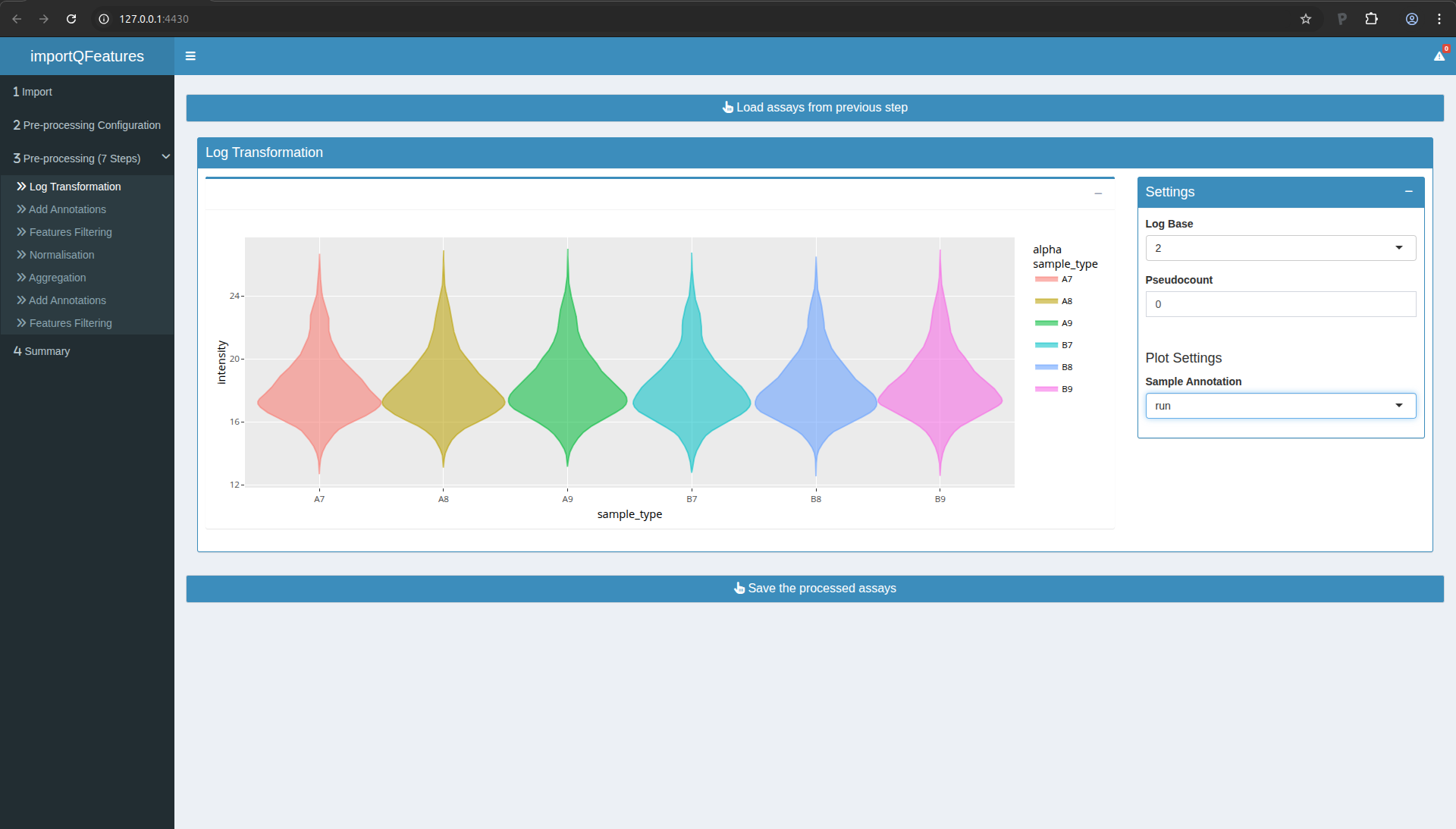

- Log Transformation

- Add Annotations

- Features filtering

- Normalisation

- Aggregation

- Add Annotations

- Features filtering

Once done, your screen should look as follows:

Figure 8. The preprocessing workflow upon configuration

You can now click the “Confirm Current Workflow” button. On the left

menu, you will see that the 3rd entry now mentions “3 Pre-processing

(7 Steps)”. We will now configure and run each of these steps.

Step 2: add annotations

In Step 3, we will filter out features based on then number of missing

values. This information is not yet available in our data for

filtering, so we will add this information as a feature annotation.

- Move to Step 2 in the left menu.

- Click the button to load the previous step.

- Scroll at the bottom an tick the “Add missing values numbers” box

- Click “perform annotation”. You should see a preview of the feature

annotations. You can check that the last column is “nNA” which

provides the number of missing values.

- Click the button to save the processed data.

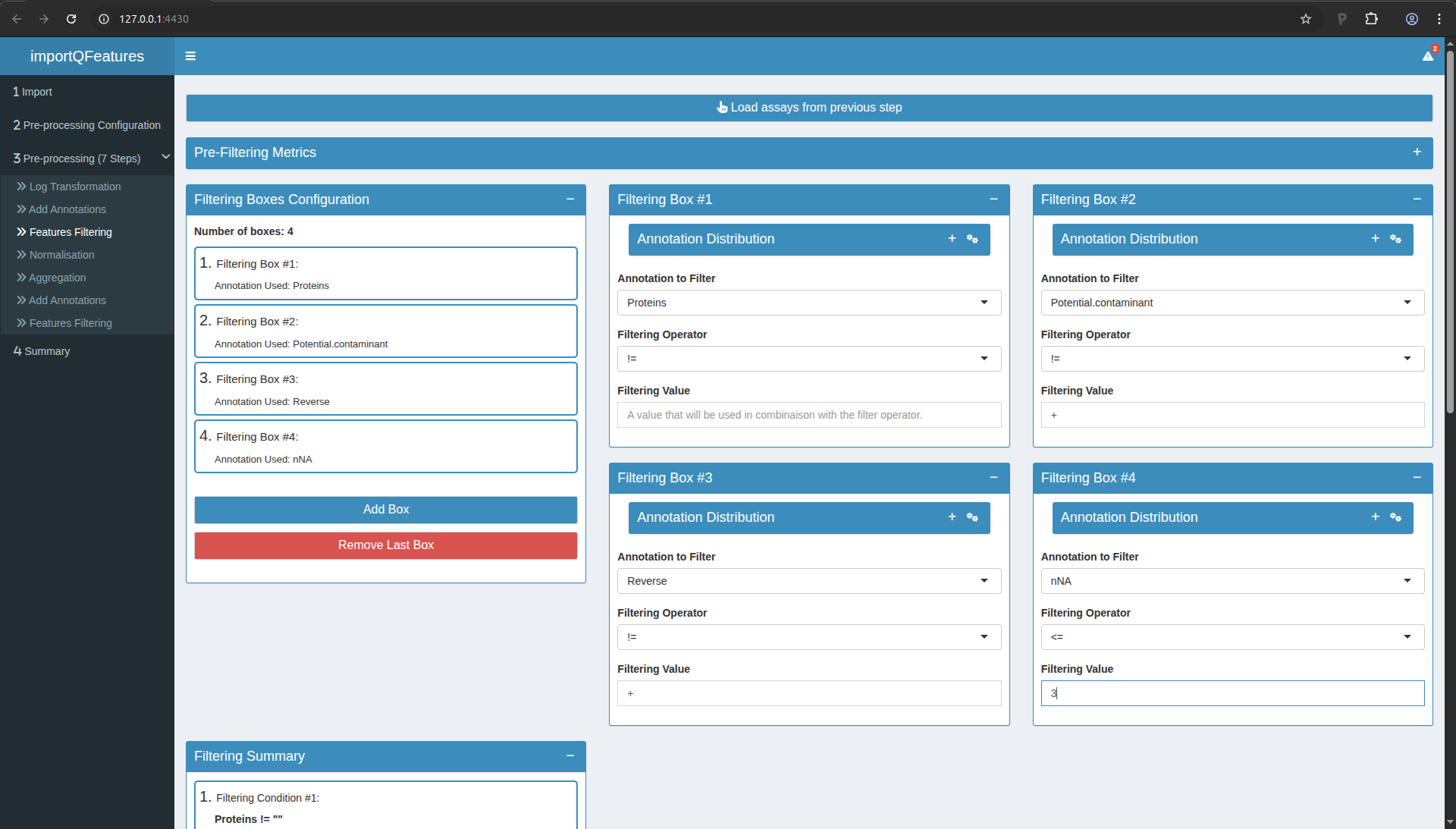

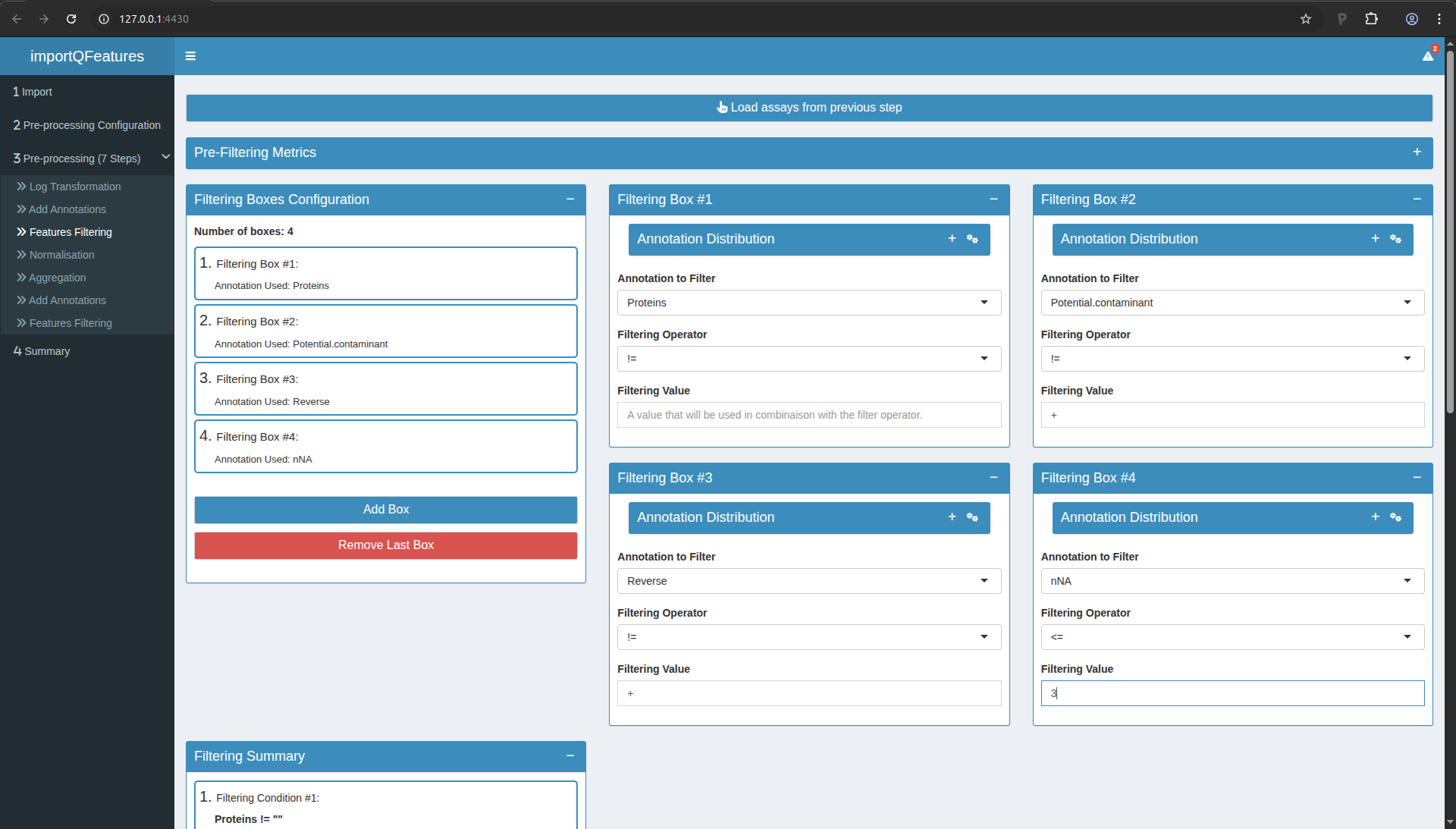

Step 3: peptide filtering

In this step, we will actually remove undesirable peptides. We will

use the following criteria:

- Remove peptides that could not be mapped to a protein. In the peptides.txt file of MaxQuant the variable Proteins consists of an empty character:

Proteins !=

- Remove reverse sequences (decoys). In the peptides.txt file of MaxQuant the variable Reverse is an empty character for forward sequences (targets) and an “+” character for reverse sequences:

Reverse != +

- Remove contaminants. In the peptides.txt file of MaxQuant the variable “Contaminants” or “Potential.contaminant” an empty character for non-contaminant sequences and a “+” character for contaminant sequences:

Potential.contaminant != +

- Remove peptides that were identified in less than three sample. There are 6 samples so there number of NA’s has to be smaller or equal to:

nNA <= 3

To perform this filtering and carry out the sep, you can:

- Move to Step 3 in the left menu.

- Click the button to load the previous step.

- Click 4x the blue “Add Box” button to add 4 filtering boxes.

- Fill in the boxes as shown in the figure below.

- In the “Filtering Summary” tile, click the “Apply Filters” button.

- Click the button to save the processed data.

Figure 10. Step3: Feature filtering. Note the PCA plots are collapsed for better overview of the boxes.

Step 4: Normalisation

We normalise the data by substracting the sample median from every

intensity for peptide \(p\) in a sample \(i\):

\[y_{ip}^\text{norm} = y_{ip} - \hat{\mu_i}\]

with \(\hat\mu_i\) the median intensity over all observed peptides in

sample \(i\).

- Move to Step 4 in the left menu.

- Click the button to load the previous step.

- Median normalisation is configured by default, so you can simply

click the button to save the processed data.

Step 5: Summarisation

In this step, the peptide intensities will be summarised (aka

aggregated) into protein intensities using robust summarisation. You

can perform this as follows:

- Move to Step 5 in the left menu.

- Click the button to load the previous step.

- In the settings, first select “robustSummary” in the first field and

then select “Proteins” as the rowData variable defining the features of

the assay to aggregate.

- Click the button to save the processed data.

Step 6: add annotations

Repeat step 2, this will allow to perform a filtering on missing, but

at the protein-level instead of peptide-level.

Step 7: protein filtering

In this step, we will remove proteins that were identified in less

than 4 samples so that for most proteins at least two protein-level expression values are observed in each treatment group. So we will tolerate at most 6-4 = 2 missing values at the protein-level. You may have guessed it by now, but you can proceed

with:

- Move to Step 7 in the left menu.

- Click the button to load the previous step.

- Click 1x the blue “Add Box” button to add a filtering box.

- Fill in the boxes so that the filter becomes:

nNA <= 2.

- In the “Filtering Summary” tile, click the “Apply Filters” button.

- Click the button to save the processed data.

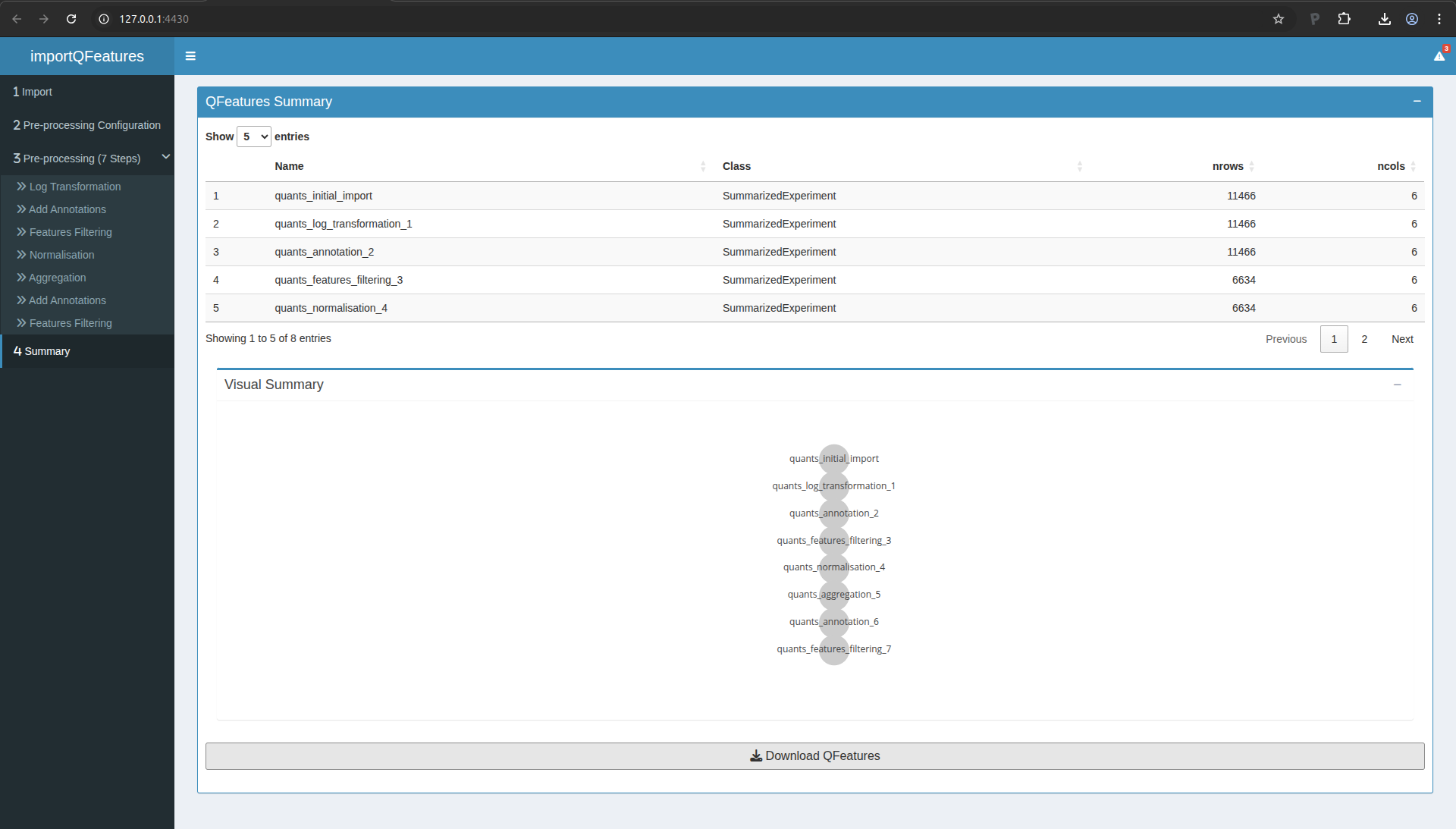

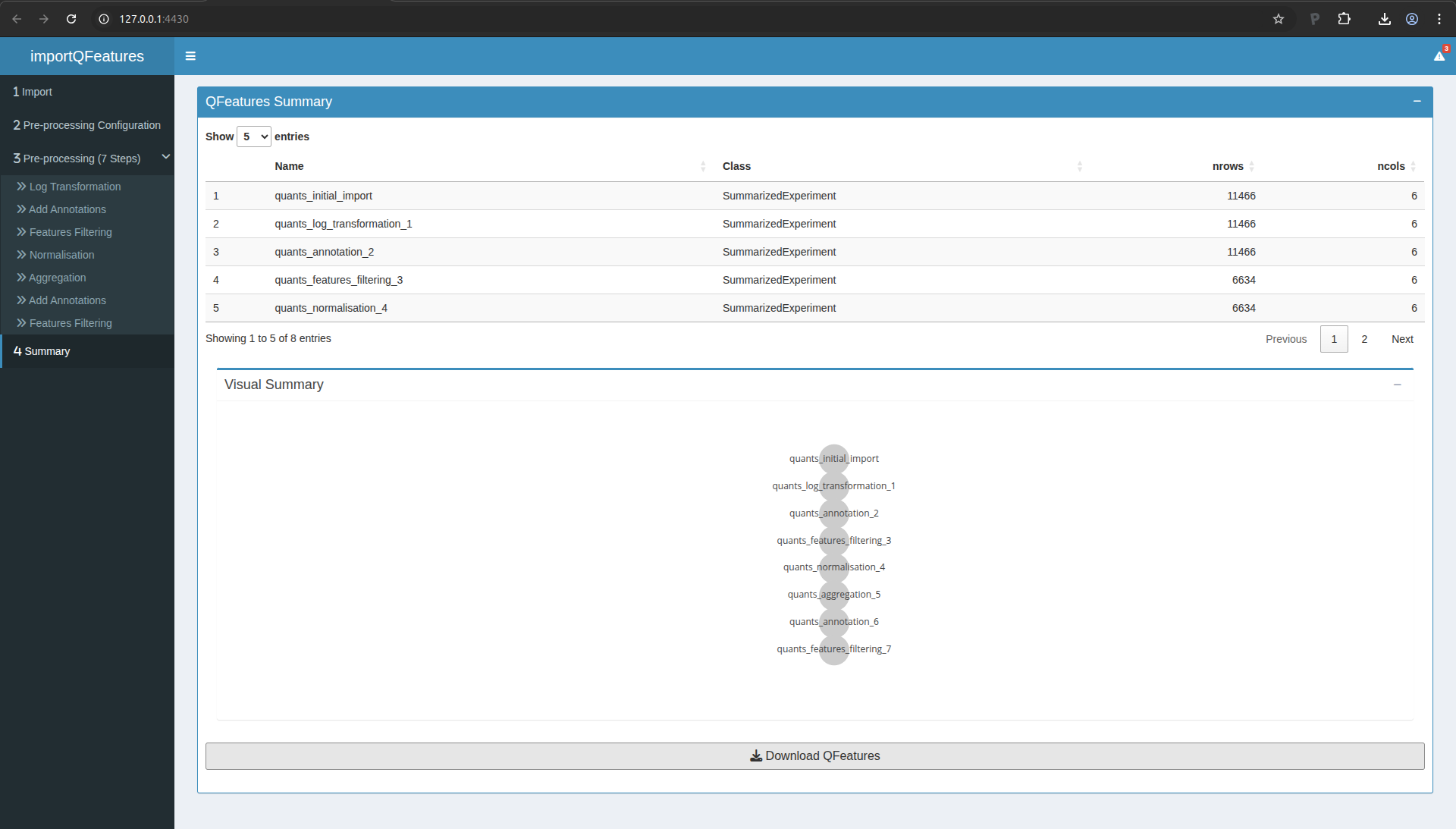

Save your progress

You now have completed the data processing. You can move to the

Summary tab (in the left menu). There you will find an overview of the

data and the data processing steps.

Figure 11. Summary of the data processing.

Go to the bottom of the page and click on the “Download QFeatures”

button. A pop up windown will ask you to select a directory to store

the resulting file. By default the file is called

qfeatures_object.rds, but feel free to rename as you wish but you

cannot change the file extension (.rds).

You can now close the application.

Statistical analysis

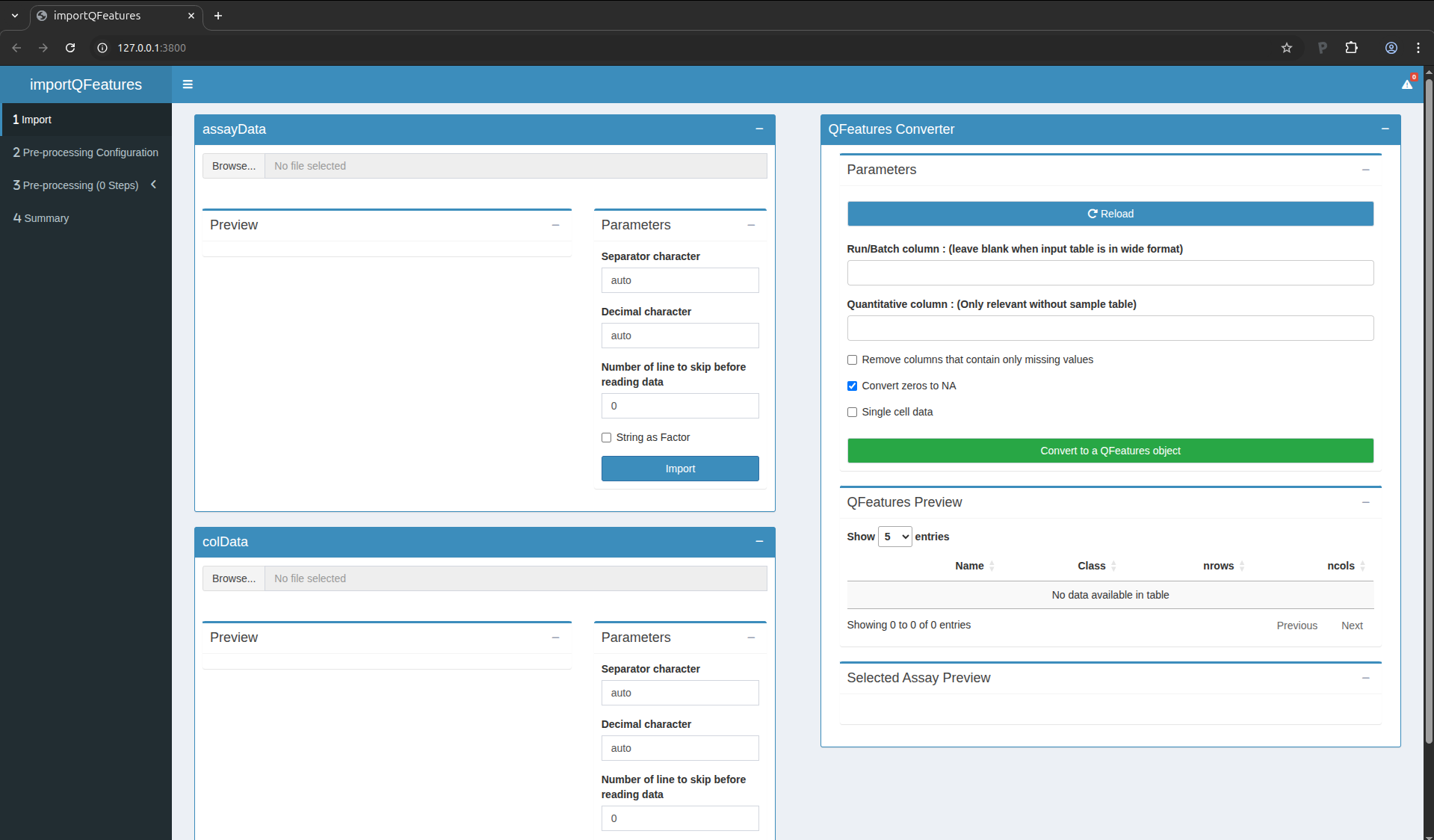

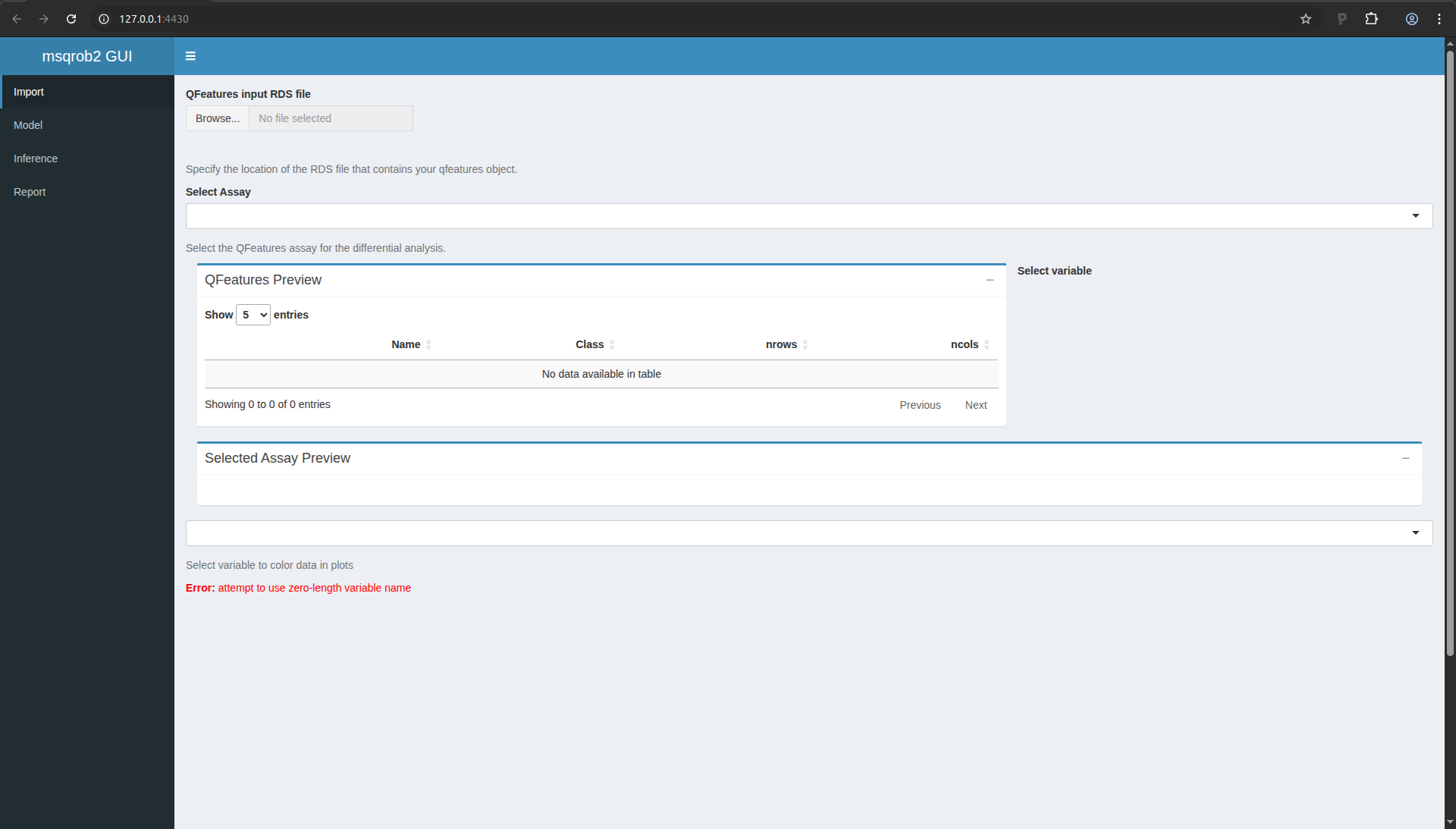

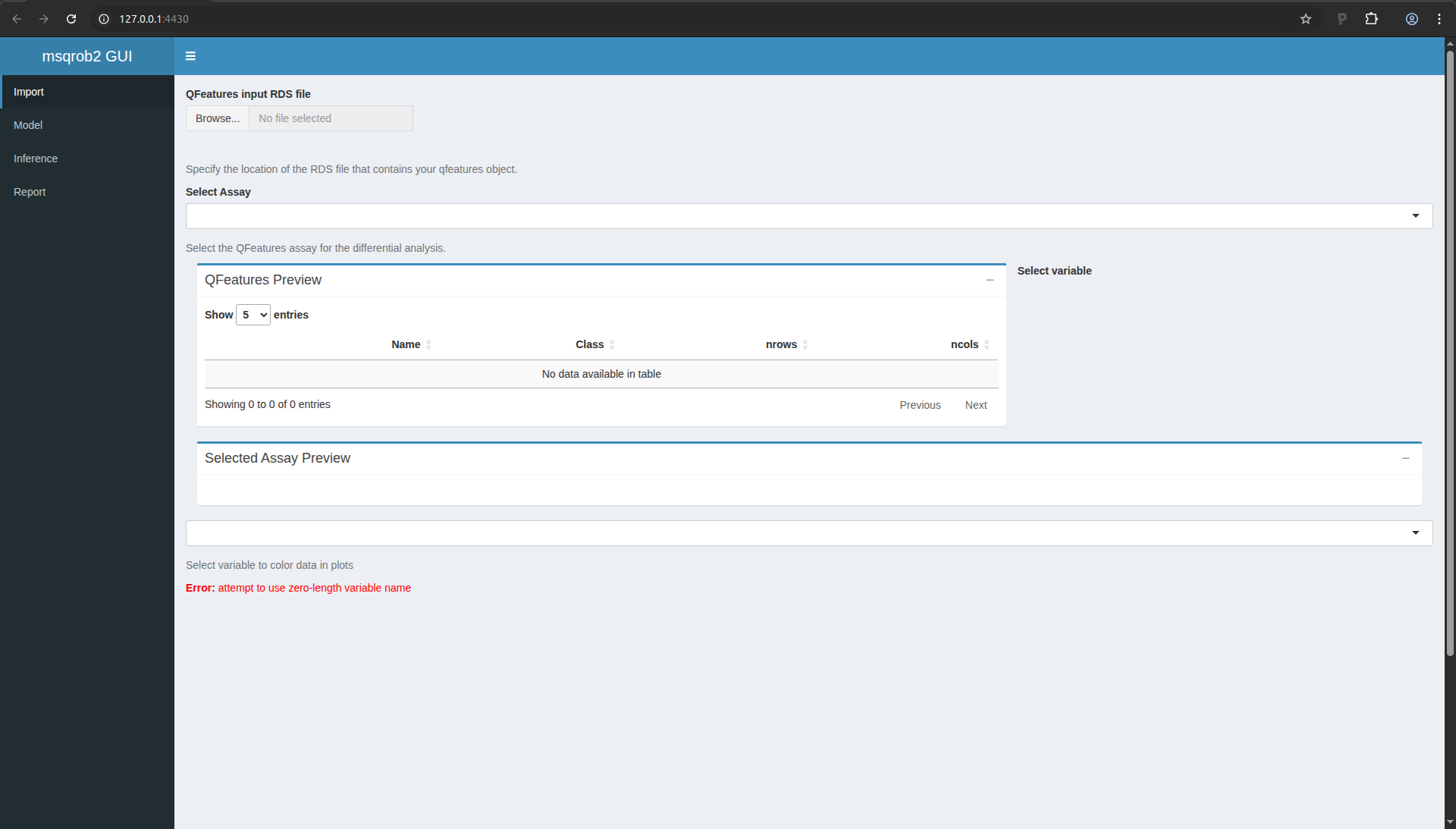

Open msqrob2gui

Return to your Rstudio application and run the following command:

msqrob2gui::launchMsqrob2App()

A new window will open with the shinyApp for data modelling

Figure 12. msqrob2gui ShinyApp

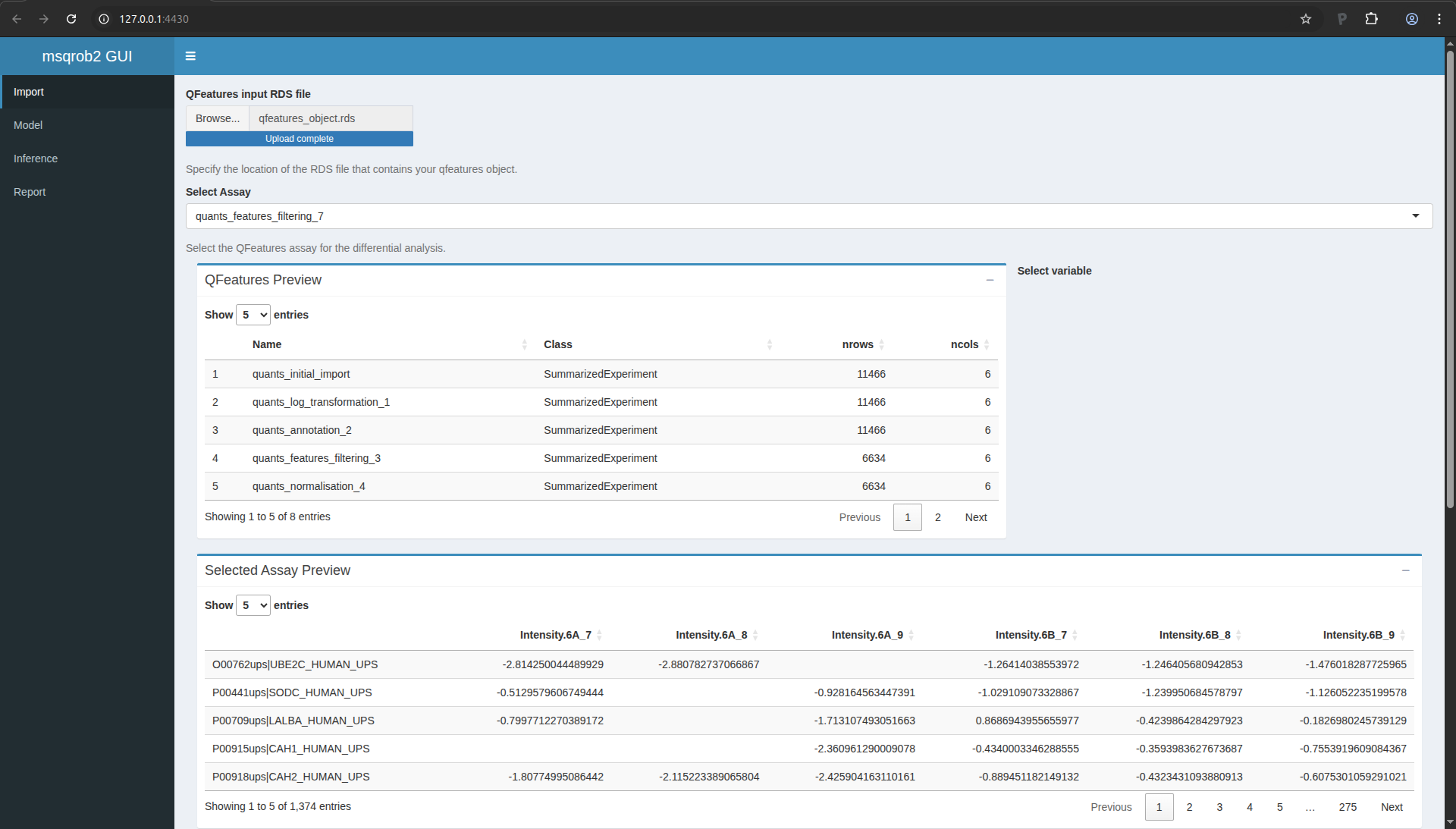

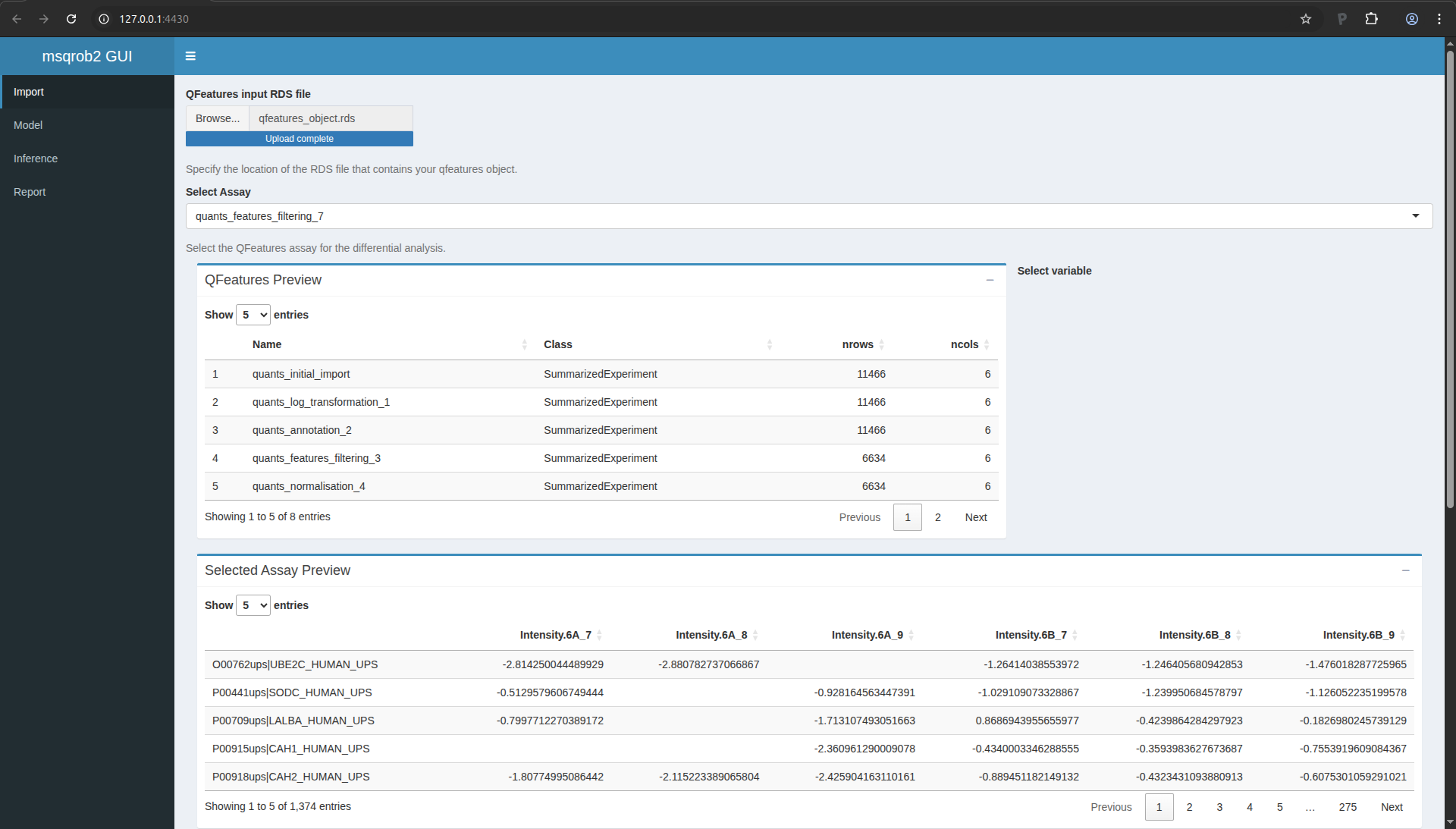

Import the processed data

We start the data modelling workflow with importing the data processed

in the previous section:

- Click on “Browser…” and fetch the file you saved with the

QFeaturesGUI app.

- In the “Select Assay”, select the last item,

“quants_features_filtering_7”.

The application let’s you explore the data tables and provides a plot

with the intensity distributions for each sample.

Figure 13. msqrob2gui application upon data import.

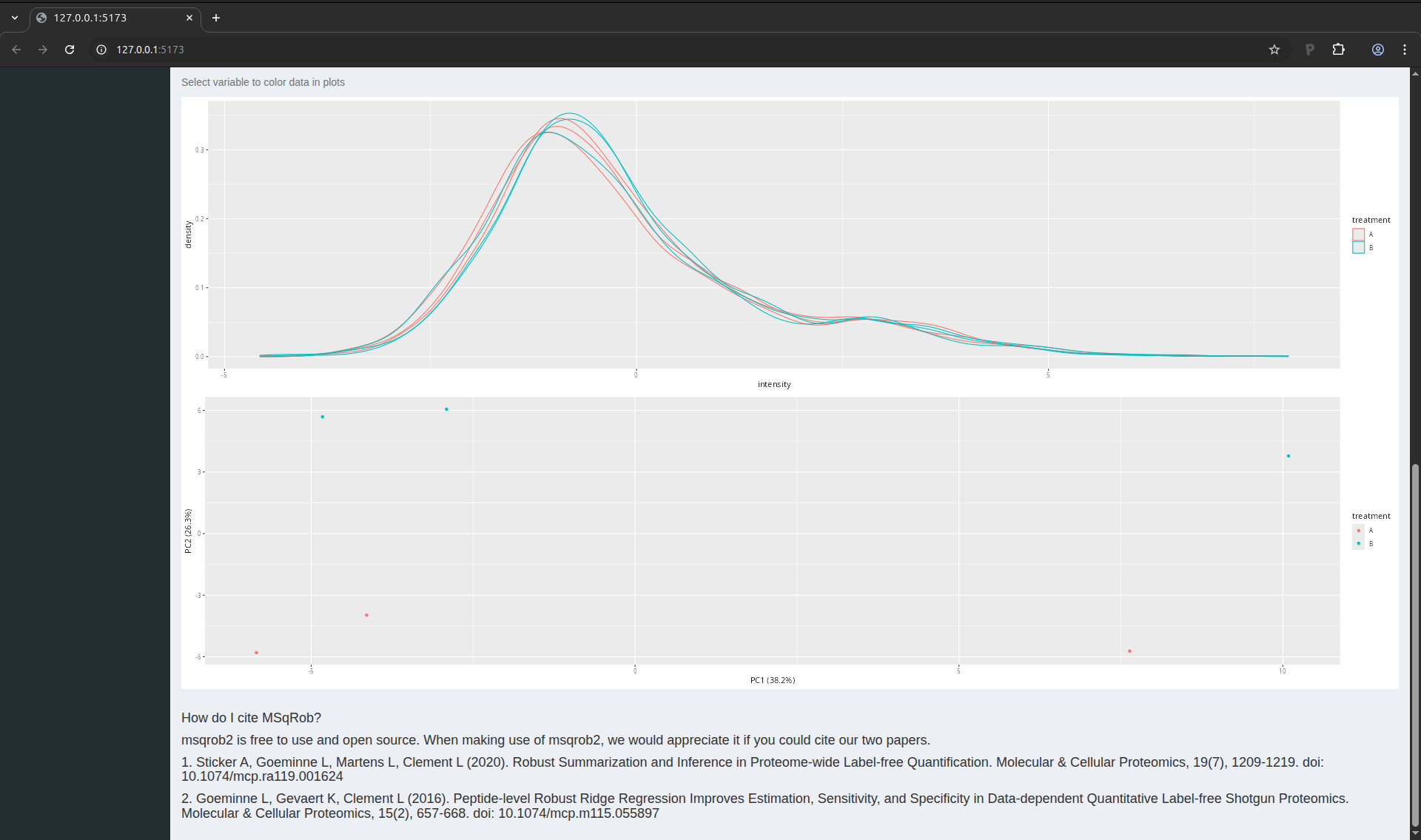

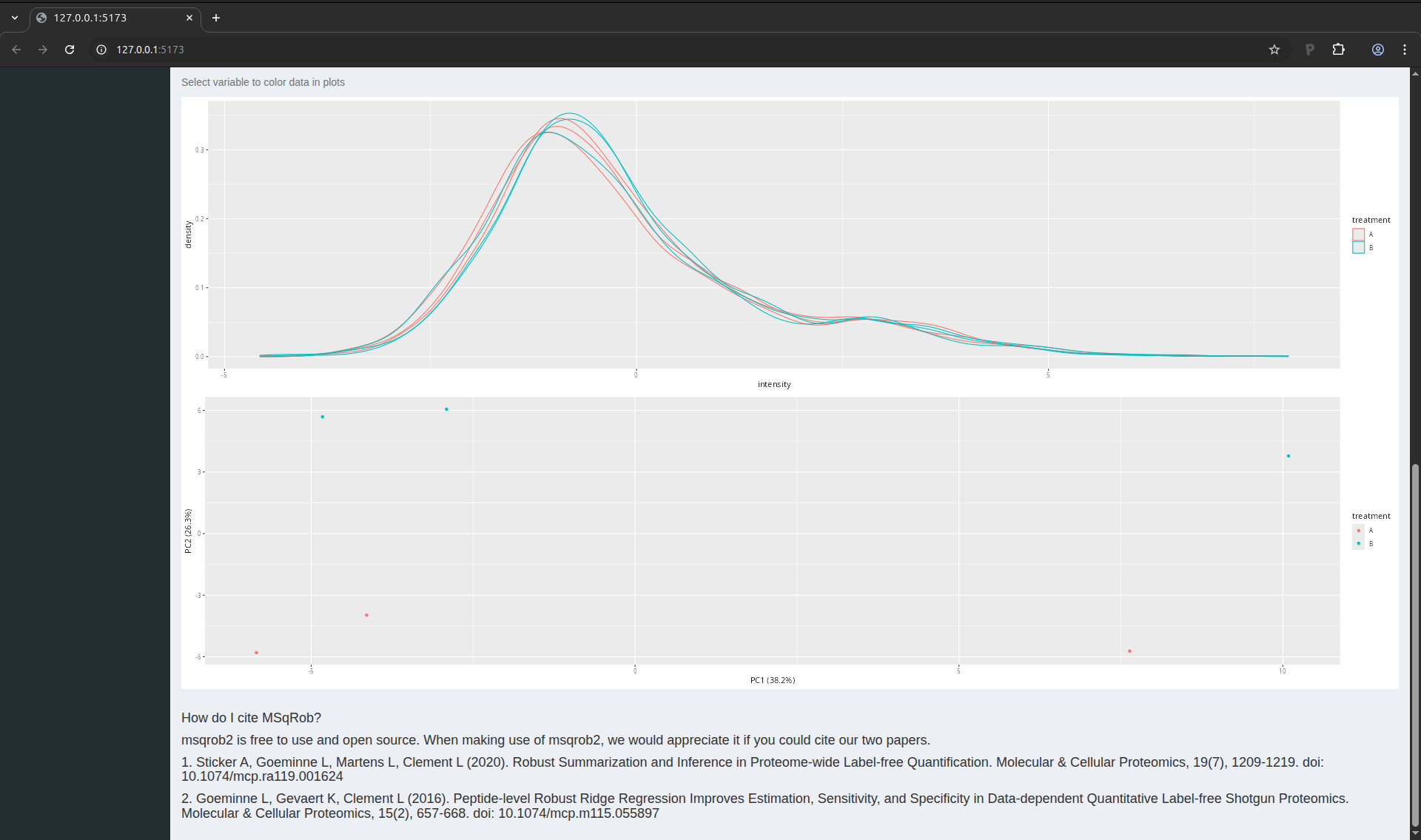

When you scroll down the page, you will find a drop down text selector

(under the table). Change “run” into “treatment” and look at the

principal component analysis (PCA) plot. How would you interpret this

graph?

Figure 14. PCA plot of the CPTAC data set.

The PCA plot indicates that we can expect a treatment effect in the

data as the sample belonging to one treatment can be separated from

the samples belonging to the other treatment. We also see that this

separation mostly occurs in the second principal componen. The first

component, which explains most of the variation, does not seem to bear

correlation associated with treatment. This is expected as we know

that only a few protein were spiked in, so we do not expect an effect

of treatment for most proteins. We will now delve into the data

modelling and inference that will statistically quantify these effects

for each protein individually.

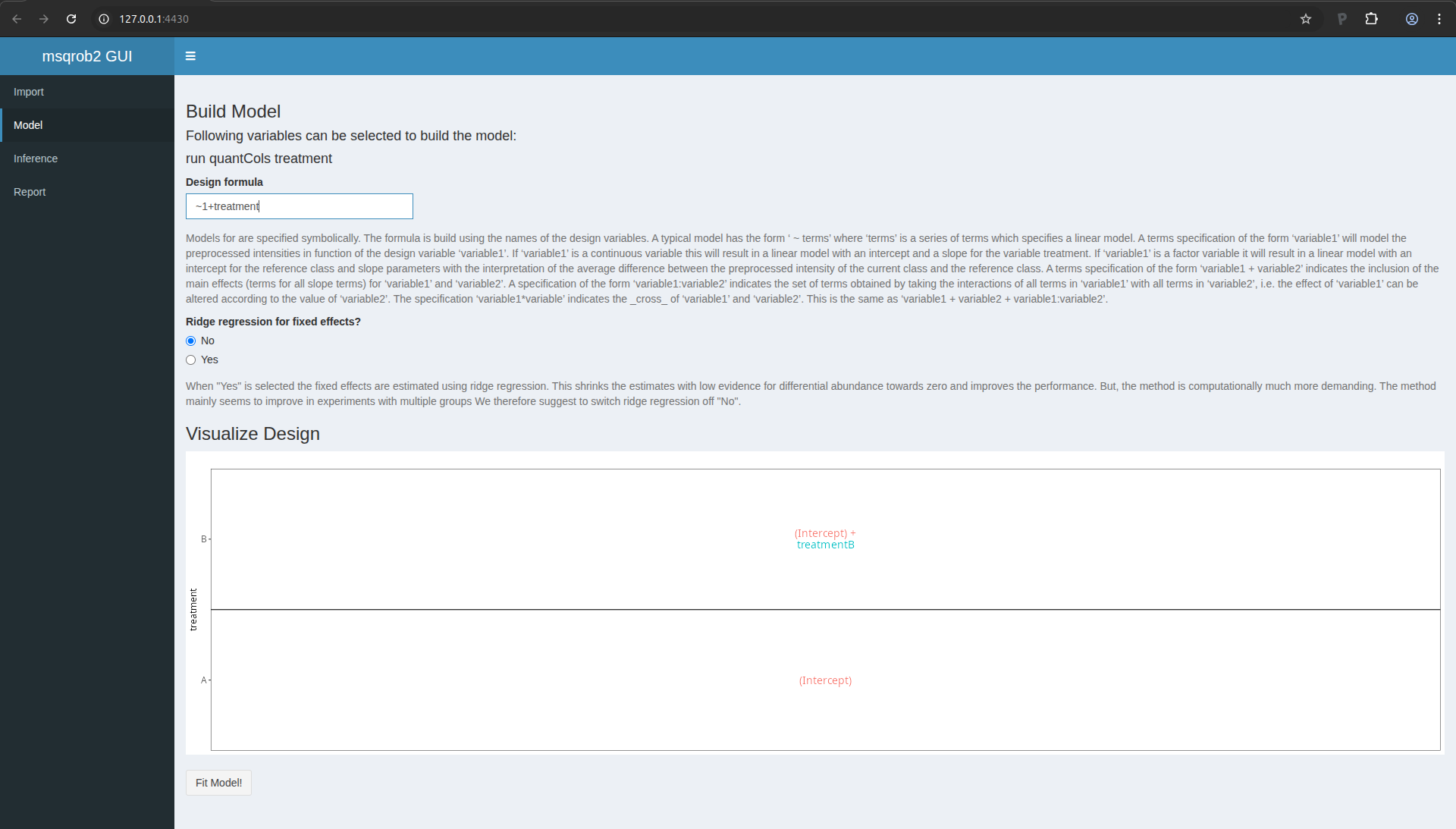

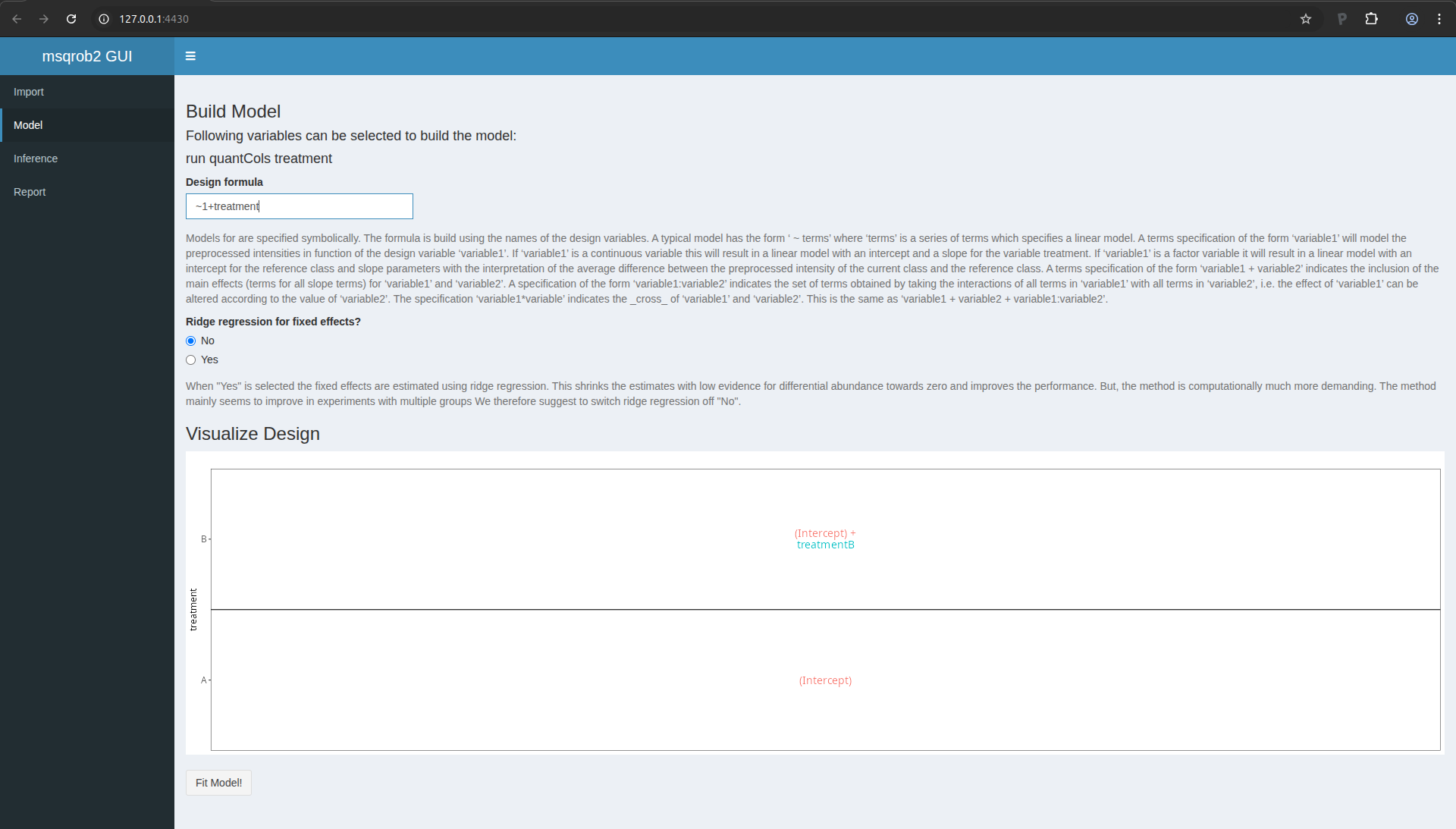

Data modelling

You can now move to the data modelling tab by clicking “Model” in the

menu on the left.

We will model the data with a different group mean for the variable

treatment with two levels: spikein treatment A and B. We can specify

this model by inserting ~1+treatment in the “Design formula”

textbox. Note, that a formula always starts with a symbol ‘~’ (see the

descriptive paragraph in the application).

Note that as soon as we do that, the design is visualised.

Figure 15. msqrob2 Model tab with design

This visualisation shows the different group means that are modelled.

- The group mean for the treatment A is modelled using the parameter

(Intercept).

- The group mean for the treatment B is modelled using a linear

combination of the two model parameters

(Intercept) + treatmentB.

- The average difference in preprocessed protein expression value

between both conditions equals

treatmentB.

Remember that we log-transformed the intensities:

\[

\log_2FC_\text{B}=\log_2 B - \log_2 A = \log_2\frac{B}{A} = \text{treatmentB}

\]

Note that a linear combination of model parameters is also referred to

as a contrast in statistics. This contrast has the interpretation of a

log2 fold change between condition B and condition A. Positive

estimates denote that the abundance of the protein is on average

higher in condition B, negative estimates denote that the abundance is

on average higher in condition A. An estimate equal to 0 indicates

that the estimated abundances are equal.

A log2 FC = 1 indicates that the average abundance in condition B is 2

x higher than the average abundance in condition A, i.e. an 2 fold

upregulation in condition B as compared to condition A.

We now have to click the Fit Model! button to fit the models for

each protein. We are now ready for assessing differential abundance of

each protein using formal hypothesis testing.

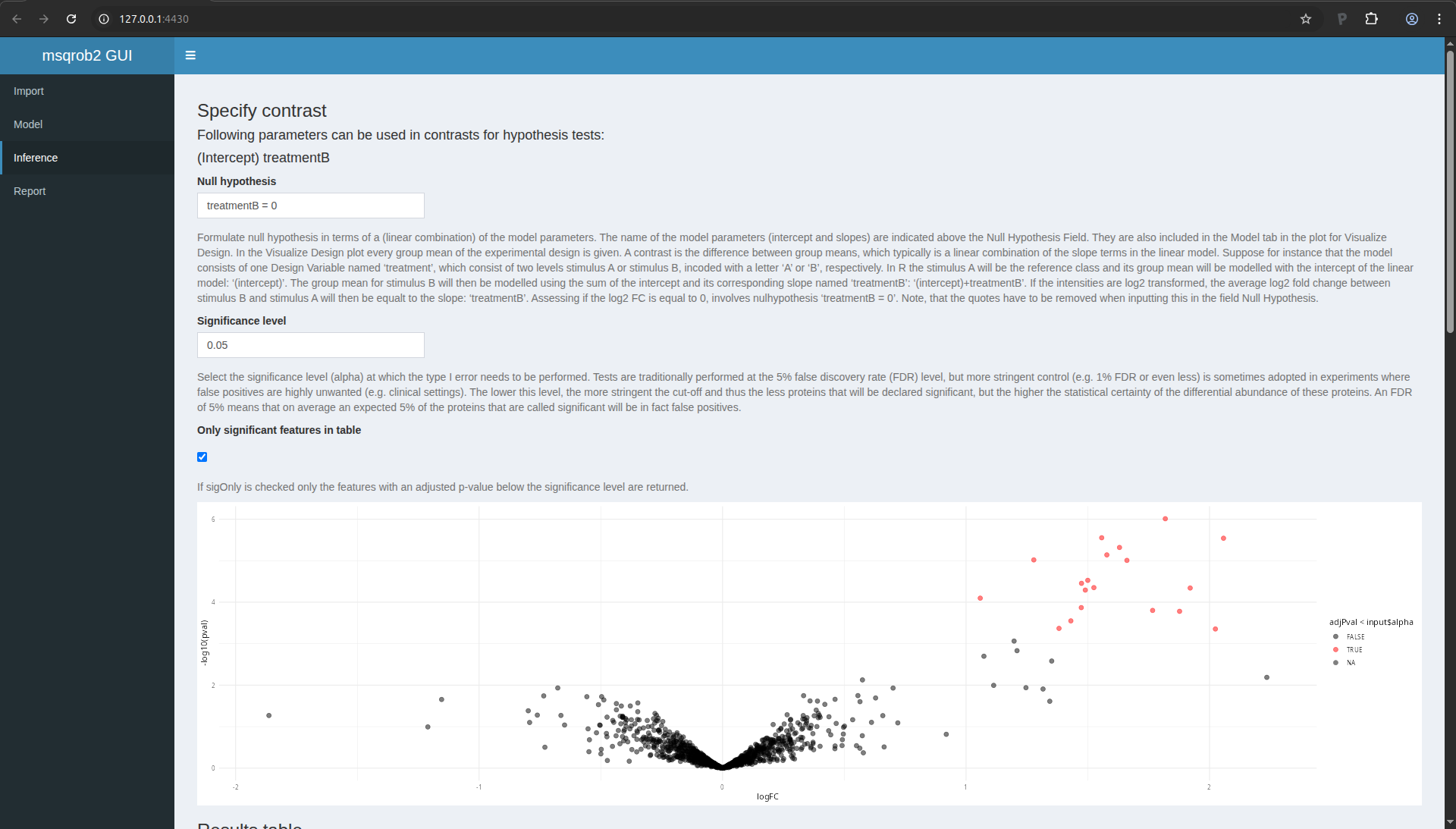

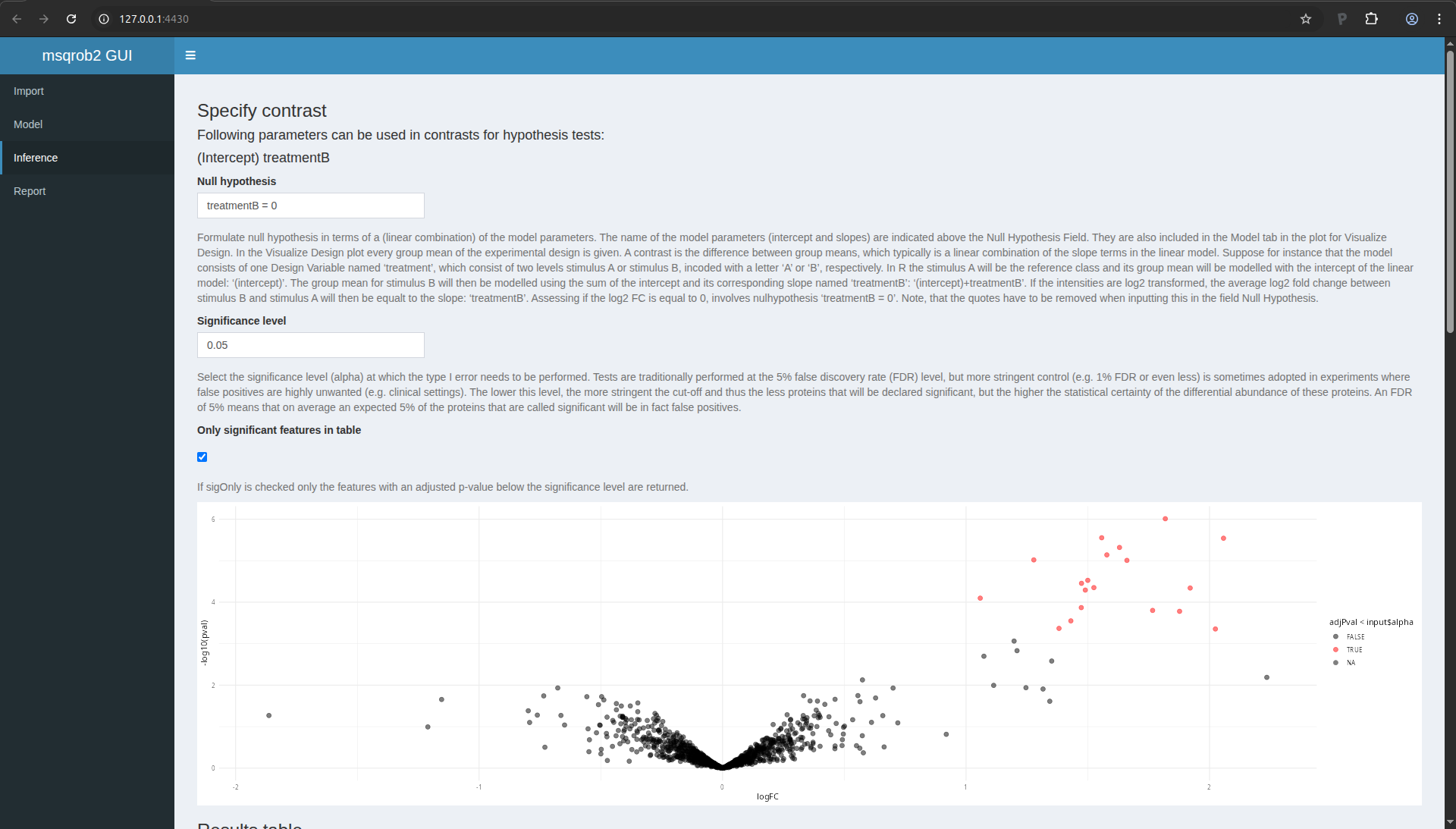

Inference

Click on the “Inference” tab in the left menu.

In the statistical analysis we will want to test the null hypothesis

that

\[ H_0: \log_2 B-\log_2 6A = 0 \]

Against the alternative that

\[ H_1: \log_2 B - \log_2 A \neq 0 \]

We can specify the null hypothesis as a linear combination of the

model parameters, i.e. treatmentB = 0. We will falsify this null

hypothesis for each protein separately based on the linear model. So,

under the null hypothesis we reason that there is no effect of the

spike-in treatment on the abundance of a specific protein. The p-value

of the statistical test than indicates the probability to observe an

effect (fold change), that is as extreme or more extreme (equally or

more up or down regulated) than what is observed in the sample, by

random change (when the null hypothesis is true and when there is in

reality no effect of the treatment).

To proceed, fill in the field “Null hypothesis” with treatmentB = 0.

After 1-2 second, the window is updated

Figure 16. msqrob2 Inference tab with contrast

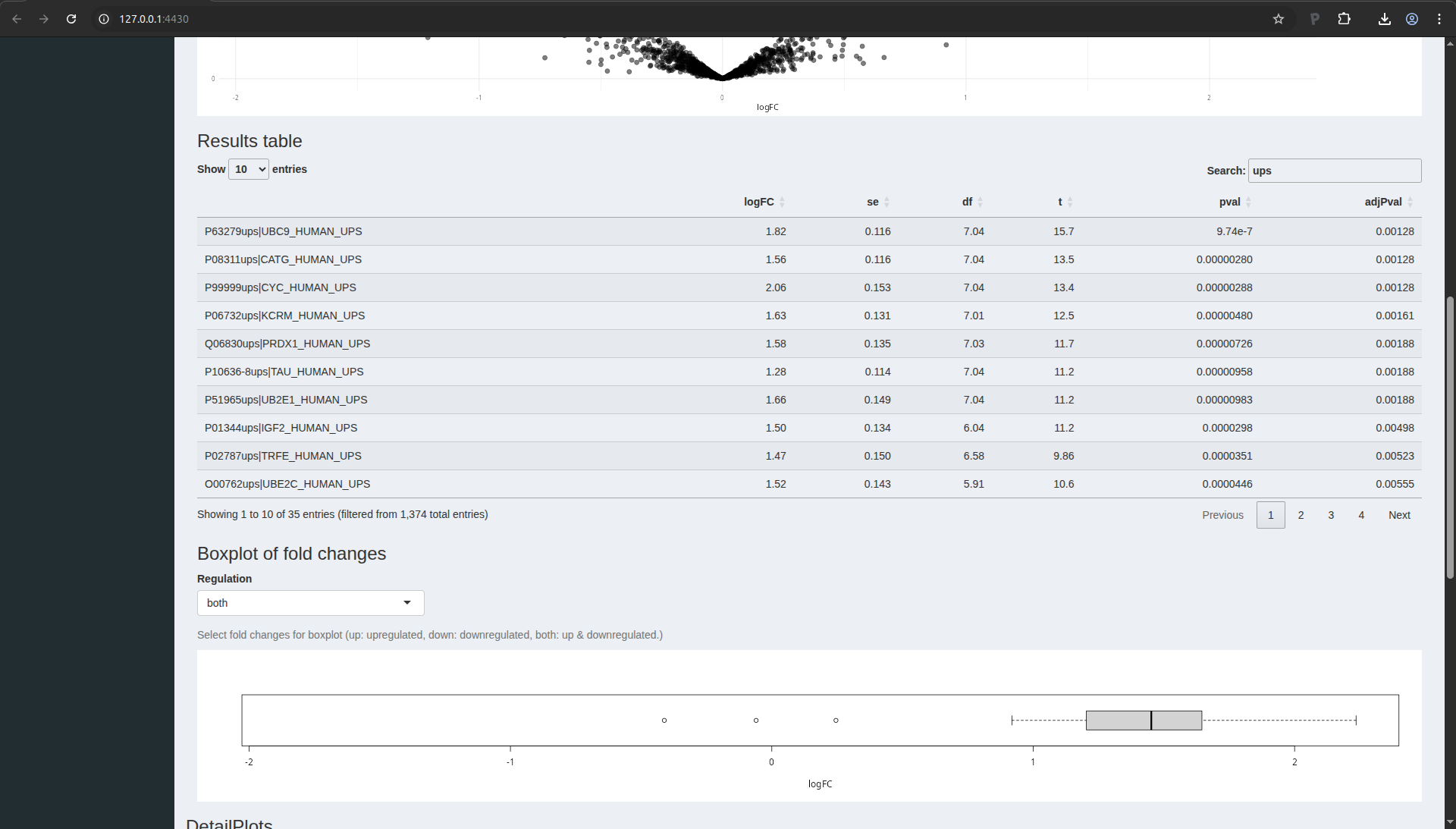

Volcano plot

The application now also provides a volcano plot appears giving you a

view on statistical significance in the y-axis, i.e. the \(-\log_{10}\)

transformed p-value: a value of 1 equals to a p-value of 0.1, a value

of 2 equals a p-value of 0.01, etc against biological relevance/the

effect size in the x-axis, which is the \(\log_2FC\).

Result table

You also get a table with selected feature. By default these are all

proteins that are significant with the specified “Significance level”

field. You also obtain a boxplot of the log2-fold changes for all

proteins that are selected in the table.

Note that 19 proteins are recovered as significant. As expected all

these proteins are UPS proteins which are known to be spiked in the

samples. If you untick the option only significant features in table, all proteins are shown in the table.

You can highlight proteins on the volcano plot by selecting lines of

interest in the result table. You can also search for specific

proteins in the list by using the search field above the table. E.g.

type ups.

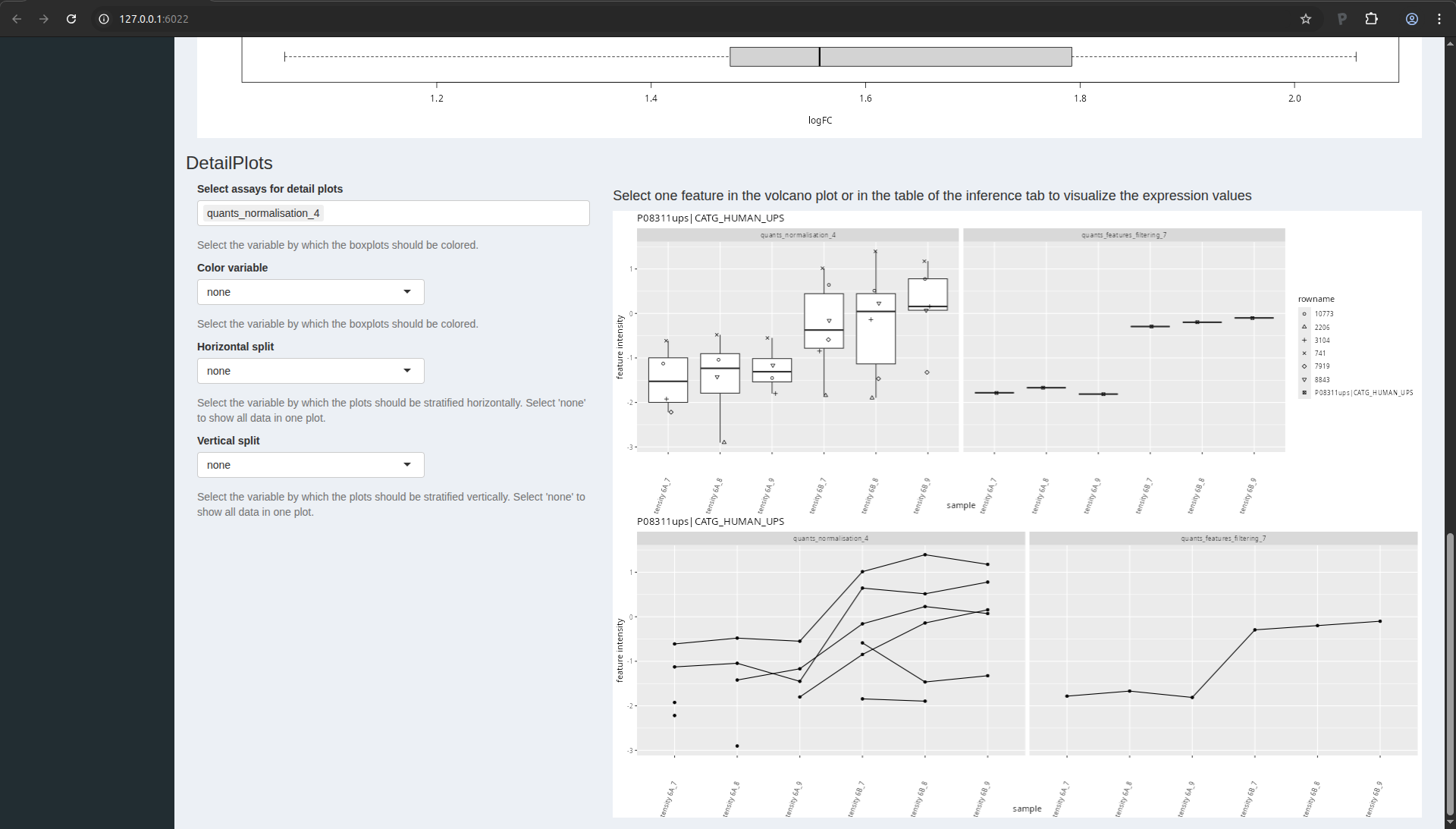

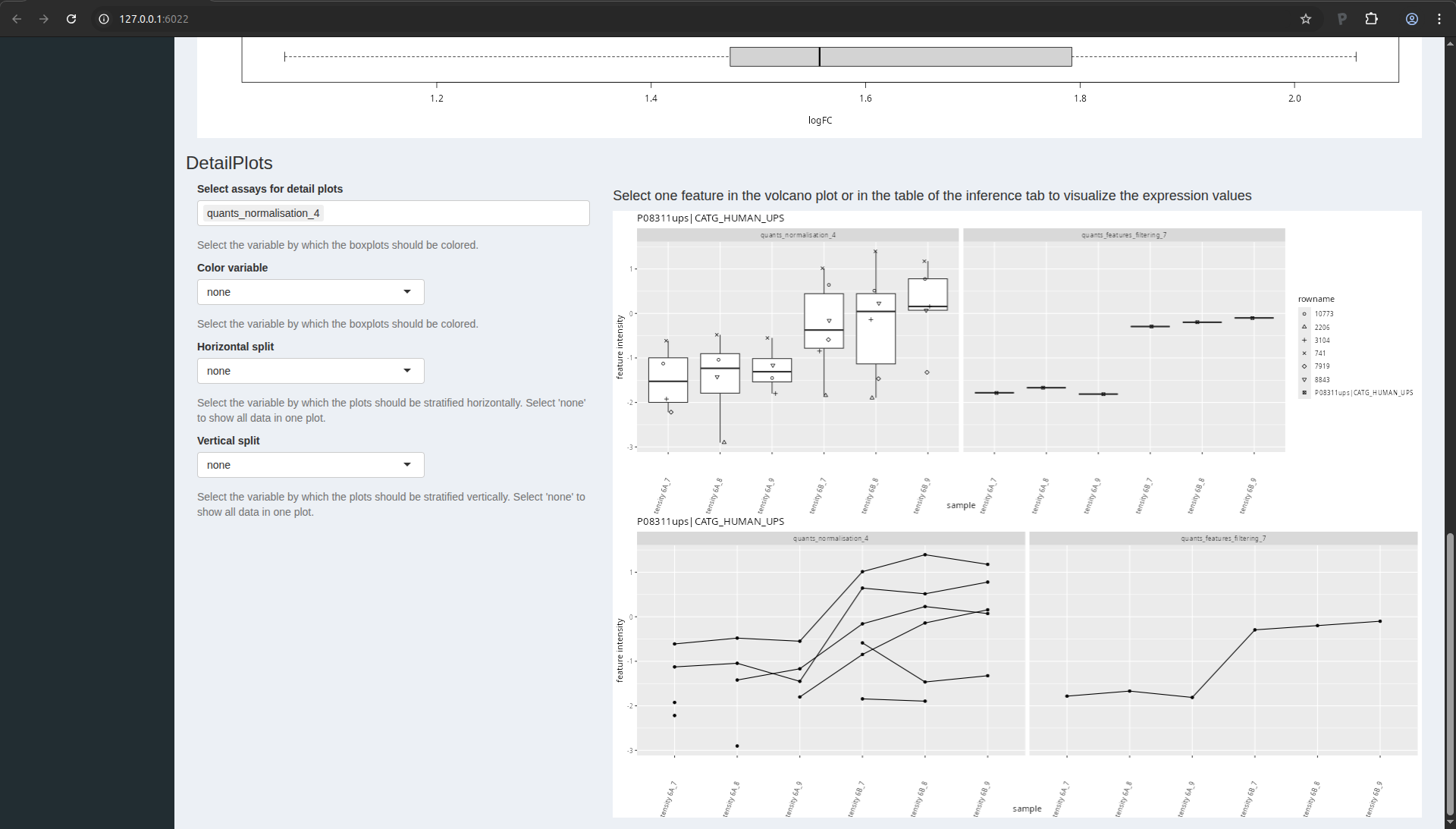

Detail plots

If you select one protein in the table, you can also explore the

underlying normalised peptide intensities and protein intensities of

the underlying data in the “DetailPlots”:

Figure 17. msqrob2 detail plots.

You can further modify the plot by coloring the data according to a

design variable or by splitting the data horizontally or vertically

according to design variables.

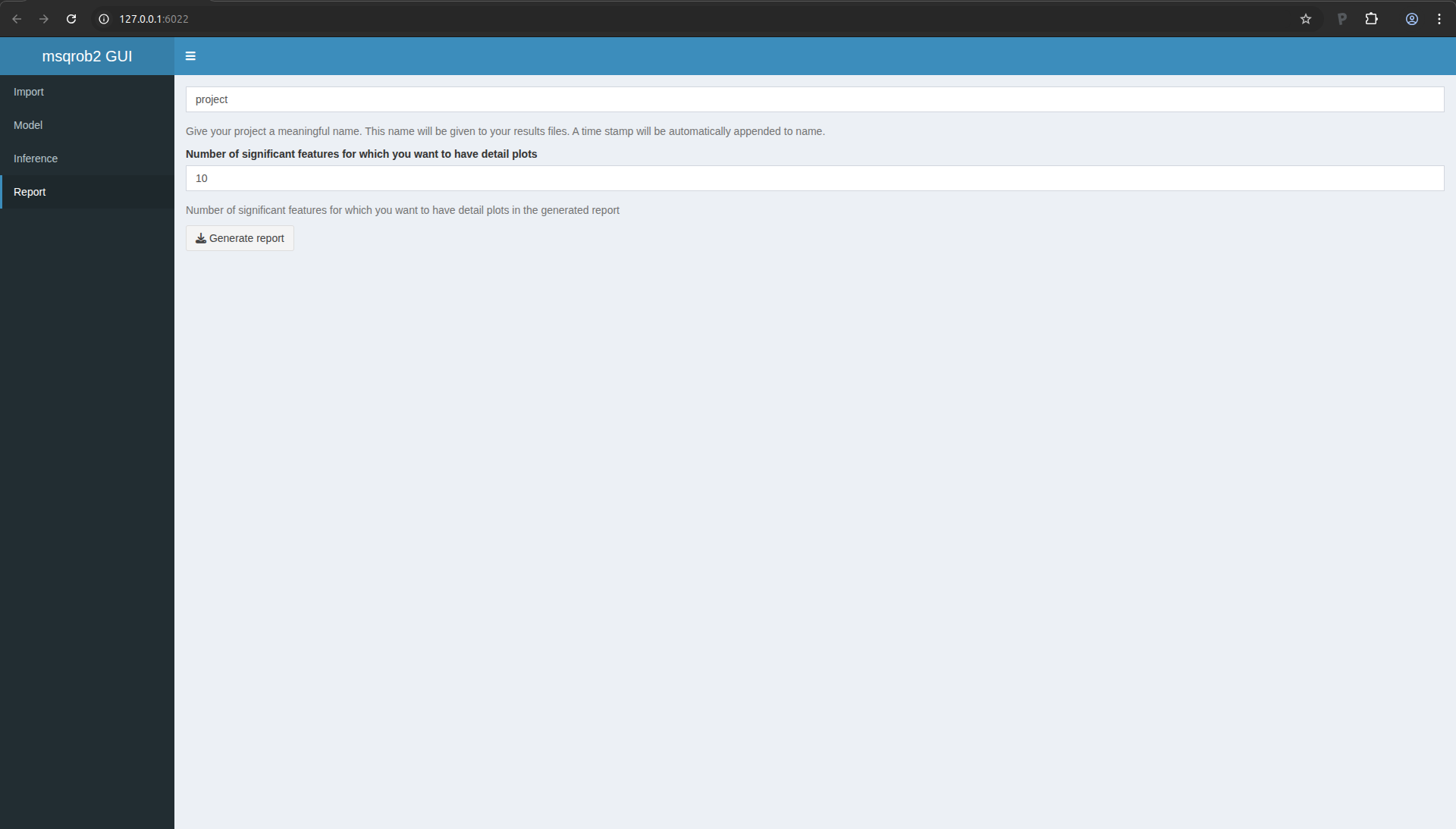

LS0tCnRpdGxlOiAiSW50cm9kdWN0aW9uIHRvIHByb3Rlb21pY3MgZGF0YSBhbmFseXNpcyIKc3VidGl0bGU6ICJJbXBhY3Qgb2Ygc3VtbWFyaXNhdGlvbiIKYXV0aG9yOiAiTGlldmVuIENsZW1lbnQiCmRhdGU6ICJzdGF0T21pY3MsIEdoZW50IFVuaXZlcnNpdHkgKGh0dHBzOi8vc3RhdG9taWNzLmdpdGh1Yi5pbykiCm91dHB1dDoKICAgIGh0bWxfZG9jdW1lbnQ6CiAgICAgIHRoZW1lOiBmbGF0bHkgICAgICAKICAgICAgY29kZV9kb3dubG9hZDogZmFsc2UKICAgICAgdG9jOiBmYWxzZQogICAgICB0b2NfZmxvYXQ6IGZhbHNlCiAgICAgIG51bWJlcl9zZWN0aW9uczogZmFsc2UKYmlibGlvZ3JhcGh5OiBtc3Fyb2IyLmJpYgotLS0KCjxhIHJlbD0ibGljZW5zZSIgaHJlZj0iaHR0cHM6Ly9jcmVhdGl2ZWNvbW1vbnMub3JnL2xpY2Vuc2VzL2J5LW5jLXNhLzQuMCI+PGltZyBhbHQ9IkNyZWF0aXZlIENvbW1vbnMgTGljZW5zZSIgc3R5bGU9ImJvcmRlci13aWR0aDowIiBzcmM9Imh0dHBzOi8vaS5jcmVhdGl2ZWNvbW1vbnMub3JnL2wvYnktbmMtc2EvNC4wLzg4eDMxLnBuZyIgLz48L2E+CgojIyBUaGUgQ1BUQUMgQSB2cyBCIGRhdGFzZXQKCk91ciBmaXJzdCBjYXNlLXN0dWR5IGlzIGEgc3Vic2V0IG9mIHRoZSBkYXRhIG9mIHRoZSA2dGggc3R1ZHkgb2YgdGhlCkNsaW5pY2FsIFByb3Rlb21pYyBUZWNobm9sb2d5IEFzc2Vzc21lbnQgZm9yIENhbmNlciAoQ1BUQUMpLiBJbiB0aGlzCmV4cGVyaW1lbnQsIHRoZSBhdXRob3JzIHNwaWtlZCB0aGUgU2lnbWEgVW5pdmVyc2FsIFByb3RlaW4gU3RhbmRhcmQKbWl4dHVyZSAxIChVUFMxKSBjb250YWluaW5nIDQ4IGRpZmZlcmVudCBodW1hbiBwcm90ZWlucyBpbiBhIHByb3RlaW4KYmFja2dyb3VuZCBvZiA2MCBuZy/OvEwgU2FjY2hhcm9teWNlcyBjZXJldmlzaWFlIHN0cmFpbiBCWTQ3NDEgKE1BVGEsCmxldTLOlDAsIG1ldDE1zpQwLCB1cmEzzpQwLCBoaXMzzpQxKS4gVHdvIGRpZmZlcmVudCBzcGlrZS1pbgpjb25jZW50cmF0aW9ucyB3ZXJlIHVzZWQ6IDZBICgwLjI1IGZtb2wgVVBTMSBwcm90ZWlucy/OvEwpIGFuZCA2QiAoMC43NApmbW9sIFVQUzEgcHJvdGVpbnMvzrxMKSBbNV0uIFdlIGxpbWl0ZWQgb3Vyc2VsdmVzIHRvIHRoZSBkYXRhIG9mCkxUUS1PcmJpdHJhcCBXIGF0IHNpdGUgNTYuIFRoZSBkYXRhIHdlcmUgc2VhcmNoZWQgd2l0aCBNYXhRdWFudAp2ZXJzaW9uIDEuNS4yLjgsIGFuZCBkZXRhaWxlZCBzZWFyY2ggc2V0dGluZ3Mgd2VyZSBkZXNjcmliZWQgaW4KW0Bnb2VtaW5uZTIwMTZdLiBUaHJlZSByZXBsaWNhdGVzIGFyZSBhdmFpbGFibGUgZm9yIGVhY2gKY29uY2VudHJhdGlvbi4KCi0gVGhlIHJhdyBkYXRhIGZpbGVzIGNhbiBiZSBkb3dubG9hZGVkIGZyb20gCiAgaHR0cHM6Ly9jcHRhYy1kYXRhLXBvcnRhbC5nZW9yZ2V0b3duLmVkdS9jcHRhYy9wdWJsaWM/c2NvcGU9UGhhc2UrSQogIChTdHVkeSA2KQoKLSBUaGUgTWF4UXVhbnQgZGF0YSBjYW4gYmUgZG93bmxvYWRlZCBbemlwIGZpbGUgd2l0aCAKICBkYXRhXShodHRwczovL2dpdGh1Yi5jb20vc3RhdE9taWNzL1BEQTI1RUJJL2FyY2hpdmUvcmVmcy9oZWFkcy9kYXRhLnppcCkuCiAgVGhlIHBlcHRpZGVzLnR4dCBmaWxlIGNhbiBiZSBmb3VuZCBpbiAKICBgZGF0YS9xdWFudGlmaWNhdGlvbi9jcHRhY0F2c0JfbGFiM2AuCgojIyBJbnN0YWxsYXRpb24gaW5zdHJ1Y3Rpb25zIGFuZCBzZXR1cAoKWW91IGNhbiBmaW5kIHRoZSBpbnN0YWxsYXRpb24gaW5zdHJ1Y3Rpb25zIGZvciBhbGwgcmVxdWlyZWQgc29mdHdhcmUKdG8gcnVuIHRoaXMgZXhlcmNpc2UgaW4gW3NvZnR3YXJlXSguL3NvZnR3YXJlLmh0bWwpLiBUaGlzIHdpbGwgCmluc3RhbGw6CgotIFIgYW5kIFJTdHVkaW8KLSBgUUZlYXR1cmVzYCB0byByZWFkIGFuZCBwcm9jZXNzIG1hc3Mgc3BlY3Ryb21ldHJ5IChNUykgcXVhbnRpdGF0aXZlIAogIGRhdGEKLSBgUUZlYXR1cmVzR1VJYCB0aGUgZ3JhcGhpY2FsIHVzZXIgaW50ZXJmYWNlIChHVUkpIHRvIFFGZWF0dXJlcwotIGBtc3Fyb2IyYCB0byBwZXJmb3JtIHJvYnVzdCBzdGF0aXN0aWNhbCBpbmZlcmVuY2UgZm9yIHF1YW50aXRhdGl2ZSBNUyAKICBwcm90ZW9taWNzCi0gYG1zcXJvYjJndWlgIHRoZSBHVUkgdG8gbXNxcm9iMgoKVXBvbiBpbnN0YWxsYXRpb24gCgoxLiBPcGVuIFJzdHVkaW8sIHlvdSB3aWxsIHNlZSB0aGUgZm9sbG93aW5nIHdpbmRvdwoKIVtGaWd1cmUgMS4gUlN0dWRpb10oLi9maWd1cmVzL3JzdHVkaW8ucG5nKQoKMi4gQ29weSBhbmQgcGFzdGUgZm9sbG93aW5nIGNvbW1hbmRzIGluIHRoZSBjb25zb2xlIHdpbmRvdyBhbmQgdHlwZSBlbnRlcgoKYGBge3IsIG1lc3NhZ2U9RkFMU0UsIHdhcm5pbmc9RkFMU0V9CmxpYnJhcnkoUUZlYXR1cmVzR1VJKQpsaWJyYXJ5KG1zcXJvYjJndWkpCm9wdGlvbnMoc2hpbnkubWF4UmVxdWVzdFNpemUgPSA1MDAgKiAxMDI0XjIpCmBgYAoKLSBUaGUgZmlyc3QgbGluZSB3aWxsIGxvYWQgdGhlIGBRRmVhdHVyZXNHVUlgIHBhY2thZ2UgYW5kIGl0cyAKICBkZXBlbmRlbmNpZXMgKGluY2x1ZGluZyBgUUZlYXR1cmVzYCkKLSBUaGUgc2Vjb25kIGxpbmUgd2lsbCBsb2FkIHRoZSBgbXNxcm9iMmd1aWAgcGFja2FnZSBhbmQgaXRzCiAgZGVwZW5kZW5jaWVzIChpbmNsdWRpbmcgYG1zcXJvYjJgKQotIFRoZSBsYXN0IGxpbmUgd2lsbCBpbmNyZWFzZSB0aGUgYXZhaWxhYmxlIHRoZSBtYXhpbWFsIFJBTSB1c2FnZSBieSAKICB0aGUgYXBwbGljYXRpb24gdG8gNTAwIE1CLgoKIVtGaWd1cmUgMi4gUnN0dWRpbyB3aXRoIHRoZSBwYWNrYWdlIGxvYWRpbmcgY29tbWFuZHNdKC4vZmlndXJlcy9yc3R1ZGlvMi5wbmcpCgojIyBEYXRhIHByZXByb2Nlc3NpbmcKCkJlZm9yZSBhbmFseXNpbmcgdGhlIGRhdGEsIHdlIG11c3QgcGVyZm9ybSBkYXRhIHByZXByb2Nlc3NpbmcuIFRoZQp3aWxsIGVuc3VyZSB0aGUgZGF0YSBtZWV0IG91ciBtb2RlbCBhc3N1bXB0aW9ucy4gRGF0YSBpbXBvcnQgYW5kCnByZXByb2Nlc3NpbmcgaXMgcGVyZm9ybWVkIGJ5IHRoZSBgUUZlYXR1cmVzR1VJYCBhcHBsaWNhdGlvbi4KCiMjIyBPcGVuIGBRRmVhdHVyZXNHVUlgIAoKUnVuIHRoZSBmb2xsb3dpbmcgY29tbWFuZCAobGlrZSBhYm92ZSk6CgpgYGB7ciwgZXZhbD1GQUxTRX0KUUZlYXR1cmVzR1VJOjppbXBvcnRRRmVhdHVyZXMoKQpgYGAKCkEgbmV3IHdpbmRvdyB3aWxsIG9wZW4gd2l0aCB0aGUgc2hpbnlBcHAKCiFbRmlndXJlIDMuIFFGZWF0dXJlc0dVSSBTaGlueUFwcF0oLi9maWd1cmVzL3FmZWF0dXJlc2d1aV9mcmVzaC5wbmcpCgpJdCBpcyBhZHZpY2FibGUgdG8gb3BlbiB0aGUgc2hpbnkgYXBwIGluIGEgd2ViYnJvd3Nlci4gWW91IGNhbiBkbwp0aGF0IGJ5IGNsaWNraW5nIG9uIHRoZSBidXR0b24gYE9wZW4gaW4gQnJvd3NlcmAsIHdoaWNoIHlvdSBjYW4gZmluZAphYm92ZSB0aGUgYmx1ZSBuYXZpZ2F0aW9uIGJhciBhbmQgdGhlIHNoaW55QXBwIHdpbGwgcG9wIHVwIGluIGEgbmV3CmJyb3dzZXIgdGFiL3dpbmRvdy4KCiFbRmlndXJlIDQuIFFGZWF0dXJlc0dVSSBpbiBicm93c2VyIHRhYl0oLi9maWd1cmVzL1FGZWF0dXJlc0dVSS5wbmcpCgojIyMgSW1wb3J0IHRoZSBkYXRhCgpPdXIgTVMtYmFzZWQgcHJvdGVvbWljcyBkYXRhIGFuYWx5c2lzIHdvcmtmbG93cyBhbHdheXMgc3RhcnQgd2l0aCAyIApwaWVjZXMgb2YgZGF0YTogCgoxLiBUaGUgc2VhcmNoIGVuZ2luZSBvdXRwdXQuIEluIHRoaXMgdHV0b3JpYWwsIHdlIHdpbGwgdXNlIHRoZQogICBpZGVudGlmaWVkIGFuZCBxdWFudGlmaWVkIHBlcHRpZGUgZGF0YSBieSBNYXhRdWFudCwgY2FsbGVkCiAgIGBwZXB0aWRlcy50eHRgLgoyLiBBbiBhbm5vdGF0aW9uIGZpbGUgZGVzY3JpYmluZyB0aGUgZXhwZXJpbWVudGFsIGRlc2lnbi4gRWFjaCBsaW5lCiAgIGNvcnJlc3BvbmRzIHRvIGEgc2FtcGxlIGFuZCBlYWNoIGNvbHVtbiBwcm92aWRlcyBhIHNhbXBsZSAKICAgYW5ub3RhdGlvbi4KClRoZSBmaXJzdCBzdGVwIGlzIHRvIHVwbG9hZCB0aGUgYHBlcHRpZGVzLnR4dGAgZmlsZS4gVGhpcyBpcyB0aGUgZmlsZQp0aGF0IGNvbnRhaW5zIHlvdXIgcGVwdGlkZS1sZXZlbCBpbnRlbnNpdGllcy4gTm90ZSB0aGF0IHRoZQppbnRlbnNpdGllcyBtZWFzdXJlZCBpbiBlYWNoIHNhbXBsZSBhcmUgc3RvcmVkIGluIGEgc2VwYXJhdGUgY29sdW1uCmFuZCBlYWNoIHJvdyByZXByZXNlbnRzIGEgcGVwdGlkZS4gVGhpcyBtZWFucyB0aGF0IE1heFF1YW50IGFscmVhZHkKcGVyZm9ybWVkIGFnZ3JlZ2F0aW9uIG9mIFBTTS1sZXZlbCBkYXRhIGludG8gcGVwdGlkZS1sZXZlbCBkYXRhLiBGb3IKbW9yZSBjb250cm9sIG9uIFBTTS1sZXZlbCBxdWFsaXR5IGNvbnRyb2wgYW5kIGRhdGEgYWdncmVnYXRpb24sIG91cgpkYXRhIGFuYWx5c2lzIHdvcmtmbG93cyBjYW4gYWxzbyBzdGFydCBmcm9tIHRoZSBgZXZpZGVuY2UudHh0YCBmaWxlcwp3aGljaCBjb250YWluIFBTTS1sZXZlbCBpbmZvcm1hdGlvbiwgYnV0IHdpbGwgbm90IGJlIGNvdmVyZWQgaW4gdGhpcwp0dXRvcmlhbC4gRm9yIGEgTWF4UXVhbnQgc2VhcmNoLCB0aGUgYHBlcHRpZGVzLnR4dGAgZmlsZSBjYW4gYmUgZm91bmQKYnkgZGVmYXVsdCBpbiB0aGUgYHBhdGhfdG9fcmF3X2ZpbGVzL2NvbWJpbmVkL3R4dC9gIGZvbGRlciBmcm9tIHRoZQpNYXhRdWFudCBvdXRwdXQsIHdpdGggYHBhdGhfdG9fcmF3X2ZpbGVzYCB0aGUgZm9sZGVyIHdoZXJlIHJhdyBmaWxlcwp3ZXJlIHNhdmVkLiBUbyB1cGxvYWQgdGhlIGBwZXB0aWRlLnR4dGAgZmlsZSwgY2xpY2sgb24gdGhlICJCcm93c2UuLi4iCmJ1dHRvbiB1bmRlciB0aGUgImFzc2F5RGF0YSIgdGlsZSB0aXRsZS4gWW91IGRvbid0IG5lZWQgdG8gYWRhcHQgdGhlCmRlZmF1bHQgcGFyYW1ldGVycywgeW91IGNhbiBjbGljayB0aGUgIkltcG9ydCIgYnV0dG9uIHJpZ2h0IGF3YXkuIEEKcHJldmlldyBvZiB0aGUgdGFibGUgd2lsbCBhcHBlYXIgYW5kIHdpbGwgbGV0IHlvdSBxdWlja2x5IGNoZWNrIHRoZQpkYXRhLgoKIVtGaWd1cmUgNS4gVGhlIHBlcHRpZGUgZGF0YSBoYXZlIGJlZW4gc3VjY2VzZnVsbHkgdXBsb2FkZWRdKC4vZmlndXJlcy9wZXB0aWRlc19pbXBvcnRlZC5wbmcpCgpZb3UgY2FuIHNlZSB0aGF0IE1heFF1YW50IGdlbmVyYXRlZCBhIHRhYmxlIHdpdGggMTEsNDY2IGxpbmVzLApjb3JyZXNwb25kaW5nIHRvIDExLDQ2NiBwZXB0aWRlcy4gRm9yIGVhY2ggcGVwdGlkZSwgTWF4UXVhbnQgZ2VuZXJhdGVkCjcxIGNvbHVtbnMgY29udGFpbmluZyB2YXJpb3VzIHBpZWNlcyBvZiBpbmZvcm1hdGlvbiwgbGlrZSB0aGUgcGVwdGlkZQpzZXF1ZW5jZSwgdGhlIHByb3RlaW4gZ3JvdXBzIHRoZSBwZXB0aWRlIG1hdGNoZXMgdG8sIHRoZSBjaGFyZ2Ugc3RhdGVzCmZvdW5kLC4uLiBBbW9uZyB0aGVzZSBjb2x1bW5zLCB0aGVyZSBpcyBhbHNvIGEgc2V0IG9mIGNvbHVtbnMKY29udGFpbmluZyB0aGUgcXVhbnRpZmllZCBNUyBpbnRlbnNpdGllcyBmb3IgZWFjaCBzYW1wbGUgKGkuZS4gY29sdW1ucwpuYW1lZCBgSW50ZW5zaXR5IFhYWGApLiBUaGVzZSB3aWxsIGxhdGVyIGJlIGZsYWdnZWQgYXMgcXVhbnRpdGF0aXZlIAppbmZvcm1hdGlvbi4KClNpbWlsYXJseSwgd2UgbmVlZCB0byB1cGxvYWQgdGhlIGV4cGVyaW1lbnRhbCBhbm5vdGF0aW9uIGZpbGUgd2hpY2ggaXMKY2FsbGVkIGBleHBlcmltZW50YWxfYW5ub3RhdGlvbi50eHRgLiBXZSBoZXJlIHByb3ZpZGUgdGhlIHRhYmxlIGFzIGEKYC50eHRgLCBidXQgeW91IGNhbiBzdG9yZSB5b3VyIHRhYmxlIGluIG1hbnkgY29tbW9uIGZvcm1hdHMsIHN1Y2ggYXMKYC5jc3ZgIG9yIGAudHN2YC4gTm90ZSBFeGNlbCBhbGxvd3MgeW91IHRvIHN0b3JlIHNwcmVhZHNoZWV0cyBpbiBzdWNoCmZvcm1hdHMuIFRvIHVwbG9hZCB0aGUgYGV4cGVyaW1lbnRhbF9hbm5vdGF0aW9uLnR4dGAgZmlsZSwgY2xpY2sgb24KdGhlICJCcm93c2UuLi4iIGJ1dHRvbiB1bmRlciB0aGUgImNvbERhdGEiIHRpbGUgdGl0bGUuIFlvdSBkb24ndCBuZWVkCnRvIGFkYXB0IHRoZSBkZWZhdWx0IHBhcmFtZXRlcnMsIHlvdSBjYW4gY2xpY2sgdGhlICJJbXBvcnQiIGJ1dHRvbgpyaWdodCBhd2F5LiBBIHByZXZpZXcgb2YgdGhlIHRhYmxlIHdpbGwgYXBwZWFyIGFuZCB3aWxsIGxldCB5b3UKcXVpY2tseSBjaGVjayB0aGUgZGF0YS4gTm90ZSB0aGF0IHVwbG9hZGluZyB0aGUgYW5ub3RhdGlvbiBmaWxlIGlzCm9wdGlvbmFsIGZvciBkYXRhIHByZXByb2Nlc3NpbmcsIGJ1dCB5b3Ugd29uJ3QgYmUgYWJsZSB0byBwZXJmb3JtIGRhdGEKbW9kZWxsaW5nIGluIHRoZSBuZXh0IHNlY3Rpb24uCgohW0ZpZ3VyZSA2LiBUaGUgc2FtcGxlIGFubm90YXRpb25zIGhhdmUgYmVlbiBzdWNjZXNmdWxseSB1cGxvYWRlZF0oLi9maWd1cmVzL2Fubm90YXRpb25zX2ltcG9ydGVkLnBuZykKCkZvciB0aGUgQ1BUQUMgZGF0YXNldCBhbmFseXNlZCB3aXRoIE1heFF1YW50LCB0aGUgZmlyc3QgY29sdW1uIGVxdWFscwp0aGUgbmFtZXMgZ2l2ZW4gaW4gTWF4UXVhbnTigJlzICJFeHBlcmltZW50IiBjb2x1bW4uIFRoZSBzZWNvbmQgY29sdW1uCmNvbnRhaW5zIHRoZSBuYW1lcyBvZiB0aGUgcXVhbnRpdGF0aXZlIGNvbHVtbnMgaW4gdGhlIHRhYmxlIGFib3ZlOyBpdApzaG91bGQgYWx3YXlzIGJlIG5hbWVkIGBxdWFudENvbHNgIGFzIGl0IHdpbGwgYWxsb3cgdGhlIHNvZnR3YXJlIHRvCmNvcnJlY3RseSBsaW5rIHRoZSB0d28gdGFibGVzLiBUaGUgbGFzdCBjb2x1bW4gaXMgcmVsYXRlZCB0byB0aGUgc3R1ZHkKZGVzaWduOyBoZXJlIHRoZSB0cmVhdG1lbnQgcHJvdmlkZXMgdGhlIHNwaWtlLWluIGNvbmRpdGlvbi4KCllvdSBhcmUgbm93IHJlYWR5IHRvIGxpbmsgdGhlIHR3byB0YWJsZXMgaW50byBhIGBRRmVhdHVyZXNgIG9iamVjdCwKd2hpY2ggaXMgdGhlIGludGVybmFsIHJlcHJlc2VudGF0aW9uIG9mIHRoZSBkYXRhIHdlIHdpbGwgdXNlIGZvciB0aGUKcmVzdCBvZiB0aGUgd29ya2Zsb3cuIFNjcm9sbCB1cCB0byB0aGUgIlFGZWF0dXJlcyBDb252ZXJ0ZXIiIHRpbGUuIFlvdQpjYW4gbGVhdmUgYWxsIHRoZSBkZWZhdWx0IHNldHRpbmcgYW5kIGNsaWNrIG9uIHRoZSBncmVlbiBidXR0b24KIkNvbnZlcnQgdG8gYSBRRmVhdHVyZXMgb2JqZWN0Ii4gIAoKQWZ0ZXIgdXBsb2FkaW5nIHlvdXIgZmlsZXMsIGEgcHJldmlldyBvZiB0aGUgZGF0YSBhcHBlYXJzIChmZWVsIGZyZWUKdG8gY2xpY2sgb24gdGhlIHRhYmxlIHJvdykuIFRoZSB3aW5kb3cgc2hvdWxkIGxvb2sgYXMgZm9sbG93czoKCiFbRmlndXJlIDcuIFRoZSBRRmVhdHVyZXMgb2JqZWN0IGlzIHN1Y2Nlc3NmdWxseSBjb252ZXJ0ZWRdKC4vZmlndXJlcy9xZmVhdHVyZXNfaW1wb3J0ZWQucG5nKQoKIyMjIENvbmZpZ3VyaW5nIHRoZSBwcmVwcm9jZXNzaW5nIHdvcmZsb3cKCk1vdmUgdG8gdGhlIG5leHQgdGFibGUgYnkgY2xpY2tpbmcgb24gIlByZS1Qcm9jZXNzaW5nIENvbmZpZ3VyYXRpb24iCmluIHRoZSBtZW51IHRvIHRoZSBsZWZ0LiBDbGljayBvbiB0aGUgZ3JlZW4gYnV0dG9uICIrIiB0byBhZGQgYSBzdGVwLgpJbiB0aGlzIHR1dG9yaWFsLCB5b3Ugd2lsbCBuZWVkIHRvIGFkZCA3IHN0ZXBzIGluIHRvdGFsLiBZb3Ugc2hvdWxkCnBhcmFtZXRlcmlzZSB0aGVtIGFzIGZvbGxvd3M6CgoxLiBMb2cgVHJhbnNmb3JtYXRpb24KMi4gQWRkIEFubm90YXRpb25zCjMuIEZlYXR1cmVzIGZpbHRlcmluZwo0LiBOb3JtYWxpc2F0aW9uCjUuIEFnZ3JlZ2F0aW9uCjYuIEFkZCBBbm5vdGF0aW9ucwo3LiBGZWF0dXJlcyBmaWx0ZXJpbmcKCk9uY2UgZG9uZSwgeW91ciBzY3JlZW4gc2hvdWxkIGxvb2sgYXMgZm9sbG93czoKCiFbRmlndXJlIDguIFRoZSBwcmVwcm9jZXNzaW5nIHdvcmtmbG93IHVwb24gY29uZmlndXJhdGlvbl0oLi9maWd1cmVzL3ByZXByb2Nlc3NpbmdfY29uZmlndXJhdGlvbi5wbmcpCgpZb3UgY2FuIG5vdyBjbGljayB0aGUgIkNvbmZpcm0gQ3VycmVudCBXb3JrZmxvdyIgYnV0dG9uLiBPbiB0aGUgbGVmdAptZW51LCB5b3Ugd2lsbCBzZWUgdGhhdCB0aGUgM3JkIGVudHJ5IG5vdyBtZW50aW9ucyAiMyBQcmUtcHJvY2Vzc2luZwooNyBTdGVwcykiLiBXZSB3aWxsIG5vdyBjb25maWd1cmUgYW5kIHJ1biBlYWNoIG9mIHRoZXNlIHN0ZXBzLgoKIyMjIFN0ZXAgMTogTG9nIHRyYW5zZm9ybWF0aW9uCgpGb3IgZXZlcnkgc3RlcCwgeW91IGFsd2F5cyBuZWVkIHRvIGxvYWQgdGhlIGRhdGEgZnJvbSB0aGUgcHJldmlvdXMKc3RlcCAoaW4gdGhpcyBjYXNlIHRoZSBkYXRhIGltcG9ydGF0aW9uKS4gQ2xpY2sgb24gdGhlICJMb2FkIGFzc2F5cwpmcm9tIHByZXZpb3VzIHN0ZXAiIGJ1dHRvbi4gT25jZSBsb2FkZWQsIHRoZSBkYXRhIGlzIGF1dG9tYXRpY2FsbHkKbG9nMi10cmFuc2Zvcm1lZCBieSBkZWZhdWx0LiBZb3Ugc2hvdWxkIG5vdCBjaGFuZ2UgdGhlIGRlZmF1bHRzLiBZb3UKd2lsbCBzZWUgdGhhdCB0aGUgaW50ZW5zaXR5IGRpc3RyaWJ1dGlvbiB3aXRoaW4gZWFjaCBzYW1wbGUgaXMKYXV0b21hdGljYWxseSBwaWN0dXJlZCwgYW5kIHlvdSBjYW4gcGxheSB3aXRoIHRoZSBwbG90IHNldHRpbmcgKGJvdHRvbQpyaWdodCkgYXMgeW91IHdpc2guIFlvdXIgc2NyZWVuIHNob3VsZCBiZSBzaW1pbGFyIHRvIHRoaXM6IAoKIVtGaWd1cmUgOS4gU3RlcDE6IExvZyB0cmFuc2Zvcm1hdGlvbl0oLi9maWd1cmVzL3N0ZXAxLnBuZykKCk9uY2UgeW91IGFyZSBkb25lLCBjbGljayB0aGUgIlNhdmUgdGhlIHByb2Nlc3NlZCBkYXRhIiBidXR0b24uIFRoaXMKd2lsbCBzdG9yZSB0aGUgY2hhbmdlcyBhbmQgbWFrZSB0aGUgZGF0YSBhdmFpbGFibGUgZm9yIHRoZSBuZXh0IHN0ZXAuCklmIHlvdSBmb3JnZXQgdG8gY2xpY2sgdGhpcyBidXR0b24sIGxvYWRpbmcgdGhlIG5leHQgc3RlcCB3aWxsIGxlYWQgdG8KYW4gZXJyb3IuCgojIyMgU3RlcCAyOiBhZGQgYW5ub3RhdGlvbnMKCkluIFN0ZXAgMywgd2Ugd2lsbCBmaWx0ZXIgb3V0IGZlYXR1cmVzIGJhc2VkIG9uIHRoZW4gbnVtYmVyIG9mIG1pc3NpbmcKdmFsdWVzLiBUaGlzIGluZm9ybWF0aW9uIGlzIG5vdCB5ZXQgYXZhaWxhYmxlIGluIG91ciBkYXRhIGZvcgpmaWx0ZXJpbmcsIHNvIHdlIHdpbGwgYWRkIHRoaXMgaW5mb3JtYXRpb24gYXMgYSBmZWF0dXJlIGFubm90YXRpb24uCgoxLiBNb3ZlIHRvIFN0ZXAgMiBpbiB0aGUgbGVmdCBtZW51LgoyLiBDbGljayB0aGUgYnV0dG9uIHRvIGxvYWQgdGhlIHByZXZpb3VzIHN0ZXAuIAozLiBTY3JvbGwgYXQgdGhlIGJvdHRvbSBhbiB0aWNrIHRoZSAiQWRkIG1pc3NpbmcgdmFsdWVzIG51bWJlcnMiIGJveAo0LiBDbGljayAicGVyZm9ybSBhbm5vdGF0aW9uIi4gWW91IHNob3VsZCBzZWUgYSBwcmV2aWV3IG9mIHRoZSBmZWF0dXJlCiAgIGFubm90YXRpb25zLiBZb3UgY2FuIGNoZWNrIHRoYXQgdGhlIGxhc3QgY29sdW1uIGlzICJuTkEiIHdoaWNoIAogICBwcm92aWRlcyB0aGUgbnVtYmVyIG9mIG1pc3NpbmcgdmFsdWVzLgo1LiBDbGljayB0aGUgYnV0dG9uIHRvIHNhdmUgdGhlIHByb2Nlc3NlZCBkYXRhLgoKIyMjIFN0ZXAgMzogcGVwdGlkZSBmaWx0ZXJpbmcKCkluIHRoaXMgc3RlcCwgd2Ugd2lsbCBhY3R1YWxseSByZW1vdmUgdW5kZXNpcmFibGUgcGVwdGlkZXMuIFdlIHdpbGwKdXNlIHRoZSBmb2xsb3dpbmcgY3JpdGVyaWE6CgotIFJlbW92ZSBwZXB0aWRlcyB0aGF0IGNvdWxkIG5vdCBiZSBtYXBwZWQgdG8gYSBwcm90ZWluLiBJbiB0aGUgcGVwdGlkZXMudHh0IGZpbGUgb2YgTWF4UXVhbnQgdGhlIHZhcmlhYmxlIFByb3RlaW5zIGNvbnNpc3RzIG9mIGFuIGVtcHR5IGNoYXJhY3RlcjogYFByb3RlaW5zICE9IGAgCi0gUmVtb3ZlIHJldmVyc2Ugc2VxdWVuY2VzIChkZWNveXMpLiBJbiB0aGUgcGVwdGlkZXMudHh0IGZpbGUgb2YgTWF4UXVhbnQgdGhlIHZhcmlhYmxlIFJldmVyc2UgaXMgYW4gZW1wdHkgY2hhcmFjdGVyIGZvciBmb3J3YXJkIHNlcXVlbmNlcyAodGFyZ2V0cykgYW5kIGFuICIrIiBjaGFyYWN0ZXIgZm9yIHJldmVyc2Ugc2VxdWVuY2VzOiBgUmV2ZXJzZSAhPSArYAotIFJlbW92ZSBjb250YW1pbmFudHMuIEluIHRoZSBwZXB0aWRlcy50eHQgZmlsZSBvZiBNYXhRdWFudCB0aGUgdmFyaWFibGUgIkNvbnRhbWluYW50cyIgb3IgIlBvdGVudGlhbC5jb250YW1pbmFudCIgYW4gZW1wdHkgY2hhcmFjdGVyIGZvciBub24tY29udGFtaW5hbnQgc2VxdWVuY2VzIGFuZCBhICIrIiBjaGFyYWN0ZXIgZm9yIGNvbnRhbWluYW50IHNlcXVlbmNlczogYFBvdGVudGlhbC5jb250YW1pbmFudCAhPSArYAotIFJlbW92ZSBwZXB0aWRlcyB0aGF0IHdlcmUgaWRlbnRpZmllZCBpbiBsZXNzIHRoYW4gdGhyZWUgc2FtcGxlLiBUaGVyZSBhcmUgNiBzYW1wbGVzIHNvIHRoZXJlIG51bWJlciBvZiBOQSdzIGhhcyB0byBiZSBzbWFsbGVyIG9yIGVxdWFsIHRvOiBgbk5BIDw9IDNgCgpUbyBwZXJmb3JtIHRoaXMgZmlsdGVyaW5nIGFuZCBjYXJyeSBvdXQgdGhlIHNlcCwgeW91IGNhbjoKCjEuIE1vdmUgdG8gU3RlcCAzIGluIHRoZSBsZWZ0IG1lbnUuCjIuIENsaWNrIHRoZSBidXR0b24gdG8gbG9hZCB0aGUgcHJldmlvdXMgc3RlcC4gCjMuIENsaWNrIDR4IHRoZSBibHVlICJBZGQgQm94IiBidXR0b24gdG8gYWRkIDQgZmlsdGVyaW5nIGJveGVzLgo0LiBGaWxsIGluIHRoZSBib3hlcyBhcyBzaG93biBpbiB0aGUgZmlndXJlIGJlbG93Lgo1LiBJbiB0aGUgIkZpbHRlcmluZyBTdW1tYXJ5IiB0aWxlLCBjbGljayB0aGUgIkFwcGx5IEZpbHRlcnMiIGJ1dHRvbi4KNi4gQ2xpY2sgdGhlIGJ1dHRvbiB0byBzYXZlIHRoZSBwcm9jZXNzZWQgZGF0YS4KCiFbRmlndXJlIDEwLiBTdGVwMzogRmVhdHVyZSBmaWx0ZXJpbmcuIE5vdGUgdGhlIFBDQSBwbG90cyBhcmUgY29sbGFwc2VkIGZvciBiZXR0ZXIgb3ZlcnZpZXcgb2YgdGhlIGJveGVzLl0oLi9maWd1cmVzL3N0ZXAzLnBuZykKCiMjIyBTdGVwIDQ6IE5vcm1hbGlzYXRpb24KCldlIG5vcm1hbGlzZSB0aGUgZGF0YSBieSBzdWJzdHJhY3RpbmcgdGhlIHNhbXBsZSBtZWRpYW4gZnJvbSBldmVyeQppbnRlbnNpdHkgZm9yIHBlcHRpZGUgJHAkICBpbiBhIHNhbXBsZSAkaSQ6CgokJHlfe2lwfV5cdGV4dHtub3JtfSA9IHlfe2lwfSAtIFxoYXR7XG11X2l9JCQKCndpdGggJFxoYXRcbXVfaSQgdGhlIG1lZGlhbiBpbnRlbnNpdHkgb3ZlciBhbGwgb2JzZXJ2ZWQgcGVwdGlkZXMgaW4Kc2FtcGxlICRpJC4KCjEuIE1vdmUgdG8gU3RlcCA0IGluIHRoZSBsZWZ0IG1lbnUuCjIuIENsaWNrIHRoZSBidXR0b24gdG8gbG9hZCB0aGUgcHJldmlvdXMgc3RlcC4KMy4gTWVkaWFuIG5vcm1hbGlzYXRpb24gaXMgY29uZmlndXJlZCBieSBkZWZhdWx0LCBzbyB5b3UgY2FuIHNpbXBseQogICBjbGljayB0aGUgYnV0dG9uIHRvIHNhdmUgdGhlIHByb2Nlc3NlZCBkYXRhLgoKIyMjIFN0ZXAgNTogU3VtbWFyaXNhdGlvbgoKSW4gdGhpcyBzdGVwLCB0aGUgcGVwdGlkZSBpbnRlbnNpdGllcyB3aWxsIGJlIHN1bW1hcmlzZWQgKGFrYQphZ2dyZWdhdGVkKSBpbnRvIHByb3RlaW4gaW50ZW5zaXRpZXMgdXNpbmcgcm9idXN0IHN1bW1hcmlzYXRpb24uIFlvdQpjYW4gcGVyZm9ybSB0aGlzIGFzIGZvbGxvd3M6CgoxLiBNb3ZlIHRvIFN0ZXAgNSBpbiB0aGUgbGVmdCBtZW51LgoyLiBDbGljayB0aGUgYnV0dG9uIHRvIGxvYWQgdGhlIHByZXZpb3VzIHN0ZXAuCjMuIEluIHRoZSBzZXR0aW5ncywgZmlyc3Qgc2VsZWN0ICJyb2J1c3RTdW1tYXJ5IiBpbiB0aGUgZmlyc3QgZmllbGQgYW5kCiAgIHRoZW4gc2VsZWN0ICJQcm90ZWlucyIgYXMgdGhlIHJvd0RhdGEgdmFyaWFibGUgZGVmaW5pbmcgdGhlIGZlYXR1cmVzIG9mCiAgIHRoZSBhc3NheSB0byBhZ2dyZWdhdGUuCjQuIENsaWNrIHRoZSBidXR0b24gdG8gc2F2ZSB0aGUgcHJvY2Vzc2VkIGRhdGEuCgojIyMgU3RlcCA2OiBhZGQgYW5ub3RhdGlvbnMKClJlcGVhdCBzdGVwIDIsIHRoaXMgd2lsbCBhbGxvdyB0byBwZXJmb3JtIGEgZmlsdGVyaW5nIG9uIG1pc3NpbmcsIGJ1dAphdCB0aGUgcHJvdGVpbi1sZXZlbCBpbnN0ZWFkIG9mIHBlcHRpZGUtbGV2ZWwuCgojIyMgU3RlcCA3OiBwcm90ZWluIGZpbHRlcmluZwoKSW4gdGhpcyBzdGVwLCB3ZSB3aWxsIHJlbW92ZSBwcm90ZWlucyB0aGF0IHdlcmUgaWRlbnRpZmllZCBpbiBsZXNzCnRoYW4gNCBzYW1wbGVzIHNvIHRoYXQgZm9yIG1vc3QgcHJvdGVpbnMgYXQgbGVhc3QgdHdvIHByb3RlaW4tbGV2ZWwgZXhwcmVzc2lvbiB2YWx1ZXMgYXJlIG9ic2VydmVkIGluIGVhY2ggdHJlYXRtZW50IGdyb3VwLiBTbyB3ZSB3aWxsIHRvbGVyYXRlIGF0IG1vc3QgNi00ID0gMiBtaXNzaW5nIHZhbHVlcyBhdCB0aGUgcHJvdGVpbi1sZXZlbC4gWW91IG1heSBoYXZlIGd1ZXNzZWQgaXQgYnkgbm93LCBidXQgeW91IGNhbiBwcm9jZWVkIAp3aXRoOgoKMS4gTW92ZSB0byBTdGVwIDcgaW4gdGhlIGxlZnQgbWVudS4KMi4gQ2xpY2sgdGhlIGJ1dHRvbiB0byBsb2FkIHRoZSBwcmV2aW91cyBzdGVwLiAKMy4gQ2xpY2sgMXggdGhlIGJsdWUgIkFkZCBCb3giIGJ1dHRvbiB0byBhZGQgYSBmaWx0ZXJpbmcgYm94Lgo0LiBGaWxsIGluIHRoZSBib3hlcyBzbyB0aGF0IHRoZSBmaWx0ZXIgYmVjb21lczogYG5OQSA8PSAyYC4KNS4gSW4gdGhlICJGaWx0ZXJpbmcgU3VtbWFyeSIgdGlsZSwgY2xpY2sgdGhlICJBcHBseSBGaWx0ZXJzIiBidXR0b24uCjYuIENsaWNrIHRoZSBidXR0b24gdG8gc2F2ZSB0aGUgcHJvY2Vzc2VkIGRhdGEuCgojIyMgU2F2ZSB5b3VyIHByb2dyZXNzCgpZb3Ugbm93IGhhdmUgY29tcGxldGVkIHRoZSBkYXRhIHByb2Nlc3NpbmcuIFlvdSBjYW4gbW92ZSB0byB0aGUKU3VtbWFyeSB0YWIgKGluIHRoZSBsZWZ0IG1lbnUpLiBUaGVyZSB5b3Ugd2lsbCBmaW5kIGFuIG92ZXJ2aWV3IG9mIHRoZQpkYXRhIGFuZCB0aGUgZGF0YSBwcm9jZXNzaW5nIHN0ZXBzLgoKIVtGaWd1cmUgMTEuIFN1bW1hcnkgb2YgdGhlIGRhdGEgcHJvY2Vzc2luZy5dKC4vZmlndXJlcy9wcm9jZXNzaW5nX3N1bW1hcnkucG5nKQoKR28gdG8gdGhlIGJvdHRvbSBvZiB0aGUgcGFnZSBhbmQgY2xpY2sgb24gdGhlICJEb3dubG9hZCBRRmVhdHVyZXMiCmJ1dHRvbi4gQSBwb3AgdXAgd2luZG93biB3aWxsIGFzayB5b3UgdG8gc2VsZWN0IGEgZGlyZWN0b3J5IHRvIHN0b3JlCnRoZSByZXN1bHRpbmcgZmlsZS4gQnkgZGVmYXVsdCB0aGUgZmlsZSBpcyBjYWxsZWQKYHFmZWF0dXJlc19vYmplY3QucmRzYCwgYnV0IGZlZWwgZnJlZSB0byByZW5hbWUgYXMgeW91IHdpc2ggYnV0IHlvdQpjYW5ub3QgY2hhbmdlIHRoZSBmaWxlIGV4dGVuc2lvbiAoYC5yZHNgKS4KCllvdSBjYW4gbm93IGNsb3NlIHRoZSBhcHBsaWNhdGlvbi4KCiMjIFN0YXRpc3RpY2FsIGFuYWx5c2lzCgojIyMgT3BlbiBgbXNxcm9iMmd1aWAgCgpSZXR1cm4gdG8geW91ciBSc3R1ZGlvIGFwcGxpY2F0aW9uIGFuZCBydW4gdGhlIGZvbGxvd2luZyBjb21tYW5kOgoKYGBge3IsIGV2YWw9RkFMU0V9Cm1zcXJvYjJndWk6OmxhdW5jaE1zcXJvYjJBcHAoKQpgYGAKCkEgbmV3IHdpbmRvdyB3aWxsIG9wZW4gd2l0aCB0aGUgc2hpbnlBcHAgZm9yIGRhdGEgbW9kZWxsaW5nCgohW0ZpZ3VyZSAxMi4gbXNxcm9iMmd1aSBTaGlueUFwcF0oLi9maWd1cmVzL21zcXJvYjJndWlfZnJlc2gucG5nKQoKIyMjIEltcG9ydCB0aGUgcHJvY2Vzc2VkIGRhdGEKCldlIHN0YXJ0IHRoZSBkYXRhIG1vZGVsbGluZyB3b3JrZmxvdyB3aXRoIGltcG9ydGluZyB0aGUgZGF0YSBwcm9jZXNzZWQKaW4gdGhlIHByZXZpb3VzIHNlY3Rpb246CgoxLiBDbGljayBvbiAiQnJvd3Nlci4uLiIgYW5kIGZldGNoIHRoZSBmaWxlIHlvdSBzYXZlZCB3aXRoIHRoZQogICBgUUZlYXR1cmVzR1VJYCBhcHAuCjIuIEluIHRoZSAiU2VsZWN0IEFzc2F5Iiwgc2VsZWN0IHRoZSBsYXN0IGl0ZW0sCiAgICJxdWFudHNfZmVhdHVyZXNfZmlsdGVyaW5nXzciLgoKVGhlIGFwcGxpY2F0aW9uIGxldCdzIHlvdSBleHBsb3JlIHRoZSBkYXRhIHRhYmxlcyBhbmQgcHJvdmlkZXMgYSBwbG90CndpdGggdGhlIGludGVuc2l0eSBkaXN0cmlidXRpb25zIGZvciBlYWNoIHNhbXBsZS4KCiFbRmlndXJlIDEzLiBtc3Fyb2IyZ3VpIGFwcGxpY2F0aW9uIHVwb24gZGF0YSBpbXBvcnQuXSguL2ZpZ3VyZXMvbXNxcm9iMl9pbXBvcnRlZC5wbmcpCgpXaGVuIHlvdSBzY3JvbGwgZG93biB0aGUgcGFnZSwgeW91IHdpbGwgZmluZCBhIGRyb3AgZG93biB0ZXh0IHNlbGVjdG9yCih1bmRlciB0aGUgdGFibGUpLiBDaGFuZ2UgInJ1biIgaW50byAidHJlYXRtZW50IiBhbmQgbG9vayBhdCB0aGUKcHJpbmNpcGFsIGNvbXBvbmVudCBhbmFseXNpcyAoUENBKSBwbG90LiBIb3cgd291bGQgeW91IGludGVycHJldCB0aGlzCmdyYXBoPwoKIVtGaWd1cmUgMTQuIFBDQSBwbG90IG9mIHRoZSBDUFRBQyBkYXRhIHNldC5dKC4vZmlndXJlcy9tc3Fyb2IyX3BjYS5wbmcpCgpUaGUgUENBIHBsb3QgaW5kaWNhdGVzIHRoYXQgd2UgY2FuIGV4cGVjdCBhIHRyZWF0bWVudCBlZmZlY3QgaW4gdGhlCmRhdGEgYXMgdGhlIHNhbXBsZSBiZWxvbmdpbmcgdG8gb25lIHRyZWF0bWVudCBjYW4gYmUgc2VwYXJhdGVkIGZyb20KdGhlIHNhbXBsZXMgYmVsb25naW5nIHRvIHRoZSBvdGhlciB0cmVhdG1lbnQuIFdlIGFsc28gc2VlIHRoYXQgdGhpcwpzZXBhcmF0aW9uIG1vc3RseSBvY2N1cnMgaW4gdGhlIHNlY29uZCBwcmluY2lwYWwgY29tcG9uZW4uIFRoZSBmaXJzdApjb21wb25lbnQsIHdoaWNoIGV4cGxhaW5zIG1vc3Qgb2YgdGhlIHZhcmlhdGlvbiwgZG9lcyBub3Qgc2VlbSB0byBiZWFyCmNvcnJlbGF0aW9uIGFzc29jaWF0ZWQgd2l0aCB0cmVhdG1lbnQuIFRoaXMgaXMgZXhwZWN0ZWQgYXMgd2Uga25vdwp0aGF0IG9ubHkgYSBmZXcgcHJvdGVpbiB3ZXJlIHNwaWtlZCBpbiwgc28gd2UgZG8gbm90IGV4cGVjdCBhbiBlZmZlY3QKb2YgdHJlYXRtZW50IGZvciBtb3N0IHByb3RlaW5zLiBXZSB3aWxsIG5vdyBkZWx2ZSBpbnRvIHRoZSBkYXRhCm1vZGVsbGluZyBhbmQgaW5mZXJlbmNlIHRoYXQgd2lsbCBzdGF0aXN0aWNhbGx5IHF1YW50aWZ5IHRoZXNlIGVmZmVjdHMKZm9yIGVhY2ggcHJvdGVpbiBpbmRpdmlkdWFsbHkuCgojIyMgRGF0YSBtb2RlbGxpbmcKCllvdSBjYW4gbm93IG1vdmUgdG8gdGhlIGRhdGEgbW9kZWxsaW5nIHRhYiBieSBjbGlja2luZyAiTW9kZWwiIGluIHRoZQptZW51IG9uIHRoZSBsZWZ0LiAKCldlIHdpbGwgbW9kZWwgdGhlIGRhdGEgd2l0aCBhIGRpZmZlcmVudCBncm91cCBtZWFuIGZvciB0aGUgdmFyaWFibGUKYHRyZWF0bWVudGAgd2l0aCB0d28gbGV2ZWxzOiBzcGlrZWluIHRyZWF0bWVudCBBIGFuZCBCLiBXZSBjYW4gc3BlY2lmeQp0aGlzIG1vZGVsIGJ5IGluc2VydGluZyBgfjErdHJlYXRtZW50YCBpbiB0aGUgIkRlc2lnbiBmb3JtdWxhIgp0ZXh0Ym94LiBOb3RlLCB0aGF0IGEgZm9ybXVsYSBhbHdheXMgc3RhcnRzIHdpdGggYSBzeW1ib2wgJ34nIChzZWUgdGhlCmRlc2NyaXB0aXZlIHBhcmFncmFwaCBpbiB0aGUgYXBwbGljYXRpb24pLgoKTm90ZSB0aGF0IGFzIHNvb24gYXMgd2UgZG8gdGhhdCwgdGhlIGRlc2lnbiBpcyB2aXN1YWxpc2VkLiAKCiFbRmlndXJlIDE1LiBtc3Fyb2IyIE1vZGVsIHRhYiB3aXRoIGRlc2lnbl0oLi9maWd1cmVzL21zcXJvYjJfbW9kZWwucG5nKQoKVGhpcyB2aXN1YWxpc2F0aW9uIHNob3dzIHRoZSBkaWZmZXJlbnQgZ3JvdXAgbWVhbnMgdGhhdCBhcmUgbW9kZWxsZWQuIAoKLSBUaGUgZ3JvdXAgbWVhbiBmb3IgdGhlIHRyZWF0bWVudCBBIGlzIG1vZGVsbGVkIHVzaW5nIHRoZSBwYXJhbWV0ZXIgCiAgYChJbnRlcmNlcHQpYC4gCi0gVGhlIGdyb3VwIG1lYW4gZm9yIHRoZSB0cmVhdG1lbnQgQiBpcyBtb2RlbGxlZCB1c2luZyBhIGxpbmVhciAKICBjb21iaW5hdGlvbiBvZiB0aGUgdHdvIG1vZGVsIHBhcmFtZXRlcnMgYChJbnRlcmNlcHQpICsgdHJlYXRtZW50QmAuCi0gVGhlIGF2ZXJhZ2UgZGlmZmVyZW5jZSBpbiBwcmVwcm9jZXNzZWQgcHJvdGVpbiBleHByZXNzaW9uIHZhbHVlIAogIGJldHdlZW4gYm90aCBjb25kaXRpb25zIGVxdWFscyBgdHJlYXRtZW50QmAuCgpSZW1lbWJlciB0aGF0IHdlIGxvZy10cmFuc2Zvcm1lZCB0aGUgaW50ZW5zaXRpZXM6CgokJCAKXGxvZ18yRkNfXHRleHR7Qn09XGxvZ18yIEIgLSBcbG9nXzIgQSA9IFxsb2dfMlxmcmFje0J9e0F9ID0gXHRleHR7dHJlYXRtZW50Qn0KJCQKCk5vdGUgdGhhdCBhIGxpbmVhciBjb21iaW5hdGlvbiBvZiBtb2RlbCBwYXJhbWV0ZXJzIGlzIGFsc28gcmVmZXJyZWQgdG8KYXMgYSBjb250cmFzdCBpbiBzdGF0aXN0aWNzLiBUaGlzIGNvbnRyYXN0IGhhcyB0aGUgaW50ZXJwcmV0YXRpb24gb2YgYQpsb2cyIGZvbGQgY2hhbmdlIGJldHdlZW4gY29uZGl0aW9uIEIgYW5kIGNvbmRpdGlvbiBBLiBQb3NpdGl2ZQplc3RpbWF0ZXMgZGVub3RlIHRoYXQgdGhlIGFidW5kYW5jZSBvZiB0aGUgcHJvdGVpbiBpcyBvbiBhdmVyYWdlCmhpZ2hlciBpbiBjb25kaXRpb24gQiwgbmVnYXRpdmUgZXN0aW1hdGVzIGRlbm90ZSB0aGF0IHRoZSBhYnVuZGFuY2UgaXMKb24gYXZlcmFnZSBoaWdoZXIgaW4gY29uZGl0aW9uIEEuIEFuIGVzdGltYXRlIGVxdWFsIHRvIDAgaW5kaWNhdGVzCnRoYXQgdGhlIGVzdGltYXRlZCBhYnVuZGFuY2VzIGFyZSBlcXVhbC4KCkEgbG9nMiBGQyA9IDEgaW5kaWNhdGVzIHRoYXQgdGhlIGF2ZXJhZ2UgYWJ1bmRhbmNlIGluIGNvbmRpdGlvbiBCIGlzIDIKeCBoaWdoZXIgdGhhbiB0aGUgYXZlcmFnZSBhYnVuZGFuY2UgaW4gY29uZGl0aW9uIEEsIGkuZS4gYW4gMiBmb2xkCnVwcmVndWxhdGlvbiBpbiBjb25kaXRpb24gQiBhcyBjb21wYXJlZCB0byBjb25kaXRpb24gQS4KCldlIG5vdyBoYXZlIHRvIGNsaWNrIHRoZSBgRml0IE1vZGVsIWAgYnV0dG9uIHRvIGZpdCB0aGUgbW9kZWxzIGZvcgplYWNoIHByb3RlaW4uIFdlIGFyZSBub3cgcmVhZHkgZm9yIGFzc2Vzc2luZyBkaWZmZXJlbnRpYWwgYWJ1bmRhbmNlIG9mCmVhY2ggcHJvdGVpbiB1c2luZyBmb3JtYWwgaHlwb3RoZXNpcyB0ZXN0aW5nLgoKIyMjIEluZmVyZW5jZQoKQ2xpY2sgb24gdGhlICJJbmZlcmVuY2UiIHRhYiBpbiB0aGUgbGVmdCBtZW51LgoKSW4gdGhlIHN0YXRpc3RpY2FsIGFuYWx5c2lzIHdlIHdpbGwgd2FudCB0byB0ZXN0IHRoZSBudWxsIGh5cG90aGVzaXMKdGhhdAoKJCQgSF8wOiBcbG9nXzIgQi1cbG9nXzIgNkEgPSAwICQkCgpBZ2FpbnN0IHRoZSBhbHRlcm5hdGl2ZSB0aGF0CgokJCBIXzE6IFxsb2dfMiBCIC0gXGxvZ18yIEEgXG5lcSAwICQkCgpXZSBjYW4gc3BlY2lmeSB0aGUgbnVsbCBoeXBvdGhlc2lzIGFzIGEgbGluZWFyIGNvbWJpbmF0aW9uIG9mIHRoZQptb2RlbCBwYXJhbWV0ZXJzLCBpLmUuIGB0cmVhdG1lbnRCID0gMGAuIFdlIHdpbGwgZmFsc2lmeSB0aGlzIG51bGwKaHlwb3RoZXNpcyBmb3IgZWFjaCBwcm90ZWluIHNlcGFyYXRlbHkgYmFzZWQgb24gdGhlIGxpbmVhciBtb2RlbC4gU28sCnVuZGVyIHRoZSBudWxsIGh5cG90aGVzaXMgd2UgcmVhc29uIHRoYXQgdGhlcmUgaXMgbm8gZWZmZWN0IG9mIHRoZQpzcGlrZS1pbiB0cmVhdG1lbnQgb24gdGhlIGFidW5kYW5jZSBvZiBhIHNwZWNpZmljIHByb3RlaW4uIFRoZSBwLXZhbHVlCm9mIHRoZSBzdGF0aXN0aWNhbCB0ZXN0IHRoYW4gaW5kaWNhdGVzIHRoZSBwcm9iYWJpbGl0eSB0byBvYnNlcnZlIGFuCmVmZmVjdCAoZm9sZCBjaGFuZ2UpLCB0aGF0IGlzIGFzIGV4dHJlbWUgb3IgbW9yZSBleHRyZW1lIChlcXVhbGx5IG9yCm1vcmUgdXAgb3IgZG93biByZWd1bGF0ZWQpIHRoYW4gd2hhdCBpcyBvYnNlcnZlZCBpbiB0aGUgc2FtcGxlLCBieQpyYW5kb20gY2hhbmdlICh3aGVuIHRoZSBudWxsIGh5cG90aGVzaXMgaXMgdHJ1ZSBhbmQgd2hlbiB0aGVyZSBpcyBpbgpyZWFsaXR5IG5vIGVmZmVjdCBvZiB0aGUgdHJlYXRtZW50KS4KClRvIHByb2NlZWQsIGZpbGwgaW4gdGhlIGZpZWxkICJOdWxsIGh5cG90aGVzaXMiIHdpdGggYHRyZWF0bWVudEIgPSAwYC4KQWZ0ZXIgMS0yIHNlY29uZCwgdGhlIHdpbmRvdyBpcyB1cGRhdGVkIAoKIVtGaWd1cmUgMTYuIG1zcXJvYjIgSW5mZXJlbmNlIHRhYiB3aXRoIGNvbnRyYXN0XSguL2ZpZ3VyZXMvbXNxcm9iMl9pbmZlcmVuY2UucG5nKQoKIyMjIyBWb2xjYW5vIHBsb3QKClRoZSBhcHBsaWNhdGlvbiBub3cgYWxzbyBwcm92aWRlcyBhIHZvbGNhbm8gcGxvdCBhcHBlYXJzIGdpdmluZyB5b3UgYQp2aWV3IG9uIHN0YXRpc3RpY2FsIHNpZ25pZmljYW5jZSBpbiB0aGUgeS1heGlzLCBpLmUuIHRoZSAkLVxsb2dfezEwfSQKdHJhbnNmb3JtZWQgcC12YWx1ZTogYSB2YWx1ZSBvZiAxIGVxdWFscyB0byBhIHAtdmFsdWUgb2YgMC4xLCBhIHZhbHVlCm9mIDIgZXF1YWxzIGEgcC12YWx1ZSBvZiAwLjAxLCBldGMgYWdhaW5zdCBiaW9sb2dpY2FsIHJlbGV2YW5jZS90aGUKZWZmZWN0IHNpemUgaW4gdGhlIHgtYXhpcywgd2hpY2ggaXMgdGhlICRcbG9nXzJGQyQuCgojIyMjIFJlc3VsdCB0YWJsZQoKWW91IGFsc28gZ2V0IGEgdGFibGUgd2l0aCBzZWxlY3RlZCBmZWF0dXJlLiBCeSBkZWZhdWx0IHRoZXNlIGFyZSBhbGwKcHJvdGVpbnMgdGhhdCBhcmUgc2lnbmlmaWNhbnQgd2l0aCB0aGUgc3BlY2lmaWVkICJTaWduaWZpY2FuY2UgbGV2ZWwiCmZpZWxkLiBZb3UgYWxzbyBvYnRhaW4gYSBib3hwbG90IG9mIHRoZSBsb2cyLWZvbGQgY2hhbmdlcyBmb3IgYWxsCnByb3RlaW5zIHRoYXQgYXJlIHNlbGVjdGVkIGluIHRoZSB0YWJsZS4KCk5vdGUgdGhhdCAxOSBwcm90ZWlucyBhcmUgcmVjb3ZlcmVkIGFzIHNpZ25pZmljYW50LiBBcyBleHBlY3RlZCBhbGwKdGhlc2UgcHJvdGVpbnMgYXJlIFVQUyBwcm90ZWlucyB3aGljaCBhcmUga25vd24gdG8gYmUgc3Bpa2VkIGluIHRoZQpzYW1wbGVzLiBJZiB5b3UgdW50aWNrIHRoZSBvcHRpb24gYG9ubHkgc2lnbmlmaWNhbnQgZmVhdHVyZXMgaW4KdGFibGVgLCBhbGwgcHJvdGVpbnMgYXJlIHNob3duIGluIHRoZSB0YWJsZS4KCllvdSBjYW4gaGlnaGxpZ2h0IHByb3RlaW5zIG9uIHRoZSB2b2xjYW5vIHBsb3QgYnkgc2VsZWN0aW5nIGxpbmVzIG9mCmludGVyZXN0IGluIHRoZSByZXN1bHQgdGFibGUuIFlvdSBjYW4gYWxzbyBzZWFyY2ggZm9yIHNwZWNpZmljCnByb3RlaW5zIGluIHRoZSBsaXN0IGJ5IHVzaW5nIHRoZSBzZWFyY2ggZmllbGQgYWJvdmUgdGhlIHRhYmxlLiBFLmcuCnR5cGUgYHVwc2AuCgojIyMjIERldGFpbCBwbG90cwoKSWYgeW91IHNlbGVjdCBvbmUgcHJvdGVpbiBpbiB0aGUgdGFibGUsIHlvdSBjYW4gYWxzbyBleHBsb3JlIHRoZQp1bmRlcmx5aW5nIG5vcm1hbGlzZWQgcGVwdGlkZSBpbnRlbnNpdGllcyBhbmQgcHJvdGVpbiBpbnRlbnNpdGllcyBvZgp0aGUgdW5kZXJseWluZyBkYXRhIGluIHRoZSAiRGV0YWlsUGxvdHMiOgoKIVtGaWd1cmUgMTcuIG1zcXJvYjIgZGV0YWlsIHBsb3RzLl0oLi9maWd1cmVzL21zcXJvYjJfZGV0YWlscGxvdHMucG5nKQoKWW91IGNhbiBmdXJ0aGVyIG1vZGlmeSB0aGUgcGxvdCBieSBjb2xvcmluZyB0aGUgZGF0YSBhY2NvcmRpbmcgdG8gYQpkZXNpZ24gdmFyaWFibGUgb3IgYnkgc3BsaXR0aW5nIHRoZSBkYXRhIGhvcml6b250YWxseSBvciB2ZXJ0aWNhbGx5CmFjY29yZGluZyB0byBkZXNpZ24gdmFyaWFibGVzLgoKIyMgUmVwb3J0IFRhYgoKQSByZXByb2R1Y2libGUgUm1hcmtkb3duIHNjcmlwdCBhbmQgaHRtbCByZXBvcnQgd2l0aCB0aGUgYW5hbHlzaXMgeW91CnBlcmZvcm1lZCB3aXRoIHRoZSBHVUkgY2FuIGJlIGRvd25sb2FkZWQgaW4gdGhlICJSZXBvcnQiIHRhYi4KCiFbRmlndXJlIDE4LiBtc3Fyb2IyIHJlcG9ydCB0YWJdKC4vZmlndXJlcy9tc3Fyb2IyX3JlcG9ydC5wbmcpCgoxLiBQcm92aWRlIGEgbmFtZSB0byB5b3VyIHByb2plY3QKMi4gWW91IGNhbiBzcGVjaWZ5IHRoZSBudW1iZXIgb2YgZGV0YWlsIHBsb3RzIHlvdSB3YW50IHRvIGdlbmVyYXRlIGluCiAgIHRoZSByZXBvcnQuIFRoZSBkZWZhdWx0IGlzIDEwLCB3aGljaCBpbmRpY2F0ZXMgdGhhdCBkZXRhaWwgcGxvdHMKICAgd2lsbCBiZSBjb25zdHJ1Y3RlZCBmb3IgdGhlIDEwIG1vc3Qgc2lnbmlmaWNhbnQgcHJvdGVpbiBpbiB5b3VyIHRvcAogICBsaXN0LiBOb3RlLCB0aGF0IHRoZSBudW1iZXIgb2YgZGV0YWlsIHBsb3RzIGNhbiBiZSBzbWFsbGVyIGlmIHRoZXJlCiAgIGFyZSBsZXNzIHRoYW4gMTAgcHJvdGVpbnMgc2lnbmlmaWNhbnQgYXQgdGhlIHNwZWNpZmllZCBGRFItbGV2ZWwuCjMuIENsaWNrIHRoZSAiR2VuZXJhdGUgcmVwb3J0IiBidXR0b24gYW5kIGEgcmVwb3J0IHdpbGwgYmUgY29tcGlsZWQuCgpUaGlzIHdpbGwgY3JlYXRlIGEgemlwIGZpbGUgdGhhdCBjb250YWluczoKCi0gYHFmZWF0dXJlc0ZpbGUucmRzYDogQSB0YWIgZGVsaW1pdGVkIGZpbGUgd2l0aCB0aGUgcmF3IGludGVuc2l0eSAKICBkYXRhLgotIGByZXBvcnQuUm1kYCA6IFIvbWFya2Rvd24gZmlsZSB3aXRoIHRoZSBjb2RlIGZvciB0aGUgcmVwb3J0LiBJZiB5b3UKICBvcGVuIHRoZSBmaWxlIGluIFJzdHVkaW8gYW5kIGlmIHlvdSBoaXQgdGhlIGtuaXQgYnV0dG9uIHRoZSByZXBvcnQKICB3aWxsIGJlIGNvbXBpbGVkIHRvIGh0bWwuCgpTbywgeW91ciBhbmFseXNpcyBpcyBzdG9yZWQgaW4gYSBmdWxseSByZXByb2R1Y2libGUgd2F5LiAKCiMjIFdyYXAgdXAgZXhlcmNpc2U6IGV2YWx1YXRlIHN1bW1hcmlzYXRpb24KCldlIGZ1cnRoZXIgZXhwbG9yZSB0aGUgZGlmZmVyZW5jZSBiZXR3ZWVuIHN1bW1hcmlzYXRpb24gbWV0aG9kcy4gV2UKZmlyc3QgYXNzZXNzIHRoZSBxdWFsaXR5IG9mIHRoZSBmb2xkIGNoYW5nZSBlc3RpbWF0ZXMgZm9yIHRoZSByb2J1c3QKc3VtbWFyaXNhdGlvbi4gCgpHbyBiYWNrIHRvIHRoZSAiSW5mZXJlbmNlIiB0YWIuIFdlIHdpbGwgbWFrZSB1c2Ugb2YgdGhlIGJveHBsb3QgYXQgdGhlCmJvdHRvbSBvZiB0aGUgcXVhbnRpZmljYXRpb24gdGFiLgoKMS4gVW50aWNrIHRoZSBvcHRpb24gYG9ubHkgc2lnbmlmaWNhbnQgZmVhdHVyZXMgaW4gdGFibGVgIHRvIHNob3cgYWxsCiAgIHByb3RlaW5zIGluIHRoZSB0YWJsZS4gVGhlIGJveHBsb3QgYmVsb3cgdGhlIHRhYmxlIHZpc3VhbGlzZXMgdGhlCiAgIGxvZzIgZm9sZCBjaGFuZ2UgKEZDKSBlc3RpbWF0ZXMgZm9yIGFsbCBwcm90ZWlucyBpbiB0aGUgdGFibGUuCjIuIFdlIGNhbiBub3cgZmlsdGVyIHRoZSB1cHMgcHJvdGVpbnMgYnkgdHlwaW5nICJ1cHMiIGluIHRoZSBzZWFyY2gKICAgZmllbGQgYWJvdmUgdGhlIHRhYmxlLiBOb3cgYWxsIHllYXN0IHByb3RlaW5zIGFyZSByZW1vdmVkIGZyb20gdGhlCiAgIHJlc3VsdHMgdGFibGUgYW5kIGEgYm94cGxvdCBvZiB0aGUgdXBzIHByb3RlaW4gbG9nMiBGQ3Mgd2lsbCBiZQogICBtYWRlLgoKIVtGaWd1cmUgMTkuIG1zcXJvYjIgSW5mZXJlbmNlIHRhYiB3aXRoIEZvbGQgQ2hhbmdlIEJveHBsb3QgZm9yIGFsbCBVUFMgcHJvdGVpbnNdKC4vZmlndXJlcy9tc3Fyb2IyX3Vwcy5wbmcpCgpUcnkgYW5zd2VyaW5nIHRoZSBmb2xsb3dpbmcgcXVlc3Rpb25zOgoKLSBXZSBrbm93IHRoZSByZWFsIEZDIGZvciB0aGUgc3Bpa2UgaW4gcHJvdGVpbnMgKHNlZQogIGRlc2NyaXB0aW9uIG9mIHRoZSBkYXRhIDIuMikuIE5vdGUsIHRoYXQgdGhlIGJveHBsb3QgaXMgc2hvd2luZyB0aGUKICBsb2cyIEZDLiBXaGF0IGRvIHlvdSBvYnNlcnZlPwotIE5vdyBzZWxlY3QgYWxsIHllYXN0IHByb3RlaW5zLiBXaGF0IGlzIHRoZSByZWFsIGZvbGQgY2hhbmdlCiAgYW5kIHdoYXQgZG8geW91IG9ic2VydmU/Ci0gQ2xvc2UgYW5kIHJlb3BlbiB0aGUgYWhpbnkgQXBwIGFuZCByZXBlYXQgdGhlIGNvbXBsZXRlIGFuYWx5c2lzLCBidXQgdGhpcyB0aW1lIGFwcGx5IHRoZSBtZWRpYW4gc3VtbWFyaXNhdGlvbiBtZXRob2QgaW4gU3RlcCA1IGluIHRoZSBkYXRhIHByZXByb2Nlc3NpbmcuIFdoYXQgZG8KICB5b3Ugb2JzZXJ2ZSwgaG93IGRvZXMgdGhhdCBjb21wYXJlIHRvIHRoZSByb2J1c3Qgc3VtbWFyaXNhdGlvbiBhbmQKICB0cnkgdG8gZXhwbGFpbiB0aGlzPwoKIyMgRmluYWwgcmVtYXJrCgpOb3RlLCB0aGF0IHRoZSBzaGlueSBhcHBzIGFyZSBpbnRlcmZhY2VzIHRvIHRoZSBzdGF0aXN0aWNhbApwcm9ncmFtbWluZyBzb2Z0d2FyZSBSLgoKVGhlIGFuYWx5c2lzIGNhbiBhbHNvIGJlIGNvbmR1Y3RlZCB1c2luZyBzY3JpcHRzLCB3aGljaCBnaXZlcyB5b3UgbXVjaAptb3JlIGZ1bmN0aW9uYWxpdHkgYW5kIHRoZSBhYmlsaXR5IHRvIHN0cmVhbWxpbmUsIGF1dG9tYXRlIGFuZApkb2N1bWVudCB5b3VyIGFuYWx5c2VzIGluIGEgcmVwcm9kdWNpYmxlIHdheS4K